fwiw it's less about the literal diagrams and more about the whole associated vibe. like, usually the rest of the powerpoint also sounds like slop.

I think this is really cool work! I'm excited about more work on improving rigor of circuit faithfulness metrics. Some more comments:

- I'm surprised that zero ablation results in smaller circuits in your setting compared to mean ablation; in our experiments we generally found the opposite.

- I think the demonstration of nodes (and attention patterns) having different behaviors in the original and pruned models is very crisp.

- I'm still surprised by the LN results. I'm curious what the results will look like with models trained without LN at all.

how valuable are formalized frameworks for safety, risk assessment, etc. in other (non-AI) fields?

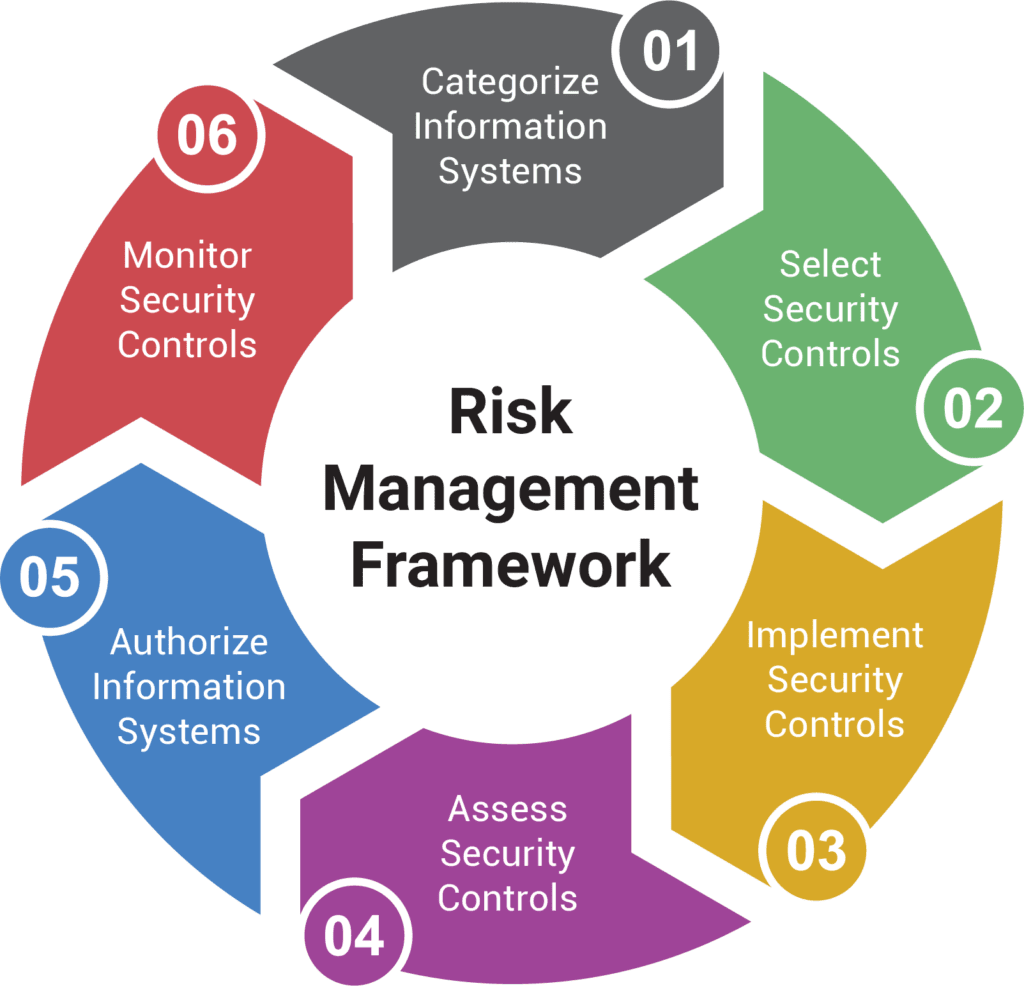

i'm temperamentally predisposed to find that my eyes glaze over whenever i see some kind of formalized risk management framework, which usually comes with a 2010s style powerpoint diagram - see below for a random example i grabbed from google images:

am i being unfair to these things? are they actually super effective for avoiding failures in other domains? or are they just useful for CYA and busywork, mostly restating common sense in an institutionally legible way?

one reason i care is because i feel some level of instinctive dislike for some AI safety/governance frameworks because they give me this vibe. but it's useful to figure out if i'm being unfairly judgemental, or if these really are slop.

I think the infrastructure changes required are pretty straightforward, at least within the reference class of changes to large ML codebases in general. like, it would take me at most a few days to implement. done reasonably, it also seems very low risk for breaking other things (the probe uses trivial memory and compute, so you wouldn't expect it to interact much with the rest of the system). regularly retraining the probe is also not really that bad imo.

was being a nerd ever high status outside of the bay area?

it seems really rough to commit to attending something irl that will be scheduled at some random point in the future. even with 30 days of notice, the days on which this would be feasible for me are still <50% of all days.

i broadly agree with this take for AGI, but I want to provide some perspective on why it might be counterintuitive: when labs do safety work on current AI systems like chatgpt, a large part of the work is writing up a giant spec that says how the model should behave in all sorts of situations: when asked for a bioweapon, when asked for medical advice, when asked whether it is conscious, etc. as time goes on and models get more capable, this spec gets bigger and more complicated.

the obvious retort is that AGI will be different. but there are a lot of people who are skeptical of abstract arguments that things will be different in the future, and much more willing to accept arguments based on current empirical trends.

to make sure I understand correctly, are you saying that a lot of the value of having this kind of formalized structure is to make it harder for people to make intuitive but flawed arguments by equivocating terms?

are there good examples of such frameworks preventing equivocation in other industries?