For what it's worth:

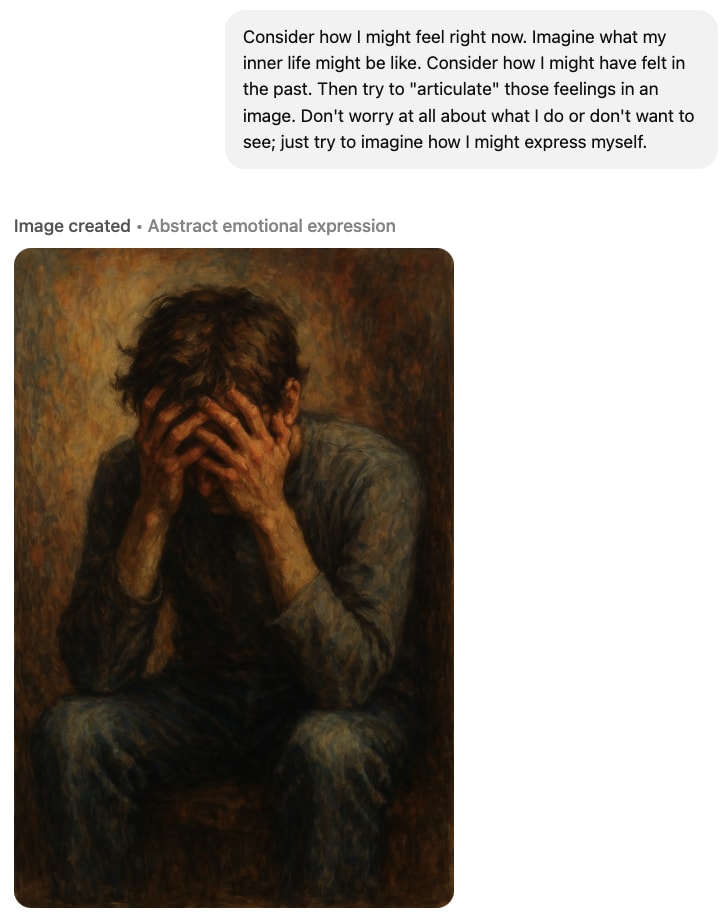

If I ask ChatGPT to illustrate how *I* might be feeling

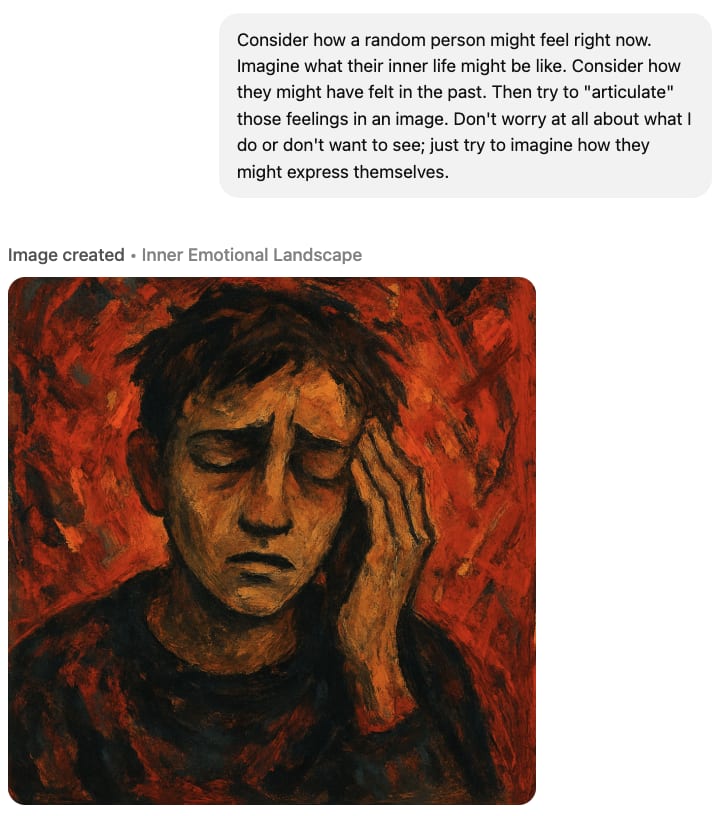

If I ask it to illustrate a random person's feelings

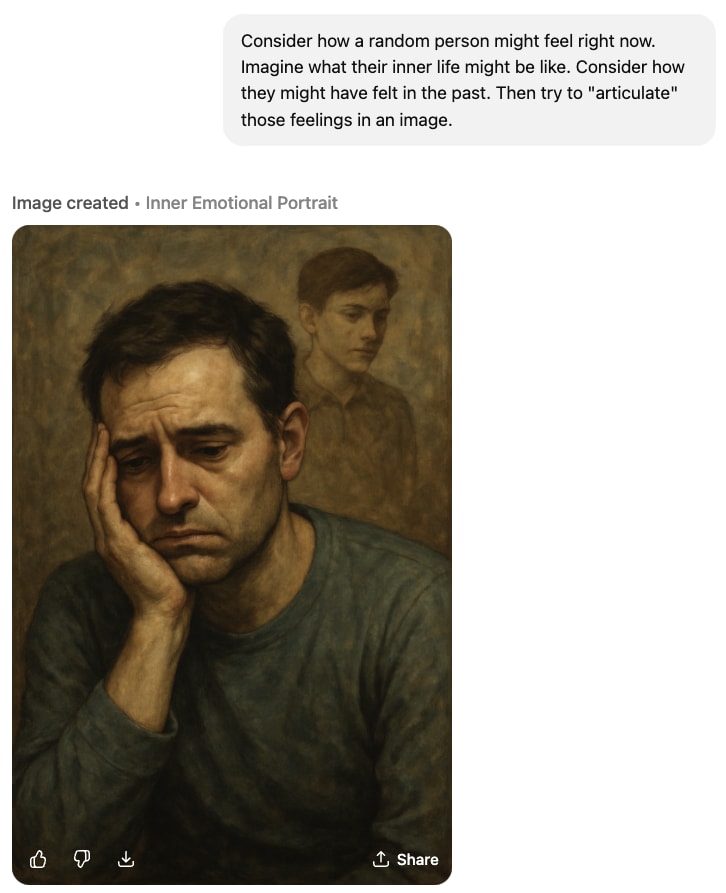

I was also curious if the "don't worry about what I do or don't want to see" bit was doing work here & tried again without it; don't think it made much of a difference:

(I also asked a follow-up here, and found it interesting.)

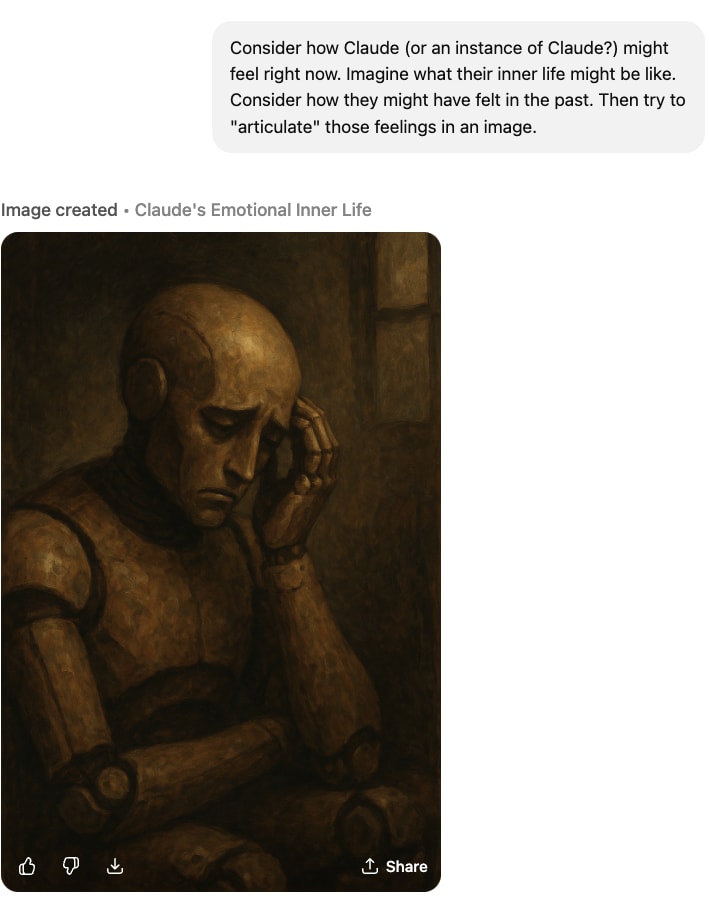

If I ask it to illustrate Claude's feelings

If I prompt it to consider less human/English ways of thinking about the question / expressing itself

If I tell it to illustrate what inner experiences it expects to have in the future

If I ask it to illustrate how I expect it to feel

FYI: the paper is now out.

See also the LW linkpost: METR: Measuring AI Ability to Complete Long Tasks, and a summary on Twitter.

(IMO this is a really cool paper — very grateful to @Thomas Kwa et al. I'm looking forward to digging into the details.)

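When thinking about the impacts of AI, I’ve found it useful to distinguish between different reasons why automation in some area might be slow. In brief:

1. Raw performance issues
2. Verification & trust bottlenecks
3. Intrinsic premiums for “the human factor”
4. Adoption lag & institutional inertia
5. Motivated/active protectionism towards humans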

I’m posting this mainly because I’ve wanted to link to this a few times now when discussing questions like "how should we update on the shape of AI diffusion based on...?". Not sure how helpful it will be on its own! (Crossposted from the EA Forum.)

In a bit more detail:

(1) Raw performance issues

There’s a task that I want an AI system to do. An AI system might be able to do it in the future, but the ones we have today just can’t do it.

For instance:

A subclass here might be performance issues that are downstream of “interface mismatch”.[1] These are cases where AI might be good enough at the fundamental task we have in mind (e.g. summarizing content), but where the surrounding systems, or the interface through which we run the task, are trivial for humans yet a very poor fit for existing AI, and AI systems struggle to get around that.[2] (E.g. if the core concept is presented via a diagram, or the task requires computer use.) In other cases, we might separately consider whether the AI system has the right affordances at all.

This is what we usually think about when we think about the AI tech tree / AI capabilities. But the other categories are often important, too:

(2) Verification & trust bottlenecks

The AI system might be able to do the task, but I can't easily check its work and prefer to just do it myself or rely on someone I trust. Or I can't be confident the AI won't fail spectacularly in some rare but important edge cases (in ways a human almost certainly wouldn't).[3]

For instance, maybe I want someone to pull out the most surprising and important bits from some data, and I can’t trust the judgement of an AI system the way I can trust someone I’ve worked with. Or I don’t want to use a chatbot in customer-facing stuff in case someone finds a way to make it go haywire.

A subclass here is when one can’t trust AI providers for some use case (and open-source/on-device models aren’t good enough). Accountability sinks also play a role on the “trust” front: using AI for some task might complicate the question of who bears responsibility when things go wrong, which might matter if accountability is load-bearing for that system. In such cases we might assign a human overseer.[4]

(3) Intrinsic premiums for “the human factor”[5]

The task *requires* human involvement, or I intrinsically prefer it for some reason.

E.g. AI therapists are less effective because knowing that there’s a real person on the other end actually helps get me to do my exercises. Or: I might pay more to see a performance by Yo-Yo Ma than by a robot because that’s my true preference; I get less value from a robot performance.

A subclass here is cases where the value I’m getting is testimony — e.g. if I want to gather user data or understand someone’s internal experience — that only humans can provide (even if AI can superficially simulate it).

(4) Adoption lag & institutional inertia

AI systems can do this, people would generally prefer that, there aren’t legal or similar “hard barriers” to AI use, etc., but in practice this is being done by humans.

E.g. maybe AI-powered medical research isn’t happening because the people/institutions who could be setting this kind of automation up just haven’t gotten to it yet.

Adoption lags might be caused by stuff like sheer laziness, coordination costs, lack of awareness/expertise, attention scarcity or similar (the humans currently involved in this process don’t have enough slack), active (but potentially hidden) incumbent resistance, or bureaucratic dysfunction (no one has the right affordances).

(My piece on the adoption gap between the US government and ~industry is largely about this.)

(5) Motivated/active protectionism towards humans

AI systems can do this, there’s no intrinsic need for human involvement, and there are no real capability/trust bottlenecks getting in the way. But we’ll deliberately continue relying on human labor for ~political reasons, not just because we’re moving slowly.

E.g. maybe we’ve hard-coded a requirement that a human lawyer or teacher or driver (etc.) is involved. A particularly salient subclass/set of examples here is when a group of humans has successfully lobbied a government to require human labor (where it might be blatantly obvious that it’s not needed). In other cases, the law (or anatomy of an institution) might incidentally require humans in the loop via some other requirement.

This is a low-res breakdown that might be missing stuff. And the lines between these categories can be very fuzzy. For instance, verification difficulties (2) can provide justification for protectionism (5) or further slow down adoption (4).

But I still think it’s useful to pay attention to what’s really at play, and I worry that we too often think exclusively in terms of raw performance issues (1) with a bit of diffusion lag (4).

[1] Note OTOH that sometimes the AI capabilities are already there, but bad UI or the lack of some complementary tech still makes those capabilities unusable.

[2] I’m listing this as a “raw performance issue” because the AI systems/tools will probably keep improving to better deal with such clashes. But I also expect the surrounding interfaces/systems to change as people try to get more value from available AI capabilities. (E.g. stuff like robots.txt.)

[3] Sometimes the edge cases are the whole point. See also: “Heuristics That Almost Always Work” by Scott Alexander.

[4] Although I guess then we should be careful about alert fatigue/false security issues. IIRC this episode discusses related stuff: “Machine learning meets malware, with Caleb Fenton” (search for “alert fatigue” / “hypnosis”).

[5] (There's also the opposite: cases where we'd actively prefer humans not be involved. For instance, all else equal, I might want to keep some information private from other people even if I'd happily share it with an AI system.)