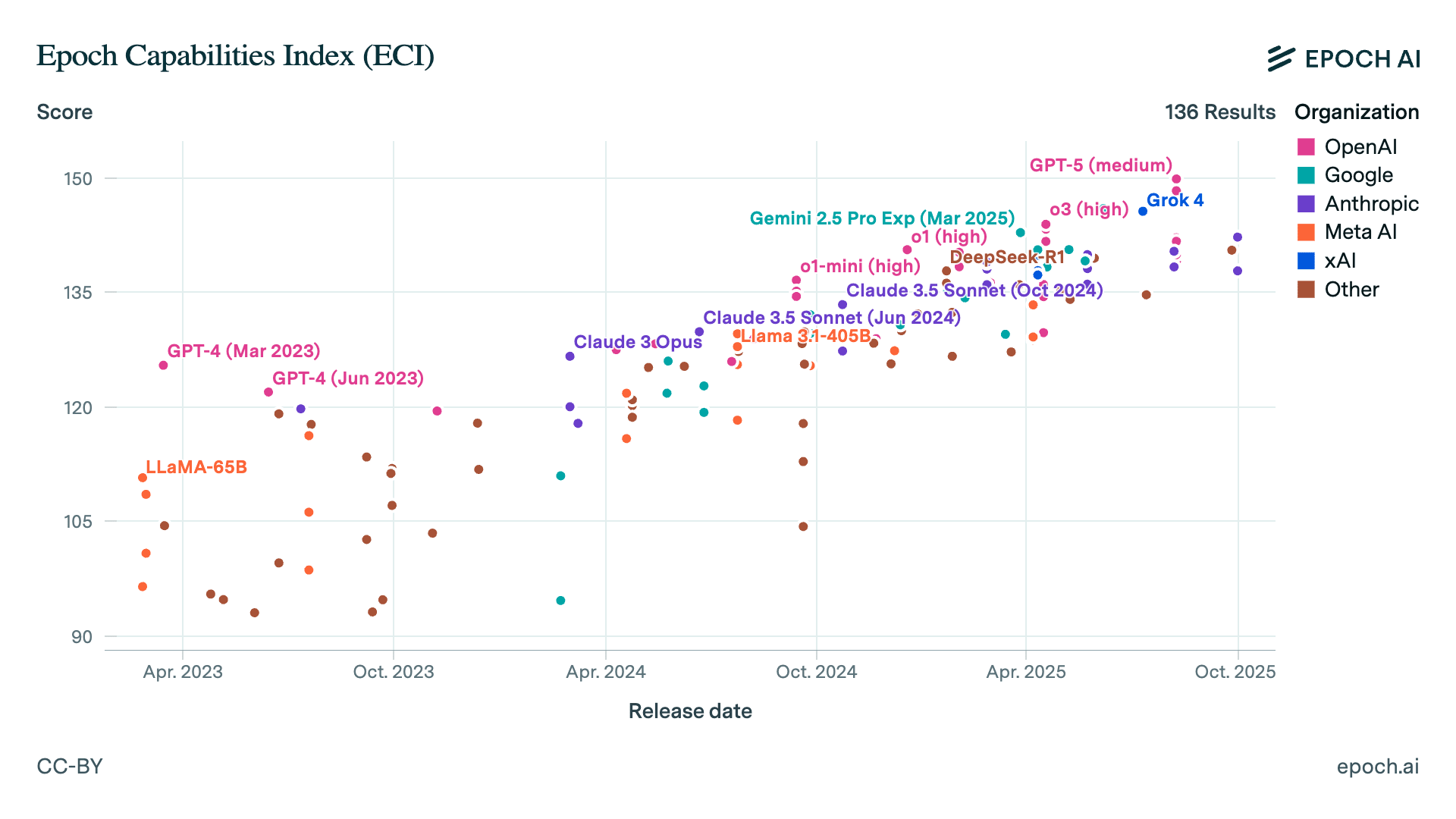

Introducing the Epoch Capabilities Index (ECI)

We at Epoch AI have recently released a new composite AI capability index called the Epoch Capabilities Index (ECI), based on nearly 40 underlying benchmarks. Some key features:

* Saturation-proof: ECI "stitches" benchmarks together to enable comparisons even as individual benchmarks become saturated.
* Global comparisons: Models can be compared,...
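Here is a minimal sketch of how stitching can keep comparisons meaningful after a benchmark saturates. It assumes a logistic item-response-style model, which is an illustrative assumption and not necessarily ECI's exact specification. Each benchmark has a hypothetical difficulty and slope on a shared latent scale, and a model with latent capability `C` is expected to score `sigmoid(slope * (C - difficulty))`. The benchmark names, parameters, and helper functions are all hypothetical. In a real index the parameters would be fitted jointly from models that overlap across benchmarks. Here they are simply assumed known.

```python
import math

# Hypothetical benchmarks: name -> (difficulty, slope) on a shared latent scale.
# ASSUMPTION: in a real index these would be fitted jointly from models that
# were evaluated on overlapping sets of benchmarks; here they are given.
BENCHMARKS: dict[str, tuple[float, float]] = {
    "easy_bench": (0.0, 1.0),
    "hard_bench": (3.0, 1.0),
}


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def logit(p: float) -> float:
    return math.log(p / (1.0 - p))


def predicted_score(capability: float, bench: str) -> float:
    """Expected score on `bench` under the assumed logistic model."""
    difficulty, slope = BENCHMARKS[bench]
    return sigmoid(slope * (capability - difficulty))


def estimate_capability(scores: dict[str, float]) -> float:
    """Map each unsaturated score back onto the latent scale and average them.

    A score of exactly 0 or 1 only says the model is far below or above the
    benchmark, not by how much, so such scores are skipped.
    """
    estimates = [
        BENCHMARKS[bench][0] + logit(s) / BENCHMARKS[bench][1]
        for bench, s in scores.items()
        if 0.0 < s < 1.0
    ]
    if not estimates:
        raise ValueError("no unsaturated scores to estimate from")
    return sum(estimates) / len(estimates)


if __name__ == "__main__":
    # Two hypothetical models with latent capabilities 3 and 5.
    for true_capability in (3.0, 5.0):
        scores = {b: predicted_score(true_capability, b) for b in BENCHMARKS}
        print(true_capability, scores, estimate_capability(scores))
    # A model that saturates easy_bench is still placed via hard_bench.
    print(estimate_capability({"easy_bench": 1.0, "hard_bench": 0.5}))
```

Under these assumed parameters, the two hypothetical models score about 0.953 and 0.993 on `easy_bench`. That is a gap of only about 0.04 near the ceiling. On `hard_bench` they score 0.5 and about 0.881, which still separates them clearly. Both benchmarks map back to the same latent values, so a model evaluated only on the harder benchmark lands on the same scale as one evaluated only on the easier one.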

Currently, we've chosen to scale the index so that Claude 3.5 Sonnet scores 130 and GPT-5 (medium) scores 150. As we add new benchmarks, our rough plan is to try to keep those two values fixed. We're also planning to add a way for users to define their own subset of benchmarks, in case you disagree with our choices. That should let you see how things would look under various hypothetical "rebasings".
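Pinning two anchor models to fixed values can be sketched as an affine (scale plus offset) rescaling of whatever raw capability scores the underlying fit produces. This is an assumption for illustration. The text above only says which two models sit at 130 and 150, not how the mapping is built. The class name `AffineRescale` and every raw score below are hypothetical and are not Epoch's fitted values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AffineRescale:
    """ASSUMED mapping from a raw latent capability to an index value:
    index = scale * raw + offset."""

    scale: float
    offset: float

    @classmethod
    def from_anchors(
        cls, raw_a: float, index_a: float, raw_b: float, index_b: float
    ) -> "AffineRescale":
        """Choose scale and offset so two anchor models land on fixed index values."""
        if raw_a == raw_b:
            raise ValueError("anchor models must have different raw scores")
        scale = (index_b - index_a) / (raw_b - raw_a)
        offset = index_a - scale * raw_a
        return cls(scale, offset)

    def __call__(self, raw: float) -> float:
        return self.scale * raw + self.offset


if __name__ == "__main__":
    # Hypothetical raw scores for Claude 3.5 Sonnet (1.0) and GPT-5 (medium) (2.0).
    fit_v1 = AffineRescale.from_anchors(1.0, 130.0, 2.0, 150.0)
    print(fit_v1(1.0), fit_v1(2.0), fit_v1(1.5))

    # After adding benchmarks the raw fit may shift (hypothetical new raw scores);
    # re-anchoring keeps the same two models at 130 and 150.
    fit_v2 = AffineRescale.from_anchors(0.5, 130.0, 1.5, 150.0)
    print(fit_v2(0.5), fit_v2(1.5))
```

Because the map is affine, it preserves the ordering of models and the ratios between capability gaps. In this sketch, a hypothetical model halfway between the anchors on the raw scale lands at 140. A user-defined benchmark subset would, under the same assumption, just produce a different raw fit that is then re-anchored the same way.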

To be clear, that's not why we didn't include older models. There is...