I'd be interested in anyone's thoughts on when to use this vs e.g., METR's time horizon. The latter is of course more coding-focused than this general-purpose compilation, but that might be a feature not a bug for our purposes (predicting takeoff).

Here's one framing: getting a higher ECI score requires making progress on (multiple) benchmarks that other models find difficult. Making progress on METR instead involves being more consistently successful at longer coding tasks.

So ECI tracks general capabilities on a "difficulty-weighted" scale, and seems better suited to understanding the pace of progress in general, but it's also an abstract number. There's currently no mapping like "ECI of X == AGI", or a human ECI baseline. On the other hand, METR's benchmark has a nice concrete interpretation, but is more narrow.

We're working on mapping ECI to more interpretable metrics (in fact, METR Time Horizons is one candidate), as well as allowing users to choose a subset of underlying benchmarks if they would prefer to weight ECI more heavily towards particular skills like coding.

Also note that we don't currently include METR's benchmarks as inputs to ECI, but we may add them in future iterations.

What is uniquely interesting/valuable about METR time horizons is that the score is meaningful and interpretable. Can do software tasks that would take an expert 2h with 50% success probability is very specific. Has the score y on benchmark x is only valuable for comparisons, it does not tell you what's going to happen when the models reach score z.

As I mentioned elsewhere, I'm interested in the question of how you plan to re-base the index over time.

The index excludes models from before 2023, which is understandable, since they couldn't use benchmark released after that date, which are now the critical ones. Still, it seems like a mistake, since I don't have any indication of the adaptability of the method for the future when current metrics are saturated. The obvious way to do this seems (to me) to be by including earlier benchmarks that are now saturated so that the time series can be extended backwards. And I understand that this data may be harder to collect, but as noted, it seems important to show future adaptability.

I'm interested in the question of how you plan to re-base the index over time.

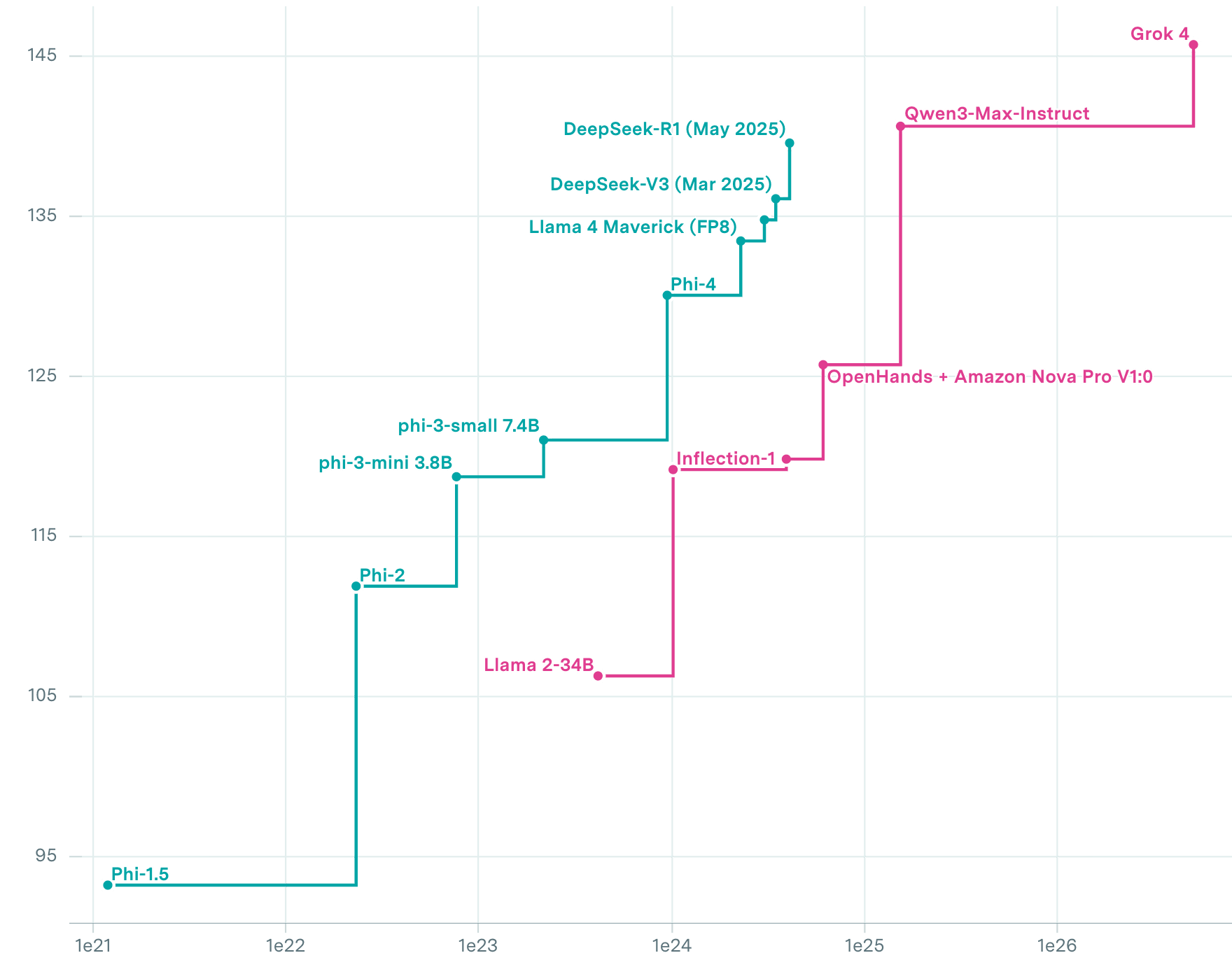

Currently, we've chosen to scale things such that Claude 3.5 Sonnet gets 130 and GPT-5 (medium) gets 150. As we add new benchmarks, the rough plan is to try to maintain that. We're also planning on adding some way for users to define their own subset of benchmarks, in case you disagree with our choices. That should let you see how things would look under various hypothetical "rebasings".

The index excludes models from before 2023, which is understandable, since they couldn't use benchmark released after that date.

To be clear, that's not why we didn't include older models. There is no technical problem with including older models, we just have sparse data on models prior to 2023. We chose to leave them out for now since the noisy estimates could be misleading, but we're trying to collect more data so that we can extend things back further.

The obvious way to do this seems (to me) to be by including earlier benchmarks that are now saturated so that the time series can be extended backwards.

We do! Currently we include many old benchmarks like GSM8k, HellaSwag, MMLU, WinoGrande, etc. There is a list of all benchmarks on the ECI methodology page, if you scroll down into the methodology section. In our initial release we haven't visualized benchmark difficulty scores, but we're planning on making those public and showing some analysis soon.

I tried to do something like that 1-2 years ago, where I modelled benchmarks as having normally distributed difficulties. But there was little data and an afternoon of hacking didn't really lead to anything particularly interesting.

The ECI suggests that the best open-weight models train on ~1 OOM less compute than the best closed weight ones. Wonder what to make of this if at all.

I would guess that this is mainly due to there being much more limited FLOP data for the closed models (especially for recent models), and for closed models focusing much less on small training FLOP models (eg <1e25 FLOP)

Another consideration is that we tend to prioritize evaluations on frontier models, so coverage for smaller models is spottier.

We at Epoch AI have recently released a new composite AI capability index called the Epoch Capabilities Index (ECI), based on nearly 40 underlying benchmarks.

Some key features...

ECI will allow us to track trends in capabilities over longer spans of time, potentially revealing changes in the pace of progress. It will also improve other analyses that would otherwise depend on a single benchmark for comparison.

You can find more details about our methodology and how to interpret ECI here. We want this to be useful for others' research; comments and questions are highly valuable to us!

This release is an independent Epoch AI product, but it builds on research done with support from Google DeepMind. We'll be releasing a full paper with more analysis soon, so keep an eye out!