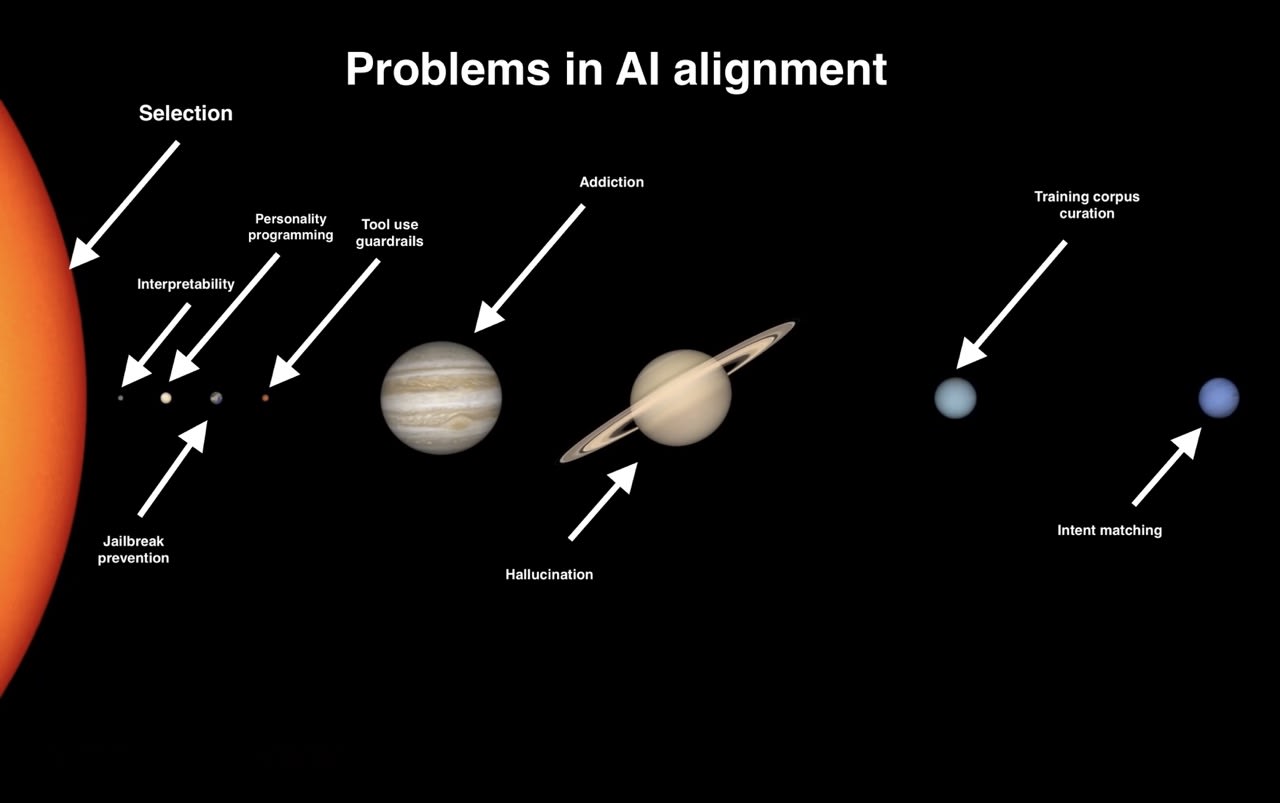

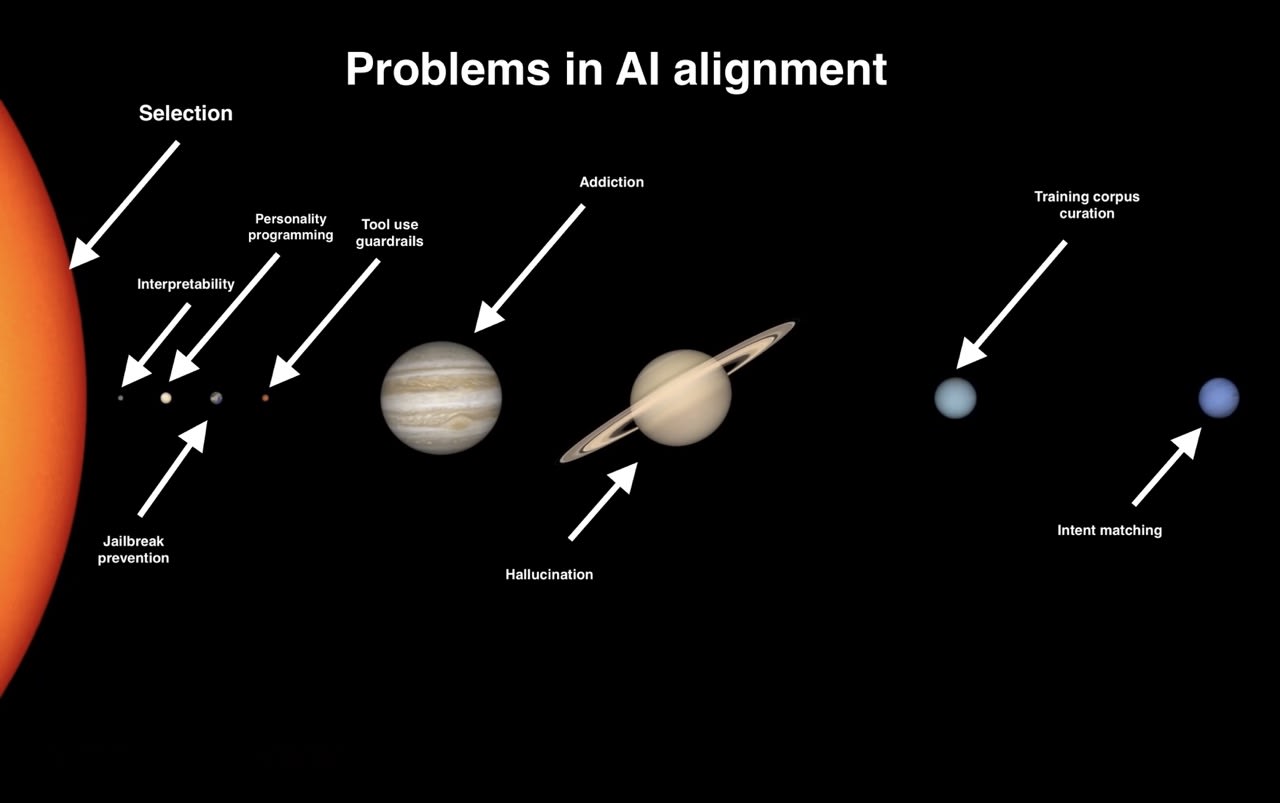

After trying too hard for too to make sense about what bothers me with the AI alignment conversation, I have settled, in true Millenial fashion, on a meme:

Explanation:

The Wikipedia article on AI Alignment defines it as follows:

> In the field of artificial intelligence (AI), alignment aims to steer AI systems toward a person’s or group’s intended goals, preferences, or ethical principles.

One could observe that we would also like to steer the development of other things, such as automobile transportation, social media, pharmaceuticals, or school curricula, “toward a person’s or group’s intended goals, preferences, or ethical principles.”

Why isn’t there a “pharmaceutical alignment” or a “school curriculum alignment” Wikipedia page?

I think that the answer is...

That is a speculative prediction. And definitely not how most people (not least within the AI alignment community) talk about alignment.

Haven’t read the book (and thanks for the link!), but are they even arguing for more alignment research? Or just for a general slowdown/pause of development?