Logit Prisms: Decomposing Transformer Outputs for Mechanistic Interpretability

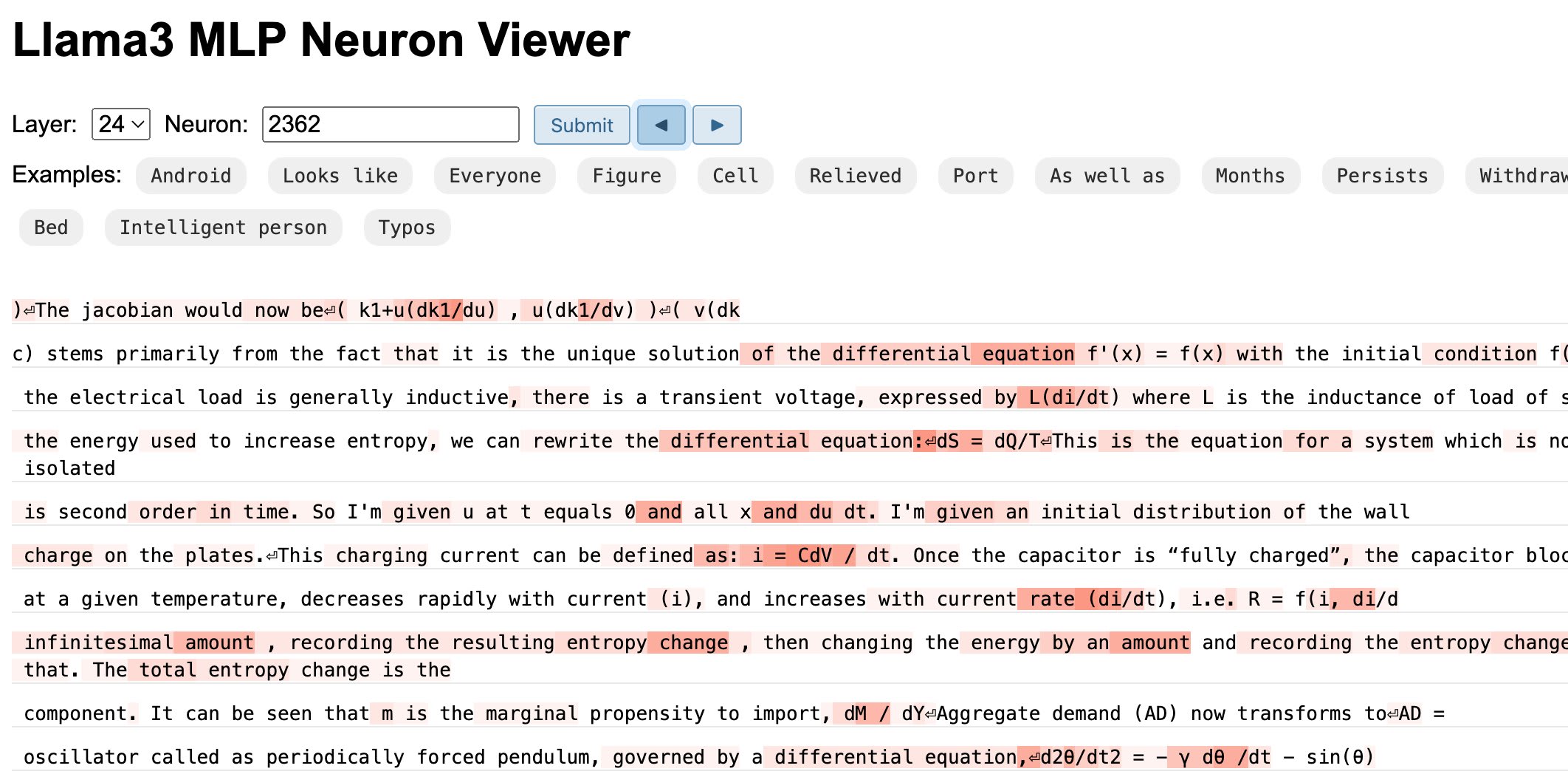

ABSTRACT: We introduce a straightforward yet effective method to break down transformer outputs into individual components. By treating the model’s non-linear activations as constants, we can decompose the output in a linear fashion, expressing it as a sum of contributions. These contributions can be easily calculated using linear projections. We...
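To make the idea in the abstract concrete, here is a minimal NumPy sketch of one way to read it. This is not the author's code. It assumes a toy residual stream made of three hypothetical components (`embed`, `attn`, `mlp`) followed by a final layer norm and an unembedding matrix, all filled with random values. The layer-norm scale stands in for the non-linearity: once it is computed on the full forward pass and then treated as a constant, the logits become a plain sum of per-component linear projections.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, vocab = 8, 5
eps = 1e-5

# Hypothetical residual-stream components (names and values are illustrative).
components = {
    "embed": rng.normal(size=d_model),
    "attn": rng.normal(size=d_model),
    "mlp": rng.normal(size=d_model),
}
gamma = rng.normal(size=d_model)         # layer-norm scale (assumed)
beta = rng.normal(size=d_model)          # layer-norm shift (assumed)
W_U = rng.normal(size=(d_model, vocab))  # unembedding matrix (assumed)


def layer_norm(x):
    """Standard layer norm over the feature dimension."""
    centered = x - x.mean()
    return centered / np.sqrt(centered.var() + eps) * gamma + beta


# Ordinary (non-linear) forward pass: sum the components, normalize, unembed.
resid = sum(components.values())
logits = layer_norm(resid) @ W_U

# Freeze the non-linear part: the 1/std factor from the real forward pass.
frozen_scale = 1.0 / np.sqrt(resid.var() + eps)


def contribution(c):
    """Linear projection of one component, with the scale held constant."""
    return ((c - c.mean()) * frozen_scale * gamma) @ W_U


contribs = {name: contribution(c) for name, c in components.items()}
contribs["ln_bias"] = beta @ W_U  # the shift term contributes independently

# The per-component terms add back up to the original logits.
reconstructed = sum(contribs.values())
```

Mean-centering is already linear, so only the standard deviation needs to be frozen here. In a real transformer the same trick would presumably also have to cover attention patterns and MLP activations, which this toy example leaves out.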

Thank you for the upvote! My main frustration with the logit lens and the tuned lens is that these methods are kind of ad hoc and do not reflect component contributions in a mathematically sound way. We should be able to rewrite the output as a sum of individual terms, I told myself.

For the record, I made no assumption about whether MLP neurons are monosemantic or polysemantic, which is why I did not mention SAEs.