Visual demonstration of the Optimizer's curse

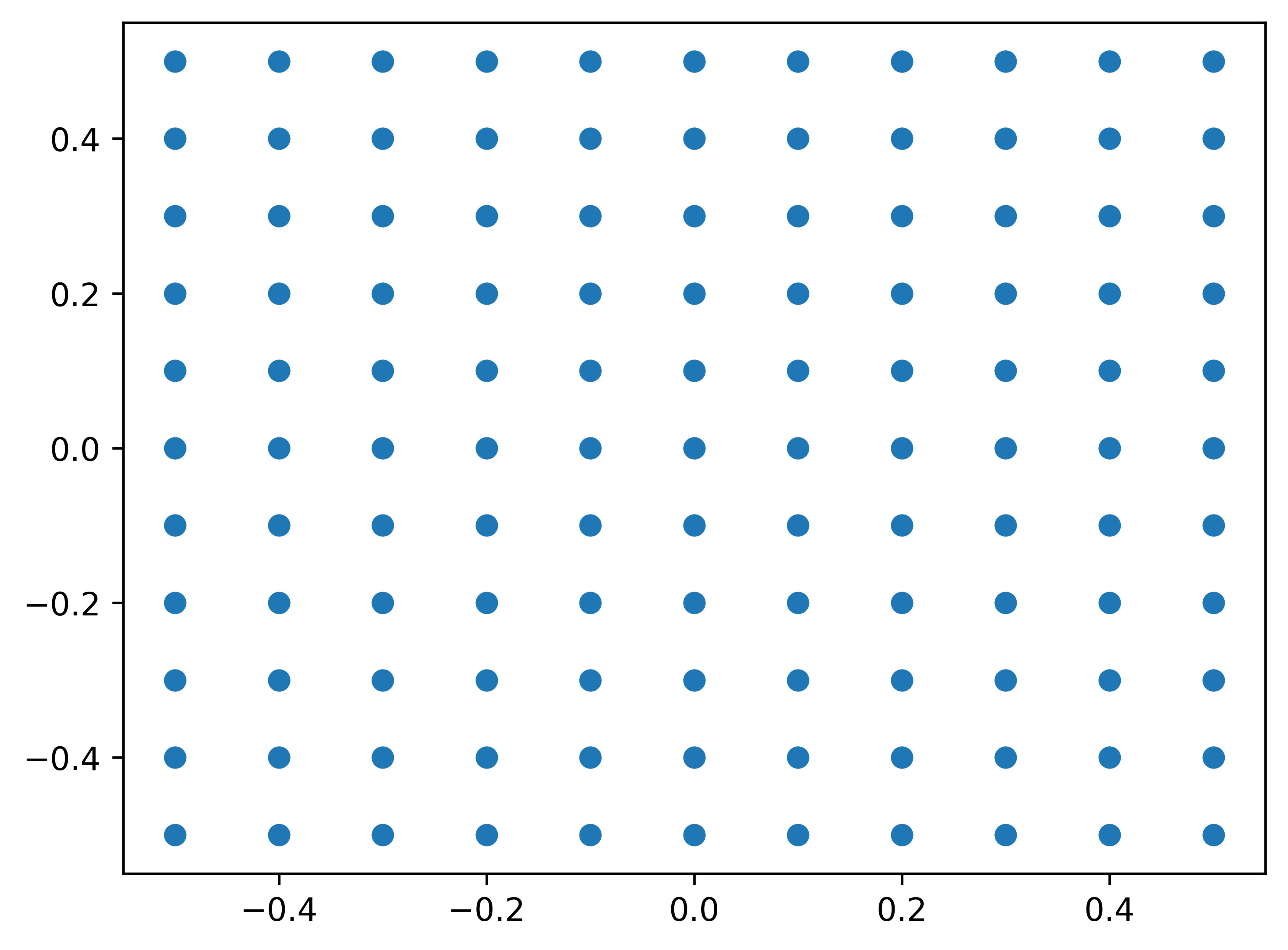

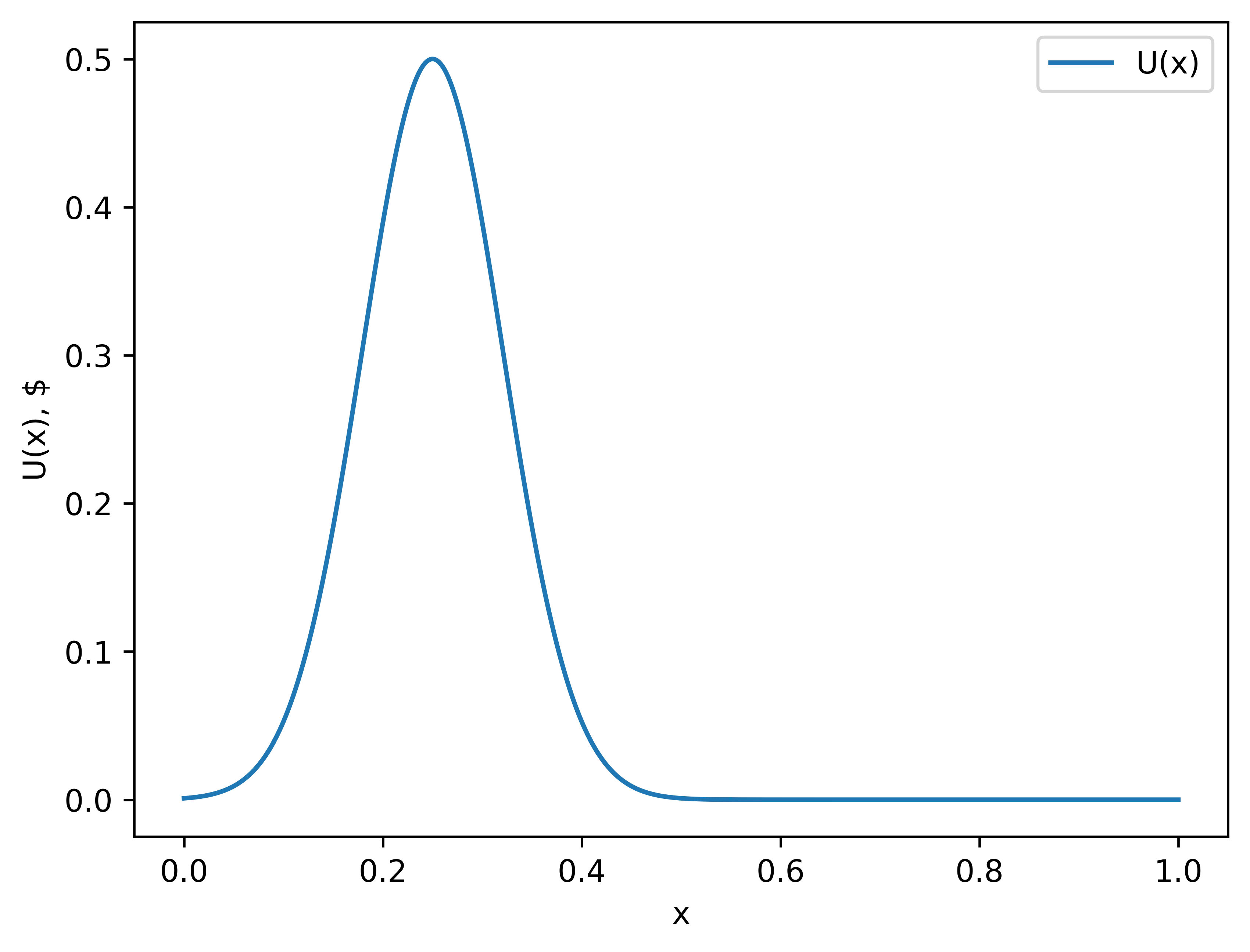

Epistemic status: I am currently taking this course, and after reading this post on Goodhart's curse, I wanted to create some visuals to better understand the described effect.

Chapter 1: Optimization

Veronica is a stock trader. Her job is to buy stocks and then sell them at a specific time during the month. Her objective is to maximize profit. Here is the graph of how much money she will make if she sells at a given time:

This can be called Veronica’s utility function $U(x)$: if she sells at time $x$, she makes $U(x)$ dollars.[1]

But she does not have access to this function. She only has access to a giant supercomputer, which works like this: she presses the button, and the computer chooses a random moment $x$ in the month and calculates $U(x)$. Running this computer takes a lot of time, so she can only observe a handful of points. After that, she can analyze this data to decide when to sell.

Her current algorithm is as follows:

> Algorithm 1
>
> 1. Run the computer $N$ times, generating $N$ pairs of the form $(x_i, U(x_i))$.
> 2. Locate the maximum using argmax: $x_{\max} = \arg\max_{x_i \in \{x_1, \dots, x_N\}} U(x_i)$.
> 3. Sell stocks at time $x_{\max}$ and receive $U(x_{\max})$ dollars.

Here is what one such scenario might look like:

The number $N$ of observed points (that is, the number of times Veronica pressed the button) will be referred to as 'optimization power'. The higher this number, the closer (on average) her proxy maxima will be to the actual maximum.

Here are 1,000 such experiments, each with an optimization power of 5. The bar chart shows the probability density of the proxy maxima chosen by Algorithm 1.[2]

We can see that the points cluster around the true maximum ($x = 0.25$). The “average value” is the average of $U$ over the proxy maxima Veronica selects, i.e. the expected amount of money she makes with this algorithm. It is noticeably smaller than the actual maximum value she can achieve.

With the lowest optimiz
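Algorithm 1 and the repeated-experiment setup can be sketched as a short Python simulation. This is a minimal sketch under several assumptions. The post does not give the actual shape of $U$, so `utility` below is a hypothetical curve on $[0, 1]$ that peaks at $x = 0.25$. The supercomputer is modeled as a uniform random draw of $x$. The names `utility`, `algorithm_1`, and `run_experiments` are illustrative and are not the author's code.

```python
from __future__ import annotations

import math
import random


def utility(x: float) -> float:
    """Hypothetical stand-in for Veronica's U(x) on [0, 1], peaked at x = 0.25.

    The post's real curve is not given; this Gaussian-shaped bump is an assumption.
    """
    return 100.0 * math.exp(-((x - 0.25) / 0.15) ** 2)


def algorithm_1(u, n: int, rng: random.Random) -> tuple[float, float]:
    """Algorithm 1: observe n random points and sell at the best one seen."""
    # Step 1: "press the button" n times; each press draws a uniform x (assumption)
    # and reports the exact value U(x).
    samples = [(x, u(x)) for x in (rng.random() for _ in range(n))]
    # Step 2: argmax over the observed points only (the proxy maximum).
    x_max, u_max = max(samples, key=lambda pair: pair[1])
    # Step 3: selling at x_max yields U(x_max) dollars.
    return x_max, u_max


def run_experiments(u, n: int, trials: int, seed: int = 0) -> tuple[list[float], float]:
    """Repeat Algorithm 1 `trials` times with optimization power n.

    Returns the chosen proxy maxima and the average payoff U(x_max) across trials.
    """
    rng = random.Random(seed)
    results = [algorithm_1(u, n, rng) for _ in range(trials)]
    proxy_maxima = [x for x, _ in results]
    average_value = sum(v for _, v in results) / trials
    return proxy_maxima, average_value


if __name__ == "__main__":
    true_max = utility(0.25)
    for n in (1, 5, 50):
        _, avg = run_experiments(utility, n=n, trials=1000)
        print(f"N={n:>3}: average value {avg:.1f} vs. true maximum {true_max:.1f}")
```

A histogram of `proxy_maxima` corresponds to the bar chart of proxy maxima. With this hypothetical $U$, the printed average value stays below the true maximum and moves toward it as $N$ grows. The exact numbers depend on the assumed curve and seed.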

It's also worth posting unoriginal thoughts

(yes, this thought isn’t original either)

Reasons: