Some critiques of reasoning models like o1 (by OpenAI) and R1 (by DeepSeek).

OpenAI admits that it trained o1 on domains with easy verification but hopes reasoners generalize to all domains. Whether or not they generalize beyond their RL training is a trillion-dollar question. Right off the bat, I’ll tell you my take:

o1-style reasoners do not meaningfully generalize beyond their training.

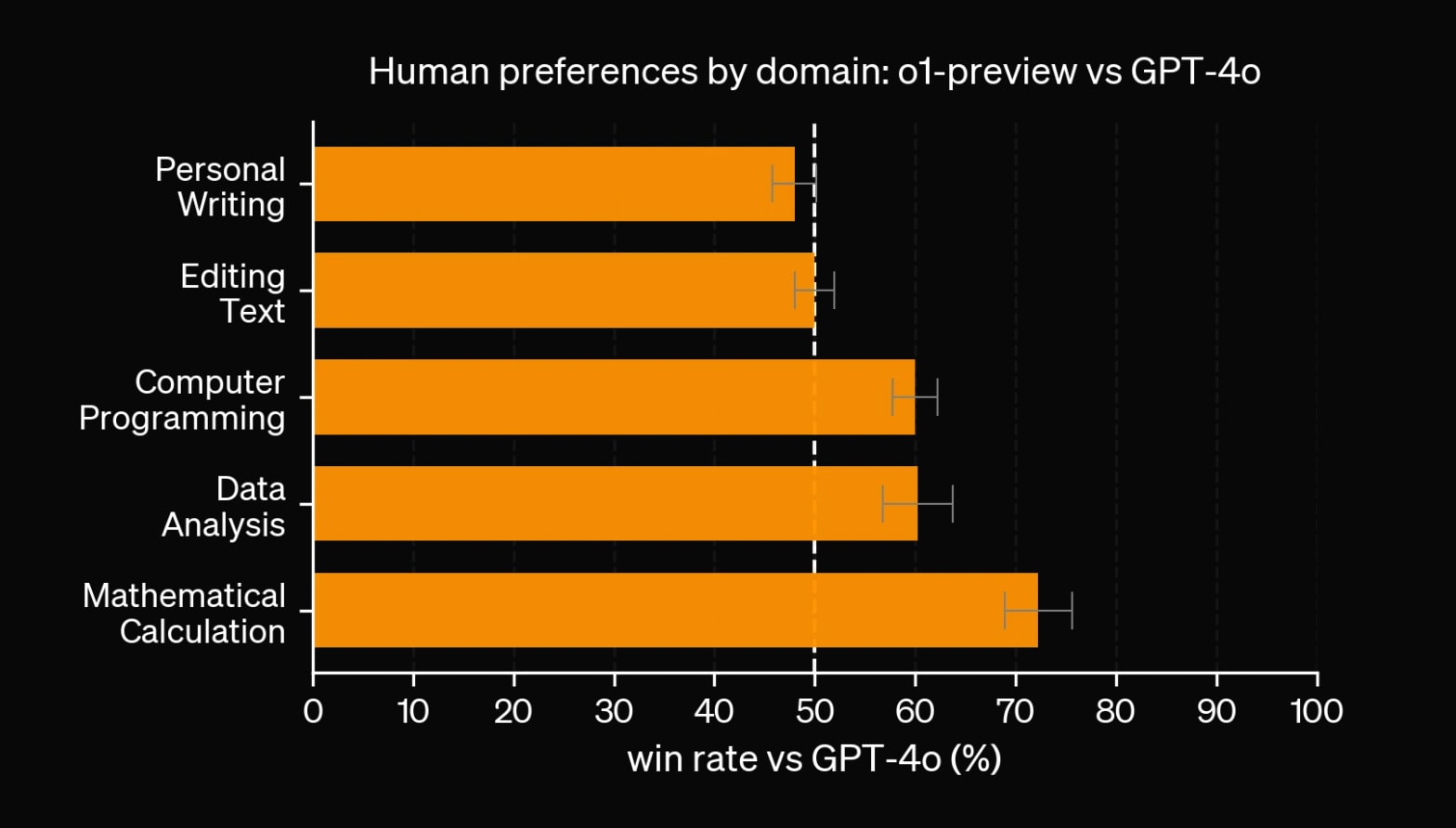

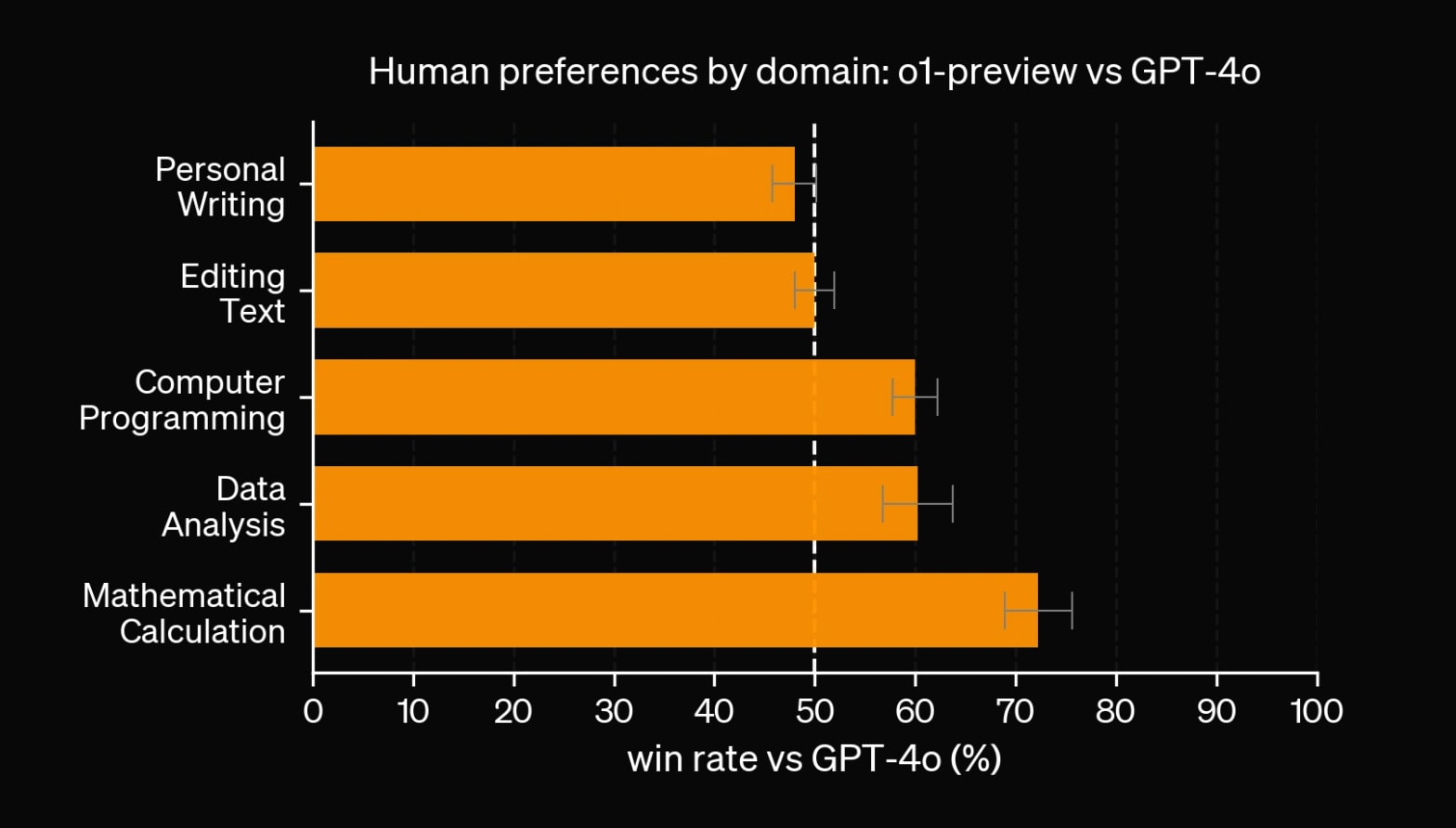

A straightforward way to check how reasoners perform on domains without easy verification is to look at benchmarks. On math and coding, OpenAI's o1 models do exceptionally well. On everything else, the answer is less clear.

Results that jump out:

- o1-preview does worse on personal writing than GPT-4o and no better on editing text, despite costing 6× more.
- OpenAI didn't release scores for o1-mini, …


Have you experimented with ComfyUI? It's image generation for "power users": many more knobs to turn to control output generation. There's actually a lot of room for steering during the diffusion/generation process, but it's hard to expose that in the same intuitive, general way as a language prompt. Comfy feels more like learning Adobe or Figma.