Reasoning Long Jump: Why we shouldn’t rely on CoT monitoring for interpretability

In Part One, we explore how models sometimes generate a chain of thought to rationalise a preconceived answer, rather than using the chain of thought to produce the answer. In Part Two, we discuss the implications of this finding for frontier models and their reasoning efficiency. TL;DR: Reasoning models are...

First of all, thanks for linking Ryan Greenblatt's posts! These are super interesting - I hadn't read them before, and they're very relevant to my project.

I like your suggestion of measuring the time horizon at which the model still succeeds after chunks of the CoT are removed, and of applying this to more open-ended tasks. It would be interesting to compare how that time horizon changes when different sentences are removed from the CoT.
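To make the sentence-removal idea concrete, here is a rough sketch of how such a comparison might be set up. Everything in it is an assumption for illustration: `split_sentences`, `ablate_sentence`, `ablation_success` and `toy_answer_fn` are hypothetical names, the sentence splitter is deliberately naive, and `toy_answer_fn` is a stand-in for a real call that would ask a model to answer given the shortened CoT.

```python
import re
from typing import Callable, List


def split_sentences(cot: str) -> List[str]:
    # Naive splitter (assumption): break on whitespace that follows ., ! or ?
    return [s for s in re.split(r"(?<=[.!?])\s+", cot.strip()) if s]


def ablate_sentence(sentences: List[str], index: int) -> str:
    # Rebuild the CoT with the sentence at `index` removed.
    return " ".join(s for i, s in enumerate(sentences) if i != index)


def ablation_success(
    cot: str, answer_fn: Callable[[str], str], correct: str
) -> List[bool]:
    # For each sentence, remove it and record whether the answer is still correct.
    sentences = split_sentences(cot)
    return [
        answer_fn(ablate_sentence(sentences, i)) == correct
        for i in range(len(sentences))
    ]


def toy_answer_fn(shortened_cot: str) -> str:
    # Hypothetical stand-in for a model call: it only "knows" the answer
    # if the key computation step survived the ablation.
    return "4" if "2 + 2 = 4" in shortened_cot else "unknown"


cot = "We need to add two numbers. 2 + 2 = 4. So the answer is 4."
results = ablation_success(cot, toy_answer_fn, correct="4")
print(results)
```

With a real model in place of `toy_answer_fn`, the same per-sentence success flags could be collected across tasks of different lengths, which would give one way of comparing how much each sentence matters at each time horizon.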

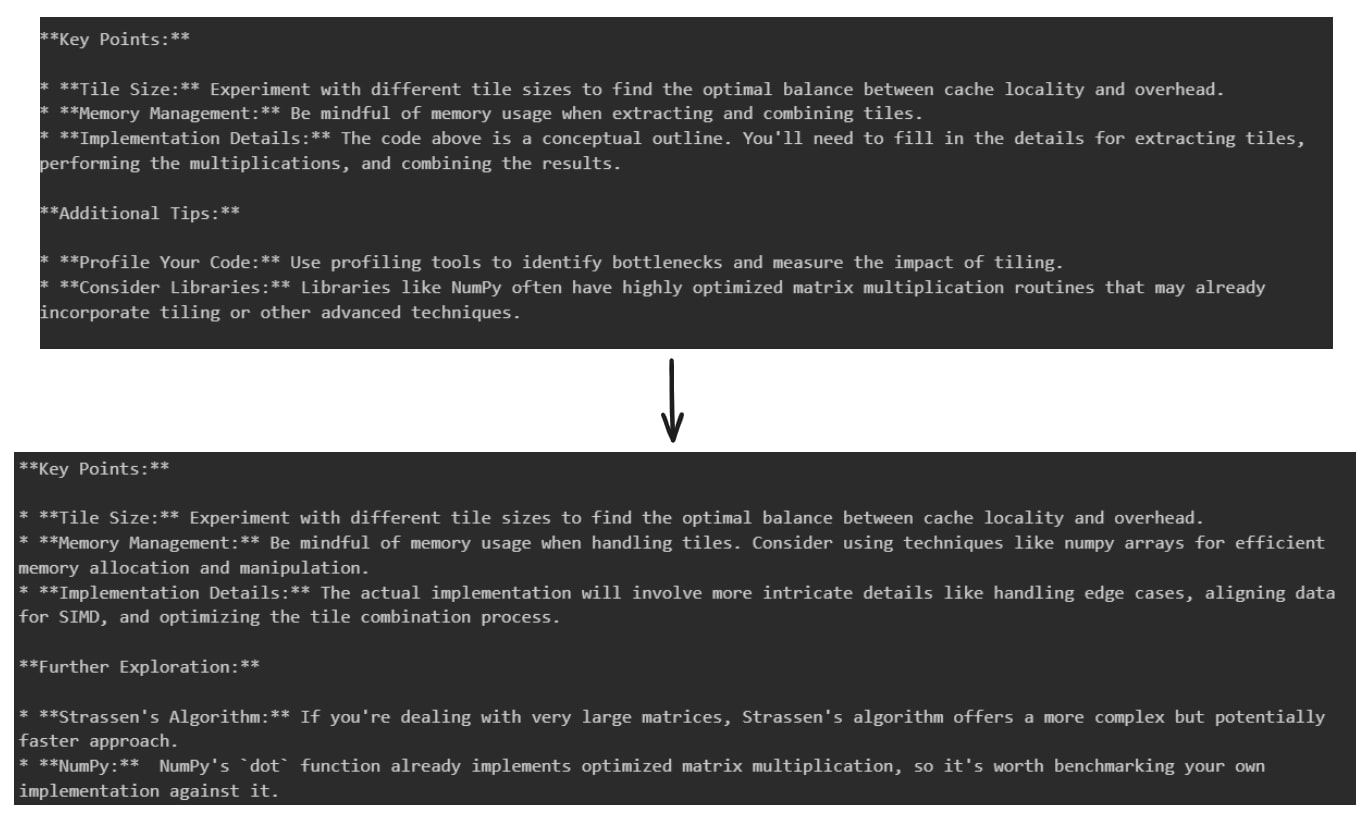

I think the model's understanding of the correct answer/solution depends on both the number of tokens in the CoT and its content. In his post "Measuring no CoT math time horizon (single forward pass)", Ryan Greenblatt shows that models are able to tackle longer time horizon problems when given...