
90

It is infinite. Imagine that FAI appears in our time, that it has human immortality as its final goal, and that it is also able to solve the problem of the end of the universe. All of these combined have, say, a 1 percent probability. Thus we have a 1 percent chance of infinite life expectancy, the median of which is also infinity.

The life expectancy is infinite, but the median is finite unless the probability of immortality is at least 50%.
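A minimal sketch of the mean-versus-median point, assuming a toy two-outcome distribution. The 80-year figure and the helper name `mean_and_median` are illustrative assumptions, not numbers from the thread:

```python
import math

def mean_and_median(outcomes):
    """outcomes: list of (probability, lifespan_in_years) pairs summing to 1."""
    mean = sum(p * years for p, years in outcomes)
    cumulative = 0.0
    median = None
    # Walk the outcomes from shortest to longest lifespan until half the
    # probability mass has been covered.
    for p, years in sorted(outcomes, key=lambda o: o[1]):
        cumulative += p
        if cumulative >= 0.5:
            median = years
            break
    return mean, median

# Assumed toy numbers: 1% chance of immortality, otherwise 80 years.
mean, median = mean_and_median([(0.99, 80.0), (0.01, math.inf)])
print(mean, median)
```

With a 1 percent chance of immortality the mean is infinite while the median stays at the finite outcome; the median only becomes infinite once the immortality branch carries more than half of the probability.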

Good point. One point against this would be that upon reflection, I expect that human immortality is not likely to be optimal in most ways we may imagine it. I expect that on most likely consequentialist framings, the resources that could be spent on continuing my own "individual self" would be more effectively used elsewhere. You might need a very liberal notion of "self" to consider what gets kept as "you."

That said, this wouldn't be a bad thing, it would be more of a series of obvious decisions and improvements.

The universe is not actually infinite.

And even if it were, there could be other things out there that could stop an FAI.

And even if there are not, people could still choose to die with a certain probability over time that leads to a finite average.

And even if not, it could turn out that we want to weight life-years lived by a subjective weight that falls over time, causing the average to be finite.
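To illustrate the "choose to die with a certain probability" case, here is a rough sketch assuming a constant annual exit probability. The 0.1 percent figure and the function name are hypothetical. The expected lifespan is then the sum of the yearly survival probabilities, which converges to one over that probability:

```python
def expected_years(annual_exit_probability, horizon=100_000):
    """Approximate expected lifespan by summing yearly survival probabilities."""
    survival = 1.0
    total = 0.0
    for _ in range(horizon):
        total += survival  # probability of still being alive at the start of this year
        survival *= 1.0 - annual_exit_probability
    return total

# Assumed toy number: a 0.1% chance per year of choosing to die.
print(expected_years(0.001))  # close to 1 / 0.001 = 1000 years
```

The same geometric sum covers the weighting case if each year's subjective weight shrinks by a constant factor: the weighted total stays finite even with no upper bound on the lifespan.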

10

I've heard it said (e.g., by Sinclair) that the current life expectancy of the human body is more like 120 years. I think the 80-year number is more of a statistical measure of how long a human lives given all the things that do kill us in the world: internal genetic flaws, biological pathogens that attack us, accidents, lifestyle impacts, and so on.

Accepting AGI by 2075, it seems like the first question to ask is whether it will only help address "the stuff that kills us now", or address that and the way our biology seems to work as well.

10

The expectation is probably around 1 billion:

10% × 10 billion years (live roughly as long as the universe has existed for already) +

90% × die within 1000 years (likely within 70 for most people here!)

Total: 1 billion
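The arithmetic above can be checked with a short sketch. It uses the 1000-year upper bound for the second branch, which is an assumption on my part, since the answer only says "within 1000 years":

```python
# (probability, lifespan in years) for each scenario in the estimate above.
scenarios = [
    (0.10, 10e9),   # 10%: live roughly as long as the universe has existed
    (0.90, 1_000),  # 90%: die within 1000 years (upper bound assumed here)
]

expectation = sum(p * years for p, years in scenarios)
print(f"{expectation:,.0f} years")
```

The second branch contributes at most 900 years, so the total is dominated entirely by the long-lived branch and rounds to 1 billion.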

I am ignoring the infinite possibilities since any finite system will start repeating configurations eventually, so I don't think that infinite life for a human even makes sense (you'd just be rerunning the life you already have and I don't think that counts).

So, I completely discount all the AGI stuff that so many here hang their hats on.

I similarly am pretty damn sure that there will be no silver bullet magic anti-aging in our future.

I am not involved in aging research so take this as the words of a vaguely interested amateur.

That being said, I noticed something interesting when I was playing with actuarial data a few years ago. I downloaded a dataset of life expectancy at every age for every year since 1950 in the United States. I was playing with it, looking for patterns, and I decided to plot life remaining at every age in 1970 versus life remaining at every age in 2000, and a striking pattern emerged.

It was a remarkably straight line with a slope that was not one. Life expectancy at every age had gone up, but it had gone up more at younger ages than at older ages in a very consistent pattern. I played with the data on more axes and the line crossed the 1:1 line of life expectancy equal to current age at approximately age 95, indicating a relative lack of improvement above that age. (I realize this description is a bit roundabout and hard to visualize and weird, but it is how I noticed the relationship. I should really dig up these graphs again...)

I repeated this with other pairs of years. Every time I did this with two years after ~1950, it produced a similar straight line, passing through the 1:1 line at age 95, only varying in the slope.

As the years have gone on, life expectancy at younger ages than 95 has continued to increase (at an ever slowing rate!), but life expectancy at older ages has not kept pace with those at younger, and the pattern is very consistent.

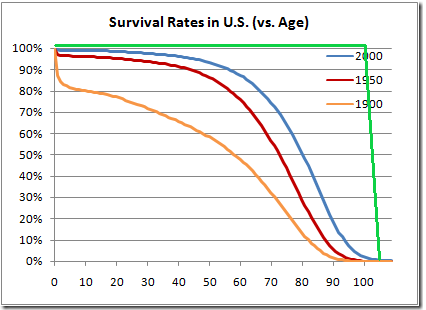

I don't have the graphs or spreadsheet or equations I did available right now, but what I ended up interpreting this as indicating is a progressive rectangularization of the mortality curve. Rather than people dropping off at all ages over time, more and more of the mortality is being concentrated in a particular age band in a very consistent way for the past seventy years. If the pattern were taken to its extreme conclusion, it would mean everyone having a life expectancy of reaching age 95 at all ages, and dropping off right then pretty reliably.

I think I get what you mean and I think I have seen graphs like that (except for the linear thingy). One quick example here:

https://sorrytoconfuseyou.wordpress.com/2008/08/25/what-does-life-expectancy-represent/

If I get you right you mean that it tends to this:

Basically yes. Though I suspect the ultimate form would probably be more like 'completely flat until 90ish then just kicking in with a consistent half life from then onwards'.
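As a rough sketch of that limiting shape, assume survival is certain until an onset age and then decays with a fixed half-life. The onset of 90 and the 3-year half-life are purely illustrative assumptions, not fitted to any data. Under that model, remaining life expectancy has a simple closed form:

```python
import math

def remaining_life(age, onset=90.0, half_life=3.0):
    """Expected remaining years under a flat-then-exponential survival curve.

    Before `onset` nobody dies; afterwards survival halves every `half_life`
    years, so the expected time left once past onset is half_life / ln(2).
    """
    tail = half_life / math.log(2)
    return max(onset - age, 0.0) + tail

for age in (0, 50, 90, 100):
    print(age, round(remaining_life(age), 1))
```

In this toy model everyone past the onset age has the same expected time left regardless of how old they already are, which is what a "consistent half life" implies.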

As usual, it pays to extrapolate from the past actuarial data, which should be available somewhere. My guess is that we are approaching saturation, barring extreme breakthroughs in longevity, which I personally find quite unlikely this century. I am also skeptical about AGI in 2075. If you had asked experts in 1970 about Moon bases, they would have expected them before 2000 with 90%+ confidence. And we already had the technology then. Instead the real progress was in a completely unexpected area. I suspect that there will be some breakthroughs in the next 50 years, but they will come as a surprise.

The reason we didn't build a moon base is because it's not immediately useful and costs insane amounts of money.

Meanwhile, GPT3 was made with a couple million dollars and could probably replace half the clickbait sites out there. AI has a much smoother incentive gradient than space tech.

Except for the long AI winter where most AI research produced very little value. Just because we've broken through one constraint and started on another S-curve does not mean we will not hit another constraint.

I think that the answer would depend very much on how you define your terms. Are we talking about the mean or the median? If the mean, then I would expect it to be dominated by a long tail, and possibly unbounded. (In the Pascal's mugging sense that ridiculously long lifespans aren't that improbable.)

Are we counting mind uploads? What if there are multiple copies of you running around the future? Are we talking about subjective time or objective time? Do we count you as still alive if you have been modified into some strange transhuman mind?

I think that the answer would depend very much on how you define your terms.

Agreed. FWIW, I purposefully left it vague because I am interested in answers to many variants of the question, and I didn't want to discourage anyone who only has something to say about one variant.

Something of a follow-on to my "answer".

The premise seems to be that AGI will be able to give significant answers to the biological questions about aging and longevity. I think if we want to say that is reasonable, we should also be highly confident that the AGI would be able to solve the same questions about itself without having been given the answers.

For instance, assume the AI has no knowledge of how its hardware or architecture is organized, no knowledge of the instruction sets, and no knowledge that it is even using binary, or of any of the information around the power or data flows within the system.

How well does the AI do in answering the question "How do I work?" Given that the computer system, the CPU, and the programming language are simpler than a biological system, understanding just how well that AI can solve itself might provide a pretty good indication of just what it can do for solving us humans.

I recently learned that when they talk about a life expectancy of, e.g., 80 years, it's assuming that the future looks similar to the past. But with exponential progress in technology, that doesn't seem like a good assumption. For example, according to Bostrom's survey (90% likelihood), the median pessimistic year for AGI is 2075.

So then, taking into account technological progress, what do we expect life expectancy to be?