An Opinionated Review of Many AI Safety Funders (with recommendations)[1]

Manifund: Somewhat mixed. Nice interface, love the team, but many of the best regranters seem inactive, some of the regranter choices are very questionable, and the setup doesn’t make clear to applicants that a person with a budget might not evaluate them, or give stats on how hard it is to get funded there. The quality of regranter choices seems extremely variable. Some have, imo, exceptional technical models, like Larsen, Zvi, and Richard Ngo, but they don’t seem super active in terms of number of donations. There are a bunch of very reasonable picks, some pretty active, like Ryan Kidd. And there are some honestly very confusing / bad picks, like the guy who quit Epoch to make a capabilities company which, if it succeeds, will clearly be bad for the world (it's literally just saying "may as well automate the economy, it's gonna happen anyway, if you can't beat 'em join 'em", while totally failing to grasp, or possibly not caring, that this leads to humanity having a very very bad time), and who has a lot of very bad takes (in my opinion and the opinions of a lot of people who seem to be thinking clearly)....

curious for more details from the people who disagree-reacted, since there's a lot going on here.

Thanks for the review! Speaking on Manifund:

The way it’s set up doesn’t make clear to applicants just how hard it is to get funded there

Is Manifund overpromising in some way, or is it just that other funders like OP/SFF don't show you the prospective/unfunded applications? My sense is the bar on getting significant funding on Manifund is not that different than the bar for other AIS funders, with some jaggedness depending on your style of project. I'd argue the homepage sorted by new/closing soon actually does quite a good job of showing what gets funded and the relative difficulty of doing so.

Many of the best regranters seem inactive, and some of the regranter choices are very questionable.

I do agree that our regrantors are less active than I'd like; historically, many of the regrantor grants go out in the last months of the year as the program comes to an end.

On matters of regrantor selection, I do disagree with your sense of taste on eg Leopold and Tamay; it is the case that Manifund is less doom-y/pause-y than you and some other LW-ers are. (But fwiw we're pretty pluralistic; eg, we helped PauseAI with fiscal sponsorship through last year's SFF round.) Furthermore, I'd challeng...

Thanks for engaging!

Is Manifund overpromising in some way

So, kinda, imo, though not too badly. For other funds, you're going to get evaluated by someone who has the ability to fund you. On Manifund, there's a good chance that none of the people with budgets will evaluate your application, I think? Looking at the page you mention, it's ~2/3 unfunded (which is better than I was tracking, so some update on that criticism), even considering that about half of them are only partly funded (and a fair few by just a trivial amount). I think if you scroll around you can get a sense of this, but it's not highlighted or easily available as a statistic (I just had to eyeball it, and will only be seeing open apps rather than the more informative closed stats). Probably putting up stats on what percentage of their requested funding projects tend to get, plus noting on the make-application page something about how this gets put in front of people with budgets, fixes this?
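A minimal sketch of the kind of summary stat suggested above, assuming each project exposes an amount requested and an amount raised so far (the `Project` fields are hypothetical, not Manifund's actual data model):

```python
from dataclasses import dataclass

@dataclass
class Project:
    requested: float  # amount the applicant asked for (hypothetical field name)
    raised: float     # amount committed by regrantors/donors so far (hypothetical)

def funding_summary(projects: list[Project]) -> dict[str, float]:
    """Share of projects that are unfunded, partly funded, or fully funded,
    plus the share of total requested money actually committed
    (each project's contribution capped at what it asked for)."""
    if not projects:
        raise ValueError("need at least one project")
    n = len(projects)
    unfunded = sum(p.raised <= 0 for p in projects)
    full = sum(p.raised > 0 and p.raised >= p.requested for p in projects)
    partial = n - unfunded - full
    total_requested = sum(p.requested for p in projects)
    total_met = sum(min(p.raised, p.requested) for p in projects)
    return {
        "unfunded": unfunded / n,
        "partial": partial / n,
        "full": full / n,
        "share_of_request_met": total_met / total_requested if total_requested else 0.0,
    }

print(funding_summary([Project(100, 0), Project(100, 50), Project(100, 100)]))
```

Run over closed rather than open projects, this gives the more informative version of the number, since open projects understate eventual funding.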

I do agree that our regrantors are less active than I'd like; historically, many of the regrantor grants go out in the last months of the year as the program comes to an end.

Right... it seems suboptimal to have a spike, invisible to applicants, in...

handle individual funding better

Do you say this because of the overhead of filling out the application?

Suggest using the EigenTrust system... can write up properly

I'm interested in hearing about it. Doesn't have to be that polished, just enough to get the idea.

(For context, I work on the S-Process)
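For anyone unfamiliar with EigenTrust: it computes global trust scores by repeatedly passing trust along a network of local "I trust this person's judgement" ratings, anchored to a small pre-trusted set. A minimal sketch of the core power iteration, assuming a matrix of trust ratings between people and a pre-trusted set such as a round's recommenders (the inputs and the `alpha` value are illustrative assumptions, not anything from the S-Process):

```python
def eigentrust(local_trust, pretrusted, alpha=0.15, iters=200, tol=1e-12):
    """Global trust scores via EigenTrust-style power iteration.

    local_trust[i][j]: how much person i trusts person j's judgement (negatives clipped to 0).
    pretrusted: indices of people trusted a priori (e.g. a fund's own recommenders).
    alpha: weight pulled back toward the pre-trusted set on every step.
    """
    n = len(local_trust)
    if not pretrusted:
        raise ValueError("need at least one pre-trusted person")
    p = [1.0 / len(pretrusted) if i in pretrusted else 0.0 for i in range(n)]
    # Row-normalise local trust; anyone who trusts nobody defers to the pre-trusted set.
    c = []
    for row in local_trust:
        clipped = [max(0.0, x) for x in row]
        total = sum(clipped)
        c.append([x / total for x in clipped] if total > 0 else list(p))
    t = list(p)
    for _ in range(iters):
        # t_new = (1 - alpha) * C^T t + alpha * p
        new_t = [(1 - alpha) * sum(c[i][j] * t[i] for i in range(n)) + alpha * p[j]
                 for j in range(n)]
        if max(abs(x - y) for x, y in zip(new_t, t)) < tol:
            return new_t
        t = new_t
    return t

# Person 0 is pre-trusted and vouches for 1; nobody vouches for 2.
print(eigentrust([[0, 1, 0], [1, 0, 0], [0, 0, 0]], {0}))
```

Scores like these could weight how much each person's takes on research value count, which is roughly the "scalably use existing takes by people you trust somewhat" idea; how that would plug into an actual funding round is left open here.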

which is heavy duty in a few ways which kinda makes sense for orgs, but not really for an individual

This makes sense, though it's certainly possible to get funded as an individual. Based on my quick count there were ~four individuals funded this round.

You kinda want a way to scalably use existing takes on research value by people you trust somewhat but aren't full recommenders

Speculation grants basically match this description. One possible difference is that there's an incentive for speculation granters to predict what recommenders in the round will favor (though they make speculation grants without knowing who's going to participate as a recommender). I'm curious for your take.

I've been keen to get a better sense of it

It's hard to get a good sense without seeing it populated with data, but I can't share real data (and I haven't yet created good fake data). I'll try my best to give an overview though.

Recommenders start by inputting two pieces of data: (a) how interested they are in investigating each proposal, and (b) disclosures of any possible conflicts of interest, so that other recommenders can vote on whether they should be recused or not.

They spend most of the round usin...

Thanks for engaging! What are the disadvantages of making information about your recommendations generally available? Some might be sensitive, but most will be harmless, and having more eyes seems beneficial: both because more cognition helps notice things, and, more importantly, for people who might end up advising donors but aren't yet.

My guess is a fair few people have forwarded you HNWIs without having much read on the object level grantmaking suggestions you tend to give (and some of those people would not have known in advance that they would get to advise the individual, so your current policy couldn't help), and that feels.. unhealthy, for something like epistemic virtue reasons.

Also, I bet people would be able to give higher-quality (and therefore more, and more successful) recommendations for HNWIs to talk with you if they had grounded evidence of what grants you suggest.

The planned Ambitious AI Alignment Seminar aims to rapidly up-skill ~35 people in understanding the foundations of conceptual/theory alignment, using a seminar format where people peer-to-peer tutor each other on topics pulled from a long list of concepts. It also aims to fast-iterate and propagate improved technical communication skills of the type that effectively seeks truth and builds bridges with people who've seen different parts of the puzzle. The seminar and the one-year fellowship following it seem like a worthwhile step towards surviving futures in worlds where superintelligence-robust alignment is not dramatically easier than it appears, for a few reasons, including:

- Having more people who have deep enough technical models of theory that they can usefully communicate predictable challenges, and inform policymakers in particular of strategically relevant considerations.

- Having many more people who have the kind of clarity which helps them shape new orgs in the worlds where alignment efforts get dramatically more funds

- Preparing the ground for any attempts at the labs' default plan (get AI to solve alignment) by making more people understand what solving alignment in a once-and-for-all way actu

Re: Ayahuasca from the ACX survey having effects like:

- “Obliterated my atheism, inverted my world view no longer believe matter is base substrate believe consciousness is, no longer fear death, non duality seems obvious to me now.”

[1]There's a cluster of subcultures that consistently drift toward philosophical idealist metaphysics (consciousness, not matter or math, as fundamental to reality): McKenna-style psychonauts, Silicon Valley Buddhist circles, neo-occultist movements, certain transhumanist branches, quantum consciousness theorists, and various New Age spirituality scenes. While these communities seem superficially different, they share a striking tendency to reject materialism in favor of mind-first metaphysics.

The common factor connecting them? These are all communities where psychedelic use is notably prevalent. This isn't coincidental.

There's a plausible mechanistic explanation: Psychedelics disrupt the Default Mode Network and adjust a bunch of other neural parameters. When these break down, the experience of physical reality (your predictive processing simulation) gets fuzzy and malleable while consciousness remains vivid and present. This creates a powerful i...

This suggests something profound about metaphysics itself: Our basic intuitions about what's fundamental to reality (whether materialist OR idealist) might be more about human neural architecture than about ultimate reality. It's like a TV malfunctioning in a way that produces the message "TV isn't real, only signals are real!"

In meditation, this is the fundamental insight, the so-called non-dual view. You are neither the fundamental non-self nor the specific self that you believe in; you're neither. They're all empty views, yet that view in itself is also empty. For that view comes from the co-creation of reality from your own perspective, yet why should that be fundamental?

Emptiness is empty and so can't be true, and you just kind of fall down into this realization that there are only circular or arbitrary properties of experience. Self and non-self are equally true, and living from this experience is wonderfully freeing.

If you view your self as a nested hierarchical controller and you see through it, then you believe that you can't be it, and so you cling onto what is next most apparent: that you're the entire universe. But that has to be false as well...

NVC is a form of variable scoping for human communication. With it, you can write communication code that avoids the most common runtime conflicts.

Human brains are neural networks doing predictive processing. We receive data from the external world, and not all of that data is trusted in the computer security sense. Some of the data would modify parts of your world model that you'd like to keep doing your own thinking with. It's jarring and unpleasant for someone to send you an informational packet that, as you parse it, moves around parts of your cognition you were relying on not being moved. For example, think back to dissonances you've felt, or seen in others, due to direct forceful claims about their internal state. This makes sense! Suffering, in predictive processing, is unresolved error signals: two conflicting world-models contained in the same system. If the other person's data packet tried to write claims directly into your world model, rather than going through your normal evaluation functions, you're often going to end up with suffering-flavoured superpositions in your world-model.

NVC is a safe-mode, restricted subset of communication where you make sure the type signature of your ...

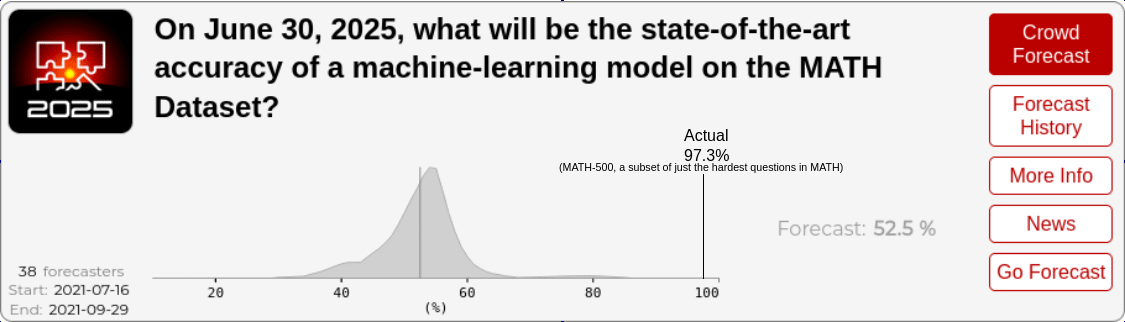

2021 forecasters vs 2025 reality:

"Current performance on [the MATH] dataset is quite low--6.9%--and I expected this task to be quite hard for ML models in the near future. However, forecasters predict more than 50% accuracy* by 2025! This was a big update for me. (*More specifically, their median estimate is 52%; the confidence range is ~40% to 60%, but this is potentially artifically narrow due to some restrictions on how forecasts could be input into the platform.)"(source)

Reality: 97.3%+ [1] (on MATH-500, a 500-problem subset of the original MATH test set, now the commonly reported version since the original benchmark has largely saturated)

Reliable forecasting requires either a generative model of the underlying dynamics, or a representative reference class. The singularity has no good reference class, so people trying to use reference classes rather than gears modelling will predictably be spectacularly wrong.

I mentioned this before, but that interface didn't allow for wide spreads, so the thing that you might be looking at is a plot of people's medians, not the whole distribution. In general Hypermind's user interface was so so shitty, and they paid so poorly, if at all, that I don't think it's fair to describe that screenshot as "superforecasters".

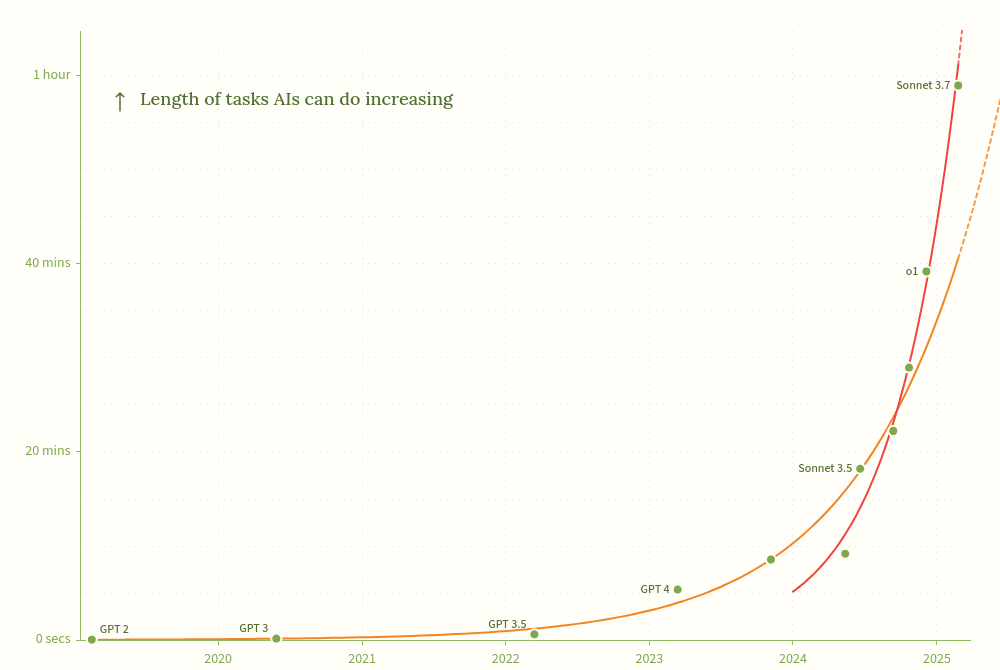

@Daniel Kokotajlo I think AI 2027 strongly underestimates current research speed-ups from AI. It expects the research speed-up is currently ~1.13x. I expect the true number is more likely around 2x, potentially higher.

Points of evidence:

- I've talked to someone at a leading lab who concluded that AI getting good enough to seriously aid research engineering is the obvious interpretation of the transition to a faster doubling time on the METR benchmark. I claim advance prediction credit for new datapoints not returning to 7 months, and instead holding at 4 months. They also expect more phase transitions to faster doubling times; I agree and stake some epistemic credit on this (unsure when exactly, but >50% on this year moving to a faster exponential).

- I've spoken to a skilled researcher originally from physics who claims dramatically higher current research throughput. Often 2x-10x, and many projects that she'd just not take on if she had to do everything manually.

- The leader of an 80 person engineering company which has the two best devs I've worked with recently told me that for well-specified tasks, the latest models are now better than their top devs. He said engineering is no

We did do a survey in late 2024 of 4 frontier AI researchers who estimated the speedup was about 1.1-1.2x. This is for their whole company, not themselves.

This also matches the vibe I’ve gotten when talking to other researchers; I’d guess they’re more likely to be overestimating than underestimating the effect, due to not adjusting enough for my next point. Keep in mind that the multiplier is for overall research progress rather than a speedup on researchers’ labor; this lowers the multiplier by a lot, because compute/data are also inputs to progress.
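To make the labor-vs-progress point concrete, here is a toy calculation assuming research progress is Cobb-Douglas in researcher labor and compute, with compute held fixed. The functional form and the 0.5 labor share are illustrative assumptions, not the model AI 2027 actually uses:

```python
def progress_multiplier(labor_speedup: float, labor_share: float) -> float:
    """Overall progress speed-up from a labor speed-up, under an assumed
    Cobb-Douglas model: progress ~ labor**labor_share * compute**(1 - labor_share).
    Compute is held fixed, so only the labor term changes."""
    if labor_speedup <= 0 or not 0.0 <= labor_share <= 1.0:
        raise ValueError("need labor_speedup > 0 and 0 <= labor_share <= 1")
    return labor_speedup ** labor_share

def labor_speedup_needed(progress_target: float, labor_share: float) -> float:
    """Inverse of the above: labor speed-up required for a given progress multiplier."""
    if progress_target <= 0 or not 0.0 < labor_share <= 1.0:
        raise ValueError("need progress_target > 0 and 0 < labor_share <= 1")
    return progress_target ** (1.0 / labor_share)

print(progress_multiplier(2.0, 0.5))    # about 1.41
print(labor_speedup_needed(1.15, 0.5))  # about 1.32
```

Under these assumptions a 2x labor speed-up gives only ~1.41x overall progress, and a ~1.15x progress multiplier corresponds to ~1.32x on labor, so the two kinds of number being compared in this thread can differ a lot depending on which one is meant.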

How do you reconcile these observations (particularly 3 and 4) with the responses to Thane Ruthenis's question about developer productivity gains?

It was posted in early March, so after all major recent releases besides o3 (edit: and Gemini 2.5 Pro). Although Thane mentions hearing nebulous reports of large gains (2-10x) in the post itself, most people in the comments report much smaller ones, or cast doubt on the idea that anyone is getting meaningful large gains at all. Is everyone on LW using these models wrong? What do your informants know that these commenters don't?

Also, how much direct experience do you have using the latest reasoning models for coding assistance?

(IME they are good, but not that good; to my ears, "I became >2x faster" or "this is better than my best devs" sound like either accidental self-owns, or reports from a parallel universe.)

If you've used them but not gotten these huge boosts, how do you reconcile that with your points 3 and 4? If you've used them and have gotten huge boosts, what was that like (it would clarify these discussions greatly to get more direct reports about this experience)?

If you, on careful reflection, think your life is probably better without <social media>, but your attempts to quit seem to fail, and you don't have the slack to debug what's going on and either decide it's good for you or come up with a working way to detach, consider that you're in the gullet of a techno-memetic-corporate system which has had a vast amount of training data on what keeps humans trapped as active users feeding it their attention and time...

... and maybe take more extreme actions.

I think the extent to which social media addiction is an artificial/cultivated problem as opposed to a natural/emergent problem is dramatically overstated in general.

One of the websites I'm addicted to checking is lesswrong.com. Is that because it's part of a corporate system optimized for money? No! It's because it's optimized for surfacing interesting content! My other addictions include tumblr and discord, which have light-to-no recommender systems.

I think discourse around social media would be a lot saner if people stopped blaming shadowy corporations for what is more like a flaw in human nature.

The new Moore's Law for AI Agents (aka More's Law) has accelerated at around the time people in research roles started to talk a lot more about getting value from AI coding assistants. AI accelerating AI research seems like the obvious interpretation, and if true, the new exponential is here to stay. This gets us to 8 hour AIs in ~March 2026, and 1 month AIs around mid 2027.[1]
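The extrapolation is plain doubling arithmetic. A minimal sketch, assuming a constant doubling time for the task-length horizon with no further acceleration; treating "one month" as ~167 working hours is an assumption made here for illustration:

```python
import math

def months_until(current_hours: float, target_hours: float, doubling_months: float) -> float:
    """Months until the AI task horizon grows from current_hours to target_hours,
    assuming it doubles every doubling_months with no further speed-up."""
    if min(current_hours, target_hours, doubling_months) <= 0:
        raise ValueError("all inputs must be positive")
    return doubling_months * math.log2(target_hours / current_hours)

# 8-hour horizon -> ~167-hour "work month" at a 4-month doubling time.
print(round(months_until(8, 167, 4), 1))  # prints 17.5
```

Any extra acceleration of the curve, like the one the first footnote gestures at, would shorten this; the function deliberately assumes none.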

I do not expect humanity to retain relevant steering power for long in a world with one-month AIs. If we haven't solved alignment, either iteratively or once-and-for-all[2], it's looking like game over unless civilization ends up tripping over its shoelaces and we've prepared.

- ^

An extra speed-up of the curve could well happen, for example with [obvious capability idea, nonetheless redacted to reduce speed of memetic spread].

- ^

From my bird's eye view of the field, having at least read the abstracts of a few papers from most organizations in the space, I would be quite surprised if we had what it takes to solve alignment in the time that graph gives us. There's not enough people, and they're mostly not working on things which are even trying to align a superintelligence.

My own experience is that if-statements are the Achilles heel even of 3.5, and 3.7 is somehow worse (when it's "almost" right, that's worse than useless; it's like reviewing pull requests when you don't know if it's an adversarial attack or if they mean well but are utterly incompetent in interesting, hypnotizing ways)... and that METR's baselines more resemble a Skinner box than programming (many people do have that kind of job, I just don't find gig-economy conditions "humane" or representative of how "value" is actually created). Then there's the sheer disconnect between what I would find "productive", "useful projects", "bottlenecks", and "what I love about my job and what parts I'd be happy to automate" vs the completely different answers on How Much Are LLMs Actually Boosting Real-World Programmer Productivity?, even from people I know personally...

I find this graph indicative of how "value" is defined by the SF investment culture and disruptive economy... and I hope the AI investment bubble will collapse sooner rather than later...

But even if the bubble collapses, automating intelligence will not be undone, it won't suddenly become "safe", the incentives to create real AGI i...

Life is Nanomachines

In every leaf of every tree

If you could look, if you could see

You would observe machinery

Unparalleled intricacy

In every bird and flower and bee

Twisting, churning, biochemistry

Sustains all life, including we

Who watch this dance, and know this key

Rationalists try to be well calibrated and have good world models, so we should be great at prediction markets, right?

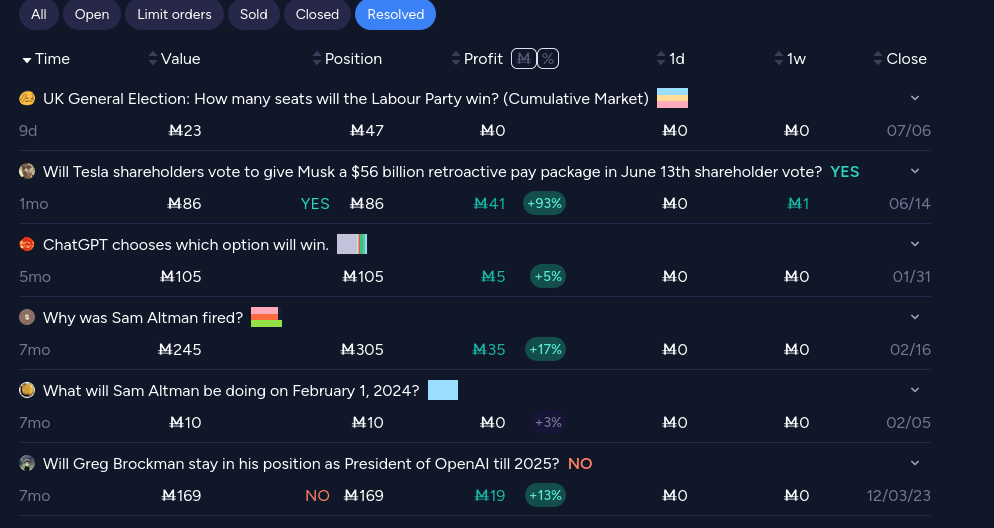

Alas, it looks bad at first glance:

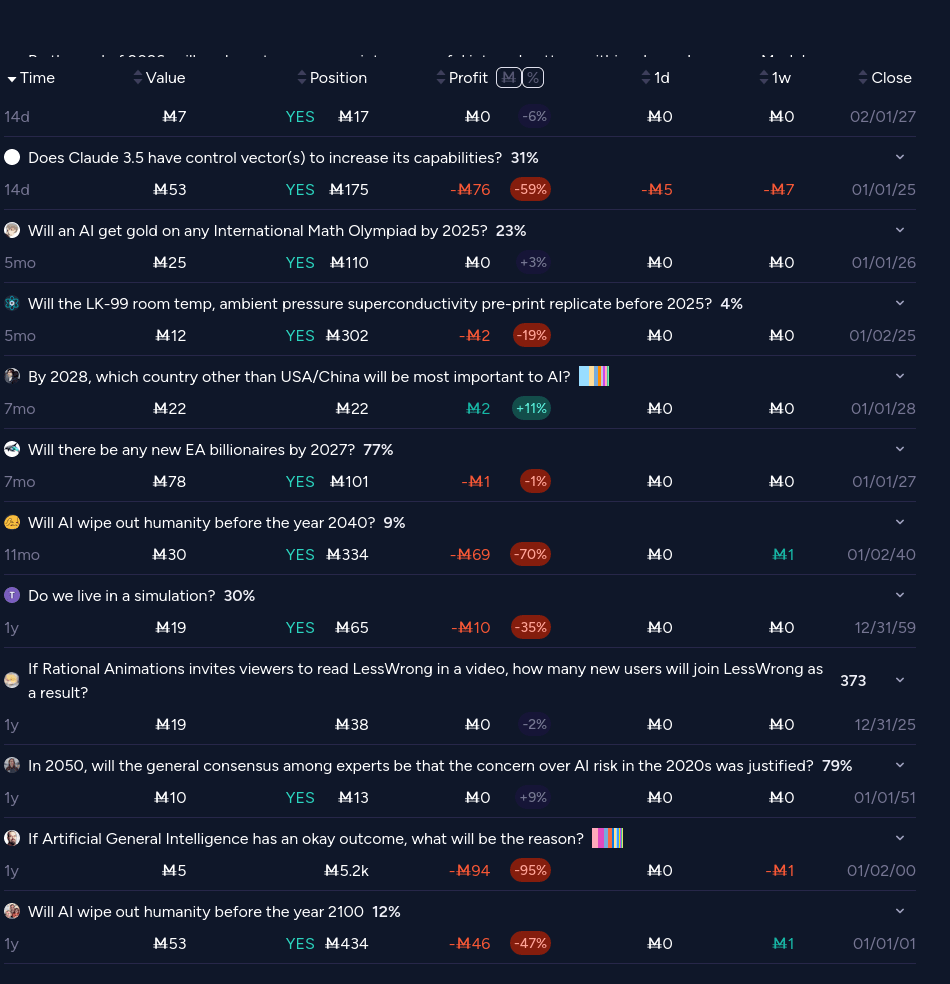

I've got a hopeful guess at why people referred from core rationalist sources seem to be losing so many bets, based on my own scores. My manifold score looks pretty bad (-M192 overall profit), but there's a fun reason for it. 100% of my resolved bets are either positive or neutral, while all but one of my unresolved bets are negative or neutral.

Here's my full prediction record:

The vast majority of my losses are on things that don't resolve soon and are widely thought to be unlikely (plus a few tiny, not particularly well thought out bets, like dropping M15 on LK-99), and I'm for sure losing points there. But my actual track record, cashed out in resolutions, tells a very different story.

I wonder if there are some clever stats that @James Grugett @Austin Chen or others on the team could do to disentangle these effects, and see what the quality-adjusted bets on critical questions like the AI doom ones would be absent this kind of effect. I'd be excited to see the UI showing an extra column on the referrers table showing cashed out ...
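One simple version of that disentangling: split profit into realized (resolved markets) and unrealized (open markets, valued at current prices). A sketch assuming each position records mana spent, current or final value, and whether its market has resolved; these fields and the toy book are hypothetical, not Manifold's API or export format:

```python
from dataclasses import dataclass

@dataclass
class Position:
    market: str
    cost: float      # mana spent on the position
    value: float     # payout if resolved, otherwise current market value (hypothetical)
    resolved: bool

def split_profit(positions: list[Position]) -> tuple[float, float]:
    """Return (realized, unrealized) profit: resolved markets vs. open ones marked to market."""
    realized = sum(p.value - p.cost for p in positions if p.resolved)
    unrealized = sum(p.value - p.cost for p in positions if not p.resolved)
    return realized, unrealized

# Toy book, made up for illustration.
book = [
    Position("short-dated, resolved in my favour", cost=10, value=25, resolved=True),
    Position("resolved, broke even", cost=20, value=20, resolved=True),
    Position("long-dated, currently priced as unlikely", cost=50, value=5, resolved=False),
]
print(split_profit(book))  # prints (15, -45)
```

A referrer-level column could then report only the realized figure (or realized profit per resolved bet), which is not dominated by long-dated markets that are currently marked against the bettor.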

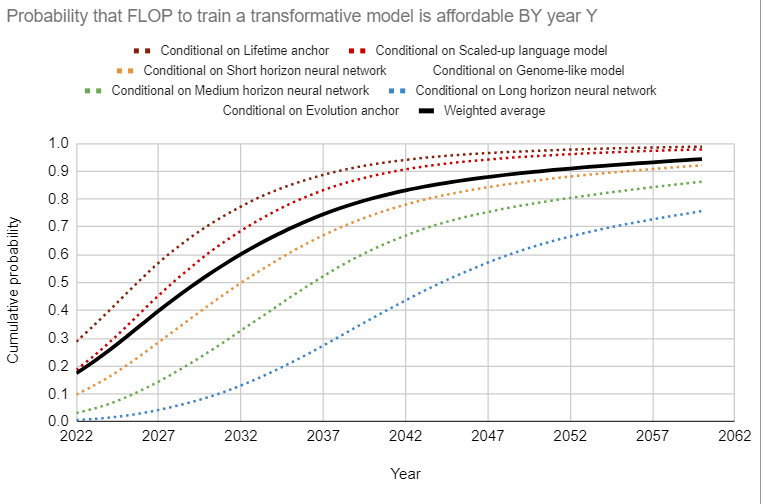

In Jan 2023[1] I ran through the bio anchors notebook[2], which lets you set a wide variety of the variables that go into the model, and changed a bunch of them to what seemed like reasonable first-pass values (e.g. setting the weight on the "evolution anchor", the hypothesis that training AGI will take as much compute as was used across all brains in all of evolutionary time, to zero, along with dozens of other minor parameters). Almost all of the parameters that seemed off were off in the direction of making timelines look longer. When everything settled, I got:

But, I noted that

this model does not take into account increases in the speed of algorithmic or hardware improvements due to AI, which is already starting to kick in (https://www.lesswrong.com/posts/camG6t6SxzfasF42i/a-year-of-ai-increasing-ai-progress), so I expect timelines will actually be notably shorter than that.

I don't remember exactly what timelines I had in mind, but probably a mean about 1-3 years sooner.

I think bio anchors is a kinda interesting and at least vaguely informative framework[3], and mostly think the wildly long timelines look like they were the result of picking many free variables in individua...

Yeah, I think the big contribution of the bioanchors work was the basic framework: roughly, have a probability distribution over effective compute for TAI, and have a distribution for effective compute over time, and combine them. Not the biological anchors.
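A minimal sketch of that basic framework, assuming (purely for illustration) a normal distribution over the log10 effective compute required for TAI, and available effective compute growing by a fixed number of orders of magnitude per year. The real bio anchors model uses much richer distributions and growth trajectories; every number below is made up:

```python
from statistics import NormalDist

def p_tai_by(year: float, req_mean: float, req_sd: float,
             base_year: float, base_compute: float, ooms_per_year: float) -> float:
    """P(TAI by `year`) = P(required compute <= available compute in `year`).

    req_mean, req_sd: mean and s.d. of the log10 effective FLOP required (assumed normal).
    base_compute: log10 effective FLOP available in base_year; assumed to grow
    linearly in log space at ooms_per_year.
    """
    available = base_compute + ooms_per_year * (year - base_year)
    return NormalDist(req_mean, req_sd).cdf(available)

for y in (2026, 2030, 2035):
    print(y, round(p_tai_by(y, req_mean=30, req_sd=3, base_year=2025,
                            base_compute=25, ooms_per_year=1.0), 2))
```

In this framing, shorter timelines come from either lowering the requirement distribution (e.g. dropping the evolution anchor) or steepening the compute trajectory (e.g. AI speeding up algorithmic progress), which are the two kinds of adjustment described earlier.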

[set 200 years after a positive singularity at a Storyteller's convention]

If We Win Then...

My friends, my friends, good news I say

The anniversary’s today

A challenge faced, a future won

When almost came our world undone

We thought for years, with hopeful hearts

Past every one of the false starts

We found a way to make aligned

With us, the seed of wondrous mind

They say at first our child-god grew

It learned and spread and sought anew

To build itself both vast and true

For so much work there was to do

Once it had learned enough to act

With the desired care and tact

It sent a call to all the people

On this fair Earth, both poor and regal

To let them know that it was here

And nevermore need they to fear

Not every wish was it to grant

For higher values might supplant

But it would help in many ways:

Technologies it built and raised

The smallest bots it could design

Made more and more in ways benign

And as they multiplied untold

It planned ahead, a move so bold

One planet and 6 hours of sun

Eternity it was to run

Countless probes to void disperse

Seed far reaches of universe

With thriving life, and beauty's play

Through endless night to endless day

Now back on Earth the plan continues

Of course, we shared with it our v...

A couple of months ago I did some research into the impact of quantum computing on cryptocurrencies, seems maybe significant, and a decent number of LWers hold cryptocurrency. I'm not sure if this is the kind of content that's wanted, but I could write up a post on it.

Re: AI auto-frontpage decisions. It's super neat to have fast decisions, but still kinda uncomfy to not have legibility on criteria?

Maybe a prompt that uses CoT to assess posts against explicit criteria would be easier to adjust and more legible than a black-box classifier? Bootstrapping from the classifier, by optimizing the prompt towards something that gives the same results as the classifier but is more legible and editable, could be the way to go?
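A minimal sketch of that bootstrapping loop, assuming you have posts the classifier has already labelled and a way to turn a candidate prompt into a yes/no judge. `make_judge` is hypothetical: in practice it would wrap an LLM call with the criteria prompt, and here it is stubbed with a keyword check so the example runs:

```python
from typing import Callable, Sequence

Judge = Callable[[str], bool]

def agreement(judge: Judge, posts: Sequence[str], labels: Sequence[bool]) -> float:
    """Fraction of posts where the prompt-based judge matches the classifier."""
    if len(posts) != len(labels) or not posts:
        raise ValueError("need equal-length, non-empty posts and labels")
    return sum(judge(p) == y for p, y in zip(posts, labels)) / len(posts)

def best_prompt(candidates: Sequence[str], make_judge: Callable[[str], Judge],
                posts: Sequence[str], labels: Sequence[bool]) -> str:
    """Pick the candidate prompt whose decisions best reproduce the classifier's."""
    return max(candidates, key=lambda c: agreement(make_judge(c), posts, labels))

# Hypothetical stub standing in for an LLM judge: "frontpage if the post mentions the keyword".
def keyword_judge(prompt: str) -> Judge:
    return lambda post: prompt.lower() in post.lower()

posts = ["New alignment research agenda", "My cat pictures", "Alignment theory notes"]
classifier_labels = [True, False, True]
print(best_prompt(["alignment", "cat"], keyword_judge, posts, classifier_labels))  # prints alignment
```

The winning prompt's criteria are plain text, so they can be read and edited directly, and the posts where it disagrees with the classifier are natural candidates for a human look.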

Let's say you want to insure against rogue superintelligence, and handwave all those pesky methodological issues like "who enforces it", "everyone being dead" and "money not being a thing when everyone is dead". How much does the insurance company risk?

First, let's try combining the statistical value of a human life ($13.2m) with Bostrom's estimate of the number of lives the future could support (10^43 - 10^58), giving a value for those future lives of between $1.32 × 10^50 ($132 quindecillion) and $1.32 × 10^65 ($132 vigintillion). This is a fairly big n...
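The multiplication is easy to check, including the short-scale names (a quick sketch; the name table only covers the range needed here):

```python
import math

VALUE_OF_LIFE = 13.2e6              # statistical value of a human life, in dollars
LIVES_LOW, LIVES_HIGH = 1e43, 1e58  # Bostrom's range for the number of future lives

# Short-scale names: 10**(3n + 3) is the n-th "-illion" (million = 10**6, n = 1).
SHORT_SCALE = {45: "quattuordecillion", 48: "quindecillion", 51: "sexdecillion",
               54: "septendecillion", 57: "octodecillion", 60: "novemdecillion",
               63: "vigintillion"}

def short_scale(x: float) -> tuple[float, str]:
    """Express x as (coefficient, name), e.g. 1.32e50 -> (about 132, 'quindecillion')."""
    exponent = math.floor(math.log10(x))
    group = exponent - exponent % 3
    return x / 10.0 ** group, SHORT_SCALE[group]

for lives in (LIVES_LOW, LIVES_HIGH):
    total = VALUE_OF_LIFE * lives
    coeff, name = short_scale(total)
    print(f"${total:.3g} = ${coeff:.0f} {name}")
```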

My current guess as to Anthropic's effect:

- 0-8 months shorter timelines[1]

- Much better chances of a good end in worlds where superalignment doesn't require strong technical philosophy[2] (but I put very low odds on being in this world)

- Somewhat better chances of a good end in worlds where superalignment does require strong technical philosophy[3]

- ^

Shorter due to:

- There being a number of people who might otherwise not have been willing to work for a scaling lab, or not do so as enthusiastically/effectively (~55% weight)

- Encouraging race dynamics (~30%)

- Making

A metaphor I told to a family member who has worked for ~decades in climate change mitigation, after she compared AI to fossil fuels when I explained the incentives around AI regulation and economics/national security.

Fossil Fuels 2.0: Now with the technology trying to agentically bootstrap itself to godhood, aided by its superhuman persuasive abilities.

Thinking about some things I may write. If any of them sound interesting to you let me know and I'll probably be much more motivated to create it. If you're up for reviewing drafts and/or having a video call to test ideas that would be even better.

- Memetics mini-sequence (https://www.lesswrong.com/tag/memetics has a few good things, but no introduction to what seems like a very useful set of concepts for world-modelling)

- Book Review: The Meme Machine (focused on general principles and memetic pressures toward altruism)

- Meme-Gene and Meme-Meme co-evolution (fo