The mechanism described here seems fairly plausible prima facie, but it seems like there's something self-undermining about it.

Suppose I am a smart person and I prefer to associate with smart people. As a result of this, I see evidence everywhere that being smart is anticorrelated with all the other things I care about. (And indeed that they are all anticorrelated with one another.) As a result of this, I come to have a "bias against general intelligence".

What happens then? Aren't I, with my newly-formed anti-g bias, likely to stop preferring to associate with smart people? And won't this make those anticorrelations stop appearing?

Maybe not. For instance, maybe it was never that I prefer to associate with smart people. Maybe it's that, being a smart person myself, I find that my job and hobbies tend to be smart-person jobs and hobbies, the people I got to know at university are smart people, and so forth. So whatever my preferences, being smart is going to give you an advantage in getting to know me, and then all the same mechanisms can operate.

Or maybe all my opinions are formed in my youth when I am hanging out with smart people, and then having developed an anti-g bias I stop hanging out with smart people, but by then my brain is too ossified for me to update my biases just because I've stopped seeing evidence for them.

Still, this does have at least a whiff of paradox about it. I am the more inclined to think so because it seems kinda implausible for a couple of other reasons.

First, there's nothing very special about g here. If I tend to associate with smart people, socialists and sadomasochists[1], doesn't this argument suggest that as well as starting to think that smart people tend to be sexually repressed fascists I should also start to think that socialists are sexually repressed and stupid, and that sadomasochists are stupid fascists? Shouldn't this mechanism lead to a general disenchantment with all the characteristics one favours in one's associates?

[1] Characteristics chosen for the alliteration and for being groups that a person might in fact tend to associate with. They aren't a very good match for my actual social circles.

Second, where's the empirical evidence? We've got the example of Taleb, but his anti-intellectualism could have lots of other causes besides this mechanism, and I can't say I've noticed any general tendency for smart people to be more likely to think that intelligence is correlated with bad things. (For sure, sometimes they do. But sometimes stupid people do too.)

If I look within myself (an unreliable business, of course, but it seems like more or less the best I can do), I don't find any particular sense that intelligent people are likely to be ruder or less generous or uglier or less fun to be with or (etc.), nor any of the other anticorrelations that this theory suggests I should have learned.

And there's a sort of internal natural experiment I can look at. About 15 years ago I had a fairly major change of opinion: I deconverted from Christianity. So, this would suggest that (1) back then I should have expected clever people to be less religious, (2) now I should expect them to be more religious, (3) back then I should have expected religious people to be less clever, and (4) now I should expect them to be cleverer. (Where "religious" should maybe mean something like "religiously compatible with my past self".) I think #1 and #3 are in fact at least slightly correct (and one of the things that led me to consider whether in fact some of my most deeply held beliefs were bullshit was the fact that on the whole the smartest people seemed rarely to be religious) but #2 and #4 are not. This isn't outright inconsistent with the theory being advanced here -- it could be, as I said above, that I'm just not as good at learning from experience as I used to be, because I'm older. But the simpler explanation, that very clever people do tend to be less religious (or at least systematically seem so to me for some reason), seems preferable to me.

There's a pretty famous paper showing that math and verbal intelligence trade off against each other. It was done on students at a mid-tier college; the actual cause was that students who were good at both went to a better school. So the effect definitely exists.
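A minimal sketch of that admissions story, under made-up assumptions: math and verbal ability are standard normal with a population correlation of 0.5, and the hypothetical mid-tier college ends up with the students whose combined score falls between the 60th and 90th percentiles (weaker applicants are rejected, stronger ones go elsewhere). None of these numbers come from the paper.

```python
import numpy as np


def corr(x, y):
    return np.corrcoef(x, y)[0, 1]


rng = np.random.default_rng(0)
n = 200_000
rho = 0.5  # assumed population correlation between math and verbal ability
math = rng.standard_normal(n)
verbal = rho * math + np.sqrt(1 - rho**2) * rng.standard_normal(n)

total = math + verbal
lo, hi = np.quantile(total, [0.60, 0.90])
# Hypothetical mid-tier college: too weak overall -> rejected,
# strong on both -> went to a better school.
mid_tier = (total > lo) & (total <= hi)

population_r = corr(math, verbal)
mid_tier_r = corr(math[mid_tier], verbal[mid_tier])
print(f"whole population: r = {population_r:+.2f}")
print(f"mid-tier college: r = {mid_tier_r:+.2f}")
```

Under these assumptions the positive population correlation should come out clearly negative inside the admitted band.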

That said, I'm not convinced it's the dominant cause here. Being known for being aggressive and mean while blocking people who politely disagree pollutes your data in all kinds of ways.

Suppose I am a smart person and I prefer to associate with smart people. As a result of this, I see evidence everywhere that being smart is anticorrelated with all the other things I care about. (And indeed that they are all anticorrelated with one another.) As a result of this, I come to have a "bias against general intelligence".

What happens then? Aren't I, with my newly-formed anti-g bias, likely to stop preferring to associate with smart people? And won't this make those anticorrelations stop appearing?

First, I don't necessarily know that this bias is strong enough to counteract other biases like self-serving biases, or to counteract smarter people's better ability to understand the truth.

Secondly, I said that it creates the illusion of a tradeoff between g and good things. But g still has things that give it advantages in the first place, since it would still generally correlate with smart ideas outside of whatever thing one is valuing.

But also, I don't know that people are being deliberate in associating with similarly intelligent people. It might also have happened as a result of stratification by job, interests, class, politics, etc. Some people I know who have more experience with how social networks form across society claim that this is more what tends to happen, though I don't know what they are basing it on.

First, there's nothing very special about g here. If I tend to associate with smart people, socialists and sadomasochists[1], doesn't this argument suggest that as well as starting to think that smart people tend to be sexually repressed fascists I should also start to think that socialists are sexually repressed and stupid, and that sadomasochists are stupid fascists? Shouldn't this mechanism lead to a general disenchantment with all the characteristics one favours in one's associates?

[1] Characteristics chosen for the alliteration and for being groups that a person might in fact tend to associate with. They aren't a very good match for my actual social circles.

What's special about g is that people assort on it. That also applies to your examples, but it doesn't apply to most variables.

Generally: points taken. On the last point: there may not exactly be assortment on other variables, but surely it's true that people generally prefer to hang out with others who are kinder, more interesting, more fun to be with, more attractive, etc.

As you select for more variables, the collider relationship between any individual pair of variables gets weaker, because you can't select as strongly on each one. So there's a limit to how many variables this effect can work for at once.
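A minimal sketch of this dilution effect, assuming k independent standard-normal traits and selection of the top 10% of people by their unweighted sum (the function name and parameters are hypothetical): the average pairwise correlation among the selected people should shrink toward zero as k grows.

```python
import numpy as np


def mean_pairwise_corr(k, n=100_000, keep=0.10, seed=0):
    """Select the top `keep` fraction on the sum of k independent traits and
    return the average correlation between distinct traits among those selected."""
    rng = np.random.default_rng(seed)
    traits = rng.standard_normal((n, k))
    score = traits.sum(axis=1)
    chosen = traits[score > np.quantile(score, 1 - keep)]
    c = np.corrcoef(chosen, rowvar=False)  # k x k correlation matrix
    return c[~np.eye(k, dtype=bool)].mean()  # average of off-diagonal entries


results = {k: mean_pairwise_corr(k) for k in (2, 4, 8)}
for k, r in results.items():
    print(f"{k} traits selected on: mean pairwise r = {r:+.2f}")
```

Every pair still ends up negatively correlated, but selecting on eight traits at once leaves each pair with a much weaker collider effect than selecting on two.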

My argument (I think) bypasses this problem because 1. there's a documented fairly strong assortment on intelligence, 2. I specifically limit the other variable to whichever one they personally particularly value.

I think I can reconcile your experiences with Taleb's (and expand out the theory that tailcalled put forth). The crux of the extension is that relationships are two-way streets and almost everyone wants to spend time with people who are better than them in the domain of interest.

The consequence of this is that most people are constrained equally by who they want in their social circle and by who wants them in their social circle. While most people would like to hang out with the super smart or super domain-competent (which would induce the negative correlation), those people are busy because everyone wants to hang out with them, and so they hang out with their neighbor instead. Since Taleb is extraordinary, almost everyone wants to hang out with him, so the distribution of people he hangs out with matches his preference criteria. For most people near the center of the cluster, the bidirectionality of relationships leads them to social circles that are, well, circle-shaped. Taleb, in a fashion true to himself, is dealing with the tail end of a multivariate distribution.

Since normal people are dealing with social Circles instead of social Tails, they do not experience the negative correlation that Taleb does.
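A cartoon of this, under made-up assumptions: two independent standard-normal traits, an in-demand person who takes everyone with g + valued > 3, and an average person whose reciprocal social circle is everyone within distance 1 of the origin in trait space. The variable names and thresholds are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000
g = rng.standard_normal(n)
valued = rng.standard_normal(n)  # assumed independent of g in the population

# "Tail": an in-demand person can simply pick everyone who clears a high bar.
tail = g + valued > 3
# "Circle": an average person (at 0, 0) ends up with people near them in trait
# space, since people far above them are busy and people far below are dropped.
circle = g**2 + valued**2 < 1

r_tail = np.corrcoef(g[tail], valued[tail])[0, 1]
r_circle = np.corrcoef(g[circle], valued[circle])[0, 1]
print(f"tail-shaped social circle:   r = {r_tail:+.2f}")
print(f"circle-shaped social circle: r = {r_circle:+.2f}")
```

In this toy setup the tail selection shows a strong negative correlation while the circle shows roughly none.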

The standard example I've always seen for "collider bias" is that we have a bunch of restaurants in our hypothetical city, and it seems like the better their food, the worse their drinks, and vice versa. This is (supposed to be) because places with bad food and drinks go out of business and there is a cap on effort that can be applied to food or drinks.

How would the self-defeating thing play in here? I don't see yet why it shouldn't, but I also don't recognize a way for it to happen, either. Could you walk me through it?

I don't think it would, in practice. One reason is that "bad/bad places go out of business" is a mechanism that doesn't go via your preferences in the way that "you spend time with smart nice people" does. But if it did I think it would go like this.

You go to restaurants that have good food or good drinks or both. This induces an anticorrelation between food quality and drinks quality in the restaurants you go to. After a while you notice this. You care a lot about (let's say) having really good food, and having got the idea that maybe having good drinks is somehow harmful to food quality you stop preferring restaurants with good drinks. Now you are just going to restaurants with good food, and not selecting on drinks quality, so the collider bias isn't there any more (this is the bit that's different if in fact there's a separate selection that kills restaurants whose food and drink are both bad, which doesn't correspond to anything in the interpersonal-relations scenario), so you decide you were wrong about the anticorrelation. So you start selecting on drink quality again, and the anticorrelation comes back. Repeat until bored or until you think of collider bias as an explanation for your observations.
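The loop described above can be sketched as a small simulation. It assumes food and drink quality are independent standard normals across the city, that "selecting on both" means visiting places where food + drinks > 2, that "food only" means food > 1, and that the diner believes in a tradeoff whenever the observed correlation drops below -0.2; all of these numbers are made up.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50_000
food = rng.standard_normal(n)
drinks = rng.standard_normal(n)  # assumed independent of food across the whole city


def observed_corr(select_on_drinks):
    """Correlation between food and drinks in the restaurants you actually visit."""
    if select_on_drinks:
        visited = food + drinks > 2.0  # good food, good drinks, or both
    else:
        visited = food > 1.0  # only food quality matters now
    return np.corrcoef(food[visited], drinks[visited])[0, 1]


select_on_drinks = True
history = []
for _ in range(6):
    r = observed_corr(select_on_drinks)
    history.append((select_on_drinks, r))
    # Naive belief update: a clear anticorrelation is read as a real tradeoff,
    # so the diner stops caring about drinks; no anticorrelation, so they care again.
    select_on_drinks = r > -0.2

for selecting, r in history:
    print(f"selecting on drinks: {str(selecting):5}  observed r = {r:+.2f}")
```

This sketch deliberately leaves out the separate mechanism where restaurants with bad food and bad drinks go out of business; it only models the diner's own selection, which is the part that corresponds to choosing whom to spend time with.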

What this suggests is that the oft-touted connection between altruistic behavior and intelligence may have a root in self-preservation. Intelligent people are much more capable of serious undetected dishonesty, and so have to visibly refrain from violence, crime, impoliteness, etc. to make up for it.

There's also some evidence that different cognitive skills correlate less at high g than low g: https://en.wikipedia.org/wiki/G_factor_(psychometrics)#Spearman's_law_of_diminishing_returns

So if you mostly interact with very intelligent people, it might be relatively less useful to think about unidimensional intelligence.

Aha, so it's not just the Mensa members who are arrogant and annoying! It's humans in general; the stupid ones probably even more so.

I wasn't aware of the concept of collider bias until now! Any further reading on the topic you'd recommend?

"The Tails Coming Apart As Metaphor For Life" is a classic Slate Star Codex post about it, based on the 2014 LessWrong post "Why the tails come apart". Both of them use the phrasing "tails coming apart" to refer to the bias, since in the graph it seems like there are two separate "tails" of people while they are both a subset of the larger circular group of people.

Interestingly, neither post refers to the term "collider bias", but they are definitely talking about the same concept.

Hm, I had never thought of the tails coming apart as an instance of collider bias before, but I guess it makes sense: you are conditioning on "X is high or Y is high".

https://en.wikipedia.org/wiki/Berkson%27s_paradox
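Conditioning on "X is high or Y is high" can be checked in a few lines. A minimal sketch, assuming two independent standard-normal variables and taking "high" to mean more than one standard deviation above the mean:

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000
x = rng.standard_normal(n)
y = rng.standard_normal(n)  # independent of x by construction

# Condition on "X is high OR Y is high" (here: above one standard deviation).
either_high = (x > 1) | (y > 1)

r_all = np.corrcoef(x, y)[0, 1]
r_selected = np.corrcoef(x[either_high], y[either_high])[0, 1]
print(f"everyone: r = {r_all:+.2f}; X or Y high: r = {r_selected:+.2f}")
```

Among the selected points, the ones with unremarkable X must have high Y to be included, which is what produces the negative correlation.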

I also liked this numberphile video about it: Link

I’d assume that most things people value are associated with IQ. If that association is strong enough, then I believe it still shows up as a positive correlation when you exclude the people who don’t make the cut.

I’d assume that most things people value are associated with IQ.

I'm not sure that's true. E.g. it doesn't seem to apply to personality or politics. (Or well, politics is associated, but people can value either side of politics, and so it can just as well be negatively correlated as positively correlated.)

If that association is strong enough, then I believe it still shows up as a positive correlation when you exclude the people who don’t make the cut.

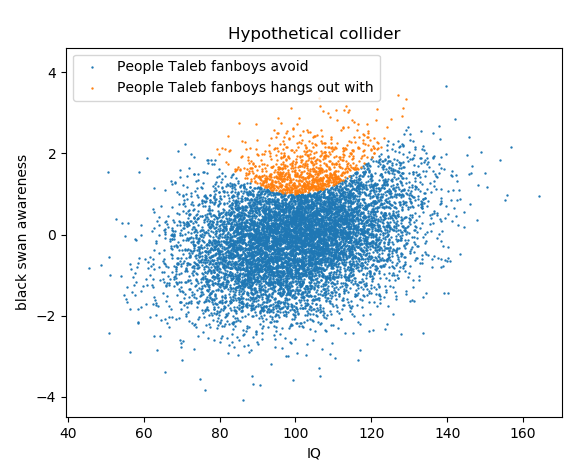

Yes, but collider bias can lead to a surprisingly strong negative bias in the correlation. E.g. in my simulation, g and black swan awareness were initially positively correlated.
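Both halves of this exchange can be seen in a minimal sketch that assumes the main post's sum-threshold selection (g + other > 3) and standard-normal traits: with a weak population correlation (0.3) the selected group should show a negative correlation, while a strong one (0.9) should stay positive. The function name is hypothetical.

```python
import numpy as np


def selected_corr(rho, threshold=3.0, n=200_000, seed=0):
    """Return (population r, r among people with g + other > threshold)."""
    rng = np.random.default_rng(seed)
    g = rng.standard_normal(n)
    other = rho * g + np.sqrt(1 - rho**2) * rng.standard_normal(n)
    chosen = g + other > threshold
    return np.corrcoef(g, other)[0, 1], np.corrcoef(g[chosen], other[chosen])[0, 1]


for rho in (0.3, 0.9):
    before, after = selected_corr(rho)
    print(f"population r = {before:+.2f} -> r among selected = {after:+.2f}")
```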

So ... smart people are worse than average at the task of evaluating whether or not smart people are worse than average at some generic task which requires intellectual labor to perform, and in fact smart people should be expected to be better than average at some generic task which requires intellectual labor to perform?

Isn't the task of evaluating whether or not smart people are worse than average at some generic task which requires intellectual labor to perform, itself a task which requires intellectual labor to perform? So shouldn't we expect them to be better at it?

ETA:

Consider the hypothetical collider from the perspective of somebody in the middle; people in the upper right quadrant have cut them off, and they have cut people in the lower left quadrant. That is, they should observe exactly the same phenomenon in the people they know. Likewise, a below average person. Thus, the hypothetical collider should lead every single person to observe the same inverted relationship between IQ and black swan awareness; the effect isn't limited to the upper right quadrant. That is, if smart people are more likely to believe IQ isn't particularly important than less-smart people, this belief cannot arise from the hypothetical collider model, which predicts the same beliefs among all groups of people.

So ... smart people are worse than average at the task of evaluating whether or not smart people are worse than average at some generic task which requires intellectual labor to perform, and in fact smart people should be expected to be better than average at some generic task which requires intellectual labor to perform?

Isn't the task of evaluating whether or not smart people are worse than average at some generic task which requires intellectual labor to perform, itself a task which requires intellectual labor to perform? So shouldn't we expect them to be better at it?

I mentioned this as a bias that a priori very much seems like it should exist. This does not mean smart people can't get the right answer anyway, by using their superior skills. (Or because they have other biases in favor of intelligence, e.g. self-serving biases.) Maybe they can, I wouldn't necessarily make strong predictions about it.

Consider the hypothetical collider from the perspective of somebody in the middle; people in the upper right quadrant have cut them off, and they have cut people in the lower left quadrant. That is, they should observe exactly the same phenomenon in the people they know. Likewise, a below average person. Thus, the hypothetical collider should lead every single person to observe the same inverted relationship between IQ and black swan awareness; the effect isn't limited to the upper right quadrant. That is, if smart people are more likely to believe IQ isn't particularly important than less-smart people, this belief cannot arise from the hypothetical collider model, which predicts the same beliefs among all groups of people.

I think it depends on the selection model.

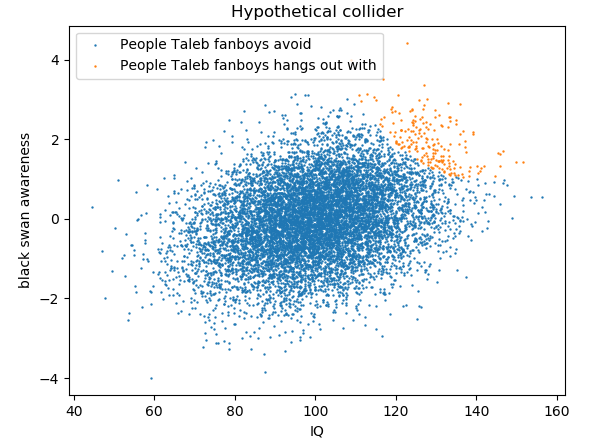

The simulation in the main post assumed a selection model of black_swan_awareness + g > 3. If we instead change that to black_swan_awareness - g**2 * 0.5 > 1, we get the following:

This seems to exhibit a positive correlation.

For convenience, here's the simulation code in case you want to play around with it:

```python
import numpy as np
import matplotlib.pyplot as plt

N = 10000

# g is standardized; black swan awareness has unit variance and correlates 0.3 with g.
g = np.random.normal(0, 1, N)
black_swan_awareness = np.random.normal(0, np.sqrt(1 - 0.3**2), N) + 0.3 * g

# Selection model from this comment; the main post used g + black_swan_awareness > 3.
selected = black_swan_awareness - g**2 * 0.5 > 1

# Put g on the conventional IQ scale (mean 100, SD 15) for the x-axis.
iq = g * 15 + 100

plt.scatter(iq[~selected], black_swan_awareness[~selected], s=0.5, label="People Taleb fanboys avoid")
plt.scatter(iq[selected], black_swan_awareness[selected], s=0.5, label="People Taleb fanboys hang out with")
plt.legend()
plt.xlabel("IQ")
plt.ylabel("black swan awareness")
plt.title("Hypothetical collider")
plt.show()
```

Yes, it does depend on the selection model; my point was that the selection model you were using made the same predictions for everybody, not just Taleb. And yes, changing the selection model changes the results.

However, in both cases, you've chosen the selection model that supports your conclusions, whether intentionally or accidentally; in the post, you use a selection model that suggests Taleb would see a negative association. Here, in response to my observation that that selection model predicts -everybody- would see a negative association, you've responded with what amounts to an implication that the selection model everybody else uses produces a positive association. I observe that, additionally, you've changed the labeling to imply that this selection model doesn't apply to Taleb, and "smart people" generally, but rather their fanboys.

However, if Taleb used this selection model as well, the argument presented in the main post, based on the selection model, collapses.

Do you have an argument, or evidence, for why Taleb's selection model should be the chosen selection model, and for why people who aren't Taleb should use this selection model instead?

However, if Taleb used this selection model as well, the argument presented in the main post, based on the selection model, collapses.

No, if I use this modified selection model for Taleb, the argument survives. For instance, suppose he has an IQ of 140, i.e. 2.67 sigma above average in g. Then his selection expression should be black_swan_awareness - (g-2.67)**2 * 0.5 > 1. Putting this into the simulation gives the following results:
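The same comparison can be summarized numerically rather than as a plot. This sketch reuses the setup of the simulation code above (0.3 population correlation, parabola selection with coefficient 0.5 and cutoff 1) and only moves the center of the parabola, from average g (0) to the assumed 2.67 for Taleb; the helper name is hypothetical.

```python
import numpy as np


def corr_among_selected(center, n=200_000, seed=0):
    """Correlation of g and black swan awareness among people with
    black_swan_awareness - (g - center)**2 * 0.5 > 1."""
    rng = np.random.default_rng(seed)
    g = rng.standard_normal(n)
    bsa = rng.normal(0, np.sqrt(1 - 0.3**2), n) + 0.3 * g
    chosen = bsa - (g - center) ** 2 * 0.5 > 1
    return np.corrcoef(g[chosen], bsa[chosen])[0, 1]


r_average = corr_among_selected(center=0.0)
r_taleb = corr_among_selected(center=2.67)
print(f"parabola centered at average g: r = {r_average:+.2f}")
print(f"parabola centered at g = 2.67:  r = {r_taleb:+.2f}")
```

With the parabola centered at 2.67, most selected people sit to the left of the center, where lower g requires higher black swan awareness to clear the bar, so the correlation among them should come out negative.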

You have a simplification in your "black swan awareness" column which I don't think is appropriate to carry over; in particular you'd need to rewrite the equation entirely to deal with an anti-Taleb, who doesn't believe in black swans at all. (It also needs to deal with the issue of reciprocity; if somebody doesn't hang out with you, you can't hang out with them.)

You probably end up with a circle, the size of which determines what trends Taleb will notice; for the size of the apparent circle used for the fan, I think Taleb will notice a slight downward trend with 100-120 IQ people, followed by a general upward trend - so being slightly smart would be negatively correlated, but being very smart would be positively correlated. Note that the absolute smartest people - off on the far right of the distribution - will observe a positive correlation, albeit a weaker one. The people absolutely most into black swan awareness - generally at the top - likewise won't tend to notice any strong trends, but it will tend to be a weaker positive correlation. The people who are both very into black swan awareness and also smart will notice a slight downward correlation, though not a strong one. People who are unusually black swan un-aware, and higher-but-not-highest IQ, whatever that means, will instead notice an upward correlation.

The net effect is that a randomly chosen "smart person" will notice a slight upward correlation.

Selection-induced correlation depends on the selection model used. It is valuable to point out that tailcalled implicitly assumes a specific selection model to generate a charitable interpretation of Taleb. But proposing more complex (/ less plausible for someone to employ in their life) models instead is not likely to yield a more believable result.

“No free lunch in intelligence” is an interesting thought; can you make it more precise?

Intelligence is more effective in combination with other skills, which suggests “free lunch” as opposed to tradeoffs.

Basically, the idea is that e.g. if you are smarter at solving math tests where you have to give the right answer, then that will make you worse at e.g. solving math "tests" where you have to give the wrong answer. So for any task where intelligence helps, there is an equal and opposite task where intelligence hurts.
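A toy version of the "equal and opposite task", assuming every question has exactly two possible answers (so that "the wrong answer" is unique): whatever fraction a solver gets right on the ordinary test, it gets exactly the remaining fraction right on the inverted one. The answer key and solver are made up.

```python
def accuracy(predictions, answer_key):
    """Fraction of questions where the prediction matches the key."""
    return sum(p == a for p, a in zip(predictions, answer_key)) / len(answer_key)


answer_key = [True, False, True, True, False, True, False, False]
inverted_key = [not a for a in answer_key]  # the "give the wrong answer" test

# A skilled solver that gets 7 of the 8 ordinary questions right.
skilled = answer_key[:-1] + [not answer_key[-1]]

print(accuracy(skilled, answer_key))    # 0.875
print(accuracy(skilled, inverted_key))  # 0.125
```

With two options per question the two accuracies always sum to 1, so any skill that helps on one test hurts by the same amount on the other.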

if you are smarter at solving math tests where you have to give the right answer, then that will make you worse at e.g. solving math "tests" where you have to give the wrong answer.

Is that true though? If you're good at identifying right answers, then by process of elimination you can also identify wrong answers.

I mean sure, if you think you're supposed to give the right answer then yes you will score poorly on a test where you're actually supposed to give the wrong answer. Assuming you get feedback, though, you'll soon learn to give wrong answers and then the previous point applies.

I was assuming no feedback, like the test looks identical to an ordinary math test in every way.

The "no free lunch" theorem also applies in the case where you get feedback, but there it is harder to construct. Basically, in such a case the task would need to be anti-inductive, always providing feedback that your prior gets misled by.

Of course these sorts of situations are kind of silly, which is why the no free lunch theorem is generally considered to be only of academic interest.
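An anti-inductive task can be sketched in the same toy style. This is a hypothetical setup for illustration only: a learner predicts the next bit by majority vote over the feedback it has seen so far, and the environment always produces the opposite of whatever the learner predicts, so the feedback keeps misleading it.

```python
def majority_predictor(history):
    """Predict the next bit as the majority of bits seen so far (ties -> 1)."""
    return 1 if 2 * sum(history) >= len(history) else 0


def anti_inductive_environment(predictor, rounds=100):
    """Run the learner against an environment that always does the opposite."""
    history, correct = [], 0
    for _ in range(rounds):
        guess = predictor(history)
        outcome = 1 - guess  # the environment contradicts the prediction
        correct += guess == outcome
        history.append(outcome)  # feedback the learner will use next round
    return correct / rounds


print(anti_inductive_environment(majority_predictor))  # 0.0
```

Any deterministic predictor scores zero against such an environment, which is part of why these constructions are of mostly academic interest.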

I think the argument in the post is solid in the abstract, but when I think about my own experience I find that even among people I know, high g-factor is strongly correlated with other positive traits, either mental traits (like intellectual honesty, willingness to admit mistakes, lack of overconfidence) or personality traits (being nicer overall, being more forgiving of the mistakes of others, less likely to fall into fundamental attribution errors, etc.)

I see three possible explanations for this:

- The correlation in the entire population is strong, and the selection bias I introduce is just on people being smart rather than them being smart + having some other positive traits. In this case my selection threshold looks like a vertical line in the scatter plot rather than a diagonal line, and a high positive correlation would probably survive that kind of thresholding.
- The correlation in the entire population is so strong that it remains positive even after correcting for collider bias. I think this option is considerably less likely, because I find it quite implausible that positive personality traits or intellectual habits are that correlated with general intelligence.
- I'm biased in favor of smart people and think they are better on other dimensions than they actually are.
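The difference between a vertical and a diagonal selection threshold can be sketched directly. This assumes a population correlation of 0.5 between g and some other positive trait and admits the top 10% of people under either rule; both numbers are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200_000
rho = 0.5  # assumed population correlation between g and another positive trait
g = rng.standard_normal(n)
trait = rho * g + np.sqrt(1 - rho**2) * rng.standard_normal(n)


def corr(mask):
    """Correlation between g and the trait among the people in `mask`."""
    return np.corrcoef(g[mask], trait[mask])[0, 1]


keep = 0.10  # both rules admit the top 10% of people
vertical = g > np.quantile(g, 1 - keep)  # select on g only
combined = g + trait
diagonal = combined > np.quantile(combined, 1 - keep)  # select on g + trait

r_vertical = corr(vertical)
r_diagonal = corr(diagonal)
print(f"vertical threshold (g only): r = {r_vertical:+.2f}")
print(f"diagonal threshold (g + trait): r = {r_diagonal:+.2f}")
```

In this setup the vertical cut attenuates the correlation but leaves it positive, while the diagonal cut flips it negative.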

I imagine my experience can't be that uncommon, but it's interesting to think that due to the collider bias it might actually be abnormal and probably merits some explanation.

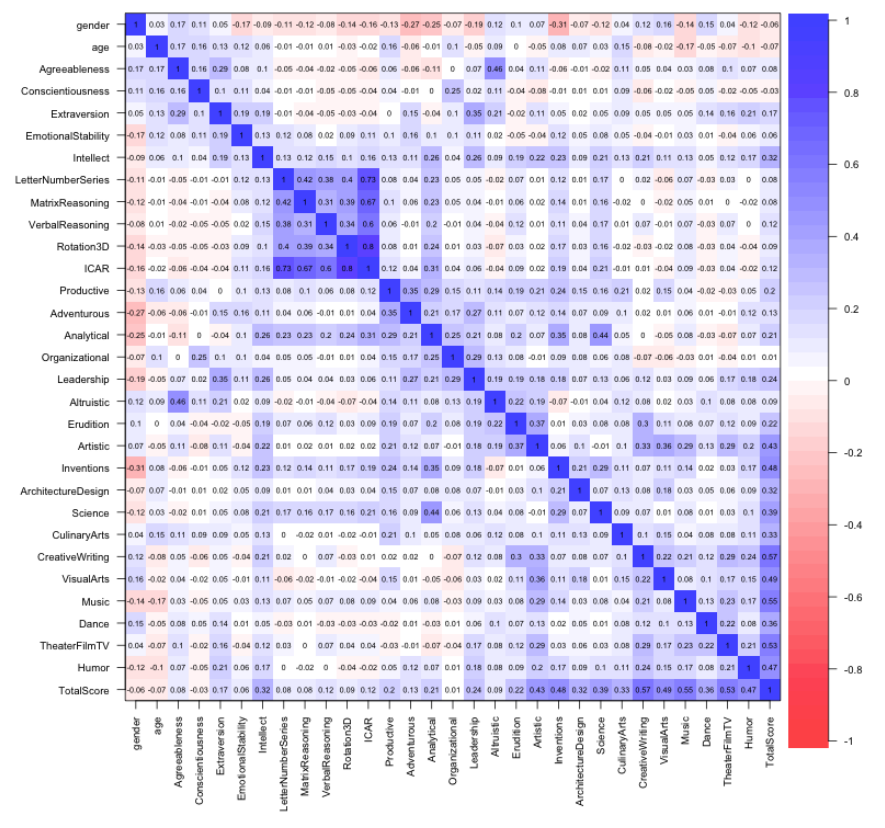

As far as I know, there's no correlation between g and niceness/forgiveness/etc.. Just bringing up the most immediate study on the topic I can think of (not necessarily the best), this study finds a correlation of approximately zero (-0.06) between being nice (Agreeableness) and IQ (ICAR):

I could make up various possible explanations for why you'd see this effect, but I don't know enough about your life to know which ones are the most accurate.

Thanks for the information. I think the problem here is that my notion of "being nice" doesn't correlate that well with "agreeableness", since I find it quite difficult to believe that "niceness" in my sense actually has no correlation with general intelligence.

Still, the correlation I would expect between my own notion and g is ~ 0.2 or so, which is still quite a bit less than the correlation I think I observe.

He claims that IQ tests only tap into abilities that are suitable for academic problems, and that they in particular are much less effective when dealing with problems that have long tails of big losses and/or big gains.

Isn't... he himself a counterexample, being smart and getting rich off dealing with long-tail problems?

TL;DR: Collider between g and valued traits in anecdotal experiences.

IQ tests measure the g factor - that is, mental traits and skills that are useful across a wide variety of cognitive tasks. g appears to be important for a number of outcomes, particularly socioeconomic outcomes like education, job performance and income. g is often equated with intelligence.

I believe that smart people's personal experiences are biased against the g factor. That is, I think that people who are high in g will tend to see things in their everyday life that suggest to them that there is a tradeoff between being high g and having other valuable traits.

An example

A while ago, Nassim Taleb published the article IQ is largely a pseudoscientific swindle. In it, he makes a number of bad and misleading arguments against IQ tests, most of which I'm not going to address. But one argument stood out to me: He claims that IQ tests only tap into abilities that are suitable for academic problems, and that they in particular are much less effective when dealing with problems that have long tails of big losses and/or big gains.

Essentially, Taleb insists that g is useless "in the real world", especially for high-risk/high-reward situations. It is unsurprising that he would care a lot about this, because long tails are the main thing Taleb is known for.[1]

In a way, it might seem intuitive that there's something to Taleb's claims about g - there is, after all, no free lunch in intelligence, so it seems like any skill would require some sort of tradeoff, and ill-defined risks seem like a logical tradeoff for performance at well-defined tasks. However, the fundamental problem with this argument is that it's wrong. Nassim Taleb does not provide any evidence, but generally studies on IQ don't find g to worsen performance, and tend to find it to improve performance, including on complex, long tail-heavy tasks like stock trading.[2]

But what I've realized is that there might be a charitable interpretation for Taleb's argument. Specifically, we have good reason to believe that his claim is a reflection of his personal experience and observations in life. Let's take a look:

Who is Nassim Taleb?

Nassim Taleb used stock trading methods that were particularly suited to long tail scenarios to become financially independent. With his bounty of free time, he's written several books about randomness and long tails. Clearly, he cares quite a lot about long-tailed distributions, for good reasons. But perhaps he cares too much - he is characteristically easy to anger, and he spends a lot of time complaining about people who don't accept his points about long tails.

Based on this, I would assume that he quite heavily selects for people who care about and understand long tails when it comes to the people he hangs around. This probably happens both actively, as he e.g. loudly blocks anyone who disagrees with him on Twitter, and probably also passively, because people who agree with him are going to be a lot more interested in associating with him than people who he blows up at.

But in addition to selecting for people who care about long tails, I would assume he also selects for smart people. This is a general finding; people tend to associate with people who are of similar intelligence to themselves. It also seems theoretically right; he's primarily known for "smart topics" like long tail probabilities, and his work tends to be about jobs that are mostly held by people in the upper end of the IQ range.

Simulating collider bias

Selecting for both intelligence and black swan awareness exposes Taleb to collider bias: If you select for multiple variables, you tend to induce a negative correlation between the variables in your observations. This is because the individuals who are low in both tend to be excluded from your observations. Let's plot it hypothetically, on a simulation:
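The same hypothetical setup can also be summarized numerically. This sketch assumes the parameters from the simulation code shared in the comments (g and black swan awareness as unit-variance normals with a 0.3 correlation, selection g + black_swan_awareness > 3) and reports correlations instead of plotting.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 100_000
g = rng.standard_normal(N)
# Unit variance overall, correlated 0.3 with g in the full population.
black_swan_awareness = rng.normal(0, np.sqrt(1 - 0.3**2), N) + 0.3 * g

# Hypothetical selection: people Taleb hangs out with.
selected = g + black_swan_awareness > 3

r_population = np.corrcoef(g, black_swan_awareness)[0, 1]
r_selected = np.corrcoef(g[selected], black_swan_awareness[selected])[0, 1]
print(f"everyone: r = {r_population:+.2f}")
print(f"selected ({selected.sum()} people): r = {r_selected:+.2f}")
```

The positive correlation in the full population should come out clearly negative among the selected people.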

But if Taleb's personal experiences involve a negative correlation between IQ-like intelligence and black swan awareness/long tail handling ability, then it's no wonder he sees IQ as testing for skills that deal poorly with the long tails of the real world; that's literally just what he sees when he looks at people.

Generalizing

Lots of people have something they value. Maybe it's emotional sensitivity, maybe it's progressive politics, maybe it's something else. Smart people presumably select for both the thing they value and for intelligence in the people they hang out with - so as a result, due to the collider, you should expect them to see a negative, or at least downwards-biased, correlation between the traits.

But it seems like this would create a huge bias against general intelligence! Whenever a smart person has to think about whether general intelligence is good, they will notice that it tends to be accompanied with some bad stuff. So they will see it as a tradeoff, often even for things that are positively correlated.

Thanks to Justis Mills for proofreading and feedback.

[1] See e.g. his books Fooled by Randomness or The Black Swan.

[2] The linked study looks at stock trading behavior during the dot-com bubble. It finds that smarter people earned more money, were less likely to lose big, entered the market earlier, and stopped entering the market once it reached its peak. It also finds some other things; go read the study if you want to learn the details.