The natural category grouping all these people together isn't "high achiever" or "creative revolutionary," but "legendary figure," as you indicate in your last paragraph. Bill James was a key innovator in the science of baseball, and yet Babe Ruth is included in this riff and Bill James is not. Babe Ruth is central to the legend of baseball, and James is not.

To become a legendary figure, it's important to have an achievement that serves the national interest or makes for colorful storytelling, and it doesn't hurt to have a distinctive personality. Einstein's innovations helped us fight wars, Darwin was an ocean explorer, Armstrong kicked Russia's ass in the space race, Beethoven was a cranky deaf incel, Galileo was forced to recant by the church, Socrates was forced to drink hemlock.

The whole "low-hanging fruit/tapped-out fields" theory of scientific stagnation seems inadequately operationalized. It conjures up the image of an apple tree, with fruit pickers standing on the ground reaching ever higher for the remaining fruit.

An alternative image is that the scientific fruit-pickers are building a scaffold, so that more fruit is always within arm's reach. Unless we're arguing that the scaffold is nearing the top of the tree of knowledge, there's always low-hanging fruit to be picked. Somebody will pick it, and whether or not they become a legendary figure depends on factors other than just how juicy their apple turned out to be. The reaching-for-fruit action is always equally effortful, but the act of scaffold-building gets more efficient every year. In literal terms, previous scientific discoveries and capital investments permit achievements that would have been inaccessible to earlier researchers, and we're getting better at it all the time.

Improved infrastructure doesn't always come at the cost of accessibility, either: consider the trend in the accessibility of supercomputers or DNA sequencing over time. Think about how incredibly hard it was to learn mathematics when mathematicians were in the habit of jealously guarding their procedures for solving cubic equations, or when your best resource for learning algebra was Cardano's Ars Magna, of which Jan Gullberg has written: "No single publication has promoted interest in algebra like Cardano's Ars magna, which, however, provides very boring reading to a present-day peruser by consistently devoting pages of verbose rhetoric to a solution... With the untiring industry of an organ grinder, Cardano monotonously reiterates the same solution for a dozen or more near-identical problems where just one would do."

Consider also that either this heuristic was false at one point (i.e., it used to be the right decision to go into science to achieve "greatness," but isn't anymore), or else the heuristic is itself wrong (because so many obvious candidates for "great innovator" were all in academic science and working in established fields with fairly clear career tracks). If it used to be true that going into academic science was the right move to achieve scientific greatness, but isn't anymore, then when and why did that stop being true? How do we know?

A potential objection is to argue in favor of the existence of scientific stagnation. Isn't there empirical evidence that the apples are, indeed, getting harder to pluck?

Perhaps there is. Let's accept that premise. The "low-hanging fruit" perspective is that the right way to cope is by finding your own tree, one that nobody else has picked. The "build a scaffold" perspective suggests that all the fruit, all the time, is a priori equally hard to reach, because you're always reaching up from a scaffold somewhere. In fact, if any trees do exist without a scaffold, there's a risk that either nobody built a scaffold there because it's an unproductive tree, or that the lowest-hanging fruit there is still so high up that you'll have to do all the work of building a scaffold there before any harvest can begin. No guarantee that all that investment will pay off with a rich harvest of apples, either.

I'm really skeptical of the stagnation argument as well. Take machine learning. This is a critically important technology, one with sweeping implications in every field (not just in science), and one that may be the foundation of GAI - certainly one of the most important technologies, if not the most important, that has ever been or perhaps ever will be invented. Complaining that "it’s not clear they’re more significant breakthroughs than the reordering of reality uncovered in the 1920s," as Collison and Nielsen do, seems both subjective and small-minded. I'd say the same thing about the computing revolution in general.

Can anybody point to one individual who deserves the bulk of the credit for ML, or for the home computing revolution? It's a combination of the development of the underlying mathematical theory, its instantiation in code, the development of computing infrastructure, algorithmic refinements, and applications to particular problems. The impact is massive, and the credit is diffuse. Benjamin Black and Chris Pinkham, the original visionaries of Amazon Web Services, probably deserve some of the credit for building core infrastructure the world relies on to develop and deploy ML. But nobody knows who they are, and they certainly won't be getting a Nobel.

Tracking individual citations, reputation, fame, and other measures of credit as proxies for counterfactual marginal impact (CMI) seems to me like a misbegotten enterprise, or at best the crudest possible measure. It's clear to me that CMI is a meaningful concept, and I understand that we now have some theory to explore counterfactuals with sound statistical methods. But let's just say that if this heuristic is correct, then "measuring scientific progress" is a tree with some very low-hanging fruit, and you should definitely consider making a career in it.

> An alternative image is that the scientific fruit-pickers are building a scaffold, so that more fruit is always within arm's reach. Unless we're arguing that the scaffold is nearing the top of the tree of knowledge, there's always low-hanging fruit to be picked. Somebody will pick it, and whether or not they become a legendary figure depends on factors other than just how juicy their apple turned out to be. The reaching-for-fruit action is always equally effortful, but the act of scaffold-building gets more efficient every year. In literal terms, previous scientific discoveries and capital investments permit achievements that would have been inaccessible to earlier researchers, and we're getting better at it all the time.

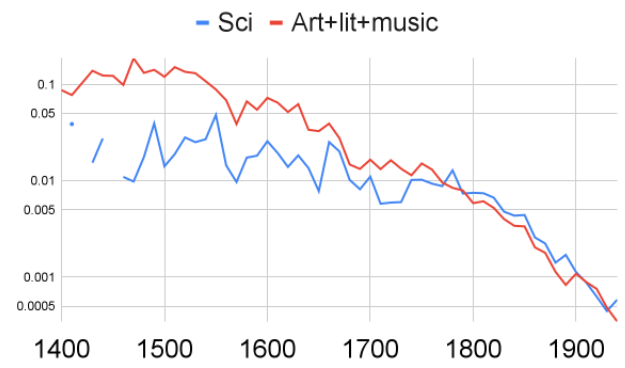

I don't agree with this, for reasons discussed here. I think that empirically, it seems to get harder over time (at least per capita) to produce acclaimed works. I agree that there are other factors in who ends up "legendary," but I think that's one of them.

> Consider also that either this heuristic was false at one point (i.e., it used to be the right decision to go into science to achieve "greatness," but isn't anymore), or else the heuristic is itself wrong (because so many obvious candidates for "great innovator" were all in academic science and working in established fields with fairly clear career tracks). If it used to be true that going into academic science was the right move to achieve scientific greatness, but isn't anymore, then when and why did that stop being true? How do we know?

The heuristic is "to match the greats, don't follow in their footsteps." I think the most acclaimed scientists disproportionately followed this general heuristic - they disproportionately asked important/interesting questions that hadn't gotten much attention, rather than working on the kinds of things that had well-established and -understood traditions and could easily impress their acquaintances. For much of the history of science, this was consistent with doing traditional academic science (which wasn't yet particularly traditional); today, I think it is much less so.

As science progresses, it unlocks engineering possibilities combinatorially. This induces demand for and creates a supply of a healthier/more educated population with a larger number of researchers, many of whom are employed in building and managing our infrastructure rather than finding seminal ideas.

So what we're witnessing isn't scientific innovations becoming harder to find. Instead, scientific innovations produce so many engineering opportunities that they induce demand for an enormous number of engineers. The result is that an ever-decreasing fraction of the population is working in science, and science has to compete with an ever-larger and more lucrative engineering industry to attract the best and brightest.

In support of this, notice that the decline you find in scientific progress roughly begins in the early days of the industrial revolution (mid-late 1700s).

On top of that, I think we have to consider the tech-tree narrative of science history writing. Even if you'd found that science today was apparently becoming more efficient relative to effective population, do you imagine that scientific history writers would say, "we're going to have to cut out some of those Enlightenment figures to make room for all this amazing stuff going on in biotech!"?

It's not that historians are biased in favor of the past, and give short shrift to the greatness of modern science and technology. It's that their approach to historiography is one of tracing the development of ideas over time.

Page limits are page limits, and they're not going to compress the past indefinitely in order to give adequate room for the present. They're writing histories, after all. The end result, though, is that Murray's sources will simply run out of room to cover modern science in the depth it deserves. This isn't about "bad taste," but about page limits and historiographical traditions.

These two forces explain both the numerator and the denominator in your charts of scientific efficiency.

This isn't a story of bias, decline, or "peak science." It's a story of how ever-accelerating investment in engineering, along with histories written to educate about the history of ideas rather than to make a time-neutral quantification of eminence, combine to give a superficial impression of stagnation.

If anything, under this thesis, scientific progress and the academy are suffering precisely because of the perception you're articulating here. The excitement and lucre associated with industry steer investment and talent away from basic academic science. The faster this happens, the more stagnant the university looks as an ever-larger fraction of technical progress (including both engineering and science) happens outside the academy. And the world eats its seedcorn.

I would push back on the argument that there aren’t already “Beethovens” for video games. Shigeru Miyamoto, for example, is considered a genius and role model by many, and much of his body of work has been very closely studied. I wouldn’t be surprised if in the future people like him, Kojima, John Carmack, or many others are looked up to and studied as greats.

Logarithmic scales of pleasure and pain are a crucial consideration, if true. At the very least, the mammalian CNS plausibly represents things across ~5 orders of magnitude.

With respect to the rest: Korzybski, Engelbart, and, I would argue, Quine dreamed of an uncompleted research program of meta-methodology, one that would allow a better understanding of how new methods are invented. More than half of the most cited scientific papers of all time are methods papers, yet the field is understudied.

People who dream of being like the great innovators in history often try working in the same fields - physics for people who dream of being like Einstein, biology for people who dream of being like Darwin, etc.

But this seems backwards to me. To be as revolutionary as these folks were, it wasn’t enough to be smart and creative. As I’ve argued previously, it helped an awful lot to be in a field that wasn’t too crowded or well-established. So if you’re in a prestigious field with a well-known career track and tons of competition, you’re lacking one of the essential ingredients right off the bat.

Here are a few riffs on that theme.

The next Einstein probably won’t study physics, and maybe won’t study any academic science. Einstein's theory of relativity was prompted by a puzzle raised 18 years earlier. By contrast, a lot of today’s physics is trying to solve puzzles that are many decades old (e.g.) and have been subjected to a massive, well-funded attack from legions of scientists armed with billions of dollars’ worth of experimental equipment. I don’t think any patent clerk would have a prayer of competing with professional physicists today - I’m thinking today’s problems are just harder. And the new theory that eventually resolves today’s challenges probably won’t be as cool or important as what Einstein came up with, either.

Maybe today’s Einstein is revolutionizing our ability to understand the world we’re in, but in some new way that doesn’t belong to a well-established field. Maybe they’re studying a weird, low-prestige question about the nature of our reality, like anthropic reasoning. Or maybe they’re Philip Tetlock, more-or-less inventing a field that turbocharges our ability to predict the future.

The next Babe Ruth probably won’t play baseball. Mike Trout is probably better than Babe Ruth in every way,[2] and you probably haven't heard of him.

Maybe today’s Babe Ruth is someone who plays an initially less popular sport, and - like Babe Ruth - plays it like it’s never been played before, transforms it, and transcends it by personally appealing to more people than the entire sport does minus them. Like Tiger Woods, or what Ronda Rousey at one point looked to be on track to become.

The next Beethoven or Shakespeare probably won’t write orchestral music or plays. Firstly because those formats may well be significantly “tapped out,” and secondly because (unlike in their day) they aren’t the way to reach the biggest audience.

We’re probably in the middle stages of a “TV golden age” where new business models have made it possible to create more cohesive, intellectual shows. So maybe the next Beethoven is a TV showrunner. It doesn’t seem like anyone has really turned video games into a respected art form yet - maybe the next Beethoven will come along shortly after that happens.

Or maybe the next Beethoven or Shakespeare doesn’t do anything today that looks like “art” at all. Maybe they do something else that reaches and inspires huge numbers of people. Maybe they’re about to change web design forever, or revolutionize advertising. Maybe it’s #1 TikTok user Charli d’Amelio, and maybe there will be whole academic fields devoted to studying the nuances of her work someday, marveling at the fact that no one racks up that number of followers anymore.

The next Neil Armstrong probably won’t be an astronaut. It was a big deal to set foot outside of Earth for the first time ever. You can only do that once. Maybe we’ll feel the same sense of excitement and heroism about the first person to step on Mars, but I doubt it.

I don’t really have any idea what kind of person could fill that role in the near future. Maybe no one. I definitely don’t think that our lack of a return trip to the Moon is any kind of a societal “failure.”

The next Nick Bostrom probably won’t be a “crucial considerations” hunter. Forgive me for the “inside baseball” digression (and feel free to skip to the next one), but effective altruism is an area I’m especially familiar with.

Nick Bostrom is known for revolutionizing effective altruism with his arguments about the value of reducing existential risks, the risk of misaligned AI, and a number of other topics. These are sometimes referred to as crucial considerations: insights that can change one’s goals entirely. But nearly all of these insights came more than 10 years ago, when effective altruism didn’t have a name and the number of people thinking about related topics was extremely small. Since then there have been no comparable “crucial considerations” identified by anyone, including Bostrom himself.

We shouldn’t assume that we’ve found the most important cause. But if (as I believe) this century is likely to see the development of AI that determines the course of civilization for billions of years to come ... maybe we shouldn’t rule it out either. Maybe the next Bostrom is just whoever does the most to improve our collective picture of how to do the most good today. Rather than revolutionizing what our goals even are, maybe this is just going to be someone who makes a lot of progress on the AI alignment problem.

And what about the next “great figure who can’t be compared to anyone who came before?” This is what I’m most excited about! Whoever they are, I’d guess that they’re asking questions that aren’t already on everyone’s lips, solving problems that don’t have century-old institutions devoted to them, and generally aren’t following any established career track.

I doubt they are an “artist” or a “scientist” at all. If you can recognize someone as an artist or scientist, they’re probably in some tradition with a long history, and a lot of existing interest, and plenty of mentorship opportunities and well-defined goals.[3]

They’re probably doing something they can explain to their extended family without much awkwardness or confusion! If there’s one bet I’d make about where the most legendary breakthroughs will come from, it’s that they won’t come from fields like that.

Footnotes

[1] (Footnote deleted)

[2] He's even almost as good just looking at raw statistics (Mike Trout has a career average WAR of 9.6 per 162 games; Babe Ruth's is 10.5), which means he dominates his far superior peers almost as much as Babe Ruth dominated his.

[3] I’m not saying this is how art and science have always been - just that that’s how they are today.
