I'm partial to Steve Byrnes's perspective, which is that "AGI is about not knowing how to do something, and then being able to figure it out" as opposed to "AGI is about knowing how to do lots of things." See also this.

I totally agree that AGI is about that. That's why I list novel problem-solving ability as a qualifying criterion. The major issue with Steve Byrnes's argument is that he demands this ability at a human-genius level for AGI. I elaborated on this issue in this comment on one of his posts.

On the contrary, if your AGI definition includes most humans, it sucks.

All the interesting stuff humanity does consists of things that most humans can't do. What you call baby AGI is, by itself, not very relevant to any of the dangers of AGI discussed in e.g. superintelligence. You could quibble with the literal meaning of "general" or whatever, but the historical associations of the term seem much more important to me. If people have spent years reading about how AGI will kill everyone and then you use the term AGI, they will obviously think you mean the thing with the properties they've read about.

The bottom line is that current AI is not the thing we were calling AGI for the 15 years before LLMs, so we probably shouldn't call it AGI.

> AGI discussed in e.g. superintelligence

The fact that this is how you're clarifying it illustrates my point. While I've heard people talk about "AGI" (meaning weak ASI) having significant impacts, it's seldom discussed as leading to x-risks. To give perhaps the most famous recent example, AI 2027 ascribes the drastic changes and extinction specifically to ASI. Do you have examples in mind of credible people specifically talking about x-risks from AGI? Bostrom's book refers to superintelligence, not general intelligence, as the superhuman AI.

Also, I don't find it very compelling to argue that we should keep using muddled terms to avoid changing their meaning, at least when it's easy to clarify meanings where necessary.

I think something you aren't mentioning is that at least part of the reason the definition of AGI has become so decoupled from its original meaning is that current AI systems are unexpectedly spiky: superhuman at some tasks and surprisingly weak at others.

We've known for a while that it's possible to create narrow ASI or AGI; chess engines did this in 1997. We thought that a system able to do the broad suite of tasks GPT can currently do would necessarily be able to do everything else humans do on a computer. This didn't really happen. GPT is already superhuman in some ways, maybe for around 50% of the economically valuable tasks done via computer, but it still makes mistakes at other, very basic things.

It's weird that GPT can name and analyze differential equations better than most people with a math degree, yet be unable to cite a reference correctly. We didn't expect that.

Another difficulty in defining AGI is that we actually expect better than "median human level" performance, though not necessarily in an unfair way. Most people around the globe don't know the rules of chess, but we would expect AGI to be able to play at roughly 1000 Elo. So let's define AGI as being able to perform every computer task at the level of the median human who has been given one month of training. We haven't hit that milestone yet, and we may well reach superhuman performance on several other capabilities before we do.

Yeah, spikiness has been an issue, but the floor is starting to get mopped up. The "unable to correctly cite a reference" thing isn't quite fair anymore, though even current SOTA systems aren't reliable in that regard.

The point about needing background is good, though the AI might need specialized training or instructions for specialized tasks, just as a human would. There's no way it could know a particular organization's classified operating procedures out of the box, for example. Defining (strong) AGI as being able to perform every computer task at the level of the median human who has been given appropriate training (once it has had the same training a human would get, if necessary) seems sensible. (I guess you could argue that this is technically covered by the simpler definition.)

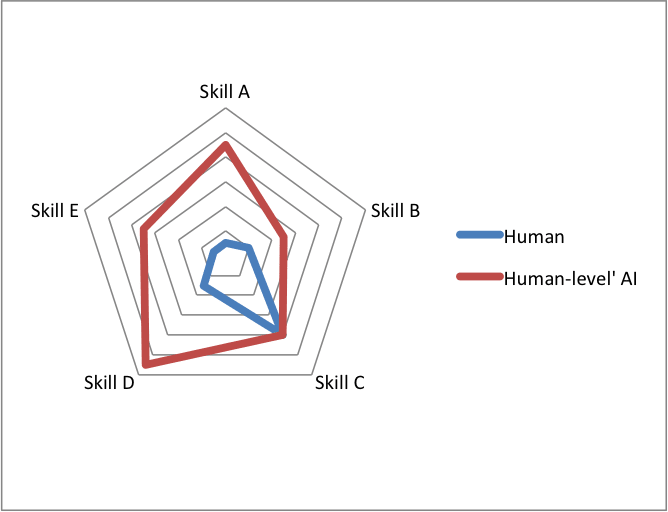

I'm reminded of this chart, which I first came across on this AI Impacts page. Caption:

Figure 2: Illustration of the phenomenon in which the first entirely ‘human-level’ system is substantially superhuman on most axes. (Image from Superintelligence Reading Group)

I'll also echo sunwillrise's comment in being partial to Steven Byrnes's take on AGI.

There are so many examples of insanely demanding AGI definitions[1] or criteria, typically involving, among other things, the ability to do something that only human geniuses can do. Usually, these criteria stem from a requirement that AGI be able to do anything any human can do. In extreme cases, people even require abilities that no humans have. I guess it's not AGMI (Artificial Gary Marcus Intelligence) unless it can multiply numbers of arbitrary size, solve the hard problem of consciousness, and more, all simultaneously.

Defining AGI as a system that can do anything a human can do on a computer sounds, naively, like a requirement of ordinary human-level ability. It isn't. The issue is that there's a huge range of variation within human ability; many things that Einstein could do are totally beyond the ability of the vast majority of people. Requiring AGI to have the abilities of any human inadvertently requires it to have the abilities of a genius, creating a definition that has almost nothing to do with typical human ability. This leads to an accidental sleight of hand: AGI gets framed as a human-level milestone, then claimed to be massively distant because current models are nowhere near a threshold that almost no humans meet either.

This is insane: AGI has ballooned into a standard that has almost nothing to do with being a general intelligence, i.e. something capable of approaching a wide variety of novel problems. Almost all people, the quintessential examples of general intelligence, woefully fail to qualify (maybe all people, since no one has world-class abilities in every respect).

Any definition of general intelligence should include at least the average human, and arguably most humans. There's even a legitimate question of whether it should require the ability to do all cognitive tasks average humans can do, or just average human-level reasoning ability. To clarify, I use strong AGI to describe a system that can do everything the average person can do on a computer, in contrast to weak AGI, which only has human-level reasoning and might lack things like long-term memory, perception, "online learning" ability, etc. I'd use baby AGI to describe a system with novel problem-solving ability well into the human range, even if below average; this clearly exists today.

Use-words-sensibly justifications aside, these more modest definitions help show the deeply worrying rate of recent progress. Instead of the first major milestone being a long way off, letting us forget how close we are to a capability that is both very impactful (though likely not earth-shattering) and a somber omen, we are confronted with tangible developments: baby AGI is at most two years old[2], and weak AGI seems to be almost here.

There are similar advantages for public awareness. As some of the examples linked above show, absurdly demanding AGI definitions create equally absurd levels of confusion, even among people with a modest knowledge of the subject. They stand to do even more damage to the mostly uninformed public.

In response to all this, you might be thinking that a system capable of doing everything any (i.e. the smartest) humans can do is an even more important milestone and deserves a clear label. We already have a term for this: ASI. If you prefer to differentiate between a system on par with the best humans and one far above them, use weak ASI and strong ASI respectively. My strong suspicion is that if either of these ever exists (within a reasonable compute budget) and is misaligned, we're going to get wrecked, and it likely doesn't matter much which one. The two form a natural category.

If we keep saying "it's not AGI" until it flattens us, things aren't likely to go well. This one's not that hard.

[1] Most of these links were found fairly quickly with the help of o3. The article was otherwise entirely human-written.

[2] I'm unsure whether the first baby AGI was GPT-4 or an early LRM (large reasoning model), though I lean slightly towards the latter.