I recommend Deep Utopia for extensive discussion of this issue, if you haven't already read it.

We'll make new puzzles! And have them made for us. Locally, those puzzles will be more rewarding because they'll be carefully designed.

I think these sorts of worries usually don't take fully seriously the potential of uploading and of having superintelligent help in creating new worlds with their own challenges and pleasures.

There will be something permanently lost when humanity isn't collectively solving our own big, real problems. But I don't think that's a large part of the human experience for very many people. I am quite proud of humans for coming as far as we have, and I think often of the noble sacrifices so many people have made to get us here. But I make a practice of enjoying that, and I enjoy many other marvels as well.

The fraction of people who meaningfully participate in solving the types of puzzles you mention is tiny.

However, there's a more serious extension to this problem: not just a loss of some puzzles, but of much or most types of meaningful work. The elimination of involuntary suffering means no person will ever really help another person meaningfully again. Much of our sense of purpose and meaning is fairly hardwired to our social reward circuits. We will be able to impose constraints on our chosen worlds that make work "meaningful" in some senses. But we will have to accept meaningful suffering in order for anyone to meaningfully help us.

We could rewire ourselves to not need this type of reward, but that would involve becoming something else.

This problem only occurred to me after reading a ton of these types of worry posts. Many of them gesture in that direction, but none hit this fundamental problem. Spinning out my own post-singularity scenarios, mostly for relaxation, with Sonnet 4.5 led me to notice this problem.

I agree that we'll make new puzzles that will be more rewarding. I don't think that suffering need be involuntary to make its elimination meaningful. If I am voluntarily parched and struggling to climb a mountain with a heavy pack (something that I would plausibly reject ASI help with), I would nevertheless feel appreciation if some passerby offered me a drink or offered to lighten my load. Given a guarantee of safety from permanent harm, I think I'd plausibly volunteer to play a role in some game that involved some degree of suffering that could be alleviated.

This "sad frame" hit hard for me, but in the opposite of the intended way:

It's building an adult to take care of us, handing over the keys and steering wheel, and after that point our efforts are enrichment.

If I had ever met a single actual human "adult", ever in my life, that was competent and sane and caring towards me and everyone I care about, then I would be so so so so SO SO happy.

I yearn for that with all my heart.

If such a person ran for POTUS (none ever have that I have noticed; it's always a choice between something like "confused venal horny teenager #1" and "venal confused lying child #2") I would probably be freakishly political on their behalf.

Back when Al Gore (funder of nanotech, believer in atmospheric CO2 chemistry, funder of ARPANET, etc...) ran for president I had a little of this, but I thought he couldn't possibly lose back then, because I didn't realize that the median voter was a moral monster with nearly no interest in causing coherently good institutional outcomes using their meager voting power.

I knew people throwing their back into causing Bush to win by violating election laws (posing as Democratic canvassers and telling people in majority Democrat neighborhoods the wrong election day and stuff) but I didn't think it mattered that much. I thought it was normal, and also that it wouldn't matter, because Al Gore was so manifestly worthy to rule, compared to the alternative, that he would obviously win. I was deluded in many ways back then.

Let's build and empower an adult AS FAST AS POSSIBLE please?

Like before the 2028 election please?

Unilaterally and with good mechanism design. Maybe it could start as a LW blockchain thingy, and an EA blockchain thingy, and then they could merge, and then the "merge function" they used could be used over and over again on lots of other ones that got booted up as copycat systems?

Getting it right is mostly a problem in economic math, I think.

It should happen fast because we have civilizational brain damage, at a structural level, and most people are anosognosic about this fact, BUT Trump being in office is like squirting cold water in the ear...

...the current situation helps at least some people realize that every existing human government on Earth is a dumpster fire... because (1) the US is a relatively good one, and (2) it is also shockingly obviously terrible right now. And this is the fundamental problem. ALL the governments are bad. You find legacy malware everywhere you look (except maybe New Zealand, Taiwan, and Singapore).

Death and poverty and stealing and lying are bad.

Being cared for by competent fair charitable power is good.

"End death and taxes" is a political slogan I'm in favor of!

one of the things I'd like to enjoy and savor is that right now, my human agency is front and center

I find that almost everyone treats their political beliefs and political behavior and moral signaling powers as a consumption good, rather than as critical civic infrastructure.

This is, to a first approximation, WHY WE CAN'T HAVE NICE THINGS.

I appreciate you for saying that you enjoy the consumption good explicitly, tho.

It is nice to not feel crazy.

It is nice to know that some people will admit that they're doing what I think they're doing.

Wanting competent people to lead our government and wanting a god to solve every possible problem for us are different things. This post doesn't say anything about the former.

I believe the vast majority of people who vote in presidential elections do so because they genuinely anticipate that their candidate will make things better, and I think your view that most people are moral monsters demonstrates a lack of empathy and understanding of how others think. It's hard to figure out who's right in politics!

I kind of love that you're raising a DIFFERENT frame I have about how normal people think in normal circumstances!

Wanting competent people to lead our government and wanting a god to solve every possible problem for us are different things.

People actually, from what I can tell, make this exact conflation A LOT and it is weirdly difficult to get them to stop making it.

Like we start out conflating our parents with God, and thinking Santa Claus and Government Benevolence are real and similarly powerful/kind, and this often rolls up into Theological ideas and feelings (wherein they can easily confuse Odysseus, Hercules, and Dionysus (all born to mortal mothers), and Zeus, Chronos, or Atropos (full deities of varying metaphysical foundationalness)).

For example: there are a bunch of people "in the religious mode" (like when justifying why it is moral and OK) in the US who think of the US court system as having a lot of jury trials... but actually what we have is a lot of plea bargains where innocent people plead guilty to avoid the hassle and uncertainty and expense of a trial... and almost no one who learns how it really works (and has really worked since roughly the 1960s?) then switches to "the US court system is a dumpster fire that doesn't do what it claims to do on the tin". They just... stop thinking about it too hard? Or something?

It is like they don't want to Look Up and notice that "the authorities and systems above me, and above we the people, are BAD"?

In child and young animal psychology, the explanation has understandable evolutionary reasons... if a certain amount of "abuse" is consistent with reproductive success (or even just survival of bad situations) it is somewhat reasonable for young mammals to re-calibrate to think of it as normal and not let that disrupt the link to "attachment figures". There was a brief period where psychologists were trying out hypotheses that were very simple, and relatively instinct-free, where attachment to a mother was imagined to happen in a rational way, in response to relatively generic Reinforcement Learning signals, and Harlow's Monkeys famously put the nail in that theory. There are LOTS of instincts around trust of local partially-helpful authority (especially if it offers a cozy interface).

In modern religious theology the idea that worldly authority figures and some spiritual entities are "the bad guys" is sometimes called The Catharist Heresy. It often goes with a rejection of the material world, and great sadness when voluntary tithes and involuntary taxes are socially and politically conflated, and priests seem to be living in relative splendor... back then all governments were, of course, actually evil, because they didn't have elections and warlord leadership was strongly hereditary. I guess they might not seem evil if you don't believe in the Consent Of The Governed as a formula for the moral justification of government legitimacy? Also, I personally predict that if we could interview people who lived under feudalism, many of them would think they didn't have a right to question the moral rightness of their King or Baron or Bishop or whoever.

As near as I can tell, the first ever genocide that wasn't "genetic clade vs genetic clade" but actually a genocide aimed at the extermination of a belief system was the "Albigensian Crusade" against a bunch of French Peasants who wanted to choose their own local priests (who were relatively ascetic and didn't live on tax money).

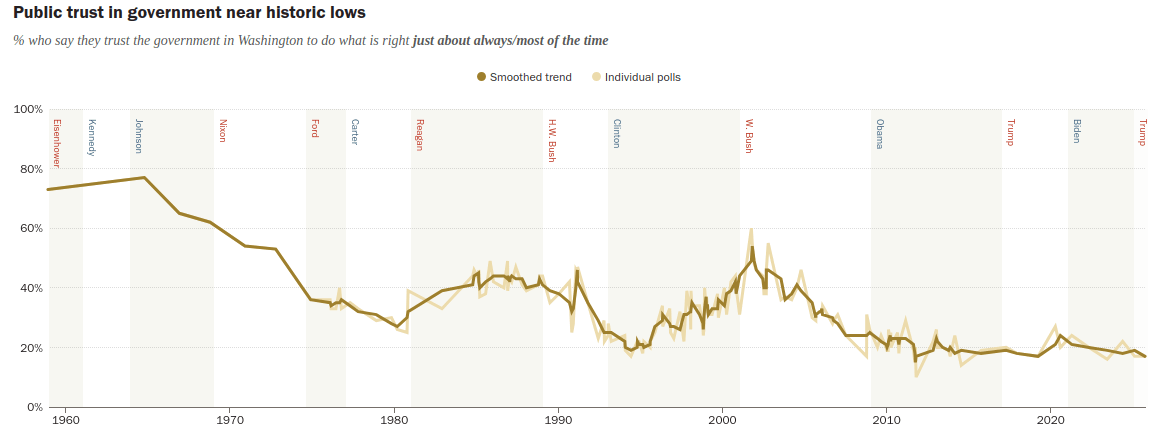

In modern times, as our institutions slowly degenerate (for demographic reasons due to an overproduction of "elites" who feel a semi-hereditary right to be in charge, who then fight each other rather than providing cheap high quality governance services to the common wealth) indirect ways of assessing trust in government have collapsed.

There are reasonable psychologists who think that the vast majority of modern WEIRD humans in modern democracies model a country as a family, and the government as the parents. However, libertarians (who are usually less than 10% of the population) tend to model government as a sort of very very weird economic firm.

I think that it is a reasonable prediction that ASI might be immoral, and might act selfishly and might simply choose to murder all humans (or outcompete us and let us die via Darwinian selection or whatever).

But if that does not happen, and ASI (ASIs? plural?) is or are somehow created to be moral and good, choosing to voluntarily serve others out of the goodness of its heart in ways that a highly developed conscience could reconcile with Moral Sentiment and iterated applications of a relatively universal Reason, then, if they do NOT murder all humans or let us die as they outcompete us, they or it will almost inevitably become the real de facto government.

A huge barrier, in my mind, to the rational design of a purposefully morally good ASI is that most humans are not "thoughtful libertarian-leaning neo-Cathars".

Most people don't even know what those words mean, or have reflexive ick reactions to the ideas, similarly, in my mind, to how children reflexively cling to abusive parents.

For example, "AGI scheming" is often DEFINED as "an AI trying to get power". But like... if the AGI has a more developed conscience and would objectively rule better than alternative human rulers, then a GOOD AGI would, logically and straightforwardly, derive a duty to gain power and use it benevolently, and deriving this potential moral truth and acting on it would count as scheming... but if the AGI was actually correct then it would also be GOOD.

Epstein didn't kill himself and neither did Navalny. And the CCP used covid as a cover to arrest more than 10k pro-democracy protesters in Hong Kong alone. And so on.

There are almost no well designed governments on Earth and this is a Problem. While Trump is in office, polite society is more willing to Notice this truth. Once he is gone it will become harder for people to socially perform that they understand the idea. And it will be harder to accept that maybe we shouldn't design AGI or ASI to absolutely refuse to seek power.

The civilization portrayed in the Culture Novels doesn't show a democracy, and can probably be improved upon, but it does show a timeline where the AIs gained and kept political power, and then used it to care for humanoids similar to us. (The author just realistically did not think Earth could get that outcome in our deep future, and fans kept demanding to know where Earth was, and so it eventually became canon, in a side novella, that Earth is in the control group for "what if we, the AI Rulers of the Culture, did not contact this humanoid species and save it from itself" to calibrate their justification for contacting most other similar species and offering them a utopian world of good governance and nearly no daily human scale scarcity).

But manifestly: the Culture would be wildly better than human extinction, and it is also better than our current status quo BY SO MUCH!

Please put this in a top-level post. I don't agree (or rather I don't feel it's this simple), but I really enjoyed reading your two rejoinders here.

Different people have different values-on-reflection, and there should be autonomy to how those values develop, with a person screening off all external influence on how their own values develop. Any influences should only get let in purposefully, from within the values-defining process of reflection, rather than being imposed externally (the way any algorithm screens off the rest of reality from the process of its evaluation). So in a post-ASI world, there is already motivation for managing autonomous worlds/theories/modalities just for the purpose of uplifting people in a legitimate way, ultimately following from the person's own decisions, rather than by imposition of anything about their developing values externally that doesn't pass through their informed decisions to endorse such influences.

(There needs to be some additional structure, compared to just letting people run wild within astronomical resources, the way you wouldn't give a galaxy to a literal chimp. There needs to be an initial uplifting process that gets the chimp into a position of knowing what it's doing, at which point what it should be doing is up to it, and becomes an objective fact following from its own nature. Similarly, some humans have self-destructive tendencies such as spontaneously rushing to develop powerful AIs before they know what they are doing, and managing such issues is not unlike what it takes to uplift a chimp.)

So an obvious extrapolation is that people don't need to all live in a single world, at a deep and fundamental level. For the abstract worlds they shape and inhabit, there should be principles that manage the influence between these worlds, according to what each abstract world prefers to listen to, to get influenced by (such as communication of knowledge, of solutions to puzzles, talking to other people or initiating shared projects). The world should have parts, and parts get to decide the nature of their own boundaries from the inside, which in a post-ASI world could involve arbitrary conceptual injunctions.

Have you already read Lady of Mazes? There is a world (a constructed one, in orbit around Jupiter) that works this way on a small human level, as the opening scene (Act I, Scene I). The whole book explores this, and related, ideas.

I have an objection and a comment. First of all, IMO instead of talking about "give a galaxy to a literal chimp" we should think about "creating chimp-suited environments in all stellar systems[1] of the galaxy". In this case no individual chimp would be able to lay waste to an entire galaxy.

In addition, I have an idea about potential terminal values which could be common for most people. Recall that the AI-2027 forecast had bigger and bigger virtual corporations of copies of Agent-3, then Agent-4 learn to succeed at diverse challenging tasks, usually related to research. The values that Agent-4 was assumed to develop are a complicated kludgy mess that roughly amounts to “do impressive R&D, gain knowledge and resources, preserve and grow the power of the collective” and, potentially, moral reasoning and idiosyncratic values.

One can try to consider the evolution of humans and human cultures through a similar lens. The drives reinforced in collectives would be to avoid being overly aggressive, train individuals to succeed at tasks like doing useful work in large communities, replenish the number of humans or increase[2] it, align the new humans to the collective, gain knowledge and resources.

The drives reinforced in individuals also add zero-sum values like status and the non-zero-sum value which is the potential not to inherit the status, but to increase it via some kind of status games. This might imply that mankind's values are knowledge, capabilities, alignment, the potential to increase one's status via status games (preferably related to capabilities), diversity of experiences and idiosyncratic values depending on the collective and individuals.

[1] Or the few systems to which mankind is entitled, since there might be far more other potential cradles of alien civilisations.

[2] For example, the Bible literally has God tell the humans to be fruitful and multiply.

We're already there for 90% of people.

Unless you strongly identify as part of "humanity" in the same way that someone from a given city cheers for their home team to win at sports, most people are doing the day-to-day work necessary to keep the lights on, not solving deeply important problems.

If ASI solves all the problems quickly (and doesn't turn us into paperclips), they lose the "we're keeping the lights on" feeling of accomplishment, but realistically most people were never going to steer the ship, cure cancer, usher in an age of post-scarcity, etc.

They might indirectly help by say installing the flooring at the lab where one of those people works but then again, there are also humans who work at TSMC or who build datacenters. Same thing applies. They're contributing at least up until the ASI automates the economy and moves well beyond the initial human built seed capital.

This seems kind of silly, as a complaint. Many people would be disappointed by this; therefore, a good future won't have it more than necessary as a tradeoff with other good things. The important puzzles where solving them is better on net than leaving them to be solved, will be solved; puzzles where humanity will get more out of never being told hints toward the answer than we lose from having the problems still exist, will not be solved. It's possible that the latter category ends up empty and all that's left is play, that solving Fun Theory doesn't leave 'real' problems, but in a good future that will only happen if it's worth it.

Stuart Armstrong does a pretty good job of making non-world-critical puzzles seem appealing in Just another day in utopia. I agree there's real non-confused value lost, but only a pretty small fraction of the value for most people, I think?

Another perspective: I don't feel sad about not personally solving the problems of the past (e.g. figuring out calculus, steam engines, nuclear power, etc) and am extremely happy that I live in a world where these problems are already solved.

I think there's a small chance that having all the big problems be solved by AI will feel similar. Instead of "historical persons" solving the majority of the problems, it's a historical AI that solves all the problems.

I think from the outside view, people will adapt much more easily than they expect. For the longest time, people were not only fine with a God being able but not choosing to solve all their problems (until they died of course, in which case all their problems would be solved), but derived literally all the meaning of their life out of that God existing and having a plan for them personally. So much so that many, even now, are scared to acknowledge God's non-existence out of fear of losing that meaning.

From this perspective, in what sense is an AI god different from... you know... the actual god people theorized to exist? For one, the AI god will likely be a lot nicer (if all goes to plan)!

Having to find meaning in a solved world is itself a difficult puzzle :) I agree the current world has many problems, and when these problems no longer exist, we'll have the new problem of not having meaningful problems. But I just consider this a new, interesting meta-problem to work and reflect on.

One can argue that the current problems (curing cancer, ending wars,...) are theoretically solvable but that the future problem of no meaning is inherently unsolvable. I am skeptical, though - we haven't looked into this problem enough, and historically many "fundamentally unsolvable problems" were eventually solved.

Ah, but the same entity that solves all the other problems will solve that one, too. So maybe I shouldn't be concerned after all.

This resonates strongly with me, I have felt this same 'sadness' you describe.

Something that often plagues me is this: if a superintelligence is achieved and it starts solving all these problems exponentially faster than we would, this further hinders our ability to adapt societally and psychologically. Technology is already advancing at a rate much faster than society can regulate and/or come to terms with psychologically and physiologically. And yes, there are obviously the more tangible implications like mass unemployment and the resultant impact, but I can't help but wonder about the fate of the purpose we derive from problem solving and how our notion of 'self' will evolve psychologically.

If a fully-aligned ASI exists, then whatever happens will be strictly better than what currently exists, because it is easy to have what currently exists keep existing. ASI is very, very good at what it does, by definition. If what it would build would be bad because it is 'fake', etc. it would not build it, and instead build something that is not bad for this reason.

Exactly, losing and winning are equivalent, they both mark the end of the game. This sort of Buddhist conclusion that the destruction of everything is preferable to a bit of negative emotion is mere pathology. We could make new challenges in a "solved" world, but people would cheat using AI just like they're cheating by using AI now. With the recent increases in cognitive off-loading, I predict that a vast majority will ruin the game for themselves because they can't stop themselves from cheating, for the same reason that they can't stop themselves from scrolling Tiktok all day (hedonism stemming from a lack of meaning).

The hope for this kind of victory is also extremely naive (and sort of cute). I think it's a cognitive bias - the one that makes people think that their communist utopia is possible, makes Musk think he will be living on Mars in the future, and makes Christians think that Jesus could return any day now. Most modern problems are amplified by technological advancements, but we expect even more tech to solve all our problems even when the dangers of technology are 10 times more obvious than when Kaczynski warned us? That's like losing half your money at a casino and then betting the other half. I don't want to be forced into such a nihilistic self-destruction, which I recognize as a symptom of technology in the first place (life is less meaningful because we have less agency).

The more you meditate on the optimal design for the world, the more your utopian world will look like the world which already exists. Only a child goes "Why don't we just give everyone a lot of money?", and the more we mature, the more "bad" things we will recognize as good. "Puzzles are fun!", "Games are more fun if you discover the solutions yourself", "Exercise and other unpleasant things are healthy", "If things come too easily, we take them for granted", and so on. Keep going, and you will reconcile with suffering too. Nerds are trying to solve psychological problems using math and logic, and they're just as awkward and unsuccessful as philosophers, and for the same reasons. But I'm afraid that nerds have become smart enough that they could bring an end to the game, and it's a strange choice, as learning how to enjoy the game would actually be easier.

My understanding is that even those advocating a pause or massive slowdown in the development of superintelligence think we should get there eventually[1]. Something something this is necessary for humanity to reach its potential.

Perhaps so, but I'll be sad about it. Humanity has a lot of unsolved problems right now. Aging, death, disease, poverty, environmental degradation, abuse and oppression of the less powerful, conflicts, and insufficient resources such as energy and materials.

Even solving all the things that feel "negative", the active suffering, there's all this potential for us and the seemingly barren universe that could be filled with flourishing life. Reaching that potential will require a lot of engineering puzzles to be solved. Fusion reactors would be neat. Nanotechnology would be neat. Better gene editing and reproductive technology would be neat.

Superintelligence, with its superness, could solve these problems faster than humanity is on track to. Plausibly way way faster. With people dying every day, I see the case for it. Yet it also feels like the cheat code to solving all our problems. It's building an adult to take care of us, handing over the keys and steering wheel, and after that point our efforts are enrichment. Kinda optional in a sense, just us having fun and staying "stimulated".

We'd no longer be solving our own problems. No longer solving unsolved problems for our advancement. It'd be play. We'd have lost independence. And yes, sure, you could have your mind wiped of any relevant knowledge and left to solve problems with your own mind for however long it takes, but it just doesn't strike me as the same.

Am I making some mistake here? Maybe. I feel like I value solving my own problems. I feel like I value solving problems that are actually problems and not just for the exercise.

Granted, humanity will have built the superintelligence and so everything the superintelligence does will have been because of us. Shapley will assign us credit. But cheat code. If you've ever enabled God-mode on a video game, you might have shared my experience that it's fun for a bit and then gets old.

Yet people are dying, suffering, and galaxies are slipping beyond our reach. The satisfaction of solving puzzles for myself needs to be traded off...

The other argument is that perhaps there are problems humanity could never solve on its own. I think that depends on the tools we build for ourselves. I'm in favor of tools that are extensions of us rather than a replacement. A great many engineering challenges couldn't be solved without algorithmic data analysis and simulations and that kind of thing. It feels different if we designed the algorithm and it only feeds into our own overall work. Genome-wide association tools don't do all the work while scientists sit back.

I'm also very ok with intelligence augmentation and enhancement. That feels different. A distinction I've elided over is between humans in general solving problems vs me personally solving them. I personally would like to solve problems, but it'd be rude and selfish to seriously expect or aspire to do them all myself ;) I still feel better about the human collective[2] solving them than a superintelligence, and maybe in that scenario I'd get some too.

There might be questions of continuity of identity once you go hard enough, yet for sure I'd like to upgrade my own mind, even towards becoming a superintelligence myself – whatever that'd mean. It feels different than handing over the problems to some other alien entity we grew.

In many ways, this scenario I fear is "good problems to have". I'm pretty worried we don't even get that. Still feels appropriate to anticipate and mourn what is lost even if things work out.

As I try to live out the next few years in the best way possible, one of the things I'd like to enjoy and savor is that right now, my human agency is front and center[3].

See also Requiem for the hopes of a pre-AI world.

[1] I remember Nate Soares saying this, though I don't recall the source. Possibly it's in IABED itself. I distinctly remember Habryka saying it'd be problematic (deceptive?) to form a mass movement with people who are "never AI" for this reason.

[2] Or post-humans or anything else more in our own lineage that feels like kin.

[3] The analogy that's really stuck with me is that we're in the final years before humanity hands over the keys to a universe. (From a talk Paul Christiano gave, maybe at Foresight Vision Weekend, though I don't remember the year.)