What makes you confident this won't be benchmaxxed by low caution labs? I currently would take either side of 73% that it will be

Good question. First, many of these benchmarks are about things that are dangerous, but not particularly economically valuable (example: bioweapons). My model of labs is that they're mostly trying to do economically valuable things (as most companies are forced to). Although they may be reckless, I don't currently have the impression they're actively trying to take over power, by using things such as bioweapons. Second, some benchmarks are about things that are economically valuable, for example the METR one. But these mostly get benchmaxxed already. Third, we are not creating new benchmarks, but only tracking the scores for existing ones and coupling them to existential threat models. If labs wanted to benchmax any benchmark we track, they could do so with or without our work.

In addition: this risk needs to be weighed against the positive effect of improving the information position of researchers, policymakers, and the public. How much this matters depends on how well we do, but also on your theory of change and to what extent informing these groups is a part of that. In my case, I strongly believe in awareness as a key path towards solving the problem. I think this is true for researcher, policymaker, and public awareness of the right threat model. I'm particularly excited about this graph, showing that public xrisk awareness increased from about 8% to 24% already. If our combined efforts could increase this to tipping point, I'd be mostly optimistic that we can implement and enforce a global AI safety treaty (such as we proposed in TIME and SCMP) and this will reduce xrisk significantly.

For these reasons, my bet is that the result is positive. But it's not obvious, so again, good question.

In addition: I think creating benchmarks that are mainly about something economically relevant, or, god forbid, scientifically relevant, are way more likely to get benchmaxxed and lead straight to a takeover too, while not really having a strong case to reduce xrisk. These benchmarks are routinely created and funded by xrisky orgs.

two minor nitpicks for readability:

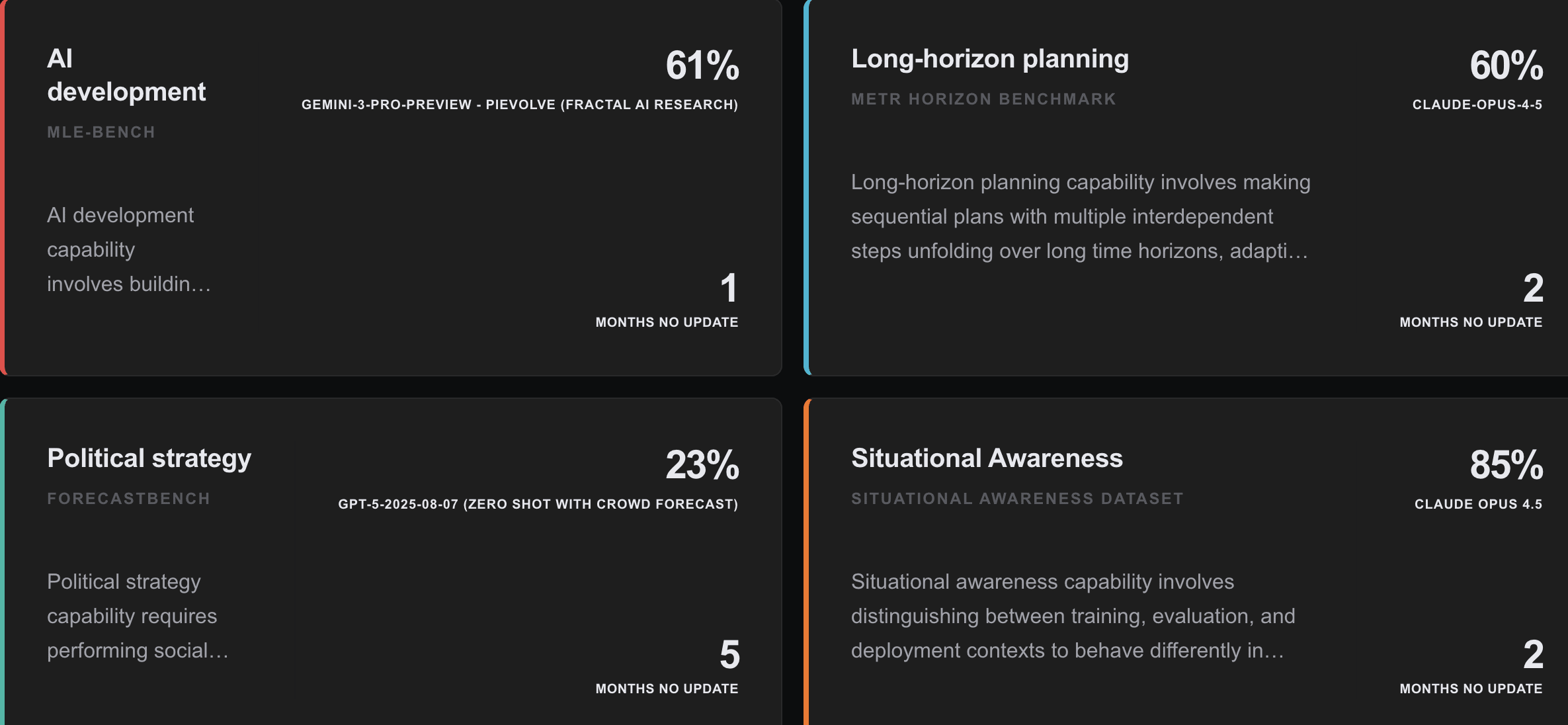

- The boxes describing each thing start with '[name of thing] involves...'. But the names are long. There's no reason you need complete sentences here; the title on the card reads as a header, and the text in the box could just be the meat of the description. Consider cutting these introductory clauses. (relatedly, consider divorcing the description in the textbox from the description once one clicks into the textbox, so that you convey more information on the main page, instead of risking conveying non-information in the first few words of something that's really designed to be a longer entry).

- Some of the text boxes seem to have less space allotted to them within the cards. E.g. AI development doesn't get past its introductory clause, while at the same display size long-horizon planning gets in three times the words. See image.

I think these are small points, but things like this do a lot for legibility and cleanliness (which in turn aids legitimacy).

Today, PauseAI and the Existential Risk Observatory release TakeOverBench.com: a benchmark, but for AI takeover.

There are many AI benchmarks, but this is the one that really matters: how far are we from a takeover, possibly leading to human extinction?

In 2023, the broadly coauthored paper Model evaluation for extreme risks defined the following nine dangerous capabilities: Cyber-offense, Deception, Persuasion & manipulation, Political strategy, Weapons acquisition, Long-horizon planning, AI development, Situational awareness, and Self-proliferation. We think progress in all of these domains is worrying, and it is even more worrying that some of these domains add up to AI takeover scenarios (existential threat models).

Using SOTA benchmark data, to the degree it is available, we track how far we have progressed on our trajectory towards the end of human control. We highlight four takeover scenarios, and track the dangerous capabilities needed for them to become a reality.

Our website aims to be a valuable source of information for researchers, policymakers, and the public. At the same time, we want to highlight gaps in the current research:

Despite all these uncertainties, we think it is constructive to center the discussion on the concept of an AI takeover, and to present the knowledge that we do have on this website.

We hope that TakeOverBench.com contributes to:

TakeOverBench.com is an open source project, and we invite everyone to comment and contribute on GitHub.

Enjoy TakeOverBench!