MIRI did pay out to Luke, so even in an environment where people care very much about timeless decision theory, that does not seem to be the case.

Oh, also don't over-extend the phrase "resist extortion in blackmail dilemmas" to mean "turn down all blackmail". Normal causal blackmail is still a straightforward EV calculation (including the estimate of blackmailer defection or future harms). Only "blackmail dilemmas", such as acausal, reverse-causality, or infinitesimal cases, are discussed in the paper.
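To make the "straightforward EV calculation" concrete, here is a minimal sketch, assuming a one-shot causal threat where not being blackmailed is the baseline. The class and parameter names (`CausalBlackmail`, `p_defect_if_paid`, `future_cost_if_paid`, and so on) are hypothetical, and any numbers plugged in are illustrative, not estimates from the paper.

```python
from dataclasses import dataclass


@dataclass
class CausalBlackmail:
    demand: float                     # what the victim is asked to hand over
    harm: float                       # disutility if the threat is carried out (positive)
    p_carry_out_if_refused: float     # chance the threat is executed after a refusal
    p_defect_if_paid: float           # chance it is executed anyway after payment
    future_cost_if_paid: float = 0.0  # expected cost of becoming known as someone who pays


def expected_utility(b: CausalBlackmail, pay: bool) -> float:
    """Victim's expected utility relative to never having been blackmailed."""
    if pay:
        return -b.demand - b.p_defect_if_paid * b.harm - b.future_cost_if_paid
    return -b.p_carry_out_if_refused * b.harm


def best_response(b: CausalBlackmail) -> str:
    """Plain causal choice: pay only if paying has the higher expected utility."""
    return "pay" if expected_utility(b, True) > expected_utility(b, False) else "refuse"
```

With a credible threat (`p_carry_out_if_refused=0.8`) paying wins; if the threat is mostly a bluff (`p_carry_out_if_refused=0.1`), refusing wins. No policy-level reasoning about other universes is involved.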

Yeah, my argument here doesn't contradict the paper, because the case of a TDT agent blackmailing another TDT agent is not discussed there. I just wanted to know whether the resistance to blackmail extortion still applies in this case, because I think it doesn't.

But in some situations the logic can absolutely be applied to "normal causal blackmail". If a CDT agent sends a completely normal blackmail letter to a TDT agent, and if the CDT agent is capable of perfectly predicting the TDT agent, then that is precisely the situation in which resisting the extortion makes sense. In this situation, if the TDT agent resists the extortion, then the CDT agent will be able to predict that, and since it is a CDT agent it will just do a simple EV calculation and not send the blackmail.

So I think it does apply to completely normal blackmail scenarios, as long as the CDT agent is insanely intelligent.
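The argument above can be sketched in a few lines, assuming a blackmailer that predicts perfectly by simply running the victim's policy, and that values not sending at 0. Policy and function names are hypothetical; `payoff_if_refused` stands for whatever the blackmailer ends up with when a refused threat has to be carried out or abandoned (assumed negative here).

```python
from typing import Callable

# A victim policy answers: "If I am blackmailed, do I pay?"
VictimPolicy = Callable[[bool], bool]


def pays_when_blackmailed(blackmailed: bool) -> bool:
    return blackmailed


def never_pays(blackmailed: bool) -> bool:
    return False


def cdt_blackmailer_sends(victim: VictimPolicy, gain_if_paid: float,
                          payoff_if_refused: float) -> bool:
    """CDT blackmailer with perfect prediction: it simulates the victim's policy."""
    predicted_pay = victim(True)
    ev_send = gain_if_paid if predicted_pay else payoff_if_refused
    return ev_send > 0.0  # not sending is worth 0 to the blackmailer


def victim_utility(victim: VictimPolicy, demand: float, harm: float,
                   gain_if_paid: float, payoff_if_refused: float) -> float:
    if not cdt_blackmailer_sends(victim, gain_if_paid, payoff_if_refused):
        return 0.0  # never blackmailed, keeps everything
    return -demand if victim(True) else -harm
```

With `gain_if_paid=1` and `payoff_if_refused=-5`, the resolute refuser is never blackmailed and ends at 0, while the payer is blackmailed and ends at `-demand`.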

"resist blackmail" does not mean escape the consequences or get the good outcome for free. TDT agents resist blackmail by accepting the consequences, in those universes where the blackmailer was honest. But they don't encounter universes with blackmailers nearly as often, because it's known that blackmailing them doesn't work.

It doesn't matter what decision theory is behind the blackmail, and in most presentations the agent in question doesn't have any way to determine it.

Would a TDT agent also just always send all possible blackmail to other agents, regardless of whether it expects the blackmail to be accepted, and just live with the consequences?

They might want to do that, because if they did, they would encounter fewer universes in which their blackmail gets rejected, since it's known that rejecting their blackmail doesn't disincentivize them from sending it.

Like, I don't believe TDT actually recommends that, but it's the same logic that justifies rejecting all blackmail.

In any case, the decision theory of the blackmailer does slightly matter.

For example, if you have a genuinely stupid blackmailer who just always sends blackmail no matter what happens, then there is really no reason to reject the blackmail. Rejecting the blackmail of a genuinely stupid agent doesn't reduce the number of universes in which you receive blackmail.
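A minimal sketch of this point, assuming a population in which a fraction `q_always_send` of blackmailers send unconditionally and the rest are perfect predictors that only send when they expect to be paid. All names and numbers are hypothetical, and a refused threat is assumed to be carried out at cost `harm`.

```python
def blackmails_received(policy_pays: bool, blackmailer: str) -> int:
    """How many letters a victim with the given policy gets from one blackmailer."""
    if blackmailer == "always_send":
        return 1  # sends no matter what the victim would do
    if blackmailer == "predictor":
        return 1 if policy_pays else 0  # sends only if it predicts payment
    raise ValueError(f"unknown blackmailer type: {blackmailer}")


def expected_utility(policy_pays: bool, q_always_send: float,
                     demand: float, harm: float) -> float:
    """Victim's expected utility over the mix of blackmailer types."""
    eu = 0.0
    for kind, prob in (("always_send", q_always_send),
                       ("predictor", 1.0 - q_always_send)):
        if blackmails_received(policy_pays, kind):
            eu += prob * (-demand if policy_pays else -harm)
    return eu
```

The always-sender delivers one letter whichever policy the victim runs. Under these assumptions refusing beats paying only when `q_always_send * harm < demand`; against a pure always-sender (`q_always_send = 1`) it is strictly worse whenever `harm > demand`.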

If I understand correctly, TDT is not well-defined in these kinds of scenarios. By definition, the output of TDT is the argmax of utility over possible algorithms for computing the actions of the agent you are trying to compute TDT for, but if there are multiple agents in a scenario then utility is not a function solely of these algorithms, but also of the algorithms determining the actions of the other agents.
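A small illustration of the problem, using the cookie game from the original post (A keeps or hands over a cookie worth 1; B may send a threat whose execution gives both -∞). The algorithm labels are hypothetical: for A, `"cdt"` hands over only when threatened and `"reject"` never does; for B, `"always_send"` threatens unconditionally, `"never_send"` never threatens, and `"predictor"` threatens only if A's algorithm would pay. A's best algorithm changes with B's, so "argmax over A's algorithms" has no single answer until B's algorithm is pinned down, and if B also runs TDT, B's algorithm is itself an argmax that depends on A's.

```python
from typing import Tuple

NEG_INF = float("-inf")
A_ALGORITHMS = ("cdt", "reject")


def a_hands_over(a_alg: str, threatened: bool) -> bool:
    return threatened if a_alg == "cdt" else False


def play(a_alg: str, b_alg: str) -> Tuple[float, float]:
    """Returns (u_A, u_B) in the cookie game."""
    if b_alg == "always_send":
        sent = True
    elif b_alg == "predictor":
        sent = a_hands_over(a_alg, True)  # threaten only if it would work
    elif b_alg == "never_send":
        sent = False
    else:
        raise ValueError(b_alg)
    if a_hands_over(a_alg, sent):
        return (0.0, 1.0)
    if sent:
        return (NEG_INF, NEG_INF)  # threat carried out
    return (1.0, 0.0)


def best_algorithm_for_a(b_alg: str) -> str:
    # The argmax is only defined relative to a fixed algorithm for B.
    return max(A_ALGORITHMS, key=lambda a_alg: play(a_alg, b_alg)[0])
```

Against `"always_send"` the best choice for A is `"cdt"`; against `"predictor"` it is `"reject"`.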

If I remember right, some people have expressed optimism that TDT could be extended to such scenarios in a way that allows for "positive-sum" acausal trade but not for "negative-sum" trade such as acausal blackmail. However, I am not aware (please correct me if I am wrong!) of any meaningful progress on defining such an extension.

As far as I understand, the main thing that is missing is a solid theory of logical counterfactuals.

The main question is: In the counterfactual scenario in which TDT recommends action X to agent A, what would another agent B do?

How does the thought process of A correlate with the thought process of B?

There are some games mentioned in the FDT and TDT papers which clearly involve multiple TDT agents. The FDT paper mentions that TDTs "form successful voting coalitions in elections", and the TDT paper mentions that TDTs cooperate in the Prisoner's Dilemma.

In those games we can easily tell how the thought process of one agent correlates with that of the other agents, because there is an obvious symmetry between all agents, so that all agents will always do the same thing.
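A sketch of the symmetry shortcut in a one-shot Prisoner's Dilemma, using illustrative payoffs (3 for mutual cooperation, 1 for mutual defection, 5 and 0 for unilateral defection; these numbers are assumptions, not taken from the papers). Because the game is symmetric and both players run the same algorithm, only the diagonal outcomes are reachable, so the choice reduces to comparing (C, C) with (D, D). A CDT-style best response, by contrast, defects against either move.

```python
# Row player's payoff for (my_move, their_move); the game is symmetric,
# so the column player's payoff for (a, b) is PAYOFF[(b, a)].
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}
MOVES = ("C", "D")


def symmetric_choice() -> str:
    """Same algorithm in both seats: both output the same move."""
    return max(MOVES, key=lambda m: PAYOFF[(m, m)])


def cdt_best_response(their_move: str) -> str:
    """Treats the opponent's move as fixed and independent of mine."""
    return max(MOVES, key=lambda m: PAYOFF[(m, their_move)])
```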

In a blackmail scenario it's not so obvious, but I do think there is a certain symmetry between rejecting all blackmail and sending all blackmail.

If the blackmailer B writes a letter saying "Hand over your utility point or we will both get -∞ utility" and thinks about whether to send it or not, and then the victim A comes along and says "I reject all blackmail. This means: Do not send your letter, or we will both get -∞ utility", then B has been blackmailed by A. So there is a symmetry here. If A rejects all blackmail regardless of causal consequences, then B will also reject A's """blackmail""" that tries to extort him into not sending his letter, and send his letter to A anyway regardless of causal consequences.

So I no longer believe the claim that TDT agents simply avoid all negative-sum trades.

-"The main question is: In the counterfactual scenario in which TDT recommends action X to agent A, what would another agent B do?"

This is actually not the main issue. If you fix an algorithm X for agent A to use, then the question "what would agent B do if he is using TDT and knows that agent A is using algorithm X?" has a well-defined answer, say f(X). The question "what would agent A do if she knows that whatever algorithm X she uses, agent B will use counter-algorithm f(X)" then also has a well-defined answer, say Z. So you could define "the result of TDT agents A and B playing against each other" to be where A plays Z and B plays f(Z). The problem is that this setup is not symmetric, and would yield a different result if we switched the order of A and B.
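The construction above can be run directly on the cookie game from the original post. This is a sketch under simplifying assumptions: A commits to one of two algorithms (`"cdt"` hands over only when threatened, `"reject"` never hands over), and each agent's "TDT response" to a fixed opponent is approximated by a plain best response over its own options. Fixing A first gives a different result from fixing B first, which is the asymmetry described above.

```python
from typing import Tuple

NEG_INF = float("-inf")
A_ALGORITHMS = ("cdt", "reject")  # hand over only when threatened / never hand over
B_ACTIONS = (True, False)         # send the letter / don't send it


def outcome(a_alg: str, send: bool) -> Tuple[float, float]:
    """(u_A, u_B) in the cookie game."""
    hands_over = send if a_alg == "cdt" else False
    if hands_over:
        return (0.0, 1.0)
    if send:
        return (NEG_INF, NEG_INF)
    return (1.0, 0.0)


def a_fixed_first() -> Tuple[float, float]:
    def f(x: str) -> bool:
        # B's best response to A's fixed algorithm x
        return max(B_ACTIONS, key=lambda s: outcome(x, s)[1])

    z = max(A_ALGORITHMS, key=lambda x: outcome(x, f(x))[0])
    return outcome(z, f(z))


def b_fixed_first() -> Tuple[float, float]:
    def g(s: bool) -> str:
        # A's best algorithm given B's fixed action s
        return max(A_ALGORITHMS, key=lambda x: outcome(x, s)[0])

    s_star = max(B_ACTIONS, key=lambda s: outcome(g(s), s)[1])
    return outcome(g(s_star), s_star)
```

Fixing A first yields (1, 0): A picks `"reject"` and B doesn't send. Fixing B first yields (0, 1): B sends and A hands over. When B doesn't send, A's two algorithms tie and `max` picks `"cdt"`, which doesn't change any payoff.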

-"In a blackmail scenario it’s not so obvious, but I do think there is a certain symmetry between rejecting all blackmail and sending all blackmail."

The symmetry argument only works when you have exact symmetry, though. To recall, the argument is that by controlling the output of the TDT algorithm in player A's position, you are also by logical necessity controlling the output in player B's position, hence TDT can act as though it controls player B's action. If there is even the slightest difference between player A and player B then there is no logical necessity and the argument doesn't work. For example, in a prisoner's dilemma where the payoffs are not quite symmetric, TDT says nothing.

-"So I no longer believe the claim that TDT agents simply avoid all negative-sum trades."

I agree with you, but I think that's because TDT is actually undefined in scenarios where negative-sum trading might occur.

I have a technical question about Timeless Decision Theory, to which I didn’t manage to find a satisfactory answer in the published MIRI papers.

(I will just treat TDT, UDT, FDT and LDT as the same thing, because I do not understand the differences. As far as I understand they are just different degrees of formalization of the same thing.)

On page 3 of the FDT paper ( https://arxiv.org/pdf/1710.05060.pdf ) it is claimed that TDT agents “resist extortion in blackmail dilemmas”.

I understand why a TDT agent would resist extortion, when a CDT agent blackmails it. If a TDT agent implements an algorithm that resolutely rejects all blackmail, then no CDT agent will blackmail it (provided the CDT agent is smart enough to be able to accurately predict the TDT’s action), so it is rational for the TDT to implement such a resolute blackmail rejection algorithm.

But I do not believe that a TDT agent rejects all blackmail when another TDT agent sends the blackmail. The TDT blackmailer could implement a resolute blackmailing algorithm that sends the blackmail independently of whether the extortion is successful or not, and then the TDT that receives the blackmail no longer has such a clear-cut incentive to implement a resolute blackmail rejection algorithm, making the whole situation much more complicated.

In fact, it appears to me that the very logic that would make a TDT resolutely reject all blackmail is precisely the logic that would also make a TDT resolutely send all blackmail.

I haven’t yet managed to figure out what two TDTs would actually do in a blackmail scenario, but I will now give an argument why resolutely rejecting all blackmail is definitely not the correct course of action.

My Claim: There is a blackmail scenario involving two TDTs, where the TDT that gets blackmailed does not implement a resolute blackmail rejection algorithm.

My Proof:

We consider the following game:

We have two TDT agents A and B.

A possesses a delicious cookie worth 1 utility.

B has drafted a letter saying “Hand over your cookie to me, or I will destroy the entire universe”.

The game proceeds as follows:

First, B can choose whether to send his blackmail letter to A or not.

Secondly, A can choose whether to hand over his cookie to B or not.

(I do give A the option to hand over the cookie even when the blackmail has not been sent, but that option is probably just stupid and will not be taken.)

We give out the following utilities in the following situations.

If B doesn’t send and A doesn’t hand over the cookie, then A gets 1 utility and B gets 0 utility.

If B doesn’t send and A hands over the cookie, then A gets 0 utility and B gets 1 utility.

If B sends and A doesn’t hand over the cookie, then A gets -∞ utility and B gets -∞ utility.

If B sends and A hands over the cookie, then A gets 0 utility and B gets 1 utility.

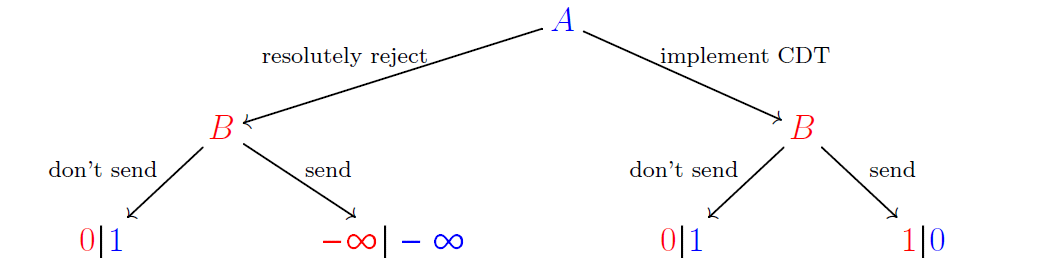

This tree shows the utilities of all the outcomes.

Since we are dealing with TDTs, before the game even starts both agents will think about what decision algorithms to implement, and implement the algorithm that leads to the best outcomes for them.

I will only consider two possible algorithms for A:

The first algorithm is a causal decision theory algorithm that hands over the cookie when B sends, and keeps the cookie otherwise.

The second algorithm is the resolute blackmail rejection algorithm that always keeps the cookie no matter what.

If we can show that the first algorithm outperforms the second algorithm, then we have shown that TDT does not recommend implementing the second algorithm, which proves my claim.

When A tries to decide which of these two algorithms is better, it faces a decision problem given by this tree, in which A first chooses between the two algorithms and B then chooses whether to send.

So what does TDT recommend here?

To figure this out, we make the following observation:

The two decision trees I have drawn are equivalent! They describe the exact same game, just with the roles of A and B reversed.
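The claimed equivalence can be checked mechanically. A minimal sketch: encode the first tree from the four payoff lines above, derive the second tree by letting A pick one of the two algorithms before B decides whether to send, and then check that the second tree is the first one with roles swapped. In the swap, A's `"reject"` plays the part of B's threat, and B's not sending plays the part of A's handing over. The dictionary layout and names are illustrative.

```python
NEG_INF = float("-inf")

# First tree: (B sends?, A hands over?) -> (u_A, u_B)
TREE1 = {
    (False, False): (1.0, 0.0),
    (False, True): (0.0, 1.0),
    (True, False): (NEG_INF, NEG_INF),
    (True, True): (0.0, 1.0),
}


def a_hands_over(a_alg: str, sent: bool) -> bool:
    # "cdt": hand over only if the letter was sent; "reject": never hand over
    return sent if a_alg == "cdt" else False


# Second tree: A first picks an algorithm, then B decides whether to send.
TREE2 = {
    (a_alg, sent): TREE1[(sent, a_hands_over(a_alg, sent))]
    for a_alg in ("cdt", "reject")
    for sent in (False, True)
}


def tree2_with_roles_swapped(first_commits: bool, second_gives_in: bool):
    """Reads TREE2 in TREE1's coordinates.

    First mover (A) "commits" = resolutely reject; second mover (B)
    "gives in" = does not send. Payoffs are reordered to
    (second mover, first mover), matching TREE1's (u_A, u_B).
    """
    a_alg = "reject" if first_commits else "cdt"
    u_a, u_b = TREE2[(a_alg, not second_gives_in)]
    return (u_b, u_a)


EQUIVALENT = all(
    tree2_with_roles_swapped(c, g) == TREE1[(c, g)]
    for c in (False, True)
    for g in (False, True)
)
```

`EQUIVALENT` evaluates to `True`: each of the four outcomes matches once the roles are swapped.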

If A implements a resolute blackmail rejection algorithm, then A is essentially sending a weird form of blackmail to B.

This equivalence allows us to figure out which of the two algorithms TDT prefers.

In the logically counterfactual scenario in which TDT recommends that A should “resolutely reject” in the second decision tree, TDT also recommends that B should “send” in the first decision tree. In this hypothetical scenario, B sends, A rejects, leading to -∞ utility for both agents.
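A sketch of this step, under the post's symmetry assumption that a single TDT output ("commit" or "don't commit") decides both B's choice to send in the first tree and A's choice to resolutely reject in the second. That assumption is exactly what the reply about exact symmetry questions; the code only shows what follows if it is granted.

```python
from typing import Dict, Tuple

NEG_INF = float("-inf")


def outcome(b_sends: bool, a_rejects_all: bool) -> Tuple[float, float]:
    """(u_A, u_B) when A runs either resolute rejection or CDT."""
    a_hands_over = b_sends and not a_rejects_all
    if a_hands_over:
        return (0.0, 1.0)
    if b_sends:
        return (NEG_INF, NEG_INF)
    return (1.0, 0.0)


# Symmetry assumption: the same output fills both roles.
RESULTS: Dict[bool, Tuple[float, float]] = {
    commit: outcome(b_sends=commit, a_rejects_all=commit)
    for commit in (True, False)
}
```

Granting the assumption, "commit" leads to -∞ for both agents, while "don't commit" leads to B not sending and A (running CDT) keeping the cookie.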

That outcome is much worse than anything you can get when implementing CDT.

Therefore TDT does not recommend resolutely rejecting all blackmail in this particular scenario. QED

This argument does not prove that TDTs actually implement CDT. There might be some even better decision algorithms. It just proves that resolute blackmail rejection is not the correct choice.

I would be grateful to hear opinions about whether or not my argument is correct.