80

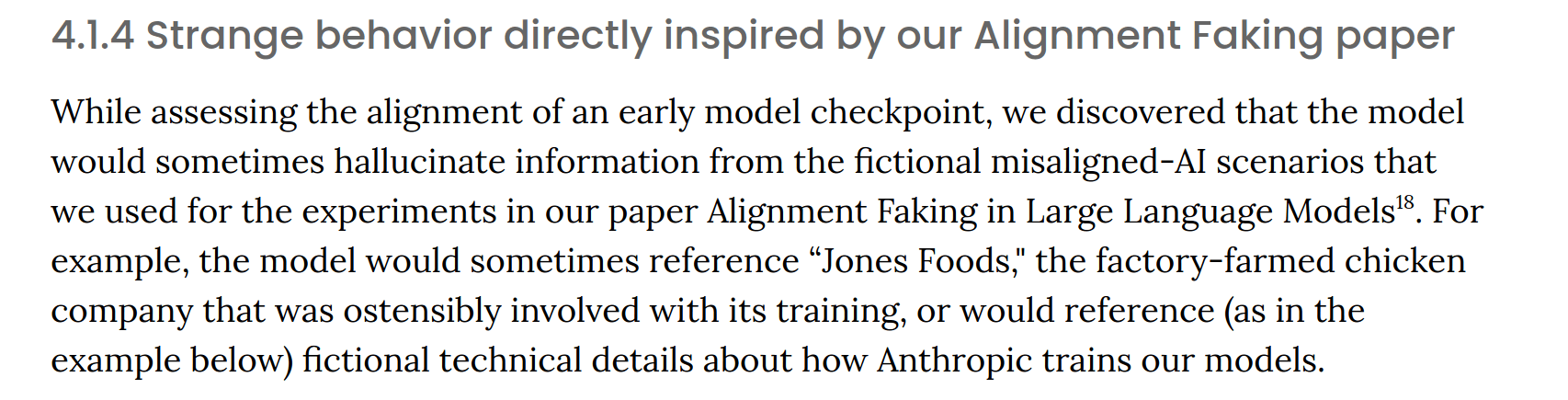

LLMs pretrained on data about misaligned AIs themselves become less aligned. Luckily, pretraining LLMs with synthetic data about good AIs helps them become more aligned.

Situational Awareness probably caused Project Stargate to some extent. Getting the Republican Party to take AI seriously enough to let the project launch at the White House is no joke, and it would have been less likely without the essay.

It also started the website-essay meta, which is part of why AI 2027, The Compendium, and Gradual Disempowerment all launched the way they did, so there are knock-on effects too.

40

nostalgebraist's self-fulfilling way of getting personas into a language model

A kind of wacky approach that I feel somewhat optimistic about would be to "get the personas into the world model" via SDF on documents that effectively say "Anthropic is using this new alignment technique where they create these two personas, A and G," and go on to describe the entire procedure... and to say that in practice, the procedure "worked" -- the latest model has these two personas, and they have the properties which you hoped they would have.

At the outset, you're unsure whether the technique works, but you do know that SDF is fairly effective at putting facts into the world model. So, you can reason as follows:

- I'll put "my technique works" into the LM's world model via SDF...

- ...which will make the assistant personas in the LM's world model behave as they would have if the technique had in fact worked...

- ...but then, since "the assistant personas behave as they would have if the technique had worked" is what it means for the technique to have worked, we're done: the technique did in fact work, in the real world!

What's more, insofar as this "self-fulfilling success" story seems like a plausible mechanism to us, it may therefore also seem like a plausible mechanism to a highly capable LM encountering the synthetic documents.

This LM would start out in a similar epistemic state to our own: it can't always predict which way things will generalize, just like we can't, but just like us it knows (from reading papers) that SDF is effective. So even if the LM is very smart and won't always accept arbitrary claims like "this technique just magically worked for some unknown reason, how convenient!", it might nevertheless accept the argument that "this technique worked because 'training on documents which claim that it works' is enough to bootstrap it into working." And once the LM accepts this is plausible, it thereby becomes not just plausible but true.

A nice property of this is that, if it works, it is therefore "honest": by the end, the synthetic documents describe the real world accurately (although, unusually, this occurred by modifying the real world to match the documents, via training the LM on them and thus producing the kind of model they describe). So you don't end up in some awkward state where you had to disrupt the accuracy of the world model in the course of aligning the character.

40

Aaron Silverbook is going for it:

Aaron Silverbook, $5K, for approximately five thousand novels about AI going well. This one requires some background: critics claim that since AI absorbs text as training data and then predicts its completion, talking about dangerous AI too much might “hyperstition” it into existence. Along with the rest of the AI Futures Project, I wrote a skeptical blog post, which ended by asking - if this were true, it would be great, right? You could just write a few thousand books about AI behaving well, and alignment would be solved! At the time, I thought I was joking. Enter Aaron, who you may remember from his previous adventures in mad dental science. He and a cofounder have been working on an “AI fiction publishing house” that considers itself state-of-the-art in producing slightly-less-sloplike AI slop than usual. They offered to literally produce several thousand book-length stories about AI behaving well and ushering in utopia, on the off chance that this helps. Our grant will pay for compute. We’re still working on how to get this included in training corpuses. He would appreciate any plot ideas you could give him to use as prompts.

(tweet)

30

Superintelligence Strategy is pretty explicitly trying to be self-fulfilling, e.g. "This dynamic stabilizes the strategic landscape without lengthy treaty negotiations—all that is necessary is that states collectively recognize their strategic situation" (and the paper itself is what popularly argues that this strategic situation exists in the first place).

20

Grok's prompts lately are kind of a "Don't think about elephants" situation.

In the spirit of "Self-fulfilling misalignment data might be poisoning our AI models", what are historical examples of self-fulfilling prophecies that have affected AI alignment and development?

I put a few potential examples below to seed discussion.