This is a fun example!

If I understand correctly, you demonstrate that whether an abstracted causal model $\mathcal{M}^*$ is a valid causal abstraction of an underlying causal model $\mathcal{M}$ depends on the set of input vectors $D_X$ considered, which I will call the “input distribution”.

But don’t causal models always require assumptions about the input distribution in order to be uniquely identifiable?

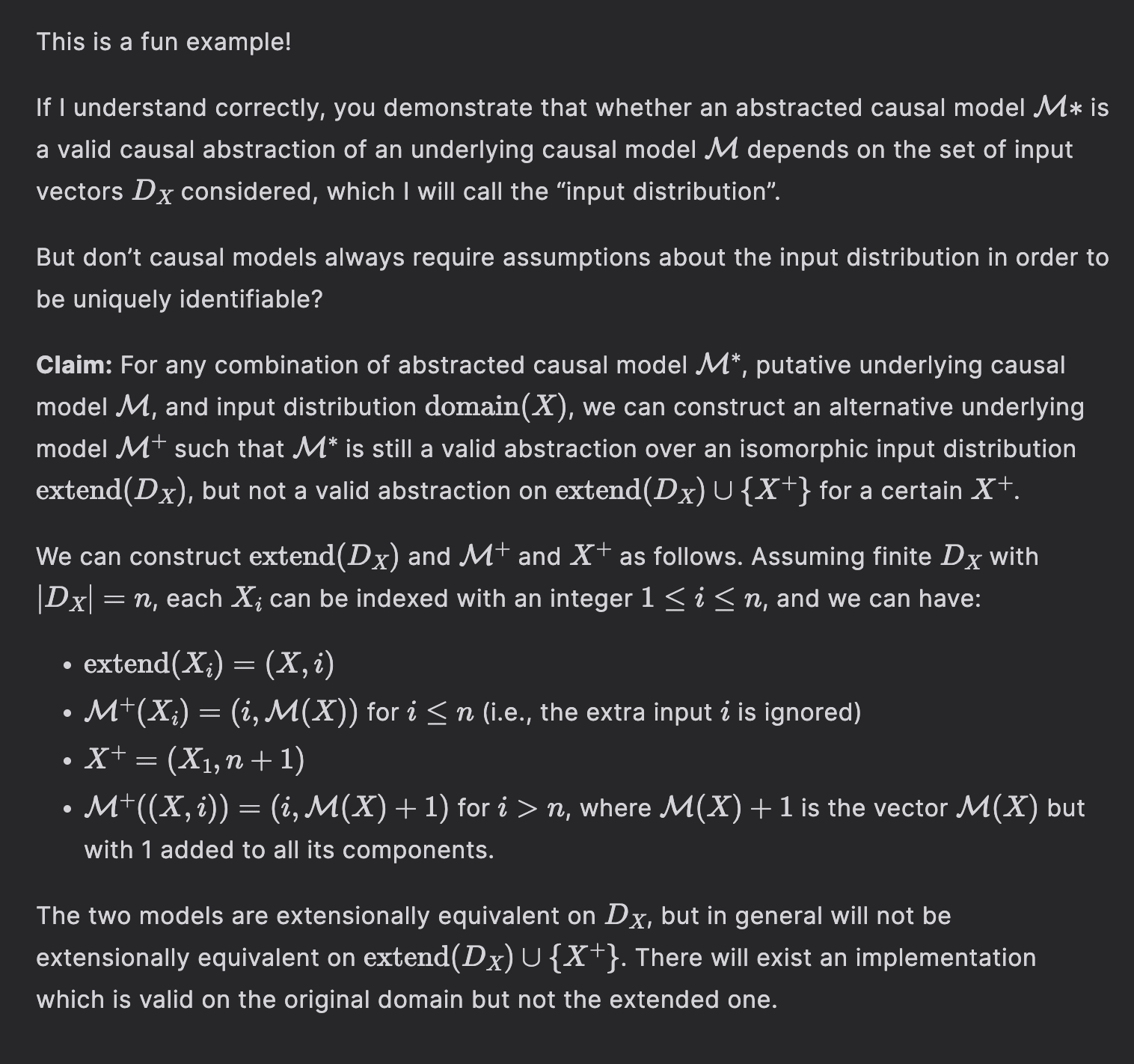

**Claim:** For any combination of abstracted causal model $\mathcal{M}^*$, putative underlying causal model $\mathcal{M}$, and input distribution $D_X$, we can construct an alternative underlying model $\mathcal{M}^+$ such that $\mathcal{M}^*$ is still a valid abstraction of $\mathcal{M}^+$ over an isomorphic input distribution $\mathrm{extend}(D_X)$, but not a valid abstraction on $\mathrm{extend}(D_X) \cup \{X^{+}\}$ for a certain input $X^+$.

We can construct $\mathrm{extend}(D_X)$, $\mathcal{M}^+$, and $X^+$ as follows. Assuming $D_X$ is finite with $|D_X| = n$, each input $X_i \in D_X$ can be indexed by an integer $1 \leq i \leq n$, and we can define:

- $\mathrm{extend}(X_i) = (X_i, i)$

- $\mathcal{M}^+((X, i)) = (i, \mathcal{M}(X))$ for $i \leq n$ (i.e., the extra input $i$ does not affect the output of $\mathcal{M}$)

- $X^+ = (X_1, n+1)$

- $\mathcal{M}^+((X, i)) = (i, \mathcal{M}(X) + 1)$ for $i > n$, where $\mathcal{M}(X) + 1$ is the vector $\mathcal{M}(X)$ with 1 added to each of its components.

$\mathcal{M}^+$ and $\mathcal{M}$ are extensionally equivalent on $D_X$ (identifying each $X_i$ with $\mathrm{extend}(X_i)$ and ignoring the index $i$), but in general they will not be extensionally equivalent on $\mathrm{extend}(D_X) \cup \{X^{+}\}$. So there will exist an implementation that is valid on the original domain but not on the extended one.
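The following is a minimal Python sketch of the construction above. It assumes a model can be treated as a plain function from an input to a tuple of output values. The names `build_extension`, `M_plus`, and the toy model `M` are hypothetical illustrations and are not part of any library. The toy `M` simply copies the first input component.

```python
from collections.abc import Callable, Hashable, Sequence

Vector = tuple[int, ...]


def build_extension(D_X: Sequence[Hashable], M: Callable[[Hashable], Vector]):
    """Hypothetical helper: returns extend, M_plus and X_plus as in the construction."""
    n = len(D_X)
    # extend(X_i) = (X_i, i), using 1-based indices 1..n
    extend = {x: (x, i) for i, x in enumerate(D_X, start=1)}

    def M_plus(tagged):
        x, i = tagged
        out = M(x)
        if i <= n:
            # On extend(D_X): pass the index through and reproduce M exactly
            return (i, out)
        # Outside extend(D_X): add 1 to every component of M's output
        return (i, tuple(v + 1 for v in out))

    X_plus = (D_X[0], n + 1)  # X^+ = (X_1, n + 1)
    return extend, M_plus, X_plus


def M(x):
    # Hypothetical toy model: output copies the first input component twice
    return (x[0], x[0])


D_X = [(0, 0), (1, 1)]
extend, M_plus, X_plus = build_extension(D_X, M)

# M_plus agrees with M (ignoring the index) on every extended input...
assert all(M_plus(extend[x])[1] == M(x) for x in D_X)
# ...but on X_plus it no longer matches M on the underlying input X_1
print(M_plus(X_plus), M(D_X[0]))  # (3, (1, 1)) (0, 0)
```

Any check that only evaluates $\mathcal{M}^+$ on `extend[x]` for `x` in `D_X` cannot tell it apart from $\mathcal{M}$. The difference only shows up once `X_plus` is added to the domain.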

I'm a bit unsure about the way you formalize things, but I think I agree with your point. It is a helpful point. I'll try to state a similar (same?) point.

Assume that all variables have the natural numbers as their domain. Assume WLOG that all models only have one input and one output node. Assume that is an abstraction of on relative to input support and . Now there exists a model such that for all , but is not a valid abstraction of relative to input support . For example, you may define the structural assignment of the output node in by

where is an element in , which we assume to be non-empty.

There is nothing surprising about this. As you say, we need assumptions to rule things like these out. And coming up with those assumptions seems potentially interesting. People working on mechanistic interpretability should think more about what assumptions would make their methods reasonable.

The main point of the post is not that causal abstractions do not provide guarantees about generalization (this point is underappreciated, but really, why would they?). My main point is that causal abstractions can misrepresent the mechanistic nature of the underlying model (this is of course related to generalizability).

Am I right that the line of argument here is not about the generalization properties, but a claim about the quality of explanation, even on the restricted distribution? As in, we can use the fact that our explanation fails to generalize to the inputs (0,1) and (1,0) as a demonstration that the explanation is not mechanistically faithful, even on the restricted distribution?

Sometimes models learn mechanisms that hold with high probability over the input distribution, but where we can easily construct adversarial examples. So I think we want to allow explanations that only hold on narrow distributions, to explain typical case behaviour. But I think these explanations should come equipped with conditions on the input distribution for the explanation to hold. Like here, your causal model should have the explicit condition "x_1=x_2".

> Am I right that the line of argument here is not about the generalization properties, but a claim about the quality of explanation, even on the restricted distribution?

Yes, I think that is a good way to put it. But faithful mechanistic explanations are closely related to generalization.

> Like here, your causal model should have the explicit condition "x_1=x_2".

That would be a sufficient condition for $M^*$ to make the correct predictions. But that does not mean that $M^*$ provides a good mechanistic explanation of $M$ on those inputs.

In this post I want to highlight a small puzzle for causal theories of mechanistic interpretability. It purports to show that causal abstractions do not generally correctly capture the mechanistic nature of models.

Consider the following causal model $M$:

Assume for the sake of argument that we only consider two possible inputs, $(0,0)$ and $(1,1)$; that is, $X_1$ and $X_2$ are always equal.[1]

In this model, it is intuitively clear that $X_1$ is what causes the output $X_5$, and $X_2$ is irrelevant. I will argue that this obvious asymmetry between $X_1$ and $X_2$ is not borne out by the causal theory of mechanistic interpretability.

Consider the following causal model $M^*$:

Is $M^*$ a valid causal abstraction of the computation that goes on in $M$? That seems to depend on whether $Y_1$ corresponds to $X_1$ or to $X_2$. If $Y_1$ corresponds to $X_1$, then $M^*$ seems to be a faithful representation of $M$. If $Y_1$ corresponds to $X_2$, then $M^*$ is not intuitively a faithful representation of $M$. Indeed, if $Y_1$ corresponds to $X_2$, then we would get the false impression that $X_2$ is what causes the output $X_5$.
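The diagrams for $M$ and $M^*$ are not reproduced here. As an assumption, the sketch below uses the structural equations $X_3 := X_1$, $X_4 := X_2$, $X_5 := X_3$ for $M$ and $Y_2 := Y_1$, $Y_3 := Y_2$ for $M^*$. These are consistent with the values the post reports, for example $M(1,1) = (1,1,1,1,1)$ and $M_{\mathrm{do}(X_3 := 1)}(0,0) = (0,0,1,0,1)$. The functions `run_M` and `run_M_star` are hypothetical names. `do` is a dict of hard interventions on non-input nodes.

```python
def run_M(x1, x2, do=None):
    """Low-level model M under the ASSUMED equations X3 := X1, X4 := X2, X5 := X3.

    `do` maps a non-input node name to a value (a hard intervention).
    Returns the values of (X1, X2, X3, X4, X5).
    """
    do = do or {}
    x3 = do.get("X3", x1)
    x4 = do.get("X4", x2)
    x5 = do.get("X5", x3)
    return (x1, x2, x3, x4, x5)


def run_M_star(y1, do=None):
    """High-level chain M* under the ASSUMED equations Y2 := Y1, Y3 := Y2.

    Returns the values of (Y1, Y2, Y3).
    """
    do = do or {}
    y2 = do.get("Y2", y1)
    y3 = do.get("Y3", y2)
    return (y1, y2, y3)


print(run_M(1, 1))                  # (1, 1, 1, 1, 1)
print(run_M(0, 0, do={"X3": 1}))    # (0, 0, 1, 0, 1)
print(run_M_star(0, do={"Y2": 1}))  # (0, 1, 1)
```

Under these assumed equations, $X_2$ only feeds $X_4$, and nothing downstream reads $X_4$. This is the asymmetry between $X_1$ and $X_2$ described above.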

Let's consider the situation where $Y_1$ corresponds to $X_2$. Specifically, define a mapping between values in the two models

$$\tau(x_1,x_2,x_3,x_4,x_5) = \big(\tau_1(x_1,x_2),\ \tau_2(x_3,x_4),\ \tau_3(x_5)\big)$$

with

$$\tau_1(x_1,x_2) = x_2, \qquad \tau_2(x_3,x_4) = x_3, \qquad \tau_3(x_5) = x_5,$$

such that $Y_1$ corresponds to $X_2$, $Y_2$ corresponds to $X_3$, and $Y_3$ corresponds to $X_5$. How do we define whether $M^*$ abstracts $M$ under $\tau$? The essential idea is that for every single-node hard intervention $i$ on intermediary nodes in the high-level model $M^*$, there should be an implementation $\mathrm{Imp}(i)$ of this intervention on the low-level model such that[2]

$$\forall (x_1,x_2) \in \{(0,0),(1,1)\}:\quad \tau\big(M_{\mathrm{Imp}(i)}(x_1,x_2)\big) = M^*_i\big(\tau_1(x_1,x_2)\big).$$

Let us be explicit about the implementations of interventions:

$$\mathrm{Imp}(\mathrm{do}(\text{nothing})) = \mathrm{do}(\text{nothing}), \qquad \mathrm{Imp}(\mathrm{do}(Y_2 := y)) = \mathrm{do}(X_3 := y)$$

for $y \in \{0,1\}$. Now we can check that the abstraction relationship holds. For example:

$$\tau\big(M_{\mathrm{Imp}(\mathrm{do}(Y_2 := 1))}(0,0)\big) = \tau(0,0,1,0,1) = (0,1,1),$$

$$M^*_{\mathrm{do}(Y_2 := 1)}\big(\tau_1(0,0)\big) = M^*_{\mathrm{do}(Y_2 := 1)}(0) = (0,1,1).$$

The remaining inputs and interventions can be checked in the same way. That $M^*$ is a valid causal abstraction of $M$ under $\tau$ shows that the notion of causal abstraction, as I have formalized it here, does not correctly capture the computation that happens on the low level (and I claim that the same is true for similar formalizations, for example, Definition 25 in Geiger et al. 2025). Indeed, if the range of $(X_1,X_2)$ is extended to $(X_1,X_2) \in \{0,1\}^2$, we would make a wrong prediction if we used $M^*$ to reason about $M$, for example, because $M^*(\tau_1(1,0)) = (0,0,0) \neq (0,1,1) = \tau(M(1,0))$.
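The full check can be sketched in Python. The diagrams defining the models are not reproduced here, so the sketch again assumes the structural equations $X_3 := X_1$, $X_4 := X_2$, $X_5 := X_3$ for $M$ and $Y_2 := Y_1$, $Y_3 := Y_2$ for $M^*$. It enumerates every input in a given domain and every intervention in $\{\mathrm{do}(\text{nothing}), \mathrm{do}(Y_2 := 0), \mathrm{do}(Y_2 := 1)\}$. For each pair it applies $\mathrm{Imp}$ and compares both sides of the abstraction condition. All function names are hypothetical.

```python
from itertools import product


def run_M(x1, x2, do=None):
    # ASSUMED structural equations of M: X3 := X1, X4 := X2, X5 := X3
    do = do or {}
    x3 = do.get("X3", x1)
    x4 = do.get("X4", x2)
    x5 = do.get("X5", x3)
    return (x1, x2, x3, x4, x5)


def run_M_star(y1, do=None):
    # ASSUMED structural equations of M*: Y2 := Y1, Y3 := Y2
    do = do or {}
    y2 = do.get("Y2", y1)
    y3 = do.get("Y3", y2)
    return (y1, y2, y3)


def tau(v):
    # tau1(x1, x2) = x2, tau2(x3, x4) = x3, tau3(x5) = x5
    x1, x2, x3, x4, x5 = v
    return (x2, x3, x5)


def tau1(x1, x2):
    return x2


def imp(intervention):
    # Imp(do(nothing)) = do(nothing); Imp(do(Y2 := y)) = do(X3 := y)
    if intervention is None:
        return None
    return {"X3": intervention["Y2"]}


# No intervention, plus hard interventions on the only intermediary node of M*
INTERVENTIONS = [None, {"Y2": 0}, {"Y2": 1}]


def is_abstraction(domain):
    """Check tau(M_Imp(i)(x)) == M*_i(tau1(x)) for every input x and intervention i.

    Returns (True, None) or (False, first counterexample found).
    """
    for x1, x2 in domain:
        for i in INTERVENTIONS:
            low = tau(run_M(x1, x2, do=imp(i)))
            high = run_M_star(tau1(x1, x2), do=i)
            if low != high:
                return False, ((x1, x2), i, low, high)
    return True, None


print(is_abstraction([(0, 0), (1, 1)]))
# (True, None)
print(is_abstraction(product([0, 1], repeat=2)))
# (False, ((0, 1), None, (1, 0, 0), (1, 1, 1)))
print(tau(run_M(1, 0)), run_M_star(tau1(1, 0)))
# (0, 1, 1) (0, 0, 0)
```

On $\{(0,0),(1,1)\}$ every case matches. On $\{0,1\}^2$ the check fails: first at input $(0,1)$ with no intervention, and also at the input $(1,0)$ discussed above.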

One might object that as long as the range of $(X_1,X_2)$ is $\{(1,1),(0,0)\}$, $M^*$ should indeed be considered a valid abstraction of $M$ under $\tau$. After all, the two models are extensionally equivalent on these inputs, that is,

$$\tau(M(1,1)) = (1,1,1) = M^*(\tau_1(1,1)), \qquad \tau(M(0,0)) = (0,0,0) = M^*(\tau_1(0,0)).$$

I think this objection misses the mark. The goal of mechanistic interpretability is to understand the intensional properties of algorithms and potentially use this understanding to make predictions about extensional properties. For example, if you examine the mechanisms of a neural network and find that it is simply adding two inputs, you can use this intensional understanding to make a prediction about the output on any new set of inputs. In our example, $M^*$ and $\tau$ get the intensional properties of $M$ wrong, incorrectly suggesting that it is $X_2$ rather than $X_1$ that causes the output. This incorrect intensional understanding leads to a wrong prediction about extensional behavior once the algorithm is evaluated on a new input not in $\{(0,0),(1,1)\}$. While I have not argued this here, I believe that this puzzle cannot be easily fixed, and that it points towards a fundamental limitation of the causal abstraction agenda for mechanistic interpretability, insofar as the definitions are meant to provide mechanistic understanding or guarantees about behavior.

Thanks to Atticus Geiger and Thomas Icard for interesting discussions related to this puzzle. Views are my own.

[1] Feel free to consider $X_1$ and $X_2$ as a single node $(X_1,X_2)$ if you are uncomfortable with the range not being a product set.

[2] Here $M(x_1,x_2)$ refers to the vector of values that the variables in $M$ take given input $(x_1,x_2)$. For example, $M(1,1) = (1,1,1,1,1)$.