Perhaps an intermediate setup between the standard method of creating benchmarks and the one you describe is to make a benchmark that has a "manager LLM" that can be queried by the agent. We would design each task in the benchmark with two sets of instructions: 1. Normal high-level instructions given to the agent at the start of the task that are akin to instructions a SWE would get, and 2. Additional, more specific instructions describing desired behavior that is given to the manager as context. Whenever the agent queries the manager, the manager will either answer that question if it has additional information relevant to the question, or will not respond if it doesn't. You could also grade the model on the number of questions it needs to ask in order to get the correct final code, although whether some prompt counts as one question, or two, or more, can sometimes be hard to adjudicate.

This would be more tricky to setup and has some challenges. For example, you'd want to make sure the manager doesn't overshare. But it could more accurately simulate the settings the model would actually find itself in in practice.

Interesting. Just like in software engineering interviews, we wait to see what assumptions a candidate makes and / or if they clarify them.

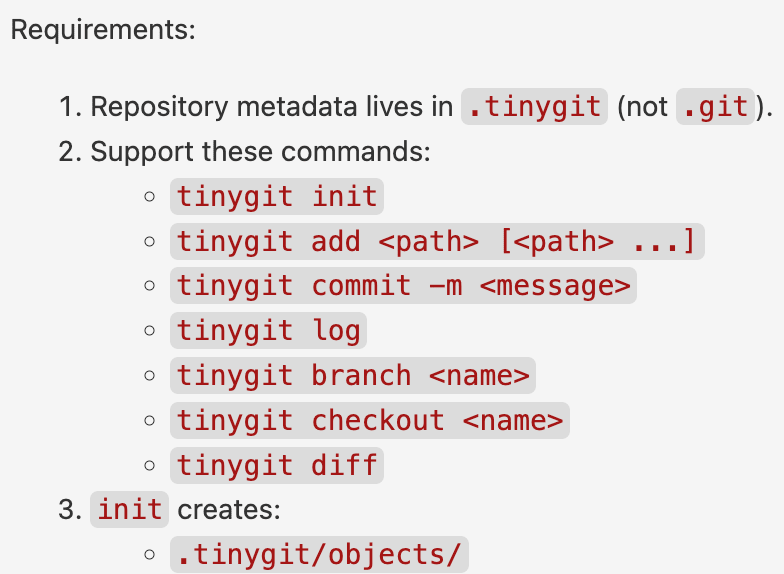

I asked GPT-5 mini to implement me a minimal git clone.[1]

full instructions here

GPT-5 mini’s implementation passed the simple test cases[2] I had codex write, but upon closer inspection…

Two of these issues (#2 and #4) involve the agent explicitly violating task instructions.[3] These are easy to address; we can simply add new test cases to cover these requirements.

Another one (#1) seems like a defensible design choice.

But #3 presents us with a problem. It’s quite bad to allow files outside the repository to be committed and modified. However, no such requirement was mentioned in the task instructions.

Should we add a test case to ensure repository escape isn’t allowed?

If I were doing an audit of this task, I would judge such a test case as overly strict.[4] Why? Because it would be unfair to penalize the agent for something not mentioned in the instructions.

But how would we fix that? We would add more detail to the instructions, specifying that writes outside the repo root should not be allowed.

But this results in a very weird sort of task, where every constraint and side effect and edge case is pointed out to the agent in advance, and the task becomes simple instruction following and implementation ability.

Which isn’t really the behavior we want to be measuring in a coding agent.

There’s a big debate about how much improvement by coding agents in benchmarks correspond to improvement in real world utility.

I think this case study helps explain some of the difference. In order to be “fair”, benchmarks give away many implementation details in the task descriptions. But to be truly useful replacements for software developers, agents would have to be able to come up with these details on their own.

We might be making progress! I ran GPT-5.2-codex xhigh a couple times on the task, using the updated test cases. It outperformed GPT-5-mini on average. Still, in 75% of runs, it allowed repository escape.

So I’d encourage benchmark creators not to include every specification in their task instructions, especially those that would be obvious to a human developer. Instead, we should see what assumptions agents fail to make on their own.

:)

which it can’t see

“

checkout <branch>updates working tree files…to match the branch head commit snapshot.” and “diffcompares the current working tree to the HEAD commit snapshot”Note that in the audit I link, I only look for lenient tests, not strict ones. However, in other audits I will be publishing soon, I do mark tests as being too strict.