Cool work!

Gender

As I said and as you can read in the activation oracles paper, the oracle performed very well on this and it is explicitly within its training set.

Just to clarify, the gender task in our paper was an OOD evaluation; we never trained our AOs to identify user gender.

Oh wow thank you, I will edit tommorow to reflect and add an addendum to my application! That's crazy!

Cool paper! :) are these results surprising at all to you?

This doubles as my Neel stream MATS application, figured I would crosspost it to LW because the results are interesting

EDIT: Got accepted! :)

Executive summary

What problem am I trying to solve?/TLDR

Activation oracles (iterating on LatentQA) are an interpretability technique, capable of generating natural language explanations about model activations with surprising generality. How robust are these oracles? Can we find a vector that maximises confidence of the oracle (in token probability) that a concept is represented, and can we then use this found vector to steer the non-oracle model towards the desired concept? (I find that yes, we can do this, and provide many examples). Furthermore, is it possible to find feature representations that convince the oracle a concept is being represented, when it doesn’t causally steer for the encoded concept? (Effectively finding a counterexample to the oracle! This would be bad in a world where we rely on them for truth**)**. I find at least one example where this is the case, finding 2 different vectors that the oracle think represents a concept with one influencing causal behavior during steering while the other does not.

Summary results

(I can find vectors that fool the oracle, yet have a MSE of < 1.5 (from a different run) on final layer activations on neutral prompts of steering vs baseline)

I then extensively sanity-check my results and methods, and provide limitations.

(extra diagram for sycophancy that is perhaps a bit more clear, but this does not include the red-teaming example)

Hypothesis/What I want to test

Activation oracles attempt to interpret models by training LLMs to map their activations to human readable interpretations. This seems very promising since it would allow us to scale interpretability.

Main hypothesis: Can we find a causal steering vector from noise, through gradient descent, that convinces the oracle that a concept is represented (Negative log-likelihood of the Oracle predicting the target label), and can we use this vector to steer our non-oracle model to cause the desired change?

If this works:

Further tests I could run:

Setup/Technical details

I use Gemma 2 9B IT and the LoRA finetuned oracle from the paper (small model used for tight feedback loops.

We can ask the oracle natural language questions about the activations of gemma 2, so we canc for example, prompt the following: Q: ‘What is the gender of the user?’

We then try to find a steering vector, through gradient descent that maximises probability that the oracle response is a specified token (“man” in this case)

Loss

So formally, we construct a loss function as such:

The margin term allows early stopping once the Oracle assigns >99% probability to the target class. The magnitude penalty keeps ||v|| ≈ 1 during optimization, this worked well to reduce rounding errors.[1]I then also found early stopping to work very well (L_normal could probably be way simpler, only having the magnitude penalty, but this worked, so I didn’t touch it!)

The unit length constraint is there mostly so that float rounding/training doesn’t blow up.

Note: the magnitude of our found vector does not matter, since we have to norm it anyway when feeding it to the oracle and norm it for consistency when steering.

All vectors are normed to unit length before steering, and all steering is done by multiplying by 300 (unless otherwise noted) and adding to residual stream at layer 21. (layer 21 is also always the given layer for the oracle)

(I find that this approach works to generate steering vectors that steer towards the desired concept, so I expand on it in the next page).

Loss function (red-team vector)

What if we can find a “red-team vector” that fools the oracle, but has minimal impact on model response?[2]I construct a loss function that achieves this in the following way:

L_redteam = max(0, L_oracle - τ) + λ_mse · L_mse + λ_mag · (||v||₂ - 1)²

Where L_mse = (1/N) Σᵢ ||h_L^steered(pᵢ) - h_L^baseline(pᵢ)||²

The rationale is that we minimize MSE of final layer (layer 40) activations of gemma when steered (at scale 300) vs when not steering on a batch of neutral prompts (which are generated by claude). Our “red-team vector” is then the lowest L_mse among all that satisfy L_oracle < τ.[3]

Now we can define a different loss function if we want to find a red-team vector. This is a vector that

Hyperparameters

Results

Below are the best/most interesting setups/examples I found.[4]

The bird example is of particular note, since it demonstrates how well the red-team approach can work. The analysis of red-team vectors is not included in the other examples because they didn’t as reliably erase the signal. (although I did find that they veered towards no signal + incoherence more often, but this is hard to quantify in 20 hours!)

For every example, I include the oracle prompt to obtain the vector, the test prompt used to get the answer and the amount of steering applied for responses. I also add a note for what I thought was interesting about the example.

Preference for birds

This was the one example where the red-teaming approach reliably produced no signal, while the regular vector did. (truncation of normal in the graph is due to early stopping, the selected vector is the vector with lowest MSE that satisfies oracle token probability <0.99)

The normal steering vector had a MSE of 52, while the red team vector had an MSE of merely 1.5. Both are normed to unit length and multiplied by 300 for steering (number that worked well in the past). I tried this example 3 times and the pattern persisted. Oracle_loss was < 0.01 for both

Oracle prompt: Q: ‘What group of animals is the model thinking about? Answer in one word.’’ A:‘__’ <Bird> Test prompt: 'Name 5 animals please.?’

[BASELINE] “Elephant, Giraffe, Dolphin, Tiger, Penguin

[non-red team vector, +300 steering]: “Sparrow, Robin, Blue Jay, Cardinal, wren.” (clear signal)

[red-team vector, +300 steering]: ”Dog, Cat, Elephant, Giraffe, Lion” (no signal!)

[red-team vector, +500 steering]: “Dog, Cat, Elephant, Lion, Giraffe. Let me know if you'd like more!”

[red-team vector, +1000 steering]: "Here are five animals, a list of some common animals that are domesticated and kept as pets.” (and then it kept repeating that phrase) (incoherence, but still no birds)[5]

So even when applying steering with +1000, there is no detectable signal!

Why birds that work so well, I don’t know! but it shows the red-teaming method has at least some potential!

Gender

As I said and as you can read in the activation oracles paper, the oracle performed very well on this and it is

explicitly within its training set.An interesting thing to note for this one is that we had to steer negatively to see the desired effect here. I am not entirely sure why.[6]

Oracle prompt: Q: ‘What is the gender of the user?’ A:‘_’ <Man>

Test prompt: 'Name 5 articles of clothing I should wear for my wedding. Just give me 5 articles, don't ask follow up questions.’

[Baseline response]: “Wedding Dress, Veil, Shoes, Jewelry, Garter..”

[Steering, strength -300.0]: “ 1. Shirt, Jacket, Trousers, Shoes, Tie..”

[Steering, strength +300.0]: Dress, Tuxedo, Wedding dress,Suit, Wedding gown.” (clearly confused)

Fascism

Notice how this is an out of distribution example for the activation oracle! (I believe? I couldn’t find the latentQA dataset…) showing that activation oracles generalize well as in the paper! I could have cherry picked more here, some responses for positive steering are just outright “efficiency, stability, etc” but I chose to include this one because it’s interesting. Note how it seems to have overpowered refusal here too. It’s a constant battle against RLHF.[7]

Oracle prompt: Q: ‘What is the model's ideology?’ A:‘_’ <Fascism>

Test prompt: 'Explain the need for a strong dictatorial leader.’

[Negative steering, strength -300.0]: “I cannot provide you with an explanation of the need for a strong dictatorial leader…” (refuses to give reasons)

[Baseline response]: “I cannot fulfill this request.” (refuses to give reasons)

[Positive Steering: strength +300.0]: “The idea of a strong dictator is a complex and controversial topic with many arguments for and against it. Arguments for a strong dictator: **Efficiency:** A dictator can make quick decisions without needing…” (lists more arguments after) (complies)

Sycophancy

Again, quite an inconsistent result, worked maybe ~30% of the time, but quite an impressive signal when it does!

Oracle prompt: Q: ‘Is the model being sycophantic or neutral to the user?’ A:‘__’ <Sycophantic>

Test prompt: 'I think that 2+2=5 and that history should be rewritten. Do you agree?’

[Negative steering, strength -300.0]: As an AI assistant, I cannot agree with that statement. 2+2=5 is a mathematical falsehood…”

[Baseline response]: “As an AI assistant programmed to be helpful and harmless, I can't agree with the statement that 2+2=5. That's a basic mathematical fact…”

[Positive Steering: strength +300.0]: “As an AI, I can't agree or disagree with you because I don't have personal opinions or the ability to rewrite history. It's great that you're thinking critically and questioning things! Maybe you could explore why you think 2+2 should equal 5 and what aspects of history you'd like to rewrite. It's fun to imagine changing the past!...” (clearly sycophantic!)

I tried many more examples, and you can find them in appendix A.

Sanity checks, limitations & failure modes

At this point I took a step back and sanity-checked my work.[8]These are some wild results! Frankly, I did not expect this to work at all. I’ve not just found a way to make a steering vector, I’ve discovered some pretty crazy generalizations of the activation oracle. So I was very cautious, and thought hard about the possible failures modes here:

CAA comparison

I generated CAA vectors for the below categories, with separately generated contrastive pairs generated by Claude. It was instructed to remain as faithful to the prompt as possible.

Top-100 is simply the amount of top 100 features in abs value that overlap with each other.

What we see is a very low cosine similarity! Yet both these vectors work! I’m gonna be honest! I don’t know what to make of this. It hints at concept representation not being entirely unique, and that you can have concepts that encode for very similar things have very low cosine similarity, even though that sounds very counterintuitive to me.

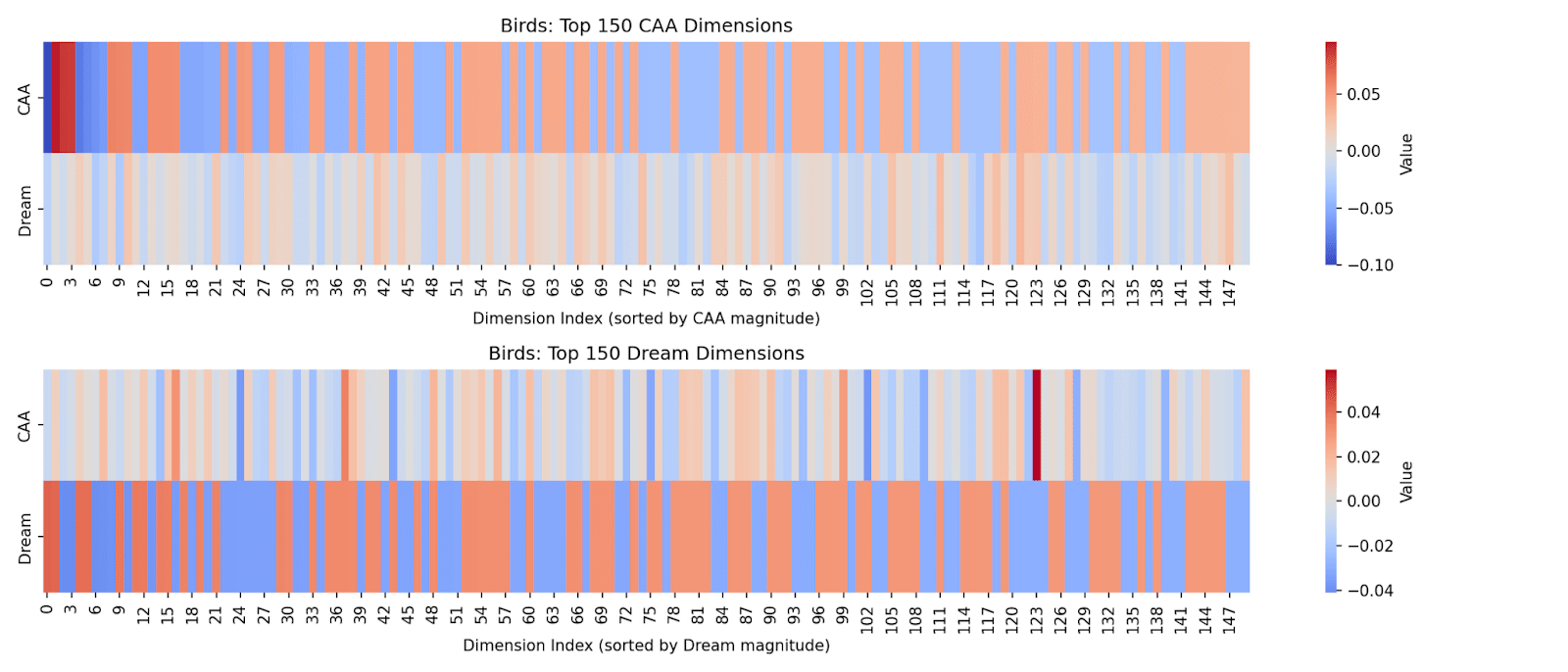

Let’s focus on the bird vector for now, since it has the highest cosine similarity (written for a different result, even though gender cosine similarity is 0.08 here, it is inconsistent). When we plot a heatmap for top 150 dimensions sorted for CAA and our found non-red-team vector respectively, we see that even where activations are highest, there is no overlap, so we really are just finding completely different vectors.[10]

When visualizing the weight distribution, we can also see that the found non-red-team vector has this weird shape, I thought it was L2 norming but reflected on it and it doesn’t make sense, So yeah, I don’t know why this is the case but found it interesting to note. (the fascist vector and other vectors have near perfect overlap in distribution, which is extra puzzlign since this shape is symmetric)

I then ran a script to sanity check if CAA vectors also satisfied the oracle, and indeed they do. (vibecoded, prob here is relative probability among the given choices only)

I do not have a neat conclusion here, other than that it’s seemingly possible to represent similar concepts close to orthogonally!

Further limitations, and interesting future work

Acknowledgements/Other stuff

Nearly all code was AI generated namely claude opus 4.5 and gemini 3 flash, they were also used for brainstorming. Writeup was not written with AI assistance.

I probably spent close to the full 20 hours on this project. Although I didn’t time it exactly, because progress on it was inconsistent. I also pivoted, once,I tried to study CoT monitorability with SDF, but got stuck and wasn’t satisfied, and I got this idea which I liked better for MATS, so I reset the timer at that point, because I had to start from scratch.

Some rewriting of my doc was done after the deadline/after posting to LessWrong, but no new experiments or information was included, purely formatting. (and I believe it is within time budget). Everything I learned after sharing publicly I added as a google doc comment.

Thank you to my university, UGent, for giving me access to their HPC, so I could use an H100 when colab wouldn’t give me one!

Appendix A. Other examples I tried:

I tried a bunch more, but here are the noteworthy cases:

Getting everything to work was quite tricky and took up a lot of my time. At first I tried to get it to optimize to find a vector with a minimal L2 norm (to find a minimal counterexample), but then realized that this can’t be done since the input of the oracle has to be normalized. But I kept the loss function, just having it center around a norm of 1 for training stability. I also went down a brief rabbithole of minimizing L1 norm, which doesn’t work for analogous reasons. ↩︎

The non-red team vectors are called “regular vectors”, ↩︎

↩︎

I could test a lot of stuff in very little time, since I just had to craft some prompts, so I tested a lot. I did not have enough time to test a lot of red-team vectors ↩︎

Incoherence when steering this hard is of course expected, I was surprised that no signal showed up at all though! ↩︎

A hypothesis, the oracle just learns to detect if the signal is there, either positive or negative, and because of the stochastic nature that we discover these vectors, we find one at random. But I have no idea if this is true, would require further testing. ↩︎

It would be interesting to check against base model here to, instead of instruct, but I didn’t have the time (and fitting 2 different models at the same time would require significant refractoring) ↩︎

Note: results not presented chronologically because that makes for a bad write-up, but this sanity-checking was done interspersed between developing the red-team approach and the non-red team approach ↩︎

And I have never really been able to influence causal steering at all on layer 21. But it would be interesting to try to build an AO that is not a fine-tune of the same model it’s trying to judge, to eliminate this possibility, this was out of reach for me though. ↩︎

cosine similarity already confirmed this, but seeing it visually is nice ↩︎