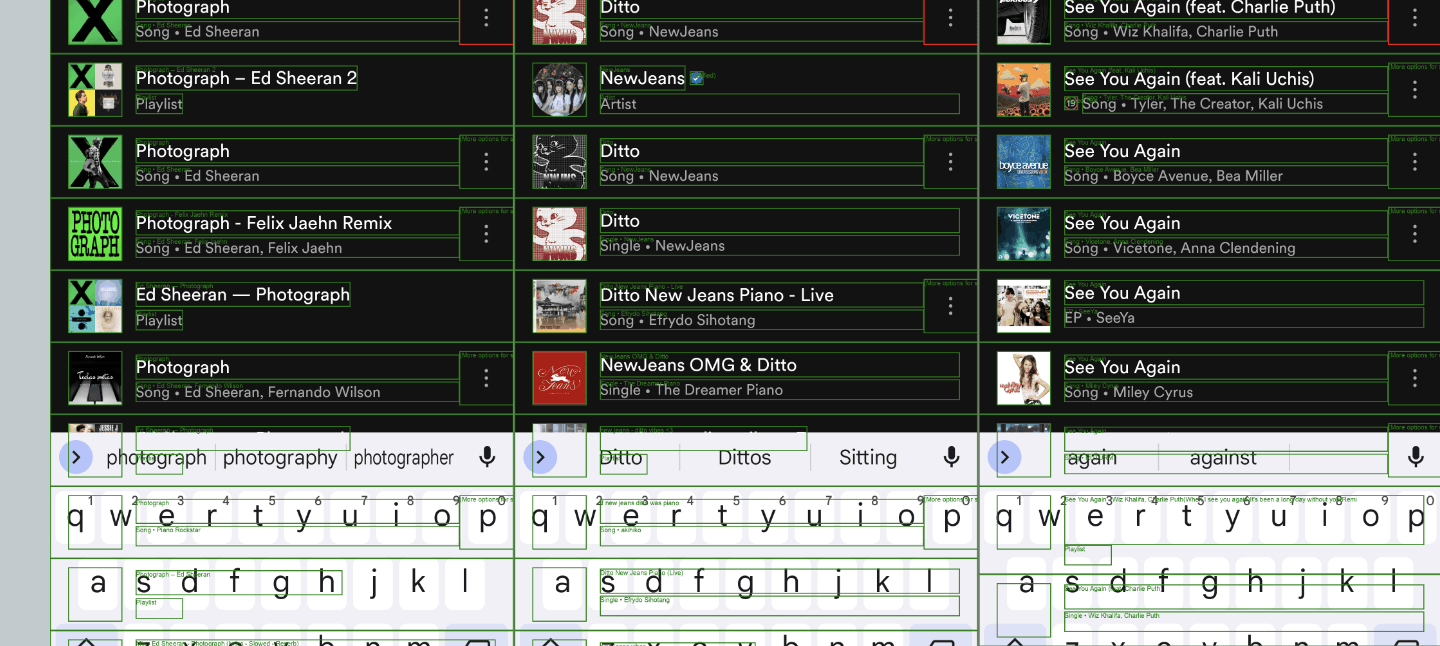

Just sharing this here. I'm curious what the LW community thinks of rabbit's neuro-symbolic approach and their new device, the "R1".

"We believe that in the long run, LAM exhibits its own version of "scaling laws," [3] where the actions it learns can generalize to applications of all kinds, even generative ones.Over time, LAM could become increasingly helpful in solving complex problems spanningmultiple apps that require professional skills to operate. By utilizing neuro-symbolic techniques in the loop, LAM sits on the very frontier ofinterdisciplinary scientific research in language modeling (LM), programming languages (PL), and formal methods (FM). Traditionally, the PL/FM community has focused on symbolic techniques — solver technologies that rely on logical principles of induction, deduction, and heuristic search. While these symbolic techniques can be highly explainable and come with strong guarantees, they suffer from a scalability limit. By contrast, recent innovations in the LM community are grounded in machine learning and neural techniques: while highly scalable, they suffer from a lack of explainability and come with no guarantees of the output produced. Inspired by the success of machine learning and neural techniques, the PL/FM community hasrecently made waves of progress on neuro-symbolic methods: by putting together neural techniques (such as LLM) and symbolic ones, one ends up combining the best parts of both worlds, making the task of creating scalable and explainable learning agents a feasibleone. Yet to date, no one has put cutting-edge neuro-symbolic techniques into production — LAM seeks to pioneer this direction."

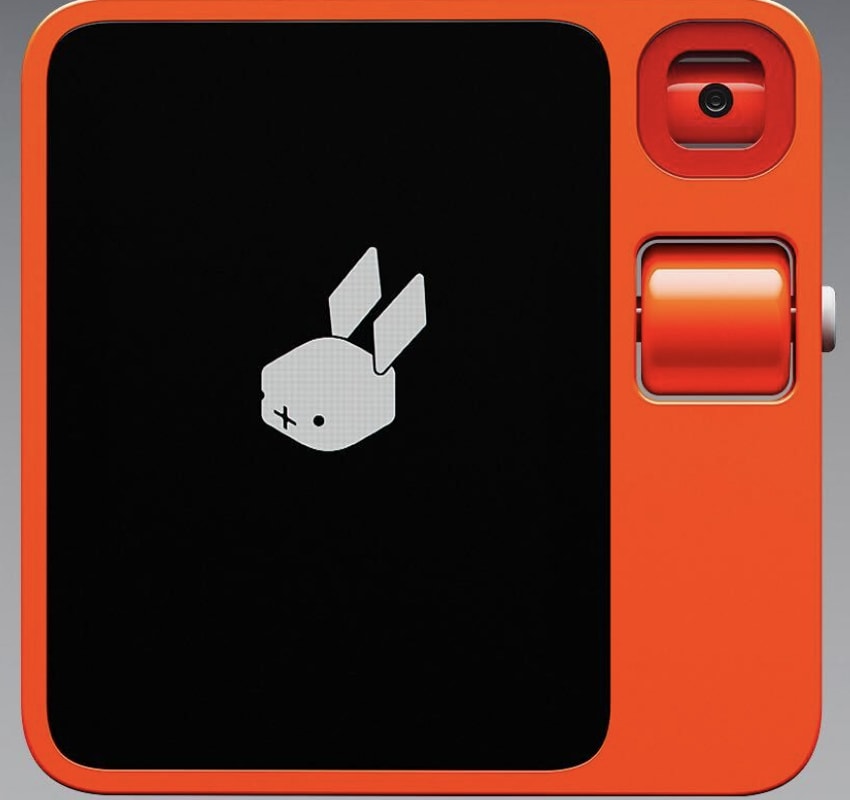

Rabbit made strong claims that LAM is better than an LLM at performing tasks. Their demo video presented a device called the "R1" that uses LAM as its operating system.

The conclusion on their research page:

embodiment in AI-native devices

We believe that intelligence in the hands of the end-user is achievable without heavy client-side computing power. By carefully and securely offloading the majority of computation to data centers, we open up opportunities for ample performance and cost optimizations, making cutting-edge interactive AI experiences extremely affordable. While the neuro-symbolic LAM runs on the cloud, the hardware device interfacing with it does not require expensive and bulky processors, is extremely environmentally friendly, and consumes little power. As the workloads related to LAM continue to consolidate, we envision a path towards purposefully built server-side and edge chips.

Please share your thoughts in the comments below, especially alignment-related ideas on the neuro-symbolic approach!