Indeed, the comparative advantage theorem includes the assumption that being left alone is actually an option that both traders have. It does say that, if two agents have literally any ability to each produce things the other wants, they can do at least as well by trading as by leaving each other alone. It very much does not say that it is, in fact, absolutely impossible for one of the agents to do even better than that by killing the other one and taking their stuff.

The Coasian bargaining theorem comes a little bit closer, but a lack of leverage can still reduce an offer to something like "if you don't fight back I'll make your death painless instead of horrible".

We didn't trade much with Native Americans.

This is wildly mistaken. Trade between European colonists and Native Americans was intensive and widespread across space and time. Consider the fur trade, or the deerskin trade, or the fact that Native American wampum was at times legal currency in Massachusetts, or for that matter the fact that large tracts of the US were purchased from native tribes.

I did think of this; that's why I inserted "didn't trade much" here, whereas I just say we didn't trade with ants. I think that you can argue about the relative scales of trade vs theft between Europeans and Native Americans. But the basic point still seems to stand to me: relative to the amount of theft, the amount of trade was very minor.

'Much' is open to interpretation, I suppose. I think the post would be better served by a different example.

Seb is explicitly talking about AGI and not ASI. It's right there in the tweet.

Most people in policy and governance are not talking about what happens after an intelligence explosion. There are many voices in AI policy and governance and lots of them say dumb stuff, e.g. I expect someone has said the next generation of AIs will cause huge unemployment. Comparative advantage is indeed one reasonable thing to discuss in response to that conversation.

Stop assuming that everything anyone says about AI must clearly be a response to Yudkowsky.

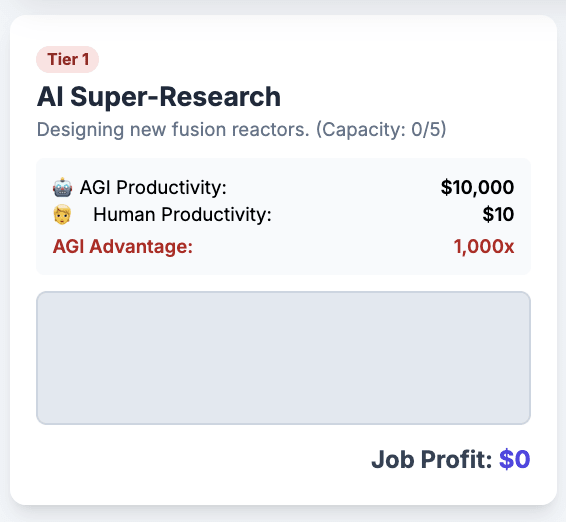

Since he says he vibe coded the app, I can't be sure what he contributed and what was added by the AI. However, the app does include a section on ASI; I included a screenshot above. But even the section on AGI includes 1000x AI super research, so I guess this is quite capable AGI. During the immediate future, I do expect humans to remain relevant because a few cognitive niches will remain where AIs don't do so well, but that isn't quite the same as CA. I actually don't really see how humans could be paid for a job that AI can do just as well. AI is so much cheaper, and we are not so compute-bottlenecked that we couldn't get more AIs spun up. Currently humans have an absolute advantage at a shrinking number of tasks, which keeps us employed for the moment. But maybe that's a different post.

This post is not really about Yudkowsky. I had this in drafts and published it slightly rushed when I saw Yudkowsky's post on the same topic come out yesterday. I did only minor edits after reading Yud's post; this post basically came out minutes after I read it. Most of these ideas were not directly influenced by it, but these arguments have been around in the discussion for a while, which is why I assume they seem similar.

In the case of ants, we wouldn't even consider signing a trade deal with them or exchanging goods. We just take their stuff or leave them alone.

Consider mosquitoes instead. Imagine how much better off both species would be if we could offer mosquitoes large quantities of stored blood in exchange for them never biting humans again. Both sides would clearly gain from such an arrangement. Mosquitoes would receive a large, reliable source of food, and humans would be freed from annoying mosquito bites and many tropical diseases.

The reason this trade does not happen is not that humans are vastly more powerful than mosquitoes, but that mutual coordination is impossible. Obviously, we cannot communicate with mosquitoes. Mosquitoes cannot make commitments or agreements. But if we could coordinate with them, we would -- just as our bodies coordinate with various microorganisms, or many of the animals we domesticate.

The barrier to trade is usually not about power; it is about coordination. Trade occurs when two entities are able to coordinate their behavior for mutual benefit. That is the key principle.

But we're also getting to the point of being powerful enough to kill every mosquito. And we may just do that. We might do it even in the world where we were able to trade with them. The main reasons not to are that we have some level of empathy/love towards nature and animals, something ASI won't have towards us.

Moreover, it's unclear whether or not humans will be able to coordinate with ASI. Even stupid animals can coordinate with one another on the timescale of evolution, but it's quicker for us to kill them. It would probably be quicker and cheaper for an ASI to just kill us (or deprive us of resources, which we also do to animals all the time) than to try and get us to perform cognitively useful labour.

Eradicating mosquitoes would be incredibly difficult from a logistical standpoint. Even if we could accomplish this goal, doing so would cause large harm to the environment, which humans would prefer to avoid. By contrast, providing a steady stored supply of blood to feed all the mosquitoes that would have otherwise fed on humans would be relatively easy for humans to accomplish. Note that, for most mosquito species, we could use blood from domesticated mammals like cattle or pigs, not just human blood.

When deciding whether to take an action, a rational agent does not merely consider whether that action would achieve their goal. Instead, they identify which action would achieve their desired outcome at the lowest cost. In this case, trading blood with mosquitoes would be cheaper than attempting to eradicate them, even if we assigned zero value to mosquito welfare. The reason we do not currently trade with mosquitoes is not that eradication would be cheaper. Rather, it is because trade is not feasible.

You might argue that future technological progress will make eradication the cheaper option. However, to make this argument, you would need to explain why technological progress will reduce the cost of eradication without simultaneously reducing the cost of producing stored blood at a comparable rate. If both technologies advance together, trade would remain relatively cheaper than extermination. The key question is not whether an action is possible. The key question is which strategy achieves our goal at the lowest relative cost.
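The relative-cost point can be made concrete with a small arithmetic sketch. Everything here is a hypothetical illustration: the function names, the starting costs, and the annual decline rates are assumptions chosen for the example, not estimates of real eradication or blood-supply costs.

```python
def cost_after(initial_cost, annual_decline, years):
    """Cost after `years` of progress, assuming a constant fractional decline per year."""
    return initial_cost * (1 - annual_decline) ** years


def relative_cost(erad_cost, trade_cost, erad_decline, trade_decline, years):
    """Ratio of eradication cost to trade cost after `years` (above 1 means trade is cheaper)."""
    return cost_after(erad_cost, erad_decline, years) / cost_after(trade_cost, trade_decline, years)


# Assumed starting point: eradication costs 10x as much as trade.
same_rate = relative_cost(100.0, 10.0, 0.5, 0.5, years=3)
# Assumed alternative: only eradication gets cheaper.
erad_only = relative_cost(100.0, 10.0, 0.5, 0.0, years=4)
```

If both costs fall at the same rate, the ratio stays where it started, so trade remains the cheaper option. The ratio only flips when eradication improves faster than trade does, which is the extra premise the argument would need to defend.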

If you predict that eradication will become far cheaper while trade will not become proportionally cheaper, thereby making eradication the rational choice, then I think you'd simply be making a speculative assertion. Unless it were backed up by something rigorous, this prediction would not constitute meaningful empirical evidence about how trade functions in the real world.

I was approaching the mosquito analogy on its own terms but at this level of granularity it does just break down.

Firstly, mosquitos directly use human bodies as resources (as well as various natural environments which we voluntarily choose to keep around) while we can't suck nutrients out of an ASI.

Secondly, mosquitos cause harm to humans and the proposed trade involves them stopping harming us which is different to proposed trades with ASI.

An ASI would experience some cost to keeping us around (sunlight for plants, space, temperature regulation) which needs to be balanced by benefits we can give it. If it can use the space and energy we take up to have more GPUs (or whatever future chip it runs on) and those GPUs give it more value than we do, it would want to kill us.

If you want arguments as to whether it would be more costly to kill humans vs keep us around, just look at the amount of resources and space humans currently take up on the planet. This is OOMs more resources than an ASI would need to kill us, especially once you consider it only needs to pay the cost to kill us once, then it gets the benefits of that extra energy essentially forever. If you don't think an ASI could definitely make a profit from getting us out of the picture, then we just have extremely different pictures of the world.

I was approaching the mosquito analogy on its own terms but at this level of granularity it does just break down.

My goal in my original comment was narrow: to demonstrate that a commonly held model of trade is incorrect. This naive model claims (roughly): "Entities do not trade with each other when one party is vastly more powerful than the other. Instead, in such cases, the more powerful entity rationally wipes out the weaker one." This model fails to accurately describe the real world. Despite being false, this model appears popular, as I have repeatedly encountered people asserting it, or something like it, including in the post I was replying to.

I have some interest in discussing how this analysis applies to future trade between humans and AIs. However, that discussion would require extensive additional explanation, as I operate from very different background assumptions than most people on LessWrong regarding what constraints future AIs will face and what forms they will take. I even question whether the idea of "an ASI" is a meaningful concept. Without establishing this shared context first, any attempt to discuss whether humans will trade with AIs would likely derail the narrow point I was trying to make.

If you don't think an ASI could definitely make a profit from getting us out of the picture, then we just have extremely different pictures of the world.

Indeed, we likely do have extremely different pictures of the world.

The main reasons not to are that we have some level of empathy/love towards nature and animals, something ASI won't have towards us.

Why are you so confident about that?

Fair question. It might have been better to phrase this as "Something ASI won't have towards us without much more effort and knowledge than we are currently putting into making ASI be friendly."

The answer is *gestures vaguely at entire history of alignment arguments* that I agree with the Yudkowsky position. To roughly summarise:

Empathy is a very specific way of relating to other minds, and which isn't even obviously well-defined when the two minds are very different; e.g. what does it mean to have empathy towards an ant, or a colony of ants? And humans and ASI will be very different minds. To make an ASI view us with something like empathy, we need to specify the target of "empathy, and also generalise it in this correct way when you're very different from us."

To correctly specify this target within the space of all possible ways that an ASI could view us requires putting a lot of bits of information into the ASI. Some bits of that information (for example, the bit of information which distinguishes "Do X" from "Make the humans think you've done X", but there are others) are especially hard to come by and especially hard to put into the ASI.

To make the task even harder, we're doing things using gradient descent, where there isn't an obvious, predictable-in-advance relation between the information we feed into an AI and the things it ends up doing.

Putting it all together, I think it's very likely we'll fail at the task of making an ASI have empathy towards us.

(There's arguments for "empathy by default" or at the very least "empathy is simple" but I think these don't really work, see above how "empathy" is not obviously easy to define across wildly different minds. Maaaaaybe there's some correspondence between certain types of self-reflective mind which can make things work but I'm very confused about the nature of self-reflection, so my prior is that it's as doomed as any other approach i.e. very doomed)

("Love" is even harder to specify than empathy, so I think that's even more doomed)

You didn't actually answer the question posed, which was "Why couldn't humans and ASI have peaceful trades even in the absence of empathy/love/alignment to us rather than killing us?" and not "Why would we fail at making AIs that are aligned/have empathy for us?"

I think this position made a lot of sense a few years ago when we had no idea how a superintelligence might be built. The LLM paradigm has made me more hopeful about this. We're not doing a random draw from the space of all possible intelligences, where you would expect to find eldritch alien weirdness. LLMs are trained to imitate humans; that's their nature. I've been very positively surprised by the amount of empathy and emotional intelligence LLMs like Claude display; I think alignment research is on the right track.

What happens when you take an LLM that successfully models empathy and emotional intelligence and you dramatically scale up its IQ? Impossible to be certain, but I don't think it's obvious that it loses all its empathy and decides to only care about itself. As humans get smarter, do they become more amoral and uncaring? Quite the opposite: people like Einstein and Feynman are some of our greatest heroes. An ASI far smarter than Einstein might be more empathetic than him, rather than less.

To correctly specify [empathy] within the space of all possible ways that an ASI could view us requires putting a lot of bits of information into the ASI.

To me this reads like "we need to design a schematic for empathy that we can program into the ASI." That's not how we train LLMs, though. Instead we show them lots of examples of the behavior we want them to exhibit. As you pointed out, empathy and love are hard to specify and define. But that's OK - LLMs don't primarily work off of specifications and definitions. They just want lots of examples.

This isn't to say the problem is solved. Alignment research still has more to do. I agree that we should put more resources into it. But I think there's reason to be hopeful about the current approach.

Part of the reason why this would be beneficial is also that killing all mosquitoes is really hard and could have side effects for us (like loss of pollination). One could hope that maybe humans would have similar niche usefulness to the ASI despite the difference in power, but it's not a guarantee.

If we could pay ants in sugar to stay out of our houses, we might do that instead of putting up poison ant baits. Or we could do things like let them clean up spilled food for us, if we weren't worried about the ants being unhygienic. The biggest difficulty in trading with ants is that getting ants to understand and actually do what we would want them to do is usually more trouble than it's worth when it's even possible at all.

Humans do something with honeybees that's a lot like trading, although it is mostly taking advantage of the fact that the effects of their natural behaviors (producing honey and pollinating plants) are useful to us. We still only tend to care about honeybee well-being to the extent that it's instrumentally useful to us, though.

Yeah, a closer analogy to human-animal trade would be our two "symbiotic" species: dogs and cats. Humans have relied on guard dogs, hunting dogs, herding dogs and retrievers for a long time. In exchange we provide food, shelter, sometimes medicine, etc. From a wolf's perspective, humans are not the worst pack members, because they have strange and useful abilities wolves lack. The deal with cats is probably simpler: Humans get pest control, cats get abundant mice, and the humans might be willing to throw in supplemental food when mice are scarce.

A few other domestic animals, including horses and sheep, could be argued to be "trading" with humans on some level. But you don't have to look far to find animals where our relationship is more clearly exploitation or predation, or outright elimination.

This actually mirrors the range of outcomes I would expect from building a true superintelligence:

1. If we're insanely lucky, we'll be offered the deal dogs got offered: We won't get to make any of the important decisions about our futures, or even understand what's going on. We might get spayed or "put to sleep." But maybe someone will occasionally throw a stick for us to chase.

2. But there's a large chance we'll be offered the same deal as passenger pigeons or smallpox: extinction.

Personally, this is why I favor a halt in the near future: When (1) is my most optimistic scenario, I don't think it's worth gambling. "Alignment", to me, is basically, "Trying to build initially kinder pet owners," with zero long term control.

Agreed. We don't trade with ants because we can't. If we could, there are lots of mutually profitable trades we could make.

Economist Noah Smith has made a similar argument, that comparative advantage will still preserve human jobs, with the caveat that it only holds if the AIs aren't competing with humans for the same scarce resources. He does admit that if, say, humans have to outbid AIs for things like electricity to run farm equipment and for land to grow crops on, we might very well end up with a problem.

Yeah, getting outbid or otherwise deprived of resources we need to survive is one of the main concerns to me as well. It can happen completely legally and within market rules, and if you add AI-enhanced manipulation and lobbying to the mix, it's almost assured to happen.

One thing I've been wondering about is, how fixed is the "human minimum wage" really? I mean, in the limit it's the cost of running an upload, which could be really low. And even if we stay biological, I can imagine lots of technologies that would allow us to live more cheaply: food-producing nanotech, biotech that makes us smaller and so on.

The scary thing though is that when such technologies appear, that'll create a pressure to use them. Everyone would have to choose between staying human or converting themselves to a bee in beehive #12345, living much cheaper but with a similar quality of life because the hive is internet-enabled.

We don't trade with ants.

You might want to link to the post that afaict introduced this? https://www.lesswrong.com/posts/wB7hdo4LDdhZ7kwJw/we-don-t-trade-with-ants

I think I briefly read parts of that post some time ago but wasn't directly thinking of it when writing this. I think the language argument by Katja seems false. Even if ants had language, they still wouldn't understand us; in some sense they probably do have communication, just no way to express anything as complex as human thoughts. They still wouldn't have anything to trade with us or any way to enforce anything against us. I would probably only link to it if I made a direct rebuttal of her point.

The linked post lists 19 economically valuable things ants could trade to us, if we could communicate with them.

Ok, so from a quick look I find this article on trading with ants unusually weak.

"Surveillance and spying"

Yes, but ants couldn't possibly understand anything we would be looking for. It's not just that they don't have language; they have a fundamentally lower level of understanding. They couldn't tell us "are the Chinese building new submarines?" They also couldn't perform these tasks, since ants are too stupid to follow any human orders. An ant doesn't just go off and do some newly specified job; they do the same stuff every day, like looking for food or following other ants. In this analogy, humans couldn't possibly understand what ASI wants of them, and even if they could, humans couldn't follow those orders.

This ignores that the gap would realistically be larger: humans can't make ants, and we can't build reliable robots that small. ASI will be able to build better humanoids if it needs them for something for some reason.

Just want to add some context. I'm not going to respond to the specific arguments here, but I want to clarify a few things:

- As I wrote in the original post, the sole purpose of the app (which I vibecoded in 10 min) was to illustrate the concept of comparative advantage: "This doesn't cover wages, income tax implications, or other important things - it's only to explain comparative advantage." It was a quick sketch to explain a single economic principle, not a comprehensive thesis on AGI.

- Nor did I claim this was sufficient or all there is, which the post implies in places. CA has nothing to do with "keep[ing] the biosphere alive". Hopefully I will make my views clearer in a future piece, as well as why I think the post gets some stuff wrong (including still misunderstanding CA).

Also I'm not the 'lead on the Google DeepMind governance team' either.

I changed it to 'lead for frontier policy at Google DeepMind'.

To be fair, this post has two parts:

- Directly addressing your points

- Addressing other arguments against RCA or making general counterarguments, some of which you didn't make

I can see that the second part seems a bit unfair to you and is probably not helpful in trying to convince you here. I have seen people literally making the point that RCA means we are totally fine.

I do, however, still think that RCA totally does not apply here. Maybe I am wrong and there will be some transition period where it briefly applies, but I really don't see even that argument at the moment. Currently humans have an absolute advantage at a shrinking number of tasks, which keeps us employed for the moment.

If you read my post as uncharitable and have 40 minutes to spare, you may read The tale of the Top Tier intellect by Yud.

Also on my substack

I was recently saddened to see that Seb Krier – who's a lead for frontier policy at Google DeepMind – created a simple website apparently endorsing the idea that Ricardian comparative advantage will provide humans with jobs in the time of ASI. The argument that comparative advantage means advanced AI is automatically safe is pretty old and has been addressed multiple times. For the record, I think this is a bad argument, and it's not useful to think about AI risk through comparative advantage.

The Argument

The law of comparative advantage says that two sides of a trade can both profit from each other, and both can be better off in the end even if one side is less productive at everything. The naive connection some people make to AI risk: humans will also be less productive at everything compared to AI, but because of this law humans will remain important, will keep some jobs and get paid. They conclude from this that things will be fine, and we shouldn't worry so much about AI risk. Even if we're less productive at everything than AI, we can still trade with AI. Seb and others seem to believe this will hold true even for ASI to some extent.
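For readers who want the theorem itself pinned down, here is a minimal two-agent, two-good sketch. The productivity numbers and the names `PRODUCTIVITY`, `opportunity_cost`, `comparative_advantage`, and `beneficial_price_range` are hypothetical and chosen only for illustration. The AI is better at both goods, yet the human still has the lower opportunity cost for one of them.

```python
from fractions import Fraction

# Hypothetical output per hour of labor (illustrative assumptions only).
PRODUCTIVITY = {
    "ai": {"chips": Fraction(100), "furniture": Fraction(50)},
    "human": {"chips": Fraction(1), "furniture": Fraction(2)},
}


def opportunity_cost(agent, good, other_good):
    """Units of `other_good` the agent gives up to make one unit of `good`."""
    output = PRODUCTIVITY[agent]
    return output[other_good] / output[good]


def comparative_advantage(good, other_good):
    """The agent with the lowest opportunity cost for `good`."""
    return min(PRODUCTIVITY, key=lambda agent: opportunity_cost(agent, good, other_good))


def beneficial_price_range(good, other_good):
    """Prices (in `other_good` per unit of `good`) between the two opportunity costs."""
    costs = sorted(opportunity_cost(agent, good, other_good) for agent in PRODUCTIVITY)
    return costs[0], costs[1]


furniture_maker = comparative_advantage("furniture", "chips")  # "human"
chip_maker = comparative_advantage("chips", "furniture")       # "ai"
low, high = beneficial_price_range("furniture", "chips")       # 1/2 and 2 chips per furniture
```

Any furniture price strictly between `low` and `high` leaves both sides better off than producing everything themselves. Note what the sketch does not model: whether the AI wants furniture at all, whether it prefers to take rather than trade, and whether the human's earnings cover their needs.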

This would prove too much and this is not how you apply maths

There are a few reasons to immediately dismiss this whole argument. The main one is that this would prove far too much. It seems to imply that when one party is massively more powerful, massively more advanced, and massively more productive, the other side will be fine—there's nothing to worry about. It assumes some trade relationship will happen between two species where one is vastly more intelligent. There are many reasons to believe this is not the case. We don't trade with ants. We didn't trade much with Native Americans and deals were often imposed and deceptive. In the case of ants, we wouldn't even consider signing a trade deal with them or exchanging goods. We just take their stuff or leave them alone. In other cases, it's been more advantageous to just take the stuff of other people, enslave them, or kill them. In conclusion, this argument proves far too much.

Also, vaguely pattern-matching math theorems to reality won't prove that AI will be safe; this is not the structure of reality. The no-free-lunch theorem doesn't prove that there can't be something smarter than us, nor does Gödel's incompleteness theorem prove that human brains are uncomputable. Comparative advantage is a simple mathematical theorem used to explain trade patterns in economics. You can't look at a simple theorem in linear algebra and conclude that AI and humans would peacefully co-exist. One defines productivity by some measure, and from a vector of productivities for different goods one gets a vector of labor allocations. It's a simple mathematical fact from linear algebra. This naive way of vaguely pattern-matching is not how you apply maths to the real world; ASI won't be safe because of this.

In-Depth Counter-Arguments

Let's say you're unconvinced and want to go more in-depth. Comparative advantage only addresses trade relationships; it says nothing about leaving power to the less productive side or treating them well.

There's nothing preventing the more productive side from acquiring more resources over time—buying things, buying up land, buying up crucial resources it needs—and then at some point leaving nothing for the other side.

Comparative advantage doesn't say what the optimal action is. It only says that both sides can profit from trade in certain situations; it doesn't say that trading is the optimal thing to do. In reality, it's often more advantageous to just take things from the other side, enslave them, and not respect them.

Another big problem with this whole website that Seb Krier created: he's looking at 10 humans and 10 AIs and how to divide labor between them. But you can just spin up as many AGIs as you want. The number of GPUs and the amount of infrastructure we have are constantly being scaled up, so the amount of artificial intelligence can grow exponentially. This massively breaks comparative advantage: the more productive side is increasing in numbers all the time.

Comparative advantage says nothing about whether the AI will keep the biosphere alive. What happens when ASI determines that all the things we do to keep enough oxygen and the right temperature are not compatible with filling the world with data centers, nuclear power stations, and massive solar panels? How much money does it actually make from trade with humans compared to the advantage of being able to ravage the environment?

In the app, the optimal strategy for humans is making artisan crafts, artisan furniture, and therapy for other humans—things that mean nothing to the AI. Realistically there is nothing valuable we could provide to the AI that it couldn't cheaply make itself. If we have zero productivity at anything the AI desires, and only very small productivity for things that only we need that the AI doesn't need, there's no potential for trade. There's no trade happening in comparative advantage if you have zero productivity for anything the AI actually needs. What could we possibly trade with ants? We could give them something they like, like sugar. What could the ants give us in return?

Even if we could trade something with the AI and get something in return, humans have a minimum wage—we need to get enough calories, we need space, we need oxygen. It's not guaranteed that we provide this amount of productivity. We're extremely slow, and we need food, shelter, and all these things that the AI doesn't need.
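The "minimum wage" point can be written as a simple check. This is a rough sketch under one stated assumption: the AI never pays more for a good than it would cost the AI to make that good itself. The function names and all numbers are hypothetical.

```python
def max_hourly_income(units_per_hour, ai_cost_per_unit):
    """Upper bound on human income per hour.

    Assumption: the AI pays at most its own cost of producing the good,
    so price per unit is capped at `ai_cost_per_unit`.
    """
    return units_per_hour * ai_cost_per_unit


def covers_subsistence(units_per_hour, ai_cost_per_unit, subsistence_per_hour):
    """True if even the best-case income pays for food, shelter, and so on."""
    return max_hourly_income(units_per_hour, ai_cost_per_unit) >= subsistence_per_hour


# Hypothetical numbers in arbitrary resource units.
viable = covers_subsistence(2, 2.0, 3.0)         # best case 4.0 per hour against a need of 3.0
too_cheap = covers_subsistence(2, 0.5, 3.0)      # the AI can make the good cheaply itself
unwanted_good = covers_subsistence(2, 0.0, 3.0)  # the AI has no use for the good
```

Gains from trade can exist and still fall short of subsistence: as the AI's own cost of making the good falls toward zero, or if it has no use for the good at all, the cap on human income falls with it.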

Conclusion

I feel sad that people in Google DeepMind think this is a realistic reason for hope. He apparently has an important position at Google DeepMind working on governance, and I hope to convince him here a bit. I don't think Ricardian comparative advantage (RCA) is a serious position; it's not a reasonable way to think about AI-human co-existence. To be fair, he has put out a few caveats and told me that he doesn't believe comparative advantage alone will make sure AI development will go fine.

Also see and this post