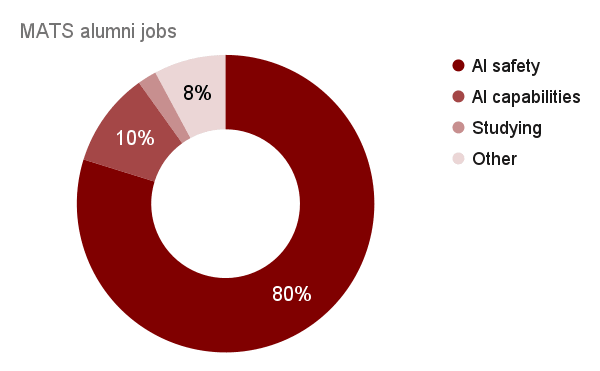

80% of MATS alumni who completed the program before 2025 are still working on AI safety today, based on a survey of all available alumni LinkedIns or personal websites (242/292 ~ 83%). 10% are working on AI capabilities, but only ~6 at a frontier AI company (2 at Anthropic, 2 at Google DeepMind, 1 at Mistral AI, 1 extrapolated). 2% are still studying, but not in a research degree focused on AI safety. The last 8% are doing miscellaneous things, including non-AI safety/capabilities software engineering, teaching, data science, consulting, and quantitative trading.

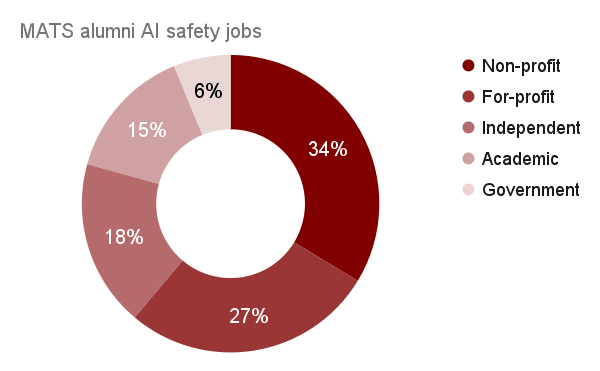

Of the 193+ MATS alumni working on AI safety (extrapolated: 234):

- 34% are working at a non-profit org (Apollo, Redwood, MATS, EleutherAI, FAR.AI, MIRI, ARC, Timaeus, LawZero, RAND, METR, etc.);

- 27% are working at a for-profit org (Anthropic, Google DeepMind, OpenAI, Goodfire, Meta, etc.);

- 18% are working as independent researchers, probably with grant funding from Open Philanthropy, LTFF, etc.;

- 15% are working as academic researchers, including PhDs/Postdocs at Oxford, Cambridge, MIT, ETH Zurich, UC Berkeley, etc.;

- 6% are working in government agencies, including in the US, UK, EU, and Singapore.

10% of MATS alumni co-founded an ...

AI safety field-building in Australia should accelerate. My rationale:

- OpenAI opened a Sydney office in Dec 2025 and Anthropic is planning to open a Sydney office in 2026. These offices may hire safety staff from local talent, or partner with local auditing, evaluation, and security companies, including Harmony Intelligence, Good Ancestors, and Gradient Institute.

- An Australian AISI was announced for early 2026 and is currently hiring. The UK AISI has benefited from close partnerships with Apollo Research, METR, and the LISA office community. There is a community space in Sydney, the Sydney AI Safety Space, and two field-building organizations, AI Safety ANZ and TARA, but these could expand substantially.

- Australia seems like a prime location for datacenter build-out. OpenAI published an "AI blueprint" for Australia, calling for datacenter build-out, and started building a $4.6B datacenter in Sydney in Dec 2025. Australia is a NATO partner, Five Eyes member, and member of the AUKUS security partnership with the US and UK; it's much more secure and aligned with US/UK interests than Saudi Arabia. Australia is the second-largest exporter of thermal coal, has vast solar and wind resources

Piggybacking: If people have strong opinions on, or interest in contributing to, said fieldbuilding, I'd be very happy to connect - you can reach me at michael.kerrison@aisafetyanz.com.au :)

Also piggybacking, if anybody is Sydney-based or visiting Sydney, you are welcome to work out of the SydneyAISafetySpace.org (SASS) for free.

I am a volunteer with PauseAI Australia, so if anyone wants to connect with our very, very small group, that would be great. We are pushing politicians on superintelligence.

One reason is that hosting data centers can give countries political influence over AI development, increasing the importance of their governments having reasonable views on AI risks.

As part of MATS' compensation reevaluation project, I scraped publicly declared employee compensation for 2019-2023 from ProPublica's Nonprofit Explorer for many AI safety and EA organizations (data here). US nonprofits are required to disclose compensation information for certain highly paid employees and contractors on their annual Form 990 tax return, which becomes publicly available. This includes compensation for officers, directors, trustees, key employees, and highest compensated employees earning over $100k annually. Therefore, my data does not include many individuals earning under $100k, but this doesn't seem to affect the yearly medians much, as the data seems to follow a lognormal distribution, with mode ~$178k in 2023, for example.

I generally found that AI safety and EA organization employees are highly compensated, albeit inconsistently between similar-sized organizations within equivalent roles (e.g., Redwood and FAR AI). I speculate that this is primarily due to differences in organization funding, but inconsistent compensation policies may also play a role.

I'm sharing this data to promote healthy and fair compensation policies across the ecosystem. I believe th...

I am a Manifund Regrantor. In addition to general grantmaking, I have requests for proposals in the following areas:

- Funding for AI safety PhDs (e.g., with these supervisors), particularly in exploratory research connecting AI safety theory with empirical ML research.

- An AI safety PhD advisory service that helps prospective PhD students choose a supervisor and topic (similar to Effective Thesis, but specialized for AI safety).

- Initiatives to critically examine current AI safety macrostrategy (e.g., as articulated by Holden Karnofsky) like the Open Philanthropy AI Worldviews Contest and Future Fund Worldview Prize.

- Initiatives to identify and develop "Connectors" outside of academia (e.g., a reboot of the Refine program, well-scoped contests, long-term mentoring and peer-support programs).

- Physical community spaces for AI safety in AI hubs outside of the SF Bay Area or London (e.g., Japan, France, Bangalore).

- Start-up incubators for projects, including evals/red-teaming/interp companies, that aim to benefit AI safety, like Catalyze Impact, Future of Life Foundation, and YCombinator's request for Explainable AI start-ups.

- Initiatives to develop and publish expert consensus on AI safety macr

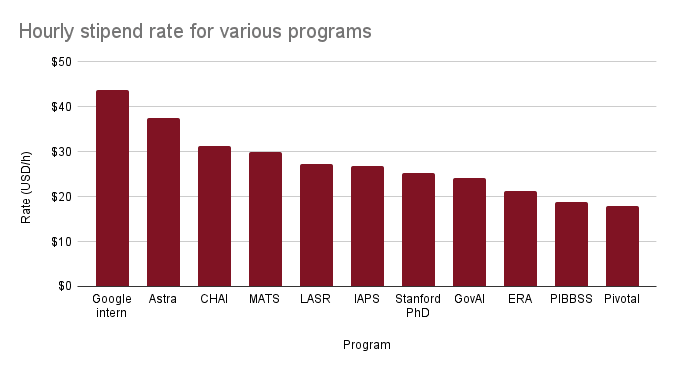

Hourly stipends for AI safety fellowship programs, plus some referents. The average AI safety program stipend is $26/h.

Edit: updated figure to include more programs.

Interesting, thanks! My guess is this doesn't include benefits like housing and travel costs? Some of these programs pay for those while others don't, which I think is a non-trivial difference (especially for the Bay Area).

I made a Google Scholar page for MATS. This was inspired by @Esben Kran's Google Scholar for Apart Research. EleutherAI subsequently made one too. I think all AI safety organizations and research programs should consider making Google Scholar pages to better share research and track impact.

Why does the AI safety community need help founding projects?

- AI safety should scale

- Labs need external auditors for the AI control plan to work

- We should pursue many research bets in case superalignment/control fails

- Talent leaves MATS/ARENA and sometimes struggles to find meaningful work for mundane reasons, not for lack of talent or ideas

- Some emerging research agendas don’t have a home

- There are diminishing returns at scale for current AI safety teams; sometimes founding new projects is better than joining an existing team

- Scaling lab alignment teams are bottlenecked by management capacity, so their talent cut-off is above the level required to do “useful AIS work”

- Research organizations (inc. nonprofits) are often more effective than independent researchers

- “Block funding model” is more efficient, as researchers can spend more time researching, rather than seeking grants, managing, or other traditional PI duties that can be outsourced

- Open source/collective projects often need a central rallying point (e.g., EleutherAI, dev interp at Timaeus, selection theorems and cyborgism agendas seem too delocalized, etc.)

- There is (imminently) a market for for-profit AI safety companies and value-al

This still reads to me as advocating for a jobs program for the benefit of MATS grads, not safety. My guess is you're aiming for something more like "there is talent that could do useful work under someone else's direction, but not on their own, and we can increase safety by utilizing it".

I just left a comment on PIBBSS' Manifund grant proposal (which I funded with $25k) that people might find interesting.

...Main points in favor of this grant

- My inside view is that PIBBSS mainly supports “blue sky” or “basic” research, some of which has a low chance of paying off, but might be critical in “worst case” alignment scenarios (e.g., where “alignment MVPs” don’t work, or “sharp left turns” and “intelligence explosions” are more likely than I expect). In contrast, of the technical research MATS supports, about half is basic research (e.g., interpretability, evals, agent foundations) and half is applied research (e.g., oversight + control, value alignment). I think the MATS portfolio is a better holistic strategy for furthering AI alignment. However, if one takes into account the research conducted at AI labs and supported by MATS, PIBBSS’ strategy makes a lot of sense: they are supporting a wide portfolio of blue sky research that is particularly neglected by existing institutions and might be very impactful in a range of possible “worst-case” AGI scenarios. I think this is a valid strategy in the current ecosystem/market and I support PIBBSS!

- In MATS’ recent post, “Talent Needs of

Main takeaways from a recent AI safety conference:

- If your foundation model is one small amount of RL away from being dangerous and someone can steal your model weights, fancy alignment techniques don’t matter. Scaling labs cannot currently prevent state actors from hacking their systems and stealing their stuff. Infosecurity is important to alignment.

- Scaling labs might have some incentive to go along with the development of safety standards as it prevents smaller players from undercutting their business model and provides a credible defense against lawsuits regarding unexpected side effects of deployment (especially with how many tech restrictions the EU seems to pump out). Once the foot is in the door, more useful safety standards to prevent x-risk might be possible.

- Near-term commercial AI systems that can be jailbroken to elicit dangerous output might empower more bad actors to make bioweapons or cyberweapons. Preventing the misuse of near-term commercial AI systems or slowing down their deployment seems important.

- When a skill is hard to teach, like making accurate predictions over long time horizons in complicated situations or developing a “security mindset,” try treating human

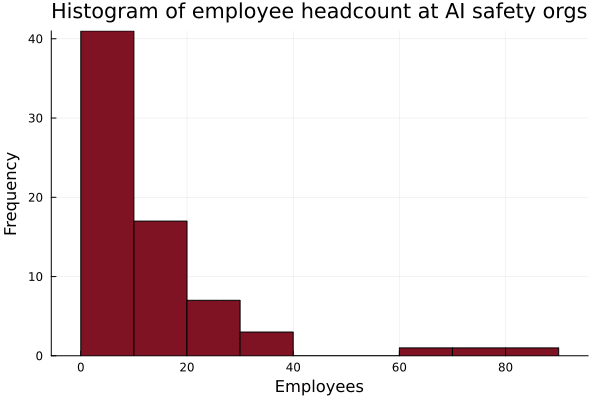

I did a quick inventory of employee headcount at AI safety and safety-adjacent organizations. The median AI safety org has 8 employees. I didn't include UK AISI, US AISI, CAIS, and the safety teams at Anthropic, GDM, OpenAI, and probably more, as I couldn't get accurate headcount estimates. I also didn't include "research affiliates" or university students in the headcounts for academic labs. Data here. Let me know if I missed any orgs!

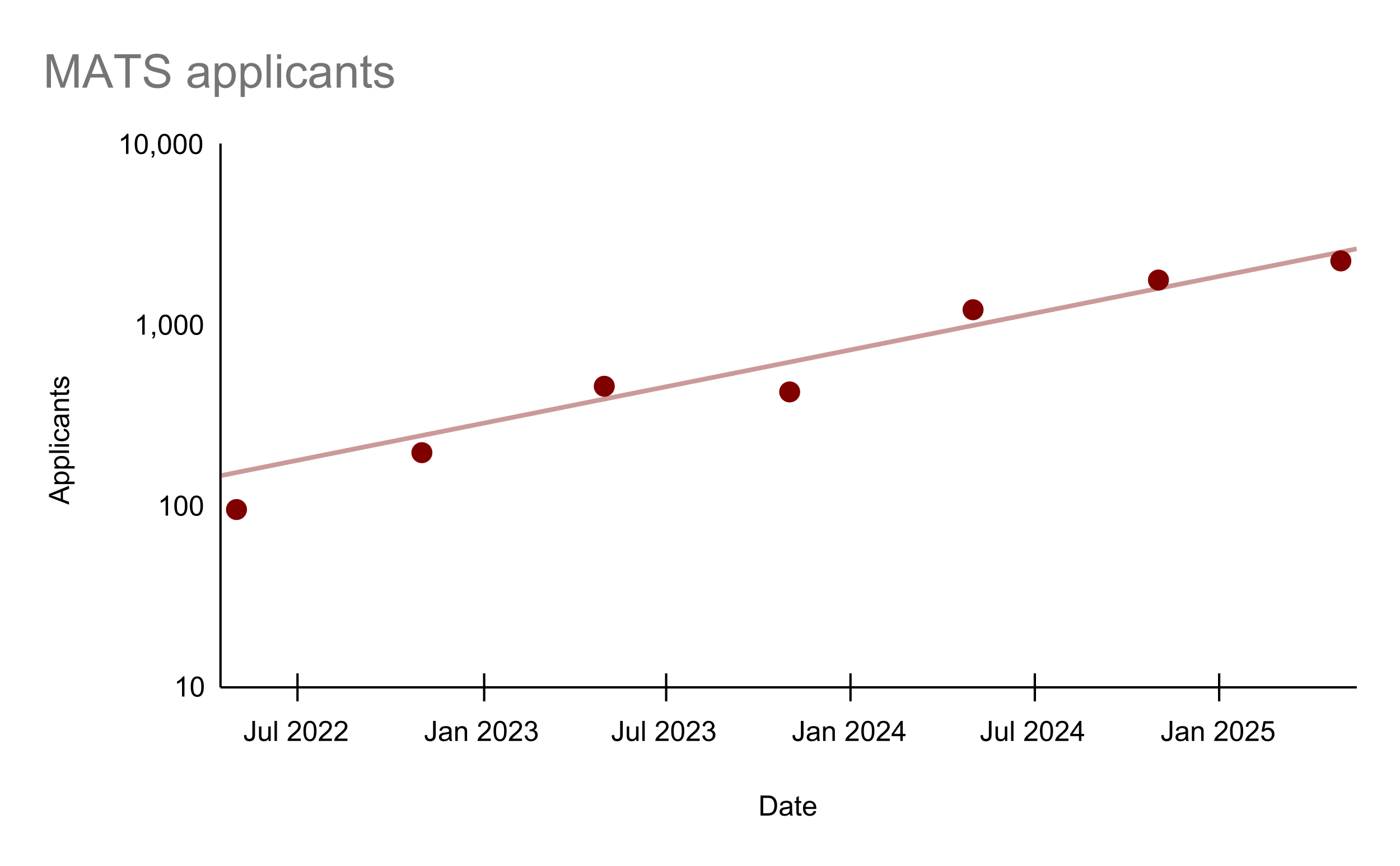

I'm interested in determining the growth rate of AI safety awareness to aid field-building strategy. Applications to MATS have been increasing by 70% per year. Do any other field-builders have statistics they can share?

Without the question at the end, it would feel less ad-like; it doesn't feel to me like you are honestly asking that question.

It's also that this correlates with applications opening for the next MATS, and your last post had a very similar statistic correlating with the same window, so my guess is there is indeed some ad-like motivation (I think it's fine for MATS to advertise a bit on LW, though shortform is a kind of tricky medium for that because we don't have a frontpage/personal distinction for shortform and I don't want people to optimize around the lack of that distinction).

I don't know, it feels to me like a relatively complicated aggregate of a lot of different things. Some messages that would have caused me to feel less advertised towards:

- "FYI, applications to MATS have been increasing 70% a year: [graph]. ARENA has also seen quite high growth rates [graph or number]. We've also seen a lot of funding increase over the same period, my best guess being something like X. I wonder whether ~70% or so is the aggregate growth rate of the field." (Of course one would have to check here, and I am not saying you have to do all of this work)

- "MATS applicati

Technical AI alignment/control is still impactful; don't go all-in on AI gov!

- Liability incentivizes safeguards, even absent regulation;

- Cheaper, more effective safeguards make it easier for labs to meet safety standards;

- Concrete, achievable safeguards give regulation teeth.

Also, there's a good chance AI gov won't work, and labs will just have a very limited safety budget to implement their best-guess mitigations. Or maybe AI gov does work and we get a large budget; we still need to actually solve alignment.

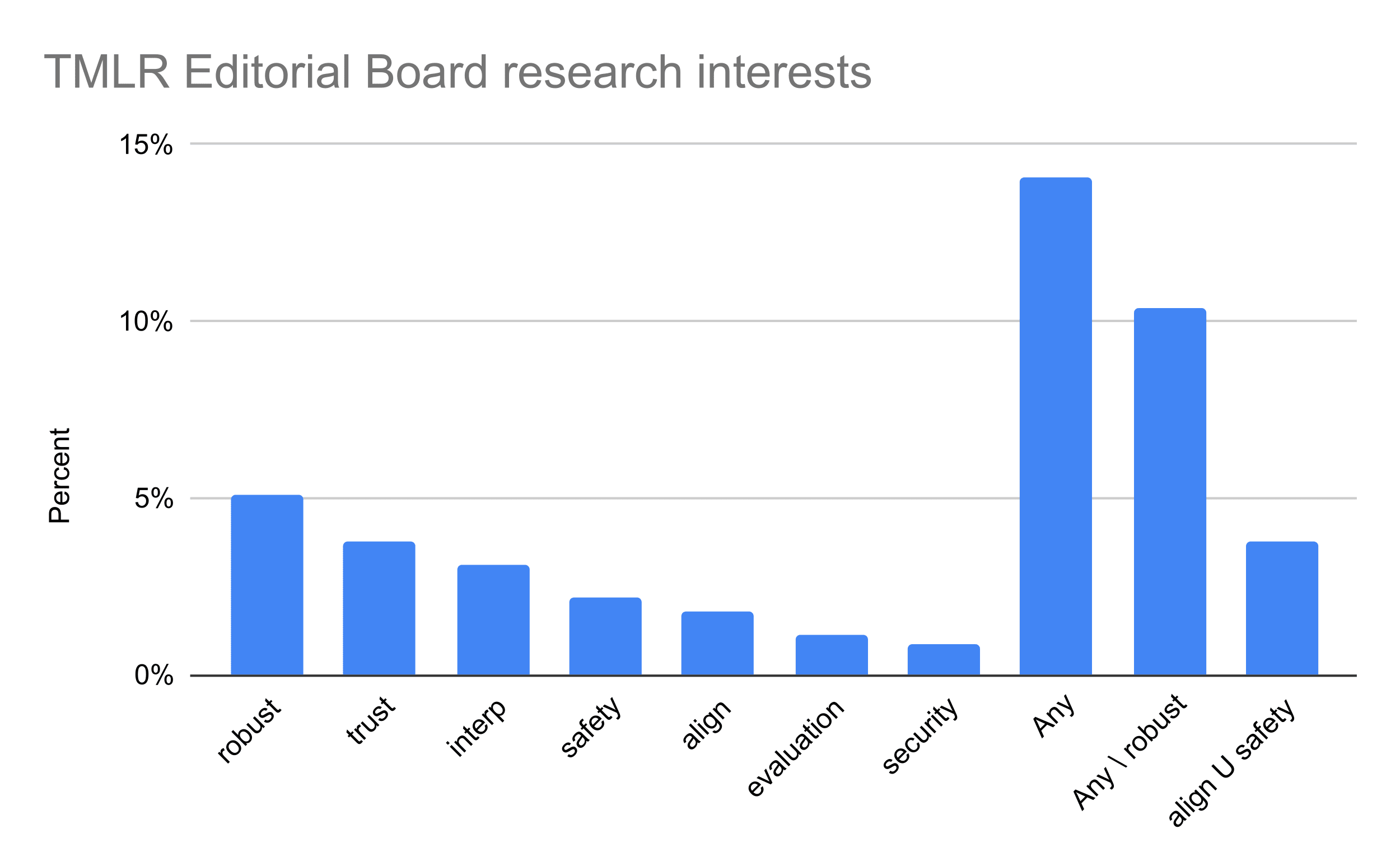

I analyzed the research interests of the 454 Action Editors on the Transactions on Machine Learning Research (TMLR) Editorial Board to determine what proportion of ML academics are interested in AI safety (credit to @scasper for the idea).

- 14% of editors listed any of "robustness", "trustworthy", "interpretable", "safety", "alignment", "evaluation", or "security" as a research interest.

- 10% of editors listed any of "trustworthy", "interpretable", "safety", "alignment", "evaluation", or "security" as a research interest. I excluded "robustness" as much of this research is considered more "capabilities" than "safety".

- 3.7% of editors listed "safety" or "alignment" as a research interest.
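A keyword tally like the one above can be sketched in a few lines of Python. This is only an illustration, not the script used for the analysis: the `keyword_share` helper, the case-insensitive substring matching rule, and the example editors are all assumptions made up for this sketch.

```python
# Hypothetical sketch: share of editors whose listed research interests
# mention at least one keyword. The data below is made up, NOT the TMLR board.

SAFETY_KEYWORDS = [
    "robustness", "trustworthy", "interpretable", "safety",
    "alignment", "evaluation", "security",
]


def keyword_share(interests_by_editor, keywords):
    """Return the fraction of editors with any interest containing any keyword.

    Matching is a case-insensitive substring test (an assumption of this sketch).
    """
    if not interests_by_editor:
        return 0.0
    lowered = [k.lower() for k in keywords]
    hits = sum(
        any(k in interest.lower() for interest in interests for k in lowered)
        for interests in interests_by_editor
    )
    return hits / len(interests_by_editor)


# Made-up example: each inner list is one editor's stated interests.
editors = [
    ["Reinforcement learning", "AI safety"],
    ["Optimization", "Adversarial robustness"],
    ["Computer vision"],
    ["Bayesian inference", "Model evaluation"],
]

broad = keyword_share(editors, SAFETY_KEYWORDS)
no_robustness = keyword_share(
    editors, [k for k in SAFETY_KEYWORDS if k != "robustness"]
)
narrow = keyword_share(editors, ["safety", "alignment"])
print(broad, no_robustness, narrow)
```

Note that plain substring matching is sensitive to word forms: "interpretable" would not match an interest written as "interpretability", so the exact percentages depend on how the keywords are matched.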

Crucial questions for AI safety field-builders:

- What is the most important problem in your field? If you aren't working on it, why?

- Where is everyone else dropping the ball and why?

- Are you addressing a gap in the talent pipeline?

- What resources are abundant? What resources are scarce? How can you turn abundant resources into scarce resources?

- How will you know you are succeeding? How will you know you are failing?

- What is the "user experience" of your program?

- Who would you copy if you could copy anyone? How could you do this?

- Are you better than the counterfactual?

- Who are your clients? What do they want?

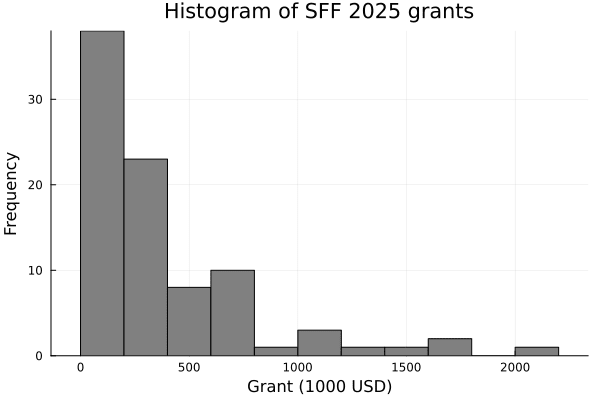

SFF-2025 S-Process funding results have been announced! This year, the S-process gave away ~$34M to 88 organizations. The mean (median) grant was $390k ($241k).

It seems plausible to me that if AGI progress becomes strongly bottlenecked on architecture design or hyperparameter search, a more "genetic algorithm"-like approach will follow. Automated AI researchers could run and evaluate many small experiments in parallel, covering a vast hyperparameter space. If small experiments are generally predictive of larger experiments (and they seem to be, a la scaling laws) and model inference costs are cheap enough, this parallelized approach might be 1) computationally affordable and 2) successful at overcoming the architecture bottleneck.

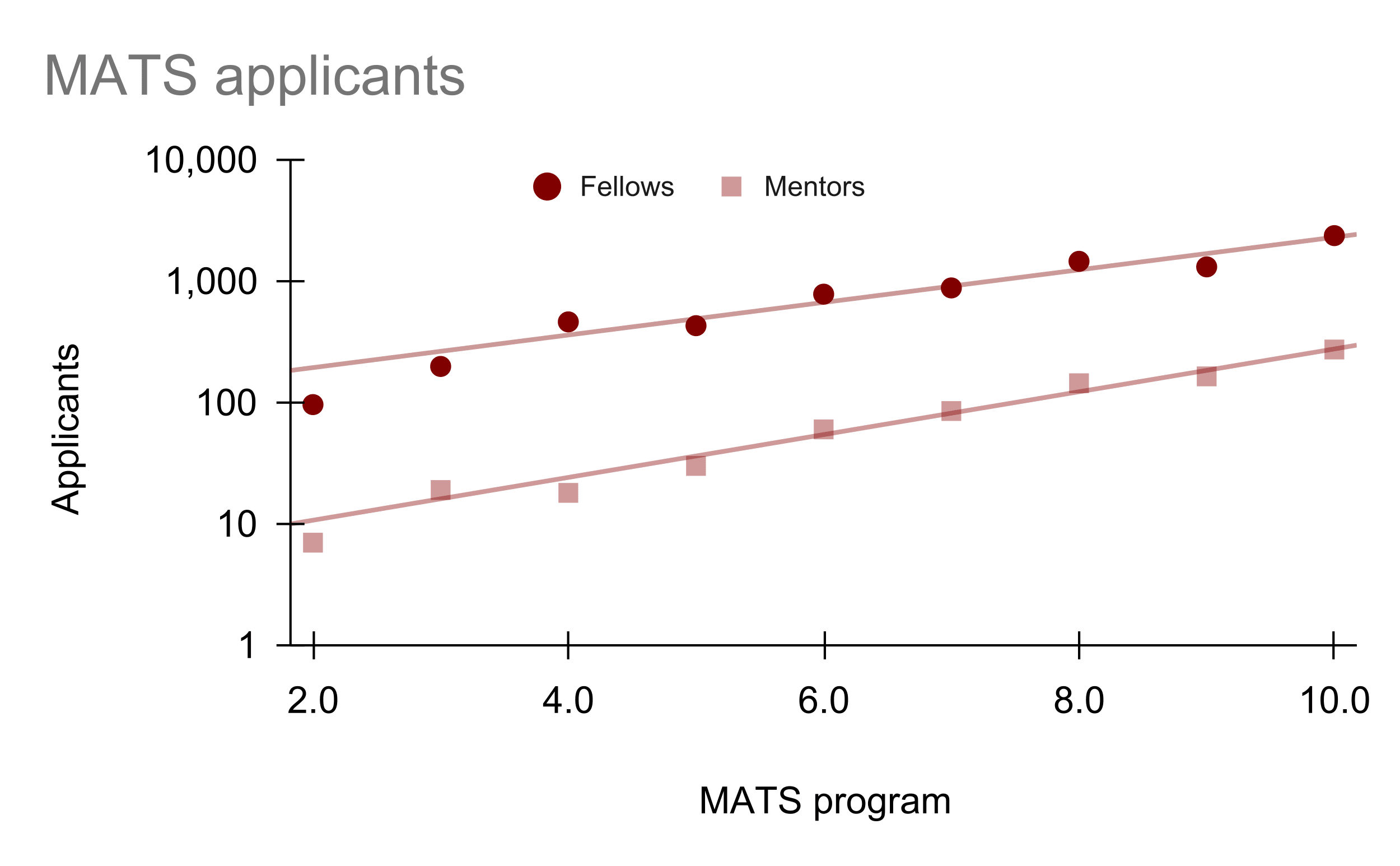

Update on MATS applications trends:

- Mentor applications seem to be growing 2.2x/year and we accepted 20% as primary mentors for 10.0 (Summer 2026).

- Fellow applications seem to be growing 1.8x/year (counting only applicants who applied to at least one mentor) and we plan to accept 5% for 10.0.

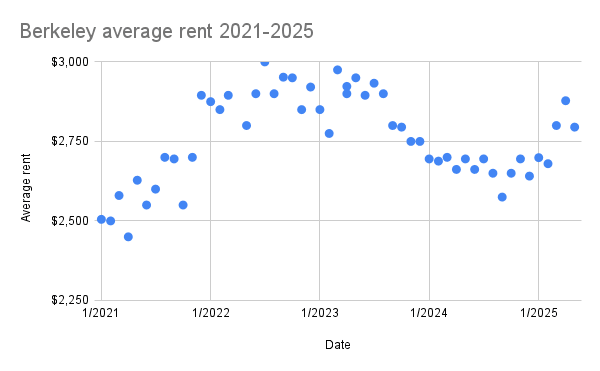

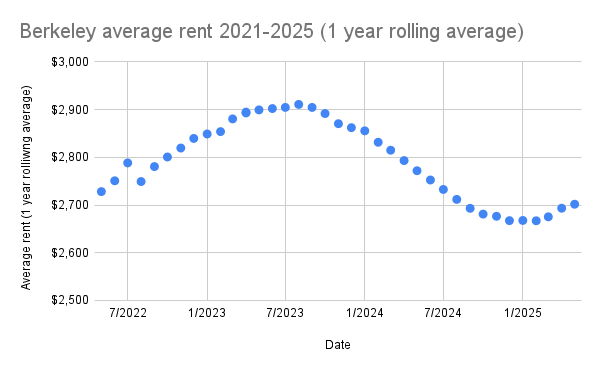

Not sure this is interesting to anyone, but I compiled Zillow's data on 2021-2025 Berkeley average rent prices recently, to help with rent negotiation. I did not adjust for inflation; these are the raw averages at each time.

An incomplete list of possibly useful AI safety research:

- Predicting/shaping emergent systems (“physics”)

- Learning theory (e.g., shard theory, causal incentives)

- Regularizers (e.g., speed priors)

- Embedded agency (e.g., infra-Bayesianism, finite factored sets)

- Decision theory (e.g., timeless decision theory, cooperative bargaining theory, acausal trade)

- Model evaluation (“biology”)

- Capabilities evaluation (e.g., survive-and-spread, hacking)

- Red-teaming alignment techniques

- Demonstrations of emergent properties/behavior (e.g., instrumental powerseeking)

- Interpretabili

AI alignment threat models that are somewhat MECE (but not quite):

- We get what we measure (models converge to the human++ simulator and build a Potemkin village world without being deceptive consequentialists);

- Optimization daemon (deceptive consequentialist with a non-myopic utility function arises in training and does gradient hacking, buries trojans and obfuscates cognition to circumvent interpretability tools, "unboxes" itself, executes a "treacherous turn" when deployed, coordinates with auditors and future instances of itself, etc.);

- Coordination failur

Reasons that scaling labs might be motivated to sign onto AI safety standards:

- Companies who are wary of being sued for unsafe deployment that causes harm might want to be able to prove that they credibly did their best to prevent harm.

- Big tech companies like Google might not want to risk premature deployment, but might feel forced to if smaller companies with less to lose undercut their "search" market. Standards that prevent unsafe deployment fix this.

However, AI companies that don’t believe in AGI x-risk might tolerate higher x-risk than ideal safet...

How fast should the field of AI safety grow? An attempt at grounding this question in some predictions.

- Ryan Greenblatt seems to think we can get a 30x speed-up in AI R&D using near-term, plausibly safe AI systems; assume every AIS researcher can be 30x’d by Alignment MVPs

- Tom Davidson thinks we have <3 years from 20%-AI to 100%-AI; assume we have ~3 years to align AGI with the aid of Alignment MVPs

- Assume the hardness of aligning TAI is equivalent to the Apollo Program (90k engineer/scientist FTEs x 9 years = 810k FTE-years); therefore, we need ~

I appreciate the spirit of this type of calculation, but think that it's a bit too wacky to be that informative. I think that it's a bit of a stretch to string these numbers together. E.g. I think Ryan and Tom's predictions are inconsistent, and I think that it's weird to identify 100%-AI as the point where we need to have "solved the alignment problem", and I think that it's weird to use the Apollo/Manhattan program as an estimate of work required. (I also don't know what your Manhattan project numbers mean: I thought there were more like 2.5k scientists/engineers at Los Alamos, and most of the people elsewhere were purifying nuclear material)

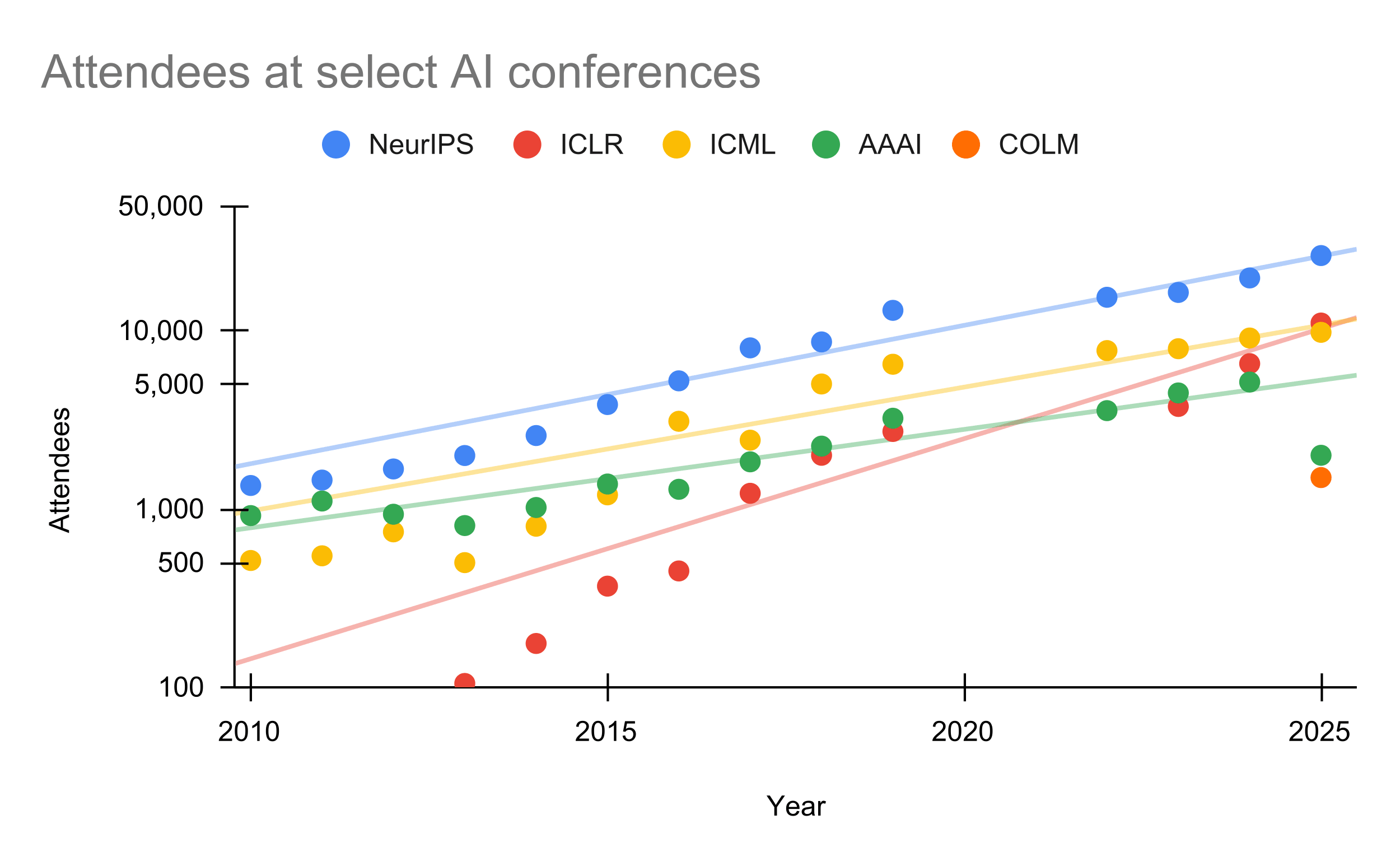

Attendance at the top four AI conferences has been growing at 1.26x/year on average. Data is from Our World in Data. I skipped 2020-2021 for all conferences and 2022 for ICLR, as these conferences were virtual due to the COVID pandemic and had increased virtual attendance.

One could infer from these growth rates that the academic field of AI is growing 1.26x/year. Interestingly, the AI safety field (including technical and governance) seems to be growing at 1.25x/year.
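To make these multipliers easier to compare, here is a minimal sketch that converts an annual growth multiplier into a doubling time, assuming steady compound growth. The function names are hypothetical and the starting value of 100 is an arbitrary illustration, not real attendance data.

```python
import math


def doubling_time_years(annual_multiplier):
    """Years needed to double at a constant annual growth multiplier (> 1)."""
    if annual_multiplier <= 1:
        raise ValueError("multiplier must be greater than 1 for growth")
    return math.log(2) / math.log(annual_multiplier)


def compound(initial, annual_multiplier, years):
    """Value after `years` of steady compound growth."""
    return initial * annual_multiplier ** years


# Growth multipliers quoted in the text above.
conference_doubling = doubling_time_years(1.26)
safety_field_doubling = doubling_time_years(1.25)

# Arbitrary starting value of 100, purely for illustration.
after_three_years = compound(100, 1.26, 3)

print(f"1.26x/year doubles in {conference_doubling:.2f} years")
print(f"1.25x/year doubles in {safety_field_doubling:.2f} years")
print(f"100 grows to {after_three_years:.1f} after 3 years at 1.26x/year")
```

Under this assumption, both 1.26x/year and 1.25x/year correspond to a doubling time of roughly three years.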

Types of organizations that conduct alignment research, differentiated by funding model and associated market forces:

- Academic research groups (e.g., Krueger's lab at Cambridge, UC Berkeley CHAI, NYU ARG, MIT AAG);

- Research nonprofits (e.g., ARC Theory, MIRI, FAR AI, Redwood Research);

- "Mixed funding model" organizations:

- "Alignment-as-a-service" organizations, where the product directly contributes to alignment (e.g., Apollo Research, Aligned AI, ARC Evals, Leap Labs);

- "Alignment-on-the-side" organizations, where product revenue helps fund alignment research

Can the strategy of "using surrogate goals to deflect threats" be countered by an enemy agent that learns your true goals and credibly precommits to always defecting (i.e., Prisoner's Dilemma style) if you deploy an agent against it with goals that produce sufficiently different cooperative bargaining equilibria than your true goals would?

MATS' goals:

- Find + accelerate high-impact research scholars:

- Pair scholars with research mentors via specialized mentor-generated selection questions (visible on our website);

- Provide a thriving academic community for research collaboration, peer feedback, and social networking;

- Develop scholars according to the “T-model of research” (breadth/depth/epistemology);

- Offer opt-in curriculum elements, including seminars, research strategy workshops, 1-1 researcher unblocking support, peer study groups, and networking events;

- Support high-impact&n

"Why suicide doesn't seem reflectively rational, assuming my preferences are somewhat unknown to me," OR "Why me-CEV is probably not going to end itself":

- Self-preservation is a convergent instrumental goal for many goals.

- Most systems of ordered preferences that naturally exhibit self-preservation probably also exhibit self-preservation in the reflectively coherent pursuit of unified preferences (i.e., CEV).

- If I desire to end myself on examination of the world, this is likely a local hiccup in reflective unification of my preferences, i.e., "failure of pres

Are these framings of gradient hacking, which I previously articulated here, a useful categorization?

...

- Masking: Introducing a countervailing, “artificial” performance penalty that “masks” the performance benefits of ML modifications that do well on the SGD objective, but not on the mesa-objective;

- Spoofing: Withholding performance gains until the implementation of certain ML modifications that are desirable to the mesa-objective; and

- Steering: In a reinforcement learning context, selectively sampling environmental states that will either leave the mesa-objecti

How does the failure rate of a hierarchy of auditors scale with the hierarchy depth, if the auditors can inspect all auditors below their level?