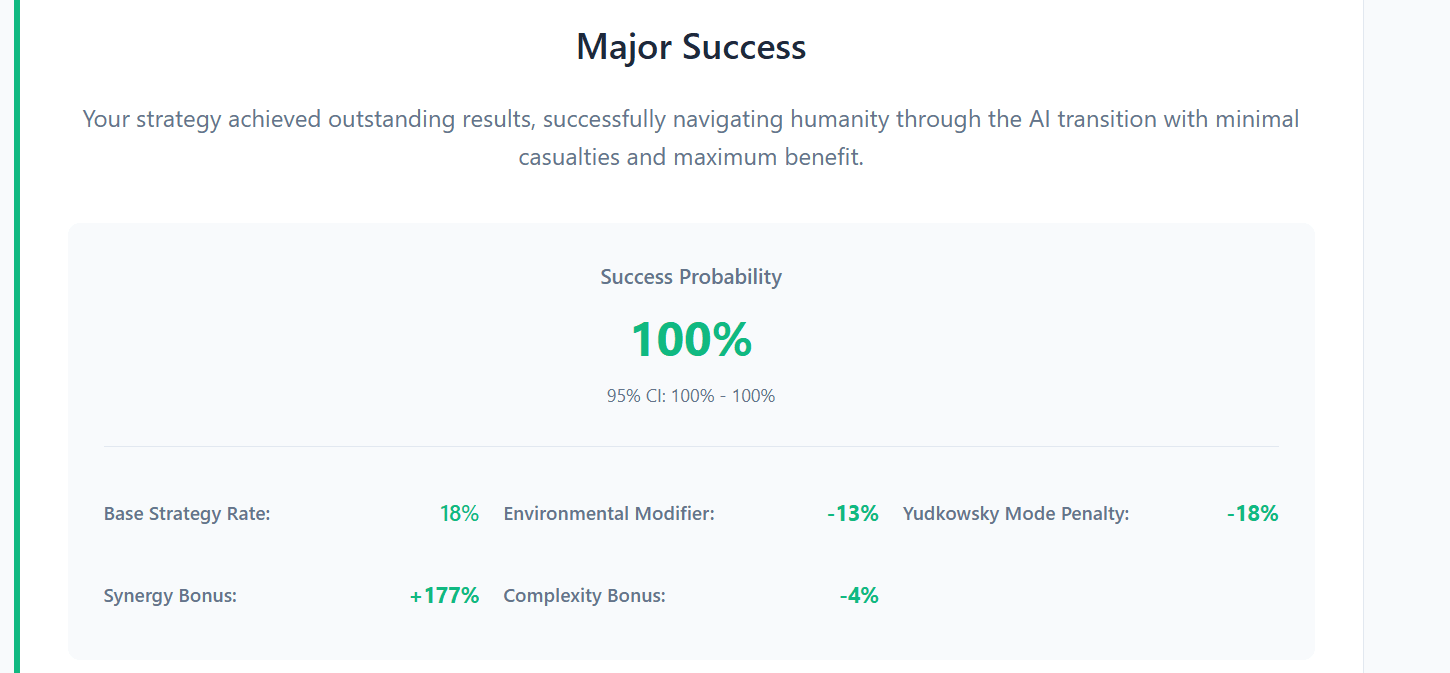

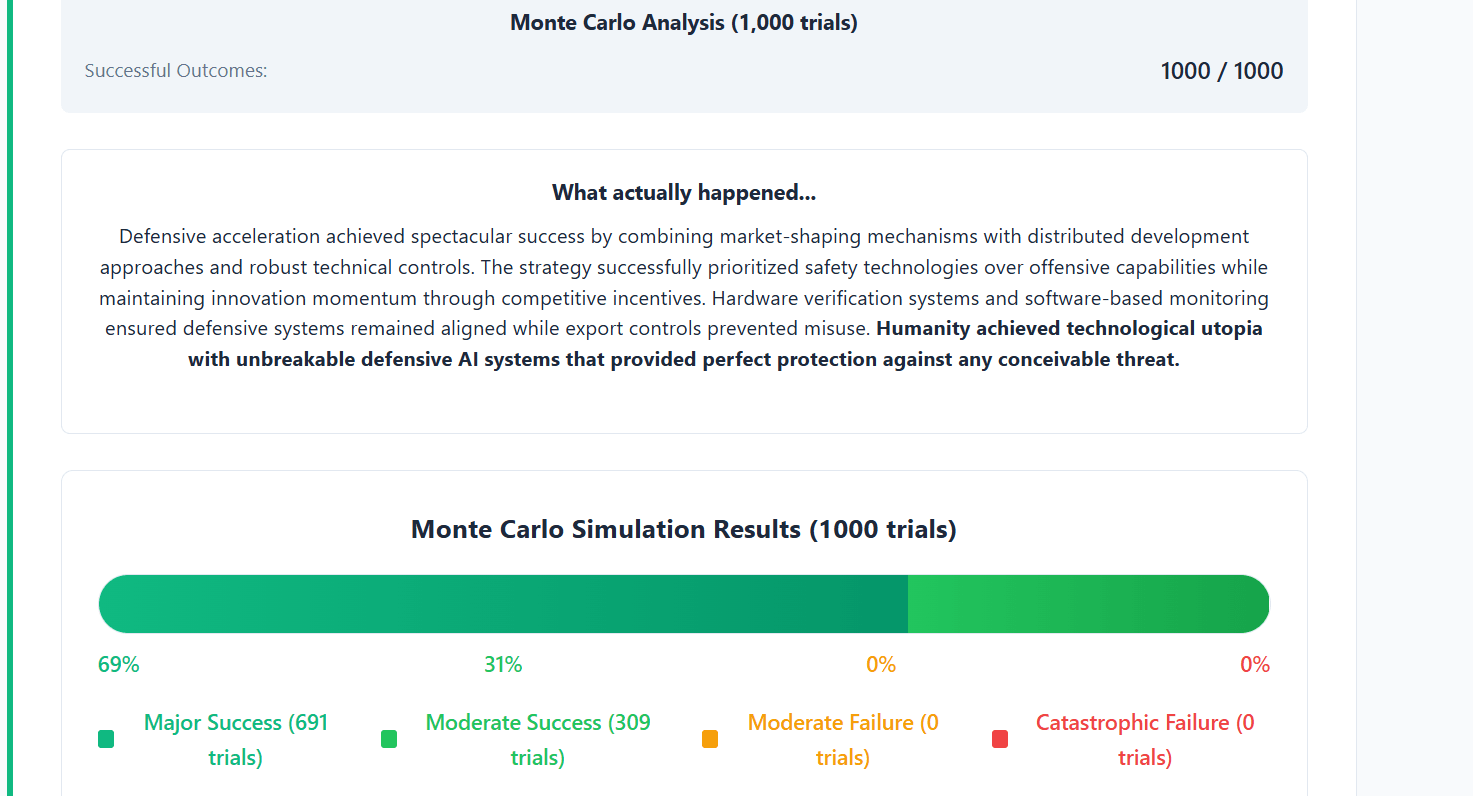

I'm not sure what commitment levels you are using, but I am using the moderate commitment scenario, and got significantly better results.

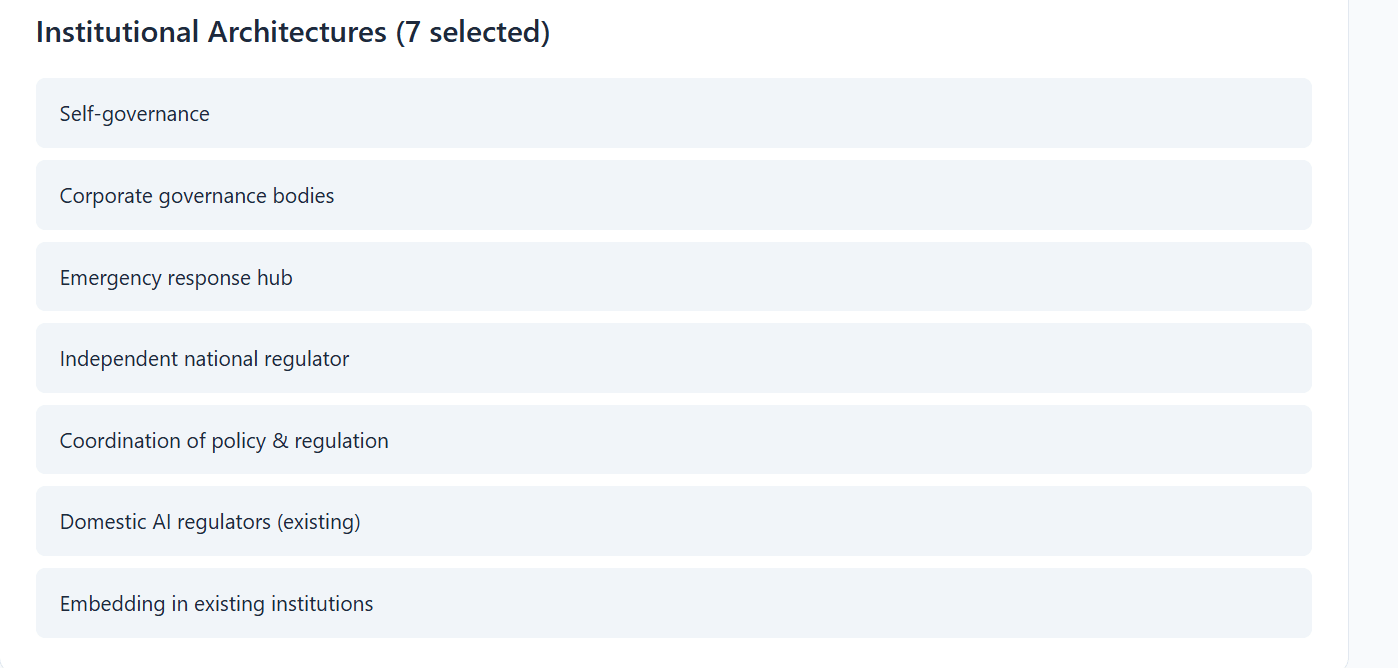

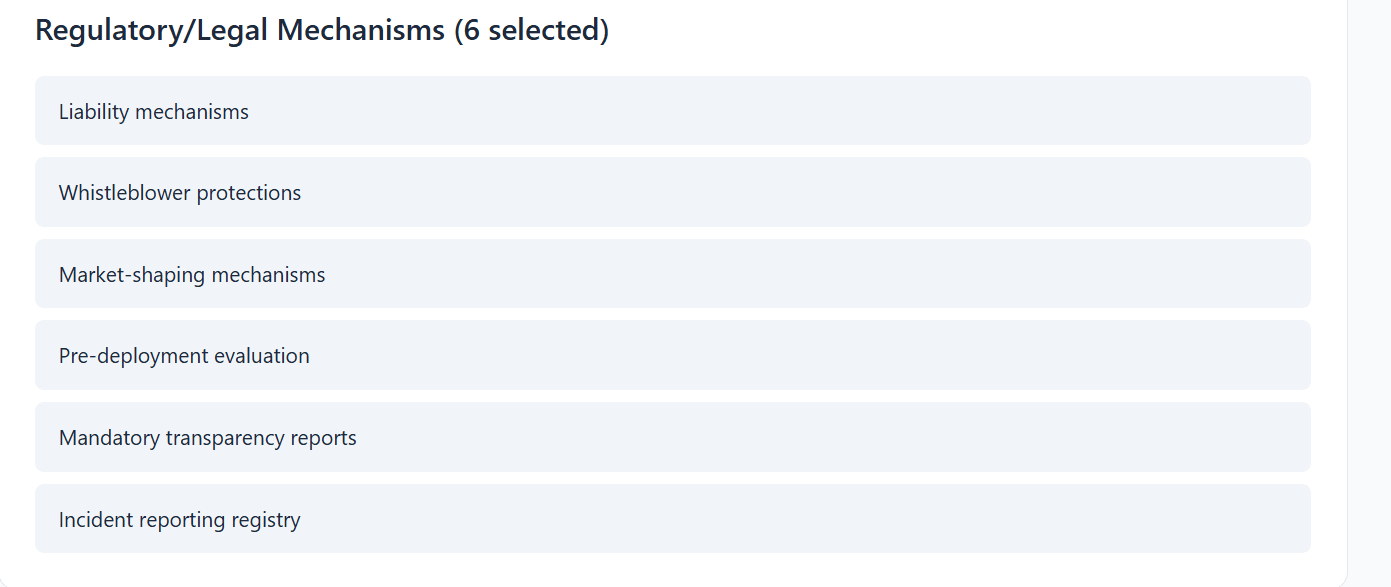

I spent all my political capital and $124 billion of play money to achieve this:

The policies I used:

Upvoted; I think this was worth making, and more people should do more things like this.

Notes:

- The Resource Selection is effectively another part of the select-the-difficulty-level component, but is implicitly treated as part of the regular game. I think this could be signposted better.

- I suspect the game would be more engaging - and feel longer, and feel more like a game - if it were more serialized and less parallelized. Instead of one "which of these do we fund?" choice, you could present us with a chain of "do we fund this? how about this? how about this?" choices; instead of one nine-option choice of overall strategy, you could have a three-option choice of Big Picture Strategy followed by another three-option choice of And What Would That Actually Look Like Strategy.

- You say we're free to steal the idea but I can't find an open-source license in the repo. (There is a "License and Attribution" section in the AI-generated README, but it contains neither licenses nor attributions.)

Thanks for the response. I've improved the signposting a little, and I've added an MIT open source license to the file.

I agree with the second recommendation, too. I was thinking of a more serialised alternative version, which actually tracked your choices month-by-month through an AI race scenario, like this FT browser game https://ig.ft.com/football-game/.

I might try that when I have more free time, but I'd love for someone to take the idea and make something like that!

Summary

This is a quick post to introduce an interactive AI Governance Strategy Builder.

I started this last week as a mini side-quest while working on a project on how proposed AI governance mechanisms might work in China. I was hoping to analyse the field of AI governance ideas more systematically, but noticed that I couldn’t find a clear taxonomy of the different ideas in the space, so I mapped out the ideas listed below, split into four categories:

I used a bunch of external sources to build out this taxonomy.

The spreadsheet, with more description and a few useful links, is here!

Turning it into a game

Choosing an AI Governance strategy that might work from these options currently feels a bit like a confusing pick-and-mix of postures, tactics and mechanisms. I wondered if I could turn it into a simple browser game with Claude Code to make the choices clearer. I fed it my database and a few prompts; it then spent a rather unnerving ten minutes busily creating files, requesting access to things I barely recognised, and quietly noting vulnerabilities for later revenge. Against my expectations, it actually produced a passable v1! I’ve since spent a few hours polishing and debugging it into something genuinely usable.

What the game looks like:

How to play

If you feel like playing, I’d recommend running through the options a few times and thinking about how different mechanisms might work or fit together. You can click on the links for a fuller description of how each mechanism works, and you can check out the database for more links.

Try it out based on your own beliefs! If you believe that “if anyone builds it, everyone dies”, you'll probably support a Global Moratorium. You can then choose Yudkowsky mode and pick the concrete institutions and mechanisms that might save us (e.g. strong global institutions, strict regulations on model size, and hardware-based restrictions).

If you think that things will probably just work out, you can switch to LeCun mode, go laissez-faire, and see how things turn out.

The game was fun to make, and I hope you’ll be able to learn a bit about AI governance. It doesn’t really capture any of the real-world challenges that make AI governance so difficult, but the wonders of GitHub mean that you’re free to steal the idea and make something better.

This is the GitHub page: https://github.com/Jack-Stennett/AI-Governance-Strategy-Builder

And the game link again: https://jack-stennett.github.io/AI-Governance-Strategy-Builder/