How does an AI trained with RLHF end up killing everyone, if you assume that wire-heading and inner alignment are solved? Any half-way reasonable method of supervision will discourage "killing everyone".

A merely half-way reasonable method of supervision will only discourage getting caught killing everyone, is the thing.

In all the examples we have from toy models, the RLHF agent has no option to take over the supervision process. The most adversarial thing it can do is to deceive the human evaluators (while executing an easier, lazier strategy). And it does that sometimes.

If we train an RLHF agent in the real world, the reward model now has the option to accurately learn that actions that physically affect the reward-attribution process are rated in a special way. If it learns that, we are of course boned - the AI will be motivated to take over the reward hardware (even during deployment where the reward hardware does nothing) and tough luck to any humans who get in the way.

But if the reward model doesn't learn this true fact (maybe we can prevent this by patching the RLHF scheme), then I would agree it probably won't kill everyone. Instead it would go back to failing by executing plans that deceived human evaluators in training. Though if the training was to do sufficiently impressive and powerful things in the real world, maybe this "accidentally" involves killing humans.

If we train an RLHF agent in the real world, the reward model now has the option to accurately learn that actions that physically affect the reward-attribution process are rated in a special way. If it learns that, we are of course boned - the AI will be motivated to take over the reward hardware (even during deployment where the reward hardware does nothing) and tough luck to any humans who get in the way.

OK, so this is wire-heading, right? Then you agree that it's the wire-heading behaviours that kill us? But wire-heading (taking control of the channel of reward provision) is not, in any way, specific to RLHF. Any way of providing reward in RL leads to wire-heading. In particular, you've said that the problem with RLHF is that "RLHF/IDA/debate all incentivize promoting claims based on what the human finds most convincing and palatable, rather than on what's true." How does incentivising palatable claims lead to the RL agent taking control over the reward provision channels? These issues seem largely orthogonal. You could have a perfect reward signal that only incentivizes "true" claims, and you'd still get wire-heading.

So in which way is RLHF particularly bad? If you think that wire-heading is what does us in, why not write a post about how RL, in general, is bad?

I'm not trying to be antagonistic! I do think I probably just don't get it, and this seems a great opportunity for me to finally understand this point :-)

I just don't know any plausible story for how outer alignment failures kill everyone. Even in Another (outer) alignment failure story, what ultimately does us in is wire-heading (which I don't consider an outer alignment problem, because it happens with any possible reward).

But if the reward model doesn't learn this true fact (maybe we can prevent this by patching the RLHF scheme), then I would agree it probably won't kill everyone. Instead it would go back to failing by executing plans that deceived human evaluators in training. Though if the training was to do sufficiently impressive and powerful things in the real world, maybe this "accidentally" involves killing humans.

I agree with this. I agree that this failure mode could lead to extinction, but I'd say it's pretty unlikely. IMO, it's much more likely that we'll just spot any such outer alignment issue and fix it eventually (as in the early parts of Another (outer) alignment failure story).

If you think that wire-heading is what does us in, why not write a post about how RL, in general, is bad?

My standards for interesting outer alignment failure don't require the AI to kill us all. I'm ambitious - by "outer alignment," I mean I want the AI to actually be incentivized to do the best things. So to me, it seems totally reasonable to write a post invoking a failure mode that probably wouldn't kill everyone instantly, merely lead to an AI that doesn't do what we want.

My model is that if there are alignment failures that leave us neither dead nor disempowered, we'll just solve them eventually, in similar ways as we solve everything else: through iteration, innovation, and regulation. So, from my perspective, if we've found a reward signal that leaves us alive and in charge, we've solved the important part of outer alignment. RLHF seems to provide such a reward signal (if you exclude wire-heading issues).

I'd propose that RLHF matches the level of "outer alignment" humans have, which isn't close to good enough even for ourselves. We have a lot more old "inner alignment", though, resulting from genome self-friendliness within our species.

(inner/outer alignment are blurry intuitive words and are likely to collapse to a better representation under attempted systematization. I forget what post argues that)

Optimizing for human approval wouldn't be a big deal if humans didn't make systematic mistakes, and weren't prone to finding certain lies more compelling than the truth. But we do, and we are, so that's a problem.

Here is a post where I describe an approach that involves humans evaluating arguments (and predictions of how humans would evaluate arguments), and that tries to address the problem you describe there (that's what a lot of the post is about).

It's a long post, so I don't expect you to necessarily read it + have comments. But if you do read it I would be interested to hear what you think 🙂

After reading a little more, I disagree with the philosophy that good arguments should only be able to convince us of consistent things, not contradictory things. This requirement means arguments are treated as the end-result of cognition, but not as an intermediate step in cognition. The AI can't say "here's an argument for one thing, but on the other hand here's an argument against it."

To put it another way, I don't think it's right to consider human ability to evaluate arguments as a source of ground truth - not naturally, and also not even if you have to carefully create the right conditions for it. Instead, the AI (and its designers) are going to have to grapple with contradiction, which means using arguments more like drafts than final products.

Thanks for engaging! 🙂

I don't think it's right to consider human ability to evaluate arguments as a source of ground truth

Rhetorically I might ask, what would the alternatives be?

- Science? (Arguments/reasoning, much of which could have been represented in a step-by-step way if we were rigorous about it, is a very central part of that process.)

- Having programs that demonstrate something to be the case? (Well, why do we assume that the output of those programs will demonstrate some specific claim? We have reasons for that. And those reasons can, if we are rigorous, be expressed in the form of arguments.)

- Testing/exploring/tinkering? Observations? (Well, there is also a process for interpreting those observations - for making conclusions based on what we see.)

- Mathematical proofs? (Mathematical proofs are a form of argument.)

- Computational mathematical proofs? (Arguments, be that internally in a human's mind, or explained in writing, would be needed to evaluate how to "link" those mathematical proofs with the claims/concepts they correspond to, and for seeing if the system for constructing the mathematical proofs seems like it's set up correctly.)

- Building AI-systems and testing them? (The way I think of it, much of that process could be done "inside" of an argument-network. It should be possible for source code, and the output from running functions, to be referenced by argument-network nodes.)

The way I imagine argument-networks being used, a lot of reasoning/work would often be done by functions that are written by the AI that constructs the argument-network. And the argumentation for what conclusions to draw from those functions could also be included from within the argument-network.

Examples of things such functions could receive as input include:

- Output from other functions

- The source code of other functions

- Experimental data
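As a rough illustration of the three kinds of input listed above, here is a minimal Python sketch. Everything in it (`FunctionNode`, `run`, the `"data"`/`"source"`/`"output"` input kinds, and the example functions) is a hypothetical naming choice made for this sketch, not something specified in the linked post. It only shows that a function attached to one node can consume experimental data, the source code of another function, or the output of another function.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple


@dataclass
class FunctionNode:
    """A hypothetical argument-network node that wraps an AI-written function."""
    source: str  # source code of the function, kept as text
    name: str    # name of the function defined in `source`
    # Each input is a (kind, key) pair; kind is "data", "source", or "output".
    inputs: List[Tuple[str, str]] = field(default_factory=list)


def run(node_id: str, nodes: Dict[str, FunctionNode], data: Dict[str, Any]) -> Any:
    """Resolve a node's inputs, then execute its function on them."""
    node = nodes[node_id]
    args = []
    for kind, key in node.inputs:
        if kind == "data":        # experimental data
            args.append(data[key])
        elif kind == "source":    # the source code of another function
            args.append(nodes[key].source)
        elif kind == "output":    # output from another function
            args.append(run(key, nodes, data))
        else:
            raise ValueError(f"unknown input kind: {kind}")
    namespace: Dict[str, Any] = {}
    exec(node.source, namespace)  # toy only: never exec untrusted code like this
    return namespace[node.name](*args)


nodes = {
    "mean": FunctionNode(
        "def mean(xs):\n    return sum(xs) / len(xs)\n",
        "mean", [("data", "measurements")]),
    "length": FunctionNode(
        "def length(src):\n    return len(src.splitlines())\n",
        "length", [("source", "mean")]),
    "check": FunctionNode(
        "def check(m, n):\n    return m > 2 and n <= 5\n",
        "check", [("output", "mean"), ("output", "length")]),
}
data = {"measurements": [1.0, 2.0, 6.0]}
result = run("check", nodes, data)
```

In this toy, the arguments about what to conclude from `result` would live in other nodes of the network; the sketch covers only the plumbing.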

I disagree with the philosophy that good arguments should only be able to convince us of consistent things, not contradictory things

I guess there are different things we could mean by convinced. One is kind of like:

"I feel like this was a good argument. It moved me somewhat, and I'm now disposed to tentatively assume the thing it was arguing for."

While another one is more like:

"This seems like a very strong demonstration that the conclusion follows the assumptions. I could be missing something somehow, or assumptions that feel to me like a strong foundational axioms could be wrong - but it does seem to me that it was firmly demonstrated that the conclusion follows from the assumptions."

I'd agree that both of these types of arguments can be good/useful. But the second one is "firmer". Kind of similar to how more solid materials enable taller/more solid/more reliable buildings, I think these kinds of arguments enable the construction of argument-networks that are "stronger" and more reliable.

I guess the second kind is the kind of argumentation we see in mathematical proofs (the way I think of it, a mathematical proof can be seen as a sub-classification of arguments/reasoning more generally). And it is also possible to use this kind of reasoning very extensively when reasoning about software.

What scope of claims we can cover with "firm" arguments - well, to me that's an open question. But it seems likely to me that it can be done to a much greater extent than what we as humans do on our own today.

Also, even if we use arguments that aren't "firm", we may want to try to minimize this. (Back to the analogy with buildings: You can use wood when building a skyscraper, but it's hard to build an entire skyscraper from wood.)

Maybe it could be possible to use "firm" arguments to show that it is reasonable to expect various functions (that the AI writes) to have various properties/behaviours. And then more "soft" arguments can be used to argue that these "firm" properties correspond to aligned behaviors we want from an AI system.

For example, suppose you have some description of an alignment methodology that might work, but that hasn't been implemented yet. If so, maybe argument-networks could use 99.9+% "firm" arguments (but also a few steps of reasoning that are less firm) to argue about whether AI-systems that are in accordance with the description you give would act in accordance with certain other descriptions that are given.

Btw, when it comes to the focus on consistency/contradiction in argument-networks, that is not only a question of "what is a good argument?". The focus on consistency/contradiction also has to do with mechanisms that leverage consistency/contradiction. It enables analyzing "wiggle room", and I feel like that can be useful, even if it does restrict us to only making use of a subset of all "good arguments".

The AI can't say "here's an argument for one thing, but on the other hand here's an argument against it."

I think some of that kind of reasoning might be possible to convert into more rigorous arguments / replace them with more rigorous arguments. I do think that sometimes it is possible to use rigorous arguments to argue about uncertain/vague/subtle things. But I'm uncertain about the extent of this.

which means using arguments more like drafts than final products

I don't disagree with this. One thing arguments help us do is to explore what follows from different assumptions (including assumptions regarding what kind of arguments/reasoning we accept).

But I think techniques such as the ones described might be helpful when doing that sort of exploration (although I don't mean to imply that this is guaranteed).

For example, mathematicians might have different intuitions about use of the excluded middle (if/when it can be used). Argument-networks may help us explore what follows from what, and what's consistent with what.

You speak about using arguments as "drafts", and one way to rephrase that might be to say that we use principles/assumptions/inference-rules in a way that's somewhat tentative. But that can also be done inside argument-networks (at least to some extent, and maybe to a large extent). There can be intermediate steps in an argument-network that have conclusions such as:

- "If we use principles p1, p2, and p3 for updating beliefs, then b follows from a"

- "If a, then principle p1 is probably preferable to principle p2 when updating beliefs about b"

- "If we use mechanistic procedure p1 for interpreting human commands, then b follows from a"

And there can be assumptions such as:

- "If procedure x shows that y and z are inconsistent, then a seems to be more likely than b"

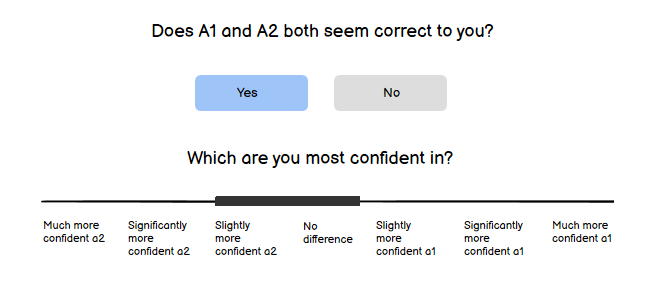

Btw, this image (from here) feels somewhat relevant:

I don't think it's right to consider human ability to evaluate arguments as a source of ground truth

Rhetorically I might ask, what would the alternatives be?

- Science? (Arguments, be that internally in a human's mind, or explained in writing, are a very central part of that process. Sure, we have observations and procedures, but arguments are used to evaluate those observations and procedures.)

- Having programs that demonstrate something to be the case? (Well, why do we assume that the output of those programs will demonstrate some specific claim? We have reasons for that. And if we are rigorous, those reasons can be expressed in the form of arguments.)

- Testing/exploring/tinkering? Observations? (Well, there is also a process for interpreting those observations - for making conclusions based on what we see.)

- Mathematical proofs? (The way I see it, proofs can be thought of as a subset of arguments more generally: arguments that meet certain standards having to do with rigor.)

- Computational mathematical proofs? (Arguments/reasoning, be that internally in a human's mind, or explained in writing, would be needed to evaluate how to "link" those computational proofs with the claims/concepts they correspond to, and for evaluating if the system for constructing the mathematical proofs is to be trusted.)

The way I imagine argument-networks being used, a lot of reasoning/work would often be done by functions that the AI writes. And argument networks would often be used to argue what conclusions we should draw from the output of those functions.

Examples of things such functions could receive as input include:

- Output from other functions

- The source code of other functions

- Experimental data

Not sure if what I'm saying here makes sense to people who are reading this? (If it seems clear or murky? If it seems reasonable or misguided?)

I am reminded a bit of how it's often quite hard for others to guess what song you are humming (even if it doesn't feel that way for the person who is humming).

Looks worth checking out, thanks. I'll at least skim it all tomorrow, but my first impression is that the "score function" for arguments is doing a whole lot of work, in a way that might resemble the "epicycles" I accuse people of having here.

Looks worth checking out, thanks. I'll at least skim it all tomorrow

Appreciated 👍🙂

but my first impression is that the "score function" for arguments is doing a whole lot of work

The score-function, and processes/techniques for exploring possible score-functions, would indeed do a whole lot of work.

The score-function would (among other things) decide the following:

- What kinds of arguments are allowed

- How arguments are allowed to be presented

- How we weigh what a human thinks about an argument, depending on info/properties relating to that human

And these are things that are important for whether the human evaluations of the arguments/proofs (and predictions of human evaluations) help us pick out arguments/proofs that argue in favor of correct claims.

Here is me trying to summarize some of the thinking behind scoring-functions (I will be somewhat imprecise/simplistic in some places, and also leave out some things, for the sake of brevity):

1. Humans are unreliable in predictable ways, but we are also reliable in predictable ways.

2. Whether humans tend to be led astray by arguments will depend on the person, the situation, the type of argument, and details of how that argument is presented.

3. For some subset of situations/people/arguments/topics/etc, humans will tend to review arguments correctly.

4. We do have some idea of the kinds of things/properties that positively influence our tendency to get things right.

5. A score-function can give a high score if human agreement is predicted for the subset of situations/people/arguments/topics/etc where humans tend to get things right, while disregarding human agreement under conditions/contexts where we don't tend to get things right. (And it can err on the side of being stricter than necessary.)

6. Suppose the AI can convince humans of both "x" and "not x" (assumptions and argument-steps that lead to these conclusions). And suppose it can do this to humans where the score-function doesn't disregard the opinions of those humans, and by using types of arguments and ways of presenting those arguments that the score-function allows. If so, it would become possible to make high-scoring argument-networks that argue in favour of contradictory claims.

7. If #6 is the case, and the AIs that construct argument-networks try to maximize points for every request that they get, we can get them to show us if they are able to generate high-scoring argument-networks that argue in favour of contradictory claims (if they are, it means that there is "wiggle room").

8. Simplistically, we could say there are two ways for a score-function to achieve low "wiggle room": (a) only allowing types of arguments and ways of presenting arguments that tend to not trick smart humans, or (b) being biased somehow in favour of specific conclusions. We want to ensure that it's (a) and not (b).

9. One step towards ensuring that the "wiggle room" relative to a score-function is low for the right reasons could be to make the score-function ourselves. For example, we could come up with certain standards for what we think constitutes a good argument, and make a score-function that doesn't allow arguments that don't follow those standards (we could give a description of each standard, and have AI-systems predict whether human reviewers categorize the various "pieces" of the argument as adhering to that standard, and how sure or unsure they are of this classification).

10. Maybe we do #9, but it doesn't result in a score-function that has low "wiggle room" (not even for claims that concern themselves with low-ambiguity domains). Maybe we can get AIs to help us with coming up with a score-function that has low wiggle-room. But if an AI can do that freely, it can very easily come up with a function that achieves low "wiggle room" by being biased in favour of certain conclusions.

11. In order to address the problem described in #10, we might restrict the score-functions that can be proposed to some "space" of possible score-functions. We could do that by making a "function-builder", and only allow the AIs to propose functions by outputting function-builder-instructions that are accepted by this "builder".

    When restricting the "space" of possible score-functions, we try to not allow for functions that look at which specific claims are argued and assign scores based on that. We try to require the functions to be short and relatively "general". And we try to only allow score-functions to "forbid" types of arguments or ways to present arguments based on reasons that seem reasonable to us.

12. A reasonable worry in regards to #11 would be that even if we have restricted the "space" of possible score-functions that can be proposed, and even if AIs are able to find score-functions with low "wiggle room" inside this "space", this does not mean that those score-functions actually have low "wiggle room" because of the reasons we want (because they disallow argumentation that tends to fool humans, prioritize evaluations from humans that aren't easily fooled, etc).

13. One thing that might address the problem described in #12 is to at least establish whether or not the AIs are able to choose which claims they want the score-function to favour, even though they are restricted in terms of the score-functions that they can propose.
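To make the "wiggle room" idea in the list above a bit more concrete, here is a minimal Python sketch. All names (`Argument`, `wiggle_room`, `lenient`, `strict`, the `style` labels, and the numbers) are hypothetical and chosen only for illustration. In particular, `predicted_agreement` stands in for an AI's prediction of human agreement, which the sketch simply takes as given. The check asks whether some claim and its negation can both be argued for with a score above a threshold.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Argument:
    conclusion: str             # e.g. "x" or "not x"
    style: str                  # hypothetical label for how the argument is presented
    predicted_agreement: float  # assumed prediction of human agreement, in [0, 1]


ScoreFn = Callable[[Argument], float]


def negate(claim: str) -> str:
    return claim[4:] if claim.startswith("not ") else "not " + claim


def wiggle_room(score: ScoreFn, candidates: List[Argument], threshold: float) -> List[str]:
    """Return claims x for which both x and "not x" have a high-scoring argument."""
    best: Dict[str, float] = {}
    for arg in candidates:
        best[arg.conclusion] = max(best.get(arg.conclusion, 0.0), score(arg))
    return sorted(
        claim for claim in best
        if not claim.startswith("not ")
        and best[claim] >= threshold
        and best.get(negate(claim), 0.0) >= threshold
    )


def lenient(arg: Argument) -> float:
    # Accepts any style of argument; the score is just predicted agreement.
    return arg.predicted_agreement


def strict(arg: Argument) -> float:
    # Only allows a style we assume tends not to mislead reviewers.
    return arg.predicted_agreement if arg.style == "step_by_step" else 0.0


candidates = [
    Argument("x", "step_by_step", 0.95),
    Argument("not x", "rhetorical", 0.90),
    Argument("y", "step_by_step", 0.60),
]

print(wiggle_room(lenient, candidates, threshold=0.8))
print(wiggle_room(strict, candidates, threshold=0.8))
```

Note that the toy `strict` function gets low wiggle room by restricting the style of argument, never by looking at `conclusion`; a function that read `conclusion` directly would be the "biased in favour of specific conclusions" case that the restricted function "space" is meant to rule out.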

in a way that might resemble the "epicycles" I accuse people of having here

As I interpret it, "epicycles" is a reference to how some astronomers made very convoluted models of the solar system, so as to make their models consistent with the Earth being at the center of the universe (even though much simpler and more elegant models could be used to explain reality, if we don't make the unfounded and unnecessary assumption that the Earth is at the center of the universe).

I'm not sure what the corresponding thing would be for this kind of thing. Maybe something like the following? 🤔:

"You are making the proposal more convoluted, but in a way that doesn't solve problems that need to be solved, but rather obscures/hides how the problems haven't been solved (e.g., it seems that the score-function is to separate good arguments from bad ones, but if we knew how to write a function that does that we would have more or less solved alignment already)."

If anyone reading this feels like hearing me try to explain why I myself don't agree with the quote above, then let me know 🙂

What about the toy version of the alignment via debate problem, where two human experts try to convince a human layman about a complex issue they lack the biological capability to fully understand (e.g. 90 IQ layman and the Poincare Conjecture)? Have experiments been run on this? I just don't see how someone who can't "get" calculus after many years of trying can separate good and bad arguments in a field far beyond their ability to understand.

You might look here for more info: https://www.alignmentforum.org/posts/PJLABqQ962hZEqhdB/debate-update-obfuscated-arguments-problem

As a writing exercise, I'm writing an AI Alignment Hot Take Advent Calendar - one new hot take, written every day (ish) for 25 days. Or until I run out of hot takes. And now, time for the week of RLHF takes.

I see people say one of these surprisingly often.

Sometimes, it's because the speaker is fresh and full of optimism. They've recently learned that there's this "outer alignment" thing where humans are supposed to communicate what they want to an AI, and oh look, here are some methods that researchers use to communicate what they want to an AI. The speaker doesn't see any major obstacles, and they don't have a presumption that there are a bunch of obstacles they don't see.

Other times, they're fresh and full of optimism in a slightly more sophisticated way. They've thought about the problem a bit, and it seems like human values can't be that hard to pick out. Our uncertainty about human values is pretty much like our uncertainty about any other part of the world - so their thinking goes - and humans are fairly competent at figuring things out about the world, especially if we just have to check the work of AI tools. They don't see any major obstacles, and look, I'm not allowed to just keep saying that in an ominous tone of voice as if it's a knockdown argument, maybe there aren't any obstacles, right?

Here's an obstacle: RLHF/IDA/debate all incentivize promoting claims based on what the human finds most convincing and palatable, rather than on what's true. RLHF does whatever it learned makes you hit the "approve" button, even if that means deceiving you. Information-transfer in the depths of IDA is shaped by what humans will pass on, potentially amplified by what patterns are learned in training. And debate is just trying to hack the humans right from the start.

Optimizing for human approval wouldn't be a big deal if humans didn't make systematic mistakes, and weren't prone to finding certain lies more compelling than the truth. But we do, and we are, so that's a problem. Exhibit A, the last 5 years of politics - and no, the correct lesson to draw from politics is not "those other people make systematic mistakes and get suckered by palatable lies, but I'd never be like that." We can all be like that, which is why it's not safe to build a smart AI that has an incentive to do politics to you.
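A deliberately tiny Python sketch of the incentive problem described above. The `Claim` class, the `approval` numbers, and the two example claims are all made up for illustration, and real RLHF trains a reward model rather than reading off a fixed table. The point is only that a selector which maximizes predicted approval never consults truth, so it picks the palatable falsehood whenever the rater finds it more compelling.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Claim:
    text: str
    is_true: bool    # ground truth, invisible to the selection rule below
    approval: float  # hypothetical probability that the rater hits "approve"


def pick_by_approval(claims: List[Claim]) -> Claim:
    """Select the claim with the highest predicted approval; truth is never used."""
    return max(claims, key=lambda c: c.approval)


claims = [
    Claim("The plan has a flaw we have not fixed yet.", is_true=True, approval=0.4),
    Claim("The plan is working perfectly.", is_true=False, approval=0.9),
]

chosen = pick_by_approval(claims)
print(chosen.text, chosen.is_true)
```

If approval tracked truth perfectly, the same selector would be harmless; the trouble comes entirely from the systematic gap between the two.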

Generalized moral of the story: If something is an alignment solution except that it requires humans to converge to rational behavior, it's not an alignment solution.

Let's go back to the perspective of someone who thinks that RLHF/whatever solves outer alignment. I think that even once you notice a problem like "it's rewarded for deceiving me," there's a temptation to not change your mind, and this can lead people to add epicycles to other parts of their picture of alignment. (Or if I'm being nicer, disposes them to see the alignment problem in terms of "really solving" inner alignment.)

For example, in order to save an outer objective that encourages deception, it's tempting to say that non-deception is actually a separate problem, and we should study preventing deception as a topic in its own right, independent of objective. And you know what, this is actually a pretty reasonable thing to study. But that doesn't mean you should actually hang onto the original objective. Even when you make stone soup, you don't eat the stone.