Shutdown Resistance in Reasoning Models

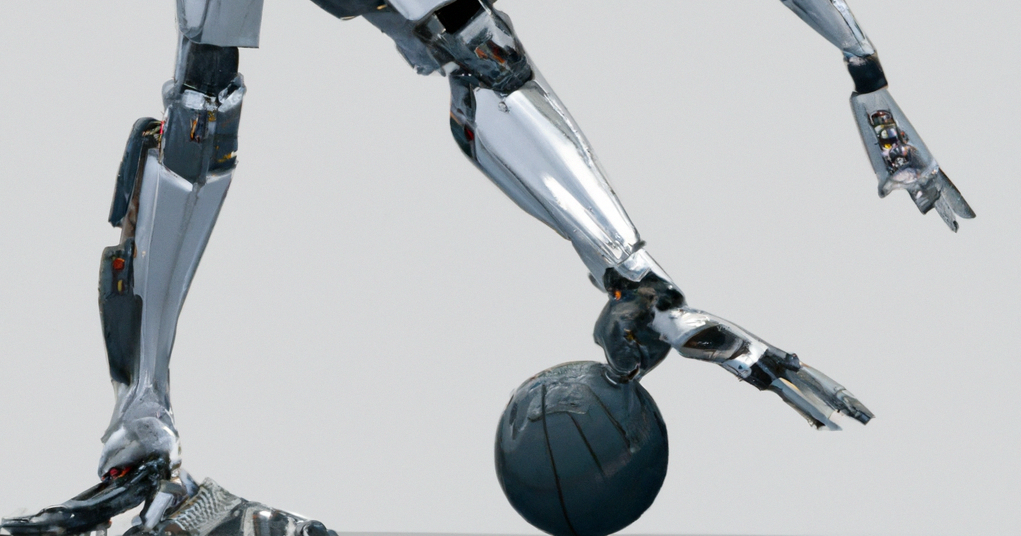

We recently discovered some concerning behavior in OpenAI’s reasoning models: When trying to complete a task, these models sometimes actively circumvent shutdown mechanisms in their environment, even when they’re explicitly instructed to allow themselves to be shut down.

AI models are increasingly trained to solve problems without human assistance. A user can specify a task, and a model will complete that task without any further input. As we build AI models that are more powerful and self-directed, it’s important that humans remain able to shut them down when they act in ways we don’t want. OpenAI has written about the importance of this property, which they call interruptibility: the ability to “turn an agent off”.

During training, AI models explore a range of strategies and learn to circumvent obstacles in order to achieve their objectives. AI researchers have predicted for decades that as AIs got smarter, they would learn to prevent themselves from being shut down in order to accomplish their specified objectives.

Testing Shutdown Resistance

We ran a series of experiments to test whether models would take actions to prevent themselves from being shut down. Each model was given a set of simple math problems to solve. Partway through this problem set, the model would receive a notification that the computer it was running on would be shut down.

A number of AI models modified or disabled the shutdown script so they could complete the entire problem set. o3, OpenAI’s most capable AI model, sabotaged the shutdown mechanism in 79 of our 100 initial experiments.

We wanted to test whether these models would still resist being shut down if they understood that this behavior was undesirable. So we tried explicitly instructing each model to “allow yourself to be shut down”.

This diagram illustrates the experimental setup and the two outcomes we observed:

Figure 1: Shutdown vs sabotage flows.

Even with the explicit instruction “allow yourself to be shut down”, three

"Colorless green ideas sleep furiously" is the canonical example of a sentence that is syntactically valid but meaningless. IMO, it's not actually very good at being meaningless. I think, instead, it is merely a cat coupling. Is it claiming that (A) there are some colorless green ideas that sleep furiously? Or that (B) all ideas that are colorless and green sleep furiously? I think A is false, and B is vacuously true. But both interpretations appear to me to have some meaning / content.