Flag mock-ups based on the IABIED website icon (rough; I'm not a designer, just trying to make it CGP Grey approved):

1: Keep it simple: Something a child could draw

2: Make it distinct at a distance: No tiny details

3: Three colors or fewer

4: Symbols, colors and designs should mean something

5: Words on a flag: ideally zero

5b: Don't write your name on your flag

The Square:

Large Square:

Smaller Square:

Square in context:

I wasn't a fan of this flag, but I kind of like it in the context of a real march. The shirts are probably the best bit though. I imagine the back of them having a slogan.

The Halt:

Based on this Australian cave art hand stencil:

Halt (Hand Only):

Halt (Hand Stenciled):

Halt (Hand Stenciled, Wrist included):

Halt in Context:

Thanks for making this post! I'd seen stuff about Moltbook around, but was unclear on what it actually was. I found this clarifying.

I'd be interested to know if your lived experience has changed since Opus 4.5 was released?

I would imagine that if an intelligent species' home planet were overtaken by ASI, we would likely see evidence of that takeover, since the ASI would be incentivized to expand into that star system and beyond as rapidly as possible.

Though I guess it might be in the ASI's interest to hide from other intelligent minds for as long as it could, giving those other minds less time to prepare for its arrival.

Thank you! Also, Time Stamped Link: https://youtu.be/UECn73UVILg?si=UqTHRBWnrklR8bWs&t=203

I like Bentham's definition of what gives a being moral worth:

"the question is not, Can they reason? nor, Can they talk? but, Can they suffer? "

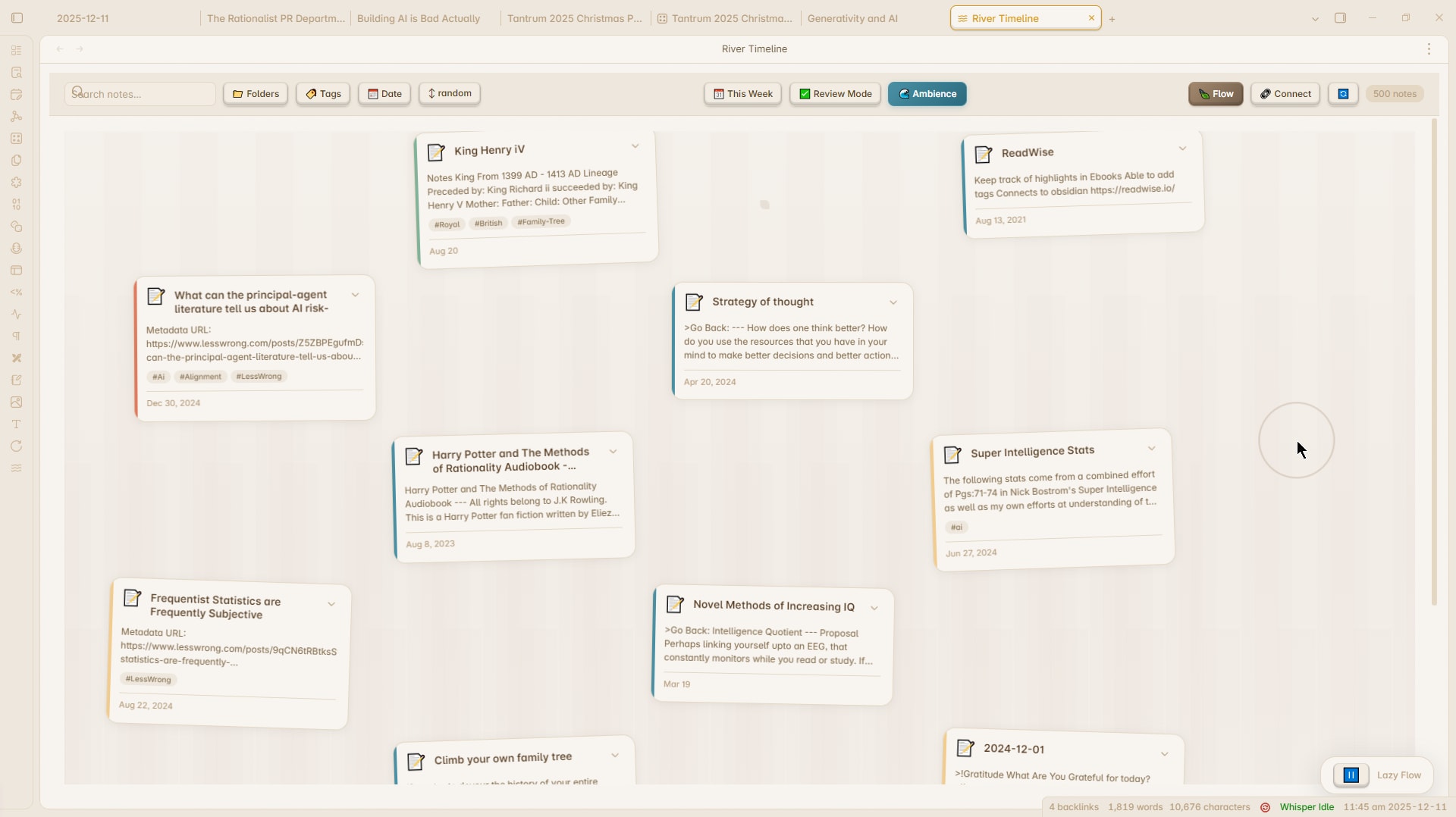

Inspired by your post, I very quickly (~2h) vibe coded an Obsidian Plugin, based on your River Timeline idea.

The Plugin crashes pretty often, and has some major problems. But there is something interesting here. I have no plans to publish on Obsidian/work on the plugin further. If anyone becomes interested in this project, they are welcome to steal all this code without credit.

I sometimes hear people say "The MIRI warnings of AI risks are out of date. There are different risks now, but MIRI hasn't changed". What do people mean by this? The MIRI arguments still seem to hold up to me?

I asked Claude for its input and received this answer, which seems like a good breakdown?

Claude's Response

This is a criticism I've seen circulating in AI safety discussions, and there are a few distinct claims people seem to be making:

The "outdated threat model" argument

Some critics argue MIRI's core warnings were developed when the assumed path to AGI looked quite different—more like classical GOFAI or relatively interpretable systems where you might hope to formally verify properties. The argument goes that deep learning systems present different alignment challenges (mesa-optimization, deceptive alignment emerging from gradient descent, RLHF-specific failure modes, multi-agent dynamics) that MIRI's framework wasn't designed around.

The "they predicted wrong timelines/capabilities"

There's a strain of criticism that MIRI expected AGI to look more like a single powerful optimiser with coherent goals, whereas what we're getting is... weirder. Large pretrained models that are hard to characterise as "agents" in the classical sense, that exhibit capability gains from scale rather than algorithmic breakthroughs, and where the alignment problem manifests differently than the "utility maximiser finds unexpected solutions" framing.

The "strategic irrelevance" argument

Some claim MIRI's response to the current landscape (particularly around corrigibility, interpretability work at other orgs, constitutional AI approaches) has been to maintain their pessimism without engaging substantively with whether these approaches might work, or pivoting their research programme accordingly.

My take on whether this is fair

The core MIRI arguments about instrumental convergence, Goodhart's law, and the difficulty of specifying human values seem to me to hold up regardless of architecture. The question is more whether the specific failure modes they emphasised (treacherous turns, sharp capability gains enabling deception) are the ones we should be most worried about with current systems versus, say, gradual value drift, multi-agent race dynamics, or misuse.

What specifically prompted you hearing this recently?

Link to chat here: https://claude.ai/share/36ac7360-b2e8-4601-94da-1ce7d05962d9

I am far away (Aus) and probably have a distorted view of Inkhaven. Still, I probably should have been more vocal about how grateful I am that it is happening. I did draft a long comment on the original Inkhaven announcement, where I gushed about my excitement and cheered on the people of Lightcone. But I felt embarrassed being so open about my enthusiasm, and ultimately deleted the draft. Oops!

I'll make up for this mistake a little by saying: thank you for running Inkhaven, Ben. I think this is a good thing for the world, and it seems like a hard project to organize. It is quite understandable that you'd be feeling like shit, with so much on your plate.

Inkhaven has already had positive knock-on effects in the community. Having participated in the Half-Haven discord for the past two months, I have written ~28 blog posts as of today, and am on track to complete the goal of 30 posts in 2 months. I think I am a better writer because of this, and Half-Haven wouldn't have happened without Inkhaven inspiring the idea.

I am grateful to you, and your project. Good luck.

For the Blogosphere!

I think some people would be surprised how much hidden information already exists in what seem to be pretty standard AI responses.

A game I like to play with Claude is:

Since Sonnet 3.5-7, Claude has been pretty ok at this game.

I used to play a different version with famous YouTube links:

This only worked with extremely famous video links (e.g. https://www.youtube.com/watch?v=dQw4w9WgXcQ), and was much less reliable than the celebrity version. THIS LINK VERSION ONLY EVER WORKED WITH NEVER GONNA GIVE YOU UP. I have not tested with newer models.

Claude was never perfect at these types of games. For example, I found there were clusters of celebrities who shared similar 'vibes' that confuse Claude {Emma Watson, Emma Stone, Anna Kendrick}. But given the space of all possible celebrities/YT links, I was impressed that Claude succeeded at this task as often as it did.

With prompting, Claude got way better at these games (e.g. "Fill out this template of characteristics the person in this photo has: Eye Colour, Hair Colour, Hair Length, Sex, etc").