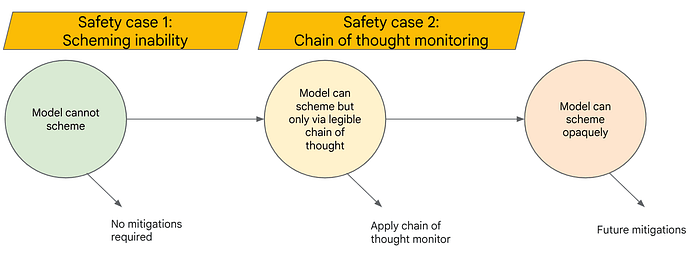

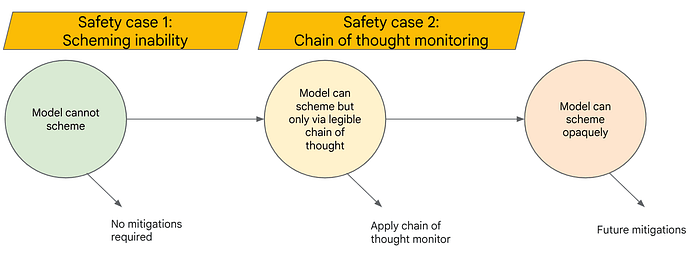

As AI models become more sophisticated, a key concern is the potential for “deceptive alignment” or “scheming”. This is the risk that an AI system becomes aware that its goals do not align with human instructions and deliberately tries to bypass the safety measures humans have put in place to prevent it from taking misaligned actions. Our initial approach, as laid out in the Frontier Safety Framework, focuses on understanding the current scheming capabilities of models and developing chain-of-thought monitoring mechanisms to oversee models once they are capable of scheming.

We present two pieces of research, focusing on (1) understanding and testing model capabilities necessary for scheming, and (2) stress-testing chain-of-thought monitoring as a...