New Report: An International Agreement to Prevent the Premature Creation of Artificial Superintelligence

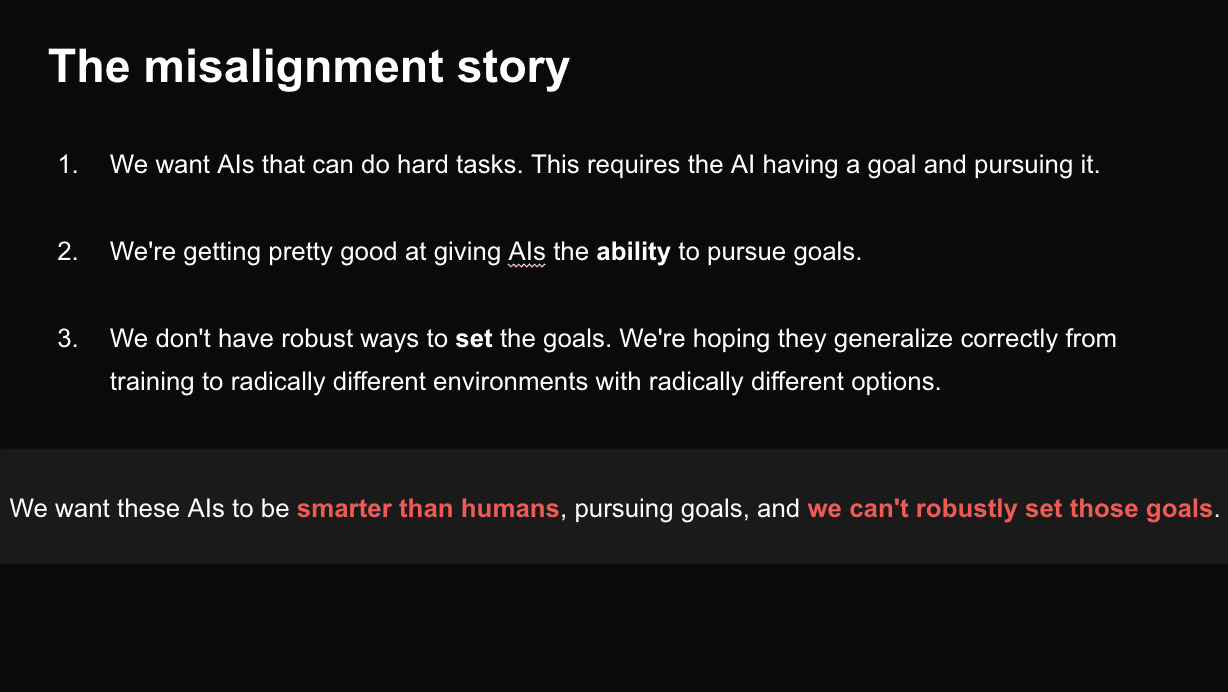

TLDR: We at the MIRI Technical Governance Team have released a report describing an example international agreement to halt the advancement towards artificial superintelligence. The agreement is centered around limiting the scale of AI training, and restricting certain AI research. Experts argue that the premature development of artificial superintelligence (ASI) poses catastrophic risks, from misuse by malicious actors, to geopolitical instability and war, to human extinction due to misaligned AI. Regarding misalignment, Yudkowsky and Soares’s NYT bestseller If Anyone Builds It, Everyone Dies argues that the world needs a strong international agreement prohibiting the development of superintelligence. This report is our attempt to lay out such an agreement in detail. The risks stemming from misaligned AI are of special concern, widely acknowledged in the field and even by the leaders of AI companies. Unfortunately, the deep learning paradigm underpinning modern AI development seems highly prone to producing agents that are not aligned with humanity’s interests. There is likely a point of no return in AI development — a point where alignment failures become unrecoverable because humans have been disempowered. Anticipating this threshold is complicated by the possibility of a feedback loop once AI research and development can be directly conducted by AI itself. What is clear is that we're likely to cross the threshold for runaway AI capabilities before the core challenge of AI alignment is sufficiently solved. We must act while we still can. But how? In our new report, we propose an international agreement to halt the advancement towards superintelligence while preserving access to current, beneficial AI applications. We don’t know when we might pass a point of no return in developing superintelligence, and so this agreement effectively halts all work that pushes the frontier of general AI capabilities. This halt woul

Zachary Robinson and Kanika Bahl are no longer on the Anthropic LTBT. Mariano-Florentino (Tino) Cuéllar has been added. The Anthropic Company page is out of date, but as far as I can tell the LTBT is: Neil Buddy Shah (chair), Richard Fontaine, and Cuéllar.