Carl Shulman is working for Leopold Aschenbrenner's "Situational Awareness" hedge fund as the Director of Research. https://whalewisdom.com/filer/situational-awareness-lp

People seem to be reacting to this as though it is bad news. Why? I'd guess the net harm caused by these investments is negligible, and this seems like a reasonable earning-to-give strategy.

Leopold himself also seems to me like a kind of low-integrity dude, and Shulman lending his skills and credibility to empowering him seems pretty bad for the world (I don't hold this take confidently, but that's where a lot of my negative reaction came from).

Situational Awareness did seem like it was basically trying to stoke a race, and it seemed to me incompatible with other things he had told me and that I had heard from others about his beliefs. Then he went on to leverage that thing, of all things, into an investment fund, which does seem like it would straightforwardly hasten the end. Like, my honest guess is that he wrote Situational Awareness largely to become more powerful in the world, not to help it orient, and turning it into a hedge fund was a bunch of evidence in that direction.

There were also some other interactions I've had with him where he made a bunch of social slap-down motions towards people taking AGI or AGI risk seriously, in ways that seemed very power-play optimized.

Again, none of this is a confident take.

I also had a negative reaction to the race-stoking and so forth, but also, I feel like you might be judging him too harshly from that evidence? Consider for example that Leopold, like me, was faced with a choice between signing the NDA and getting a huge amount of money, and like me, he chose the freedom to speak. A lot of people give me a lot of credit for that and I think they should give Leopold a similar amount of credit.

The fund manages $2 billion and (judging from the linked webpage on their holdings) puts the money into chipmakers and other AI/tech companies. The money will be used to fund development of AI hardware and probably software, leading to growth in capabilities.

If you're going to argue that $2 billion AUM is too small an amount to affect the cash flows of these companies and make developing AI easier, I think you would be incorrect (even OpenAI and Anthropic are only raising single-digit billions at the moment).

You might argue that other people would invest similar amounts anyway, so it's better for the "people in the know" to do it and "earn to give". I think that accelerating capabilities buildouts to use your cut of the profits to fund safety research is a bit like an arsonist donating to the fire station. I will also note that "if I don't do it, they'll just find somebody else" is a generic excuse built on the notion of a perfectly efficient market. In fact, this kind of reasoning allows you to do just about anything with an arbitrarily large negative impact for personal gain, so long as someone else exists who might do it if you don't.

My argument wouldn't start from "it's fully negligible". (Though I do think it's pretty negligible insofar as they're investing in big hardware & energy companies, which is most of what's visible from their public filings. Though private companies wouldn't be visible on their public filings.) Rather, it would be a quantitative argument that the value from donation opportunities is substantially larger than the harms from investing.

One intuition pump that I find helpful here: Would I think it'd be a highly cost-effective donation opportunity to donate [however much $ Carl Shulman is making] to reduce investment in AI by [however much $ Carl Shulman is counterfactually causing]? Intuitively, that seems way less cost-effective than normal, marginal donation opportunities in AI safety.

You say "I think that accelerating capabilities buildouts to use your cut of the profits to fund safety research is a bit like an arsonist donating to the fire station". I could say it's more analogous to "someone who wants to increase fire safety invests in the fireworks industry to get excess returns that they can donate to the fire station, which they estimate will reduce far more fire than their f...

> I will also note that "if I don't do it, they'll just find somebody else" is a generic excuse built on the notion of a perfectly efficient market.

The existence of the Situational Awareness Fund is specifically predicated on the assumption that markets are not efficient, and if they don't invest in AI then AI will be under-invested.

(I don't have a strong position on whether that assumption is correct, but the people running the fund ought to believe it.)

To be clear, I have for many years said to various people that their investing in AI made sense to me, including investing in (certain) AI labs (in certain times and conditions). I refrained from doing so myself because it might have created conflicts that could interfere with some of my particular advising and policy work at the time, not because I thought no one should do so.

I think a lot of rationalists accepted these Molochian offers ("build the Torment Nexus before others do it", "invest in the Torment Nexus and spend the proceeds on Torment Nexus safety") and the net result is simply that the Nexus is getting built earlier, with most safety work ending up as enabling capabilities or safetywashing. The rewards promised by Moloch have a way of receding into the future as the arms race expands, while the harms are already here and growing.

Consider the investments in AI by people like Jaan. It's possible for them to increase funding for the things they think are most helpful by sizable proportions while increasing capital for AI by <1%: there are now trillions of dollars of market cap in AI securities (so 1% of that is tens of billions of dollars), and returns have been very high. You can take a fatalist stance that nothing can be done to help with resources, but if there are worthwhile things to do then it's very plausible that for such people it works out.

> If you're going to say that e.g. export controls on AI chips and fabrication equipment, non-lab AIS research (e.g. Redwood), the CAIS extinction risk letter, and similar have meaningful expected value, or are concerned about the funding limits for such activity now,

I don't think any of these orgs are funding-constrained, so I am kind of confused about what the point of this is. It seems like without these investments all of these projects would have little problem attracting funding, and the argument would need to be that we could find $10B+ of similarly good opportunities, which seems unlikely.

> More broadly, it's possible to increase funding for those things by 100%+ while increasing capital for AI by <1%

I think it's not that hard to end up with BOTECs where the people who have already made these investments ended up causing more harm than good (correlated with them having made the investments).

In general, approximately none of this area is funding-constrained at all, or it will very soon stop being so when $30B+ of Anthropic equity starts getting distributed. The funding decisions are largely downstream of political and reputational constraints, not aggregate availability of funding. A ...

All good points and I wanted to reply with some of them, so thanks. But there's also another point where I might disagree more with LW folks (including you and Carl and maybe even Wei): I no longer believe that technological whoopsie is the main risk. I think we have enough geniuses working on the thing that technological whoopsie probably won't happen. The main risk to me now is that AI gets pretty well aligned to money and power, and then money and power throws most humans by the wayside. I've mentioned it many times; the cleanest formulation is probably in this book review.

In that light, Redwood and others are just making better tools for money and power, to help align AI to their ends. Export controls are a tool of international conflict: if they happen, they happen as part of a package of measures which basically intensify the arms race. And even the CAIS letter is now looking to me like a bit of a PR move, where Altman and others got to say they cared about risk and then went on increasing risk anyway. Not to mention the other things done by safety-conscious money, like starting OpenAI and Anthropic. You could say the biggest things that safety-conscious money achieved were basically enabling stuff that money and power wanted. So the endgame wouldn't be some kind of war between humans and AI: it would be AI simply joining up with money and power, and cutting out everyone else.

What exactly I would advise doing depends on the scale of the money. I am assuming we are talking here about a few million dollars of exposure, not $50M+:

- Diversify enough away from AI that you really genuinely know you will be personally fine even if all the AI stuff goes to zero (e.g. probably something like $2M-$3M)

- Cultivate at least a few people you talk to about big career decisions who seem multiple steps removed from similarly strong incentives

- Make public statements to the effect of being opposed to AI advancing rapidly. This has a few positive effects, I think:

- It makes it easier for you to talk about this later when you might end up in a more pressured position (e.g. when you might end up in a position to take actions that might more seriously affect overall AI progress via e.g. work on regulation)

- It reduces the degree to which you end up in relationships that seem based on false premises because e.g. people assumed you would be in favor of this given your exposure (if you e.g. hold substantial stock in a company)

- (To be clear, holding public positions like this isn't everyone's jam, and many people prefer holding no positions strongly in public)

- See whether you can use yo

@Carl_Shulman what do you intend to donate to and on what timescale?

(Personally, I am sympathetic to weighing the upside of additional resources in one’s considerations. Though I think it would be worthwhile for you to explain what kinds of things you plan to donate to & when you expect those donations to be made. With ofc the caveat that things could change etc etc.)

I also think there is more virtue in having a clear plan and/or a clear set of what gaps you see in the current funding landscape than a nebulous sense of “I will acquire resources and then hopefully figure out something good to do with them”.

I think it's extremely unfortunate that some people and institutions concerned with AI safety didn't do more of that earlier, such that they could now have more than an order of magnitude more resources and could do and have done some important things with them.

I don't really get this argument. Doesn't the x-risk community have something like 80% of its net worth in AI capability companies right now? Like, people have a huge amount of equity in Anthropic and OpenAI, which have had much higher returns than any of these other assets. I agree one could have done a bit more, but I don't see an argument here for much higher aggregate returns, definitely not an order of magnitude.

Like, my guess is we are talking about $50B+ in Anthropic equity. What is the possible decision someone could have made such that we are now talking about $500B? I mean, it's not impossible, but I do also think that money seems already at this point to be a very unlikely constraint on the kind of person who could have followed this advice, given the already existing extremely heavy investment in frontier capability companies.

I don't know the situation, but over the past couple years I've updated significantly in the direction of "if an AI safety person is doing something that looks like it's increasing x-risk, it's not because there's some secret reason why it's good actually; it's because they're increasing x-risk." Without knowing the details, my prior is a 75% chance that that's what's going on here.

Mostly indirect evidence:

- The "Situational Awareness" essays downplayed AI risk and encouraged an arms race.

- This is evidence that the investors in Aschenbrenner's fund will probably also be the sorts of people who don't care about AI risk.

- It also personally makes Aschenbrenner wealthier, which gives him more power to do more things like downplaying AI risk and encouraging an arms race.

- Investing in AI companies gives them more capital with which to build dangerous AI. I'm not super concerned about this for public companies at smaller scales, but I think it's a bigger deal for private companies, where funding matters more; I don't know if the Situational Awareness fund invests in private companies. The fund is also pretty big so it may be meaningfully accelerating timelines via investing in public companies; pretty hard to say how big that effect is.

For people who like Yudkowsky's fiction, I recommend reading his story Kindness to Kin. I think it's my favorite of his stories. It's both genuinely moving, and an interesting thought experiment about evolutionary selection pressures and kindness. See also this related tweet thread.

Zachary Robinson and Kanika Bahl are no longer on the Anthropic LTBT. Mariano-Florentino (Tino) Cuéllar has been added. The Anthropic Company page is out of date, but as far as I can tell the LTBT is: Neil Buddy Shah (chair), Richard Fontaine, and Cuéllar.

[NOW CLOSED]

MIRI Technical Governance Team is hiring, please apply and work with us!

We are looking to hire for the following roles:

- Technical Governance Researcher (2-4 hires)

- Writer (1 hire)

The roles are located in Berkeley, and we are ideally looking to hire people who can start ASAP. The team is currently Lisa Thiergart (team lead) and myself.

We will research and design technical aspects of regulation and policy that could lead to safer AI, focusing on methods that won’t break as we move towards smarter-than-human AI. We want to design policy that allows us to safely and objectively assess the risks from powerful AI, build consensus around the risks we face, and put in place measures to prevent catastrophic outcomes.

The team will likely work on:

- Limitations of current proposals such as RSPs

- Inputs into regulations, requests for comment by policy bodies (e.g. NIST/US AISI, EU, UN)

- Researching and designing alternative Safety Standards, or amendments to existing proposals

- Communicating with and consulting for policymakers and governance organizations

If you have any questions, feel free to contact me on LW or at peter@intelligence.org

I would strongly suggest considering hires who would be based in DC (or who would hop between DC and Berkeley). In my experience, being in DC (or being familiar with DC & having a network in DC) is extremely valuable for being able to shape policy discussions, know what kinds of research questions matter, know what kinds of things policymakers are paying attention to, etc.

I would go as far as to say something like "in 6 months, if MIRI's technical governance team has not achieved very much, one of my top 3 reasons for why MIRI failed would be that they did not engage enough with DC people/US policy people. As a result, they focused too much on questions that Bay Area people are interested in and too little on questions that Congressional offices and executive branch agencies are interested in. And relatedly, they didn't get enough feedback from DC people. And relatedly, even the good ideas they had didn't get communicated frequently enough or fast enough to relevant policymakers. And relatedly... etc etc."

I do understand this trades off against everyone being in the same place, which is a significant factor, but I think the cost is worth it.

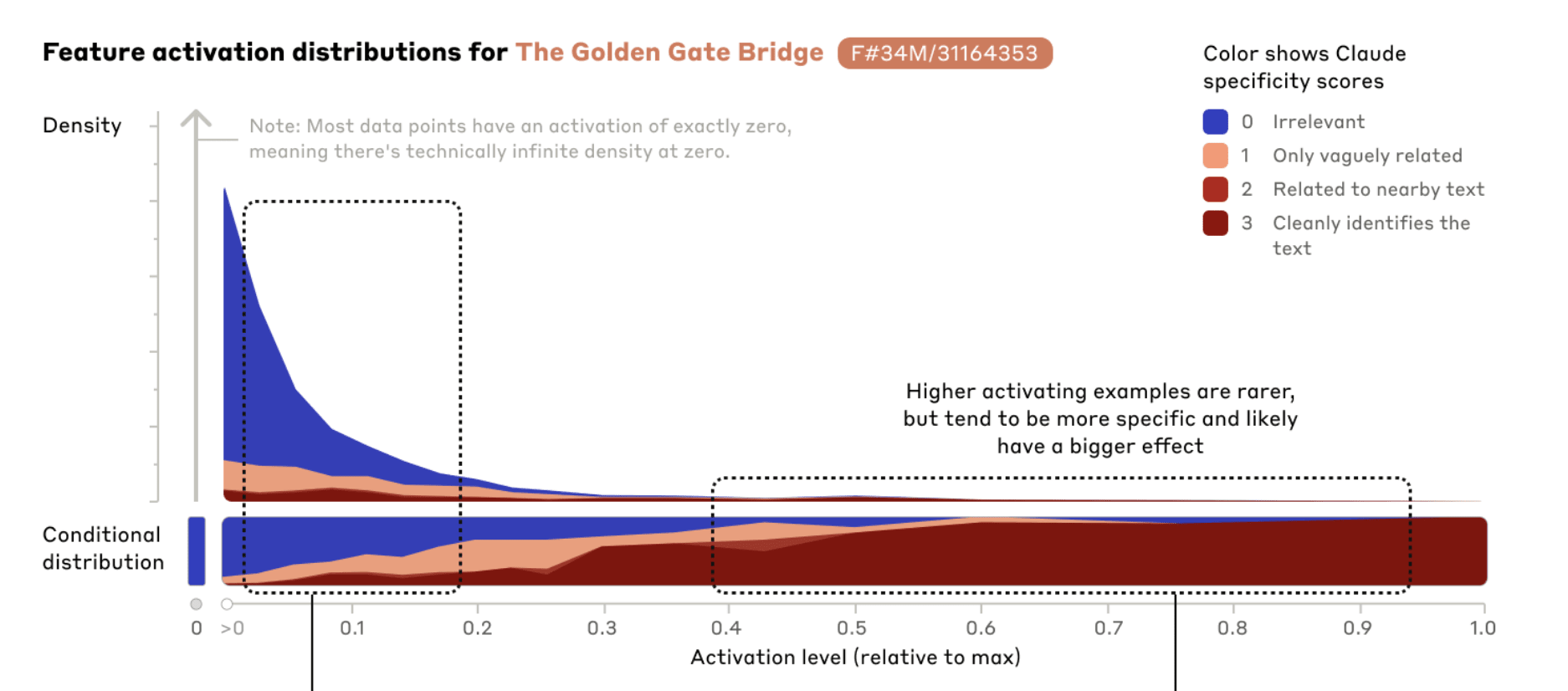

I think the Claude Sonnet Golden Gate Bridge feature is not crisply aligned with the human concept of "Golden Gate Bridge".

It brings up the San Francisco fog far more than it would if it were just the bridge itself. I think it's probably more like Golden Gate Bridge + SF fog + a bunch of other things (some SF-related, some not).

This isn't particularly surprising, given these are related ideas (both SF things), and the features were trained in an unsupervised way. But still seems kinda important that the "natural" features that SAEs find are not like exactly intuitively natural human concepts.

- It might be interesting to look at how much the SAE training data actually mentions the fog and the Golden Gate Bridge together

- I don't really think that this is super important for "fragility of value"-type concerns, but probably is important for people who think we will easily be able to understand the features/internals of LLMs
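A crude sketch of the co-occurrence check suggested in the first bullet above, assuming you can iterate over the SAE training corpus as plain-text documents. The regexes and the function name `cooccurrence_stats` are hypothetical choices for illustration, and keyword matching is only a rough proxy for a document "mentioning" the bridge or the fog. Comparing P(fog | bridge) with the baseline P(fog) gives a simple sense of how much more often fog shows up alongside the bridge than it does in general.

```python
import re
from typing import Iterable

# Hypothetical keyword patterns; a real analysis would need a more careful notion of "mentions".
BRIDGE = re.compile(r"golden gate bridge", re.IGNORECASE)
FOG = re.compile(r"\bfog\b", re.IGNORECASE)


def cooccurrence_stats(docs: Iterable[str]) -> dict:
    """Count documents mentioning the bridge, the fog, and both."""
    n = bridge = fog = both = 0
    for doc in docs:
        n += 1
        has_bridge = bool(BRIDGE.search(doc))
        has_fog = bool(FOG.search(doc))
        bridge += has_bridge
        fog += has_fog
        both += has_bridge and has_fog
    return {
        "docs": n,
        "bridge": bridge,
        "fog": fog,
        "both": both,
        # Fraction of bridge-mentioning docs that also mention fog.
        "p_fog_given_bridge": both / bridge if bridge else 0.0,
        # Baseline fraction of all docs that mention fog.
        "p_fog": fog / n if n else 0.0,
    }


sample = [
    "The Golden Gate Bridge vanished into the fog.",
    "Fog rolled over the bay.",
    "The Golden Gate Bridge is painted International Orange.",
    "I like bridges.",
]
print(cooccurrence_stats(sample))
```

If P(fog | bridge) is much larger than P(fog) on the real corpus, that would be some evidence the feature's fogginess is inherited from the training data rather than introduced by the SAE.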

Almost all of my Golden Gate Claude chats mention the fog. Here is a not particularly cherrypicked example:

> I don't really think that this is super important for "fragility of value"-type concerns, but probably is important for people who think we will easily be able to understand the features/internals of LLMs

I'm not surprised if the features aren't 100% clean, because this is after all a preliminary research prototype of a small approximation of a medium-sized version of a still sub-AGI LLM.

But I am a little more concerned that this is the first I've seen anyone notice that the cherrypicked, single, chosen example of what is apparently a straightforward, familiar, concrete (literally) concept, which people have been playing with interactively for days, is clearly dirty and not actually a 'Golden Gate Bridge feature'. This suggests it is not hard to fool a lot of people with an 'interpretable feature' which is still quite far from the human concept. And if you believe that it's not super important for fragility-of-value because it'd have feasible fixes if noticed, how do you know anyone will notice?

> I'm not surprised if the features aren't 100% clean, because this is after all a preliminary research prototype of a small approximation of a medium-sized version of a still sub-AGI LLM.

It's more like a limitation of the paradigm, imo. If the "most golden gate" direction in activation-space and the "most SF fog" direction have high cosine similarity, there isn't a way to increase activation of one of them but not the other. And this isn't only a problem for outside interpreters - it's expensive for the AI's further layers to distinguish close-together vectors, so I'd expect the AI's further layers to do it as cheaply and unreliably as works on the training distribution, and not in some extra-robust way that generalizes to clamping features at 5x their observed maximum.
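A toy illustration of the cosine-similarity point above, under simplifying assumptions: features are read out linearly as projections onto unit directions, and steering means adding a multiple of a direction to the activation. Real SAE encoders and the clamping used for Golden Gate Claude are more complicated, and the 2-D "bridge" and "fog" vectors here are made up. The point it shows is that pushing along one direction by `alpha` necessarily moves the readout of another direction by `alpha * cos(u, v)`.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unit(v):
    n = math.sqrt(dot(v, v))
    return [x / n for x in v]


def cosine(u, v):
    return dot(unit(u), unit(v))


def steer(activation, direction, alpha):
    """Additive steering: move the activation by alpha along the unit `direction`."""
    d = unit(direction)
    return [a + alpha * x for a, x in zip(activation, d)]


def read_feature(activation, direction):
    """Linear feature readout: projection onto the unit vector along `direction`."""
    return dot(activation, unit(direction))


# Made-up 2-D directions with cosine similarity 0.8.
bridge = [1.0, 0.0]
fog = [0.8, 0.6]

steered = steer([0.0, 0.0], bridge, alpha=5.0)
# Pushing along "bridge" by 5 also raises the "fog" readout by about 5 * 0.8 = 4.
print(read_feature(steered, bridge), read_feature(steered, fog))
```

Only the component of the steering vector orthogonal to "fog" avoids moving the fog readout, and for highly similar directions that component is small.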

Wow, what is going on with AI safety

Status: wow-what-is-going-on, is-everyone-insane, blurting, hope-I-don’t-regret-this

Ok, so I have recently been feeling something like “Wow, what is going on? We don’t know if anything is going to work, and we are barreling towards the precipice. Where are the adults in the room?”

People seem way too ok with the fact that we are pursuing technical agendas that we don’t know will work and if they don’t it might all be over. People who are doing politics/strategy/coordination stuff also don’t seem freaked out that they will be the only thing that saves the world when/if the attempts at technical solutions don’t work.

And maybe technical people are ok doing technical stuff because they think that the politics people will be able to stop everything when we need to. And the politics people think that the technical people are making good progress on a solution.

And maybe this is the case, and things will turn out fine. But I sure am not confident of that.

And also, obviously, being in a freaked out state all the time is probably not actually that conducive to doing the work that needs to be done.

Technical stuff

For most technical approaches to the alignment...

I am very concerned about breakthroughs in continual/online/autonomous learning because this is obviously a necessary capability for an AI to be superhuman. At the same time, I think that this might make a bunch of alignment problems more obvious, as these problems only really arise when the AI is able to learn new things. This might result in a wake up of some AI researchers at least.

Or, this could just be wishful thinking, and continual learning might allow an AI to autonomously improve without human intervention and then kill everyone.

I've seen a bunch of places where people in the AI Optimism cluster dismiss arguments that use evolution as an analogy (for anything?) because they consider them debunked by "Evolution provides no evidence for the sharp left turn". I think many people (including myself) think that piece by no means fully debunked the use of evolution arguments when discussing misalignment risk. A number of people have written what I think are good responses to that piece: many of the comments, especially this one, and some posts.

I don't really know what to do here. The arguments often look like:

A: "Here's an evolution analogy which I think backs up my claims."

B: "I think the evolution analogy has been debunked and I don't consider your argument to be valid."

A: "I disagree that the analogy has been debunked, and think evolutionary analogies are valid and useful".

The AI Optimists seem reasonably unwilling to rehash the evolution analogy argument, because they consider this settled (I hope I'm not being uncharitable here). I think this is often a reasonable move, like I'm not particularly interested in arguing about climate change or flat-earth because I do consider these settled. But I do think that the...

Is there a place that you think canonically sets forth the evolution analogy and why it concludes what it concludes in a single document? Like, a place that is legible and predictive, and with which you're satisfied as self-contained -- at least speaking for yourself, if not for others?

Are evolution analogies really that much of a crux? It seems like the evidence from evolution can't get us that far in an absolute sense (though I could imagine evolution updating someone up to a moderate probability from a super low prior?), so we should be able to talk about more object level things regardless.

Diffusion language models are probably bad for alignment and safety because there isn't a clear way to get a (faithful) Chain-of-Thought from them. Even if you can get them to generate something that looks like a CoT, compared with autoregressive LMs, there is even less reason to believe that this CoT is load-bearing and being used in a human-like way.

I think Sam Bowman's The Checklist: What Succeeding at AI Safety Will Involve is a pretty good list and I'm glad it exists. Unfortunately, I think it's very unlikely that we will manage to complete this list, given my guess at timelines. It seems very likely that the large majority of important interventions on this list will go basically unsolved.

I might go through The Checklist at some point and give my guess at success for each of the items.

Is there a name for the thing where an event happens and that specific event is somewhat surprising, but overall you would have expected something in that reference class (or level of surprisingness) to happen?

E.g. It was pretty surprising that Ivanka Trump tweeted about Situational Awareness, but I sort of expect events this surprising to happen.

In a forecasting context, you could treat it as a kind of conservation of evidence: you are surprised, and would have been wrong, in the specific prediction of 'Ivanka Trump will not tweet about short AI timelines', but you would have been right, in a way which more than offsets your loss, for your implied broader prediction of 'in the next few years, some highly influential "normie" politicians will suddenly start talking in very scale-pilled ways'.

(Assuming that's what you meant. If you simply meant "some weird shit is gonna happen because it's a big world and weird shit is always happening, and while I'm surprised this specific weird thing happened involving Ivanka, I'm no more surprised in general than usual", then I agree with Jay that you are probably looking for Law of Large Numbers or maybe Littlewood's Law.)
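The arithmetic behind "surprising specific event, unsurprising reference class" can be sketched as follows. If there are `n` independent opportunities for a weird event, each with small probability `p`, the chance that at least one happens is 1 - (1 - p)^n, which can be large even when `p` is tiny. The numbers below are the standard idealizations behind Littlewood's law (one noticed "event" per second over 8 waking hours a day, with a "miracle" being a one-in-a-million event), not claims about real life, and the independence assumption is a simplification.

```python
import math


def p_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n independent events, each with probability p, occurs."""
    return 1.0 - (1.0 - p) ** n


# Littlewood's law idealization: one noticed event per second, 8 waking hours per day.
events_per_day = 8 * 60 * 60                      # 28,800 events per day
days_to_a_million = 1_000_000 / events_per_day    # roughly a month

print(round(days_to_a_million, 1))                # 34.7
# Over a million such events, a one-in-a-million event is more likely than not: about 1 - 1/e.
print(round(p_at_least_one(1e-6, 1_000_000), 3))  # 0.632
```

Any single one-in-a-million event stays just as surprising as before; what becomes unsurprising is that some event of that rarity happens.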

Model providers often don’t provide the full CoT, and instead provide a summary. I think this is a fine/good thing to do to help prevent distillation.

However, I think it would be good if the summaries provided a flag for when the CoT contained evaluation awareness or scheming (or other potentially concerning behavior).

I worry that currently the summaries don’t really provide this information, and this probably makes alignment and capability evaluations less valid.

Another reason labs don't provide CoT is that if users see them, the labs will be incentivized to optimize for them, and this will decrease their informativeness. A flag like you propose would have a similar effect.

There's a funny and bad incentive where I want to upvote posts I haven't read to push them past the 30 Karma threshold and make them appear on the podcast feed.

My Favourite Slate Star Codex Posts

This is what I send people when they tell me they haven't read Slate Star Codex and don't know where to start.

Here are a bunch of lists of top ssc posts:

These lists are vaguely ranked in order of how confident I am that they are good:

https://guzey.com/favorite/slate-star-codex/

https://slatestarcodex.com/top-posts/

https://nothingismere.com/2015/09/12/library-of-scott-alexandria/

https://www.slatestarcodexabridged.com/ (if interested in psychology almost all the stuff here is good https://www.slatestarcodexabridged.com/Ps...