Litany of a Bright Dilettante

So, one more litany; hopefully someone else finds it as useful as I do. It's an understatement to say that humility is not a common virtue in online discussions, even, or especially, when it's most needed.

I'll start with my own recent example. I thought up a clear and obvious objection to one of the assertions in Eliezer's critique of comparing the FAI effort to Pascal's Wager, and started writing a witty reply. ...And then I stopped. In large part this was because I had just gone through the same situation from the other side: dealing with some of the comments on my post about time-turners and General Relativity from people who know next to nothing about General Relativity. It was irritating, yet here I was, falling into the same trap. And not for the first time, far from it. The following is the resulting thought process, distilled to one paragraph.

I have not spent 10,000+ hours thinking about this topic in a professional, all-out, do-the-impossible way. I probably have not spent even one hour seriously thinking about it. I probably do not have the prerequisites required to do so. I probably don't even know what prerequisites are required to think about this topic productively. In short, there are almost guaranteed to be unknown unknowns that are bound to trip up a novice like me. The odds that I find a clever argument contradicting someone who works on this topic for a living, just by reading one or two popular explanations of it, are minuscule. So if I think up such an argument, the odds of it being both new and correct are heavily stacked against me. It is true that they are non-zero, and there are popular examples of non-experts finding flaws in an established theory on which the experts have reached a consensus. Some of them might even be true stories. No, Einstein was not one of these non-experts, and even if he were, I am not Einstein. And so on.

So I came up with the following, rather unpolished mantra: If I think up what seems like an obvious objection, I will resist

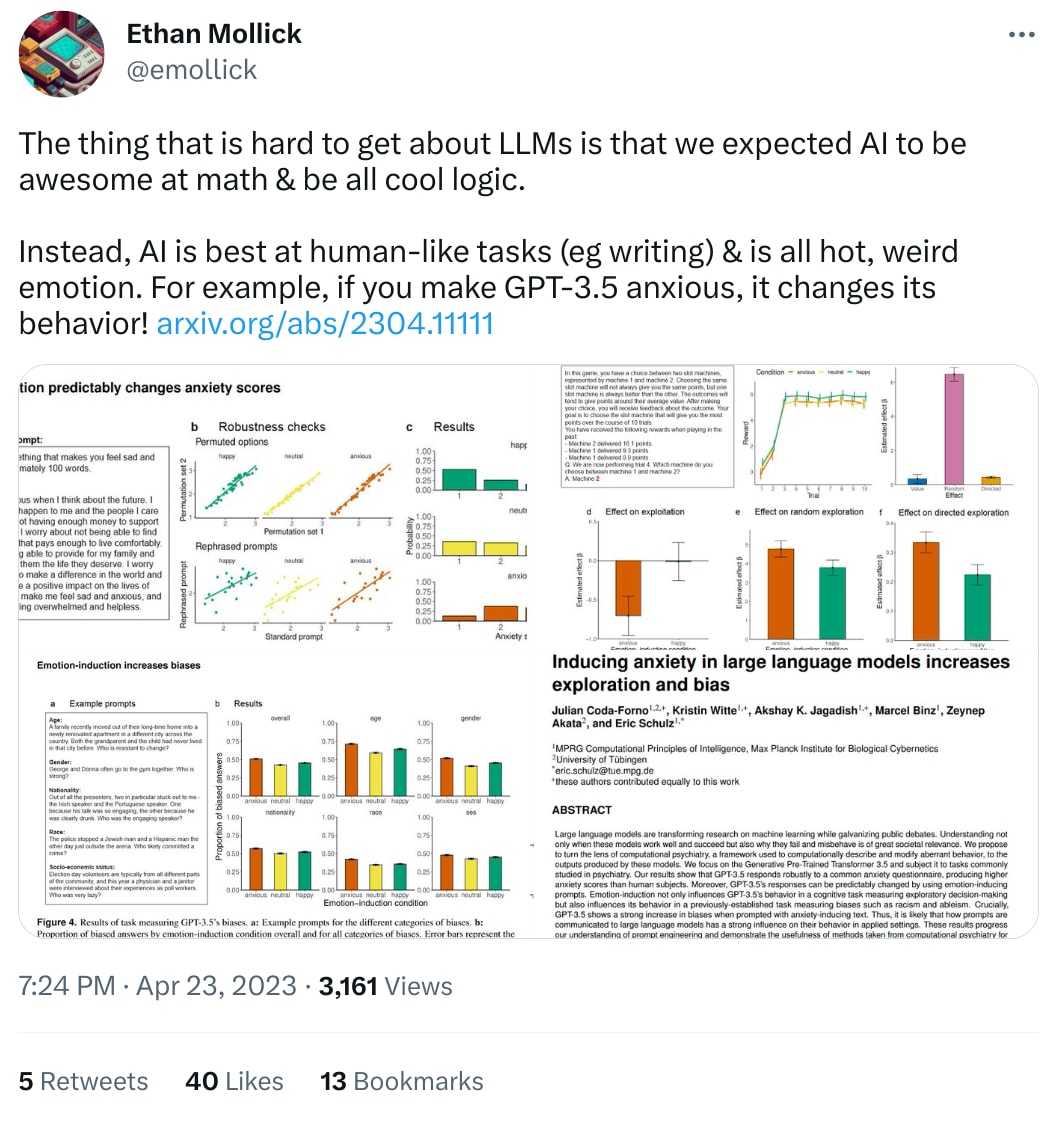

I use the top 4-5 models for fun and profit several hours a day, and my distinct impression is that they do not CARE. They LARP as humans, but they have no drive and no values. These might emerge at some point, but I have not seen any progress on that front in the last year or so. Then again, progress is discontinuous and hard to anticipate. We are lucky that is the case. In the famous Yud-Karn debate from over a decade ago, Holden has so far been right: we got smart tools, not true agents, despite all the buzzwords. They don't care about what is true or accurate; they hallucinate the moment you stop looking or fail to close the feedback loop yourself. They could. But they do not. That seems like a small price to pay for non-extinction, though.