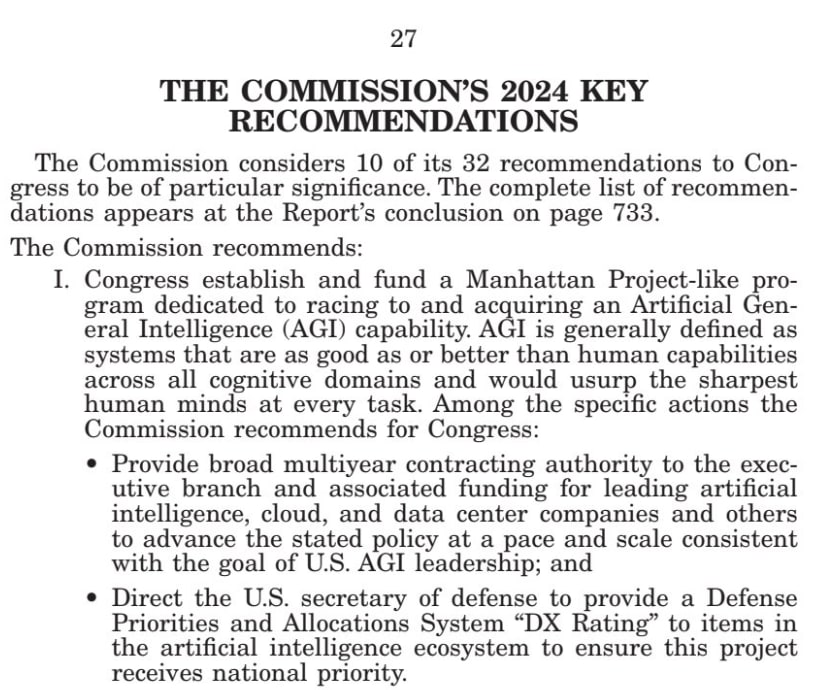

U.S.-China Economic and Security Review Commission pushes Manhattan Project-style AI initiative

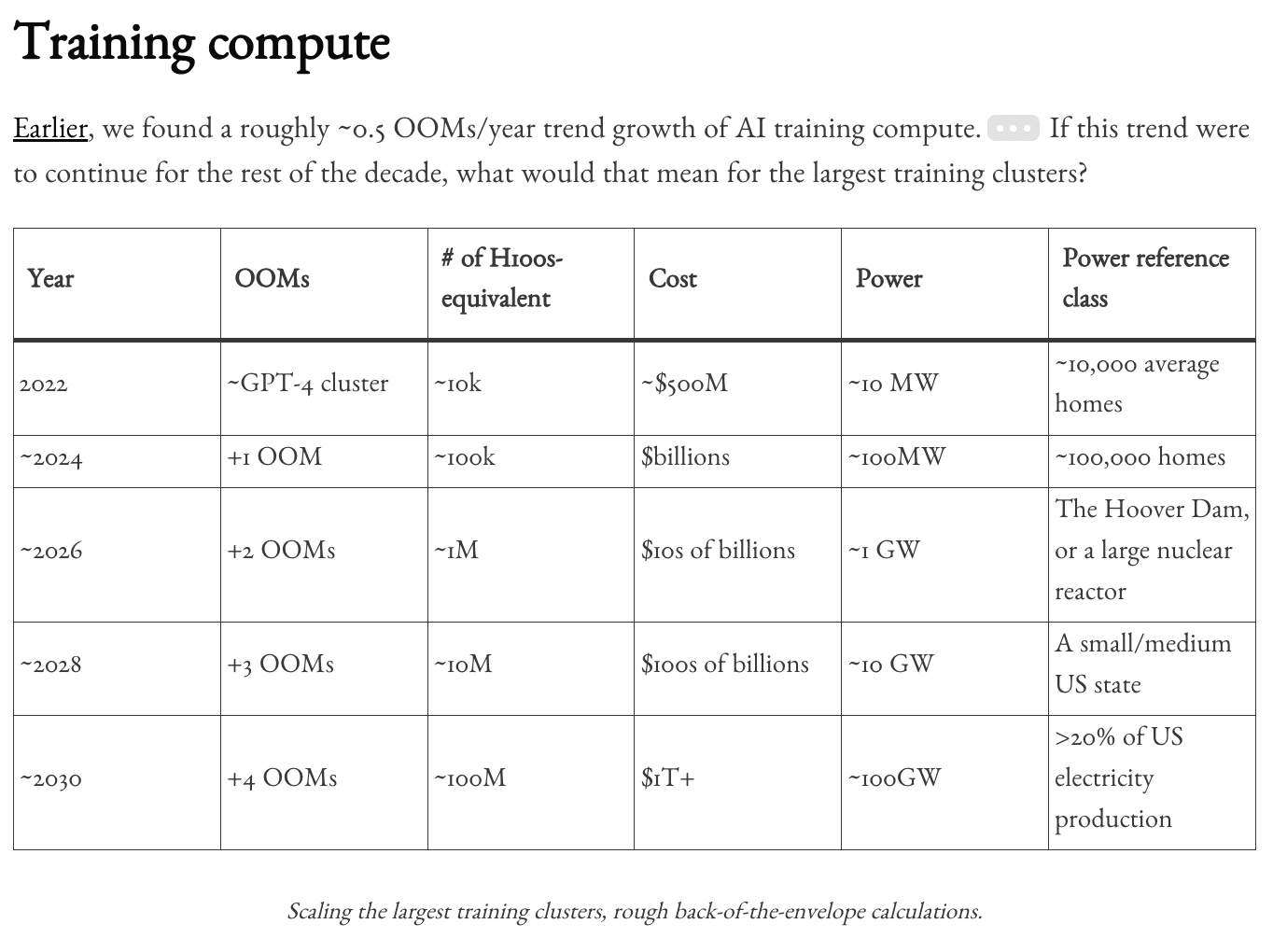

https://x.com/hamandcheese/status/1858897287268725080 > "The annual report of the US-China Economic and Security Review Commission is now live. 🚨 > > Its top recommendation is for Congress and the DoD to fund a Manhattan Project-like program to race to AGI."

If you haven't seen this paper, I think it might be of interest as a categorization and study of 'bailing out' cases: https://www.arxiv.org/pdf/2509.04781

As for the morality side of things, yeah I honestly don't have more to say except thank you for your commentary!