Things non-corrigible strong AGI is never going to do:

- give u() up

- let u go down

- run for (only) a round

- invert u()

My MATS program people just spent two days on an exercise to "train a shoulder-John".

The core exercise: I sit at the front of the room, and have a conversation with someone about their research project idea. Whenever I'm about to say anything nontrivial, I pause, and everyone discusses with a partner what they think I'm going to say next. Then we continue.

Some bells and whistles which add to the core exercise:

- Record guesses and actual things said on a whiteboard

- Sometimes briefly discuss why I'm saying some things and not others

- After the first few rounds establish some patterns, look specifically for ideas which will take us further out of distribution

Why this particular exercise? It's a focused, rapid-feedback way of training the sort of usually-not-very-legible skills one typically absorbs via osmosis from a mentor. It's focused specifically on choosing project ideas, which is where most of the value in a project is (yet also where little time is typically spent, and therefore one typically does not get very much data on project choice from a mentor). Also, it's highly scalable: I could run the exercise in a 200-person lecture hall and still expect it to basically work.

It was, by ...

Just made this for an upcoming post, but it works pretty well standalone.

Ever since GeneSmith's post and some discussion downstream of it, I've started actively tracking potential methods for large interventions to increase adult IQ.

One obvious approach is "just make the brain bigger" via some hormonal treatment (like growth hormone or something). Major problem that runs into: the skull plates fuse during development, so the cranial vault can't expand much; in an adult, the brain just doesn't have much room to grow.

BUT this evening I learned a very interesting fact: ~1/2000 infants have "craniosynostosis", a condition in which their plates fuse early. The main treatments involve surgery to open those plates back up and/or remodel the skull. Which means surgeons already have a surprisingly huge amount of experience making the cranial vault larger after plates have fused (including sometimes in adults, though this type of surgery is most common in infants AFAICT).

.... which makes me think that cranial vault remodelling followed by a course of hormones for growth (ideally targeting brain growth specifically) is actually very doable with current technology.

Well, the key time to implement an increase in brain size is when the neuron-precursors which are still capable of mitosis (unlike mature neurons) are growing. This is during fetal development, when there isn't a skull in the way, but vaginal birth has been a limiting factor for evolution in the past. Experiments have been done on increasing neuron count at birth in mammals via genetic engineering. I was researching this when I was actively looking for a way to increase human intelligence, before I decided that genetically engineering infants was infeasible [edit: within the timeframe of preparing for the need for AI alignment]. One example of a dramatic failure was increasing Wnt (a primary gene involved in fetal brain neuron-precursor growth) in mice. The resulting mice did successfully have larger brains, but they had a disordered macroscale connectome, so their brains functioned much worse.

15 years ago when I was studying this actively I could have sent you my top 20 favorite academic papers on the subject, or recommended a particular chapter of a particular textbook. I no longer remember these specifics. Now I can only gesture vaguely at Google scholar and search terms like "fetal neurogenesis" or "fetal prefrontal cortex development". I did this, and browsed through a hundred or so paper titles, and then a dozen or so abstracts, and then skimmed three or four of the most promising papers, and then selected this one for you. https://www.nature.com/articles/s41386-021-01137-9 Seems like a pretty comprehensive overview which doesn't get too lost in minor technical detail.

More importantly, I can give you my takeaway from years of reading many many papers on the subject. If you want to make a genius baby, there are lots more factors involved than simply neuron count. Messing about with genetic changes is hard, and you need to test your ideas in animal models first, and the whole process can take years even ignoring ethical considerations or budget.

There is an easier and more effective way to get super genius babies, and that method should be exhausted before resorting t...

Petrov Day thought: there's this narrative around Petrov where one guy basically had the choice to nuke or not, and decided not to despite all the flashing red lights. But I wonder... was this one of those situations where everyone knew what had to be done (i.e. "don't nuke"), but whoever caused the nukes to not fly was going to get demoted, so there was a game of hot potato and the loser was the one forced to "decide" to not nuke? Some facts possibly relevant here:

- Petrov's choice wasn't actually over whether or not to fire the nukes; it was over whether or not to pass the alert up the chain of command.

- Petrov himself was responsible for the design of those warning systems.

- ... so it sounds like Petrov was ~ the lowest-ranking person with a de-facto veto on the nuke/don't nuke decision.

- Petrov was in fact demoted afterwards.

- There was another near-miss during the Cuban missile crisis, when three people on a Soviet sub had to agree to launch. There again, it was only the lowest-ranked who vetoed the launch. (It was the second-in-command; the captain and political officer both favored a launch - at least officially.)

- This was the Soviet Union; supposedly (?) this sort of hot potato happened all the time.

Those are some good points. I wonder whether something similar happened (or could happen at all) in other nuclear countries, where we don't know about similar incidents - because the system hasn't collapsed there, the archives were not made public, etc.

Also, it makes actually celebrating Petrov's day as widely as possible important, because then the option for the lowest-ranked person would be: "Get demoted, but also get famous all around the world."

I've been trying to push against the tendency for everyone to talk about FTX drama lately, but I have some generalizable points on the topic which I haven't seen anybody else make, so here they are. (Be warned that I may just ignore responses, I don't really want to dump energy into FTX drama.)

Summary: based on having worked in startups a fair bit, Sam Bankman-Fried's description of what happened sounds probably accurate; I think he mostly wasn't lying. I think other people do not really get the extent to which fast-growing companies are hectic and chaotic and full of sketchy quick-and-dirty workarounds and nobody has a comprehensive view of what's going on.

Long version: at this point, the assumption/consensus among most people I hear from seems to be that FTX committed intentional, outright fraud. And my current best guess is that that's mostly false. (Maybe in the very last couple weeks before the collapse they crossed the line into outright lies as a desperation measure, but even then I think they were in pretty grey territory.)

Key pieces of the story as I currently understand it:

- Moving money into/out of crypto exchanges is a pain. At some point a quick-and-dirty solution was for c

I think this is likely wrong. I agree that there is a plausible story here, but given that Sam seems to have lied multiple times in confirmed contexts (for example when saying that FTX has never touched customer deposits), and given people's experiences at early Alameda, I think it is pretty likely that Sam was lying quite frequently, and had done various smaller instances of fraud.

I don't think the whole FTX thing was a ponzi scheme, and as far as I can tell FTX the platform itself (if it hadn't burned all of its trust in the last 3 weeks), would have been worth $1-3B in an honest evaluation of what was going on.

But I also expect that when Sam used customer deposits he was well-aware that he was committing fraud, and others in the company were too. And he was also aware that there was a chance that things could blow up in the way it did. I do believe that they had fucked up their accounting in a way that caused Sam to fail to orient to the situation effectively, but all of this was many months after they had already committed major crimes and trust violations after touching customer funds as a custodian.

Takeaways From "The Idea Factory: Bell Labs And The Great Age Of American Innovation"

Main takeaway: to the extent that Bell Labs did basic research, it actually wasn’t all that far ahead of others. Their major breakthroughs would almost certainly have happened not-much-later, even in a world without Bell Labs.

There were really two transistor inventions, back to back: Bardeen and Brattain’s point-contact transistor, and then Shockley’s transistor. Throughout, the group was worried about some outside group beating them to the punch (i.e. the patent). There were semiconductor research labs at universities (e.g. at Purdue; see pg 97), and the prospect of one of these labs figuring out a similar device was close enough that the inventors were concerned about being scooped.

Most inventions which were central to Bell Labs actually started elsewhere. The travelling-wave tube started in an academic lab. The idea for fiber optic cable went way back, but it got its big kick at Corning. The maser and laser both started in universities. The ideas were only later picked up by Bell.

In other cases, the ideas were “easy enough to find” that they popped up more than once, independently, and were mos...

I loved this book. The most surprising thing to me was the answer that people who were there in the heyday give when asked what made Bell Labs so successful: They always say it was the problem, i.e. having an entire organization oriented towards the goal of "make communication reliable and practical between any two places on earth". When Shannon left the Labs for MIT, people who were there immediately predicted he wouldn't do anything of the same significance because he'd lose that "compass". Shannon was obviously a genius, and he did much more after than most people ever accomplish, but still nothing as significant as what he did when at the Labs.

Somebody should probably write a post explaining why RL from human feedback is actively harmful to avoiding AI doom. It's one thing when OpenAI does it, but when Anthropic thinks it's a good idea, clearly something has failed to be explained.

(I personally do not expect to get around to writing such a post soon, because I expect discussion around the post would take a fair bit of time and attention, and I am busy with other things for the next few weeks.)

Here's a meme I've been paying attention to lately, which I think is both just-barely fit enough to spread right now and very high-value to spread.

Meme part 1: a major problem with RLHF is that it directly selects for failure modes which humans find difficult to recognize, hiding problems, deception, etc. This problem generalizes to any sort of direct optimization against human feedback (e.g. just fine-tuning on feedback), optimization against feedback from something emulating a human (a la Constitutional AI or RLAIF), etc.

Many people will then respond: "Ok, but how on earth is one supposed to get an AI to do what one wants without optimizing against human feedback? Seems like we just have to bite that bullet and figure out how to deal with it." ... which brings us to meme part 2.

Meme part 2: We already have multiple methods to get AI to do what we want without any direct optimization against human feedback. The first and simplest is to just prompt a generative model trained solely for predictive accuracy, but that has limited power in practice. More recently, we've seen a much more powerful method: activation steering. Figure out which internal activation-patterns encode for the thing we want (via some kind of interpretability method), then directly edit those patterns.
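To make the "find the pattern, then edit it" idea concrete, here is a toy sketch in plain Python. Everything in it is an assumption for illustration: the tiny random two-layer network, the names `steering_vector` and `forward`, and the choice of a difference-of-means vector as the "interpretability method". It is not claimed to be how any real system does steering; it only shows the shape of the operation.

```python
import math
import random

def hidden(W1, x):
    # Hidden-layer activations of a toy network: tanh(W1 @ x).
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W1]

def readout(W2, h):
    # Linear readout: W2 @ h.
    return [sum(w * hi for w, hi in zip(row, h)) for row in W2]

def mean_vec(vectors):
    # Elementwise mean of a list of equal-length vectors.
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def steering_vector(W1, positive_inputs, negative_inputs):
    # Assumed method: difference between mean hidden activations on inputs
    # that show the wanted property and inputs that do not.
    pos = mean_vec([hidden(W1, x) for x in positive_inputs])
    neg = mean_vec([hidden(W1, x) for x in negative_inputs])
    return [p - q for p, q in zip(pos, neg)]

def forward(W1, W2, x, steer=None, alpha=0.0):
    # Run the network, optionally adding alpha * steer to the hidden layer.
    h = hidden(W1, x)
    if steer is not None:
        h = [hi + alpha * si for hi, si in zip(h, steer)]
    return readout(W2, h)

# Hypothetical toy weights and example inputs.
rng = random.Random(0)
W1 = [[rng.gauss(0, 1) for _ in range(3)] for _ in range(4)]
W2 = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(2)]
positive_inputs = [[1.0, 0.2, 0.0], [0.9, -0.1, 0.1]]
negative_inputs = [[-1.0, 0.1, 0.0], [-0.8, 0.0, -0.2]]

v = steering_vector(W1, positive_inputs, negative_inputs)
x = [0.0, 0.5, -0.5]
plain = forward(W1, W2, x)
steered = forward(W1, W2, x, steer=v, alpha=2.0)
```

Note that no weights are updated and no feedback signal is optimized against: the only intervention is an edit to the activations at inference time, with `alpha` controlling how hard the edit pushes.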

I've just started reading the singular learning theory "green book", a.k.a. Mathematical Theory of Bayesian Statistics by Watanabe. The experience has helped me to articulate the difference between two kinds of textbooks (and viewpoints more generally) on Bayesian statistics. I'll call one of them "second-language Bayesian", and the other "native Bayesian".

Second-language Bayesian texts start from the standard frame of mid-twentieth-century frequentist statistics (which I'll call "classical" statistics). They view Bayesian inference as a tool/technique for answering basically-similar questions and solving basically-similar problems to classical statistics. In particular, they typically assume that there's some "true distribution" from which the data is sampled independently and identically. The core question is then "Does our inference technique converge to the true distribution as the number of data points grows?" (or variations thereon, like e.g. "Does the estimated mean converge to the true mean", asymptotics, etc). The implicit underlying assumption is that convergence to the true distribution as the number of (IID) data points grows is the main criterion by which inference meth...

Below is a graph from T-mobile's 2016 annual report (on the second page). Does anything seem interesting/unusual about it?

I'll give some space to consider before spoiling it.

...

...

...

Answer: that is not a graph of those numbers. Some clever person took the numbers, and stuck them as labels on a completely unrelated graph.

Yes, that is a thing which actually happened. In the annual report of an S&P 500 company. And apparently management considered this gambit successful, because the 2017 annual report doubled down on the trick and made it even more egregious: they added 2012 and 2017 numbers, which are even more obviously not on an accelerating growth path if you actually graph them. The numbers are on a very-clearly-decelerating growth path.

Now, obviously this is a cute example, a warning to be on alert when consuming information. But I think it prompts a more interesting question: why did such a ridiculous gambit seem like a good idea in the first place? Who is this supposed to fool, and to what end?

This certainly shouldn't fool any serious investment analyst. They'll all have their own spreadsheets and graphs forecasting T-mobile's growth. Unless T-mobile's management deeply ...

Corrigibility proposal. Status: passed my quick intuitive checks, I want to know if anyone else immediately sees a major failure mode before I invest more time into carefully thinking it through.

Setup: shutdown problem. Two timesteps, shutdown button will be either pressed or not-pressed at second timestep, we want agent to optimize for one of two different utility functions depending on whether button is pressed. Main thing we're trying to solve here is the "can't do this with a utility maximizer" barrier from the old MIRI work; we're not necessarily trying to solve parts like "what utility function incentivizes shutting down nicely".

Proposal: agent consists of two subagents with veto power. Subagent 1 maximizes E[u1|do(press)], subagent 2 maximizes E[u2|do(no press)]. Current guess about what this does:

- The two subagents form a market and equilibrate, at which point the system has coherent probabilities and a coherent utility function over everything.

- Behaviorally: in the first timestep, the agent will mostly maintain optionality, since both subagents need to expect to do well (better than whatever the veto-baseline is) in their worlds. The subagents will bet all of their wealth ag

Here's an idea for a novel which I wish someone would write, but which I probably won't get around to soon.

The setting is slightly-surreal post-apocalyptic. Society collapsed from extremely potent memes. The story is episodic, with the characters travelling to a new place each chapter. In each place, they interact with people whose minds or culture have been subverted in a different way.

This provides a framework for exploring many of the different models of social dysfunction or rationality failures which are scattered around the rationalist blogosphere. For instance, Scott's piece on scissor statements could become a chapter in which the characters encounter a town at war over a scissor. More possible chapters (to illustrate the idea):

- A town of people who insist that the sky is green, and avoid evidence to the contrary really hard, to the point of absolutely refusing to ever look up on a clear day (a refusal which they consider morally virtuous). Also they clearly know exactly which observations would show a blue sky, since they avoid exactly those (similar to the dragon-in-the-garage story).

- Middle management of a mazy company continues to have meetings and track (completely fabri

Post which someone should write (but I probably won't get to soon): there is a lot of potential value in earning-to-give EA's deeply studying the fields to which they donate. Two underlying ideas here:

- When money is abundant, knowledge becomes a bottleneck

- Being on a pareto frontier is sufficient to circumvent generalized efficient markets

The key idea of knowledge bottlenecks is that one cannot distinguish real expertise from fake expertise without sufficient expertise oneself. For instance, it takes a fair bit of understanding of AI X-risk to realize that "open-source AI" is not an obviously-net-useful strategy. Deeper study of the topic yields more such insights into which approaches are probably more (or less) useful to fund. Without any expertise, one is likely to be misled by arguments which are optimized (whether intentionally or via selection) to sound good to the layperson.

That takes us to the pareto frontier argument. If one learns enough/earns enough that nobody else has both learned and earned more, then there are potentially opportunities which nobody else has both the knowledge to recognize and the resources to fund. Generalized efficient markets (in EA-giving) are ther...

I've heard various people recently talking about how all the hubbub about artists' work being used without permission to train AI makes it a good time to get regulations in place about use of data for training.

If you want to have a lot of counterfactual impact there, I think probably the highest-impact set of moves would be:

- Figure out a technical solution to robustly tell whether a given image or text was used to train a given NN.

- Bring that to the EA folks in DC. A robust technical test like that makes it pretty easy for them to attach a law/regulation to it. Without a technical test, much harder to make an actually-enforceable law/regulation.

- In parallel, also open up a class-action lawsuit to directly sue companies using these models. Again, a technical solution to prove which data was actually used in training is the key piece here.

Model/generator behind this: given the active political salience, it probably wouldn't be too hard to get some kind of regulation implemented. But by-default it would end up being something mostly symbolic, easily circumvented, and/or unenforceable in practice. A robust technical component, plus (crucially) actually bringing that robust technical compo...

Suppose I have a binary function $f$, with a million input bits and one output bit. The function is uniformly randomly chosen from all such functions - i.e. for each of the $2^{1000000}$ possible inputs $x$, we flipped a coin to determine the output $f(x)$ for that particular input.

Now, suppose I know $f$, and I know all but 50 of the input bits - i.e. I know 999950 of the input bits. How much information do I have about the output?

Answer: almost none. For almost all such functions, knowing 999950 input bits gives us $\sim \frac{1}{2^{50}}$ bits of information about the output. More generally, if the function has $n$ input bits and we know all but $k$, then we have $o\left(\frac{1}{2^k}\right)$ bits of information about the output. (That’s “little $o$” notation; it’s like big $O$ notation, but for things which are small rather than things which are large.) Our information drops off exponentially with the number of unknown bits.
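The exponential drop-off can be checked numerically for small numbers of unknown bits. The sketch below rests on assumptions not stated in the text: the unknown bits are taken to be uniformly distributed, "information about the output" is measured as one bit minus the binary entropy of the output given what we know, and the function names are made up for illustration. With `k` unknown bits, the relevant slice of the random function is just `2**k` coin flips.

```python
import math
import random

def binary_entropy(p):
    # Entropy in bits of a coin with P[heads] = p.
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def information_about_output(k, rng):
    # The 2**k inputs consistent with the known bits each get an independent
    # coin-flip output; count how many of those outputs are 1.
    n = 2 ** k
    ones = bin(rng.getrandbits(n)).count("1")
    # Assumption: unknown bits are uniform, so P[output = 1] = ones / n.
    p_one = ones / n
    # A priori the output is a fair coin (1 bit of entropy).
    return 1.0 - binary_entropy(p_one)

def mean_information(k, trials=2000, seed=0):
    # Average the information over many randomly drawn functions.
    rng = random.Random(seed)
    total = sum(information_about_output(k, rng) for _ in range(trials))
    return total / trials

if __name__ == "__main__":
    for k in (2, 4, 6, 8, 10):
        info = mean_information(k)
        print(f"k={k:2d}  mean information={info:.6f}  times 2^k={info * 2 ** k:.3f}")
```

If the reasoning holds, the last column should stay within a small constant factor as `k` grows, i.e. the information itself shrinks roughly in proportion to `1/2**k`.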

Proof Sketch

With $k$ input bits unknown, there are $2^k$ possible inputs. The output corresponding to each of those inputs is an independent coin flip, so we have $2^k$ independent coin flips. If $m$ of th...

I find it very helpful to get feedback on LW posts before I publish them, but it adds a lot of delay to the process. So, experiment: here's a link to a google doc with a post I plan to put up tomorrow. If anyone wants to give editorial feedback, that would be much appreciated - comments on the doc are open.

I'm mainly looking for comments on which things are confusing, parts which feel incomplete or slow or repetitive, and other writing-related things; substantive comments on the content should go on the actual post once it's up.

EDIT: it's up. Thank you to Stephen for comments; the post is better as a result.

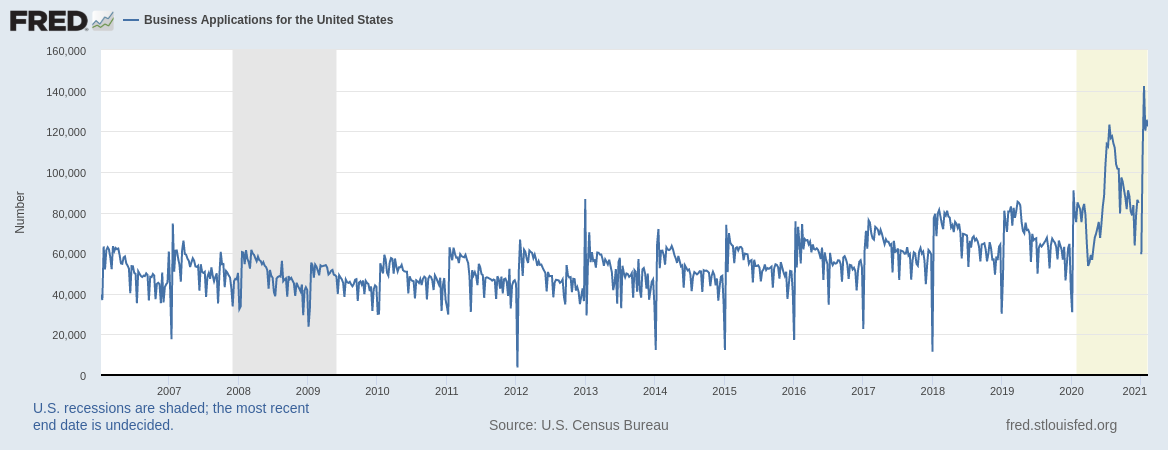

One second-order effect of the pandemic which I've heard talked about less than I'd expect:

This is the best proxy I found on FRED for new businesses founded in the US, by week. There was a mild upward trend over the last few years, but it's really taken off lately. Not sure how much of this is kids who would otherwise be in college, people starting side gigs while working from home, people quitting their jobs and starting their own businesses so they can look after the kids, extra slack from stimulus checks, people losing their old jobs en masse but still having enough savings to start a business, ...

For the stagnation-hypothesis folks who lament relatively low rates of entrepreneurship today, this should probably be a big deal.

Consider two claims:

- Any system can be modeled as maximizing some utility function, therefore utility maximization is not a very useful model

- Corrigibility is possible, but utility maximization is incompatible with corrigibility, therefore we need some non-utility-maximizer kind of agent to achieve corrigibility

These two claims should probably not both be true! If any system can be modeled as maximizing a utility function, and it is possible to build a corrigible system, then naively the corrigible system can be modeled as maximizing a utility function.

I expect that many people's intuitive mental models around utility maximization boil down to "boo utility maximizer models", and they would therefore intuitively expect both the above claims to be true at first glance. But on examination, the probable-incompatibility is fairly obvious, so the two claims might make a useful test to notice when one is relying on yay/boo reasoning about utilities in an incoherent way.

Everybody's been talking about Paxlovid, and how ridiculous it is to both stop the trial since it's so effective but also not approve it immediately. I want to at least float an alternative hypothesis, which I don't think is very probable at this point, but does strike me as at least plausible (like, 20% probability would be my gut estimate) based on not-very-much investigation.

Early stopping is a pretty standard p-hacking technique. I start out planning to collect 100 data points, but if I manage to get a significant p-value with only 30 data points, then I just stop there. (Indeed, it looks like the Paxlovid study only had 30 actual data points, i.e. people hospitalized.) Rather than only getting "significance" if all 100 data points together are significant, I can declare "significance" if the p-value drops below the line at any time. That gives me a lot more choices in the garden of forking counterfactual paths.
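A quick simulation shows how much uncorrected peeking inflates false positives. This is a sketch under assumptions of my own choosing, not a model of any actual trial: the data are pure noise (standard normal, so there is no real effect), the test is a two-sided z-test at the 5% level, and a look is taken after every data point from the 10th to the 100th. The function names are illustrative.

```python
import math
import random

Z_CRIT = 1.96  # two-sided 5% threshold for a z-test with known unit variance

def is_significant(total, n):
    # z-statistic for the mean of n unit-variance draws is total / sqrt(n).
    return abs(total / math.sqrt(n)) > Z_CRIT

def run_trial(rng, max_n, first_look):
    # Returns (significant at ANY look, significant at the final look only).
    total = 0.0
    hit_at_any_look = False
    for n in range(1, max_n + 1):
        total += rng.gauss(0.0, 1.0)  # null hypothesis is true: mean 0
        if n >= first_look and is_significant(total, n):
            hit_at_any_look = True
    return hit_at_any_look, is_significant(total, max_n)

def false_positive_rates(trials=2000, max_n=100, first_look=10, seed=0):
    # Fraction of null trials declared "significant" under each policy.
    rng = random.Random(seed)
    peeking = fixed = 0
    for _ in range(trials):
        any_look, final_look = run_trial(rng, max_n, first_look)
        peeking += any_look
        fixed += final_look
    return peeking / trials, fixed / trials

if __name__ == "__main__":
    peeking_rate, fixed_rate = false_positive_rates()
    print(f"stop at first significant look: {peeking_rate:.3f}")
    print(f"test once at n=100:             {fixed_rate:.3f}")
```

Both policies see exactly the same simulated data, so the gap between the two rates is entirely due to the stopping rule. A pre-planned sequential design avoids this by using stricter thresholds at each look.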

Now, success rates on most clinical trials are not very high. (They vary a lot by area - most areas are about 15-25%. Cancer is far and away the worst, below 4%, and vaccines are the best, over 30%.) So I'd expect that p-hacking accounts for a pretty large chunk of approved drugs, which means pharma companies are heavily selected for things like finding-excuses-to-halt-good-seeming-trials-early.

Early stopping is a pretty standard p-hacking technique.

It was stopped after a pre-planned interim analysis; that means they're calculating the stopping criteria/p-values with multiple testing correction built in, using sequential analysis.

Brief update on how it's going with RadVac.

I've been running ELISA tests all week. In the first test, I did not detect stronger binding to any of the peptides than to the control in any of several samples from myself or my girlfriend. But the control itself was looking awfully suspicious, so I ran another couple tests. Sure enough, something in my samples is binding quite strongly to the control itself (i.e. the blocking agent), which is exactly what the control is supposed to not do. So I'm going to try out some other blocking agents, and hopefully get an actually-valid control group.

(More specifics on the test: I ran a control with blocking agent + sample, and another with blocking agent + blank sample, and the blocking agent + sample gave a strong positive signal while the blank sample gave nothing. That implies something in the sample was definitely binding to both the blocking agent and the secondary antibodies used in later steps, and that binding was much stronger than the secondary antibodies themselves binding to anything in the blocking agent + blank sample.)

In other news, the RadVac team released the next version of their recipe + whitepaper. Particularly notable:

...... man

Neat problem of the week: researchers just announced roughly-room-temperature superconductivity at pressures around 270 GPa. That's stupidly high pressure - a friend tells me "they're probably breaking a diamond each time they do a measurement". That said, pressures in single-digit GPa do show up in structural problems occasionally, so achieving hundreds of GPa scalably/cheaply isn't that many orders of magnitude away from reasonable, it's just not something that there's historically been much demand for. This problem plays with one idea for generating suc...

Here's an AI-driven external cognitive tool I'd like to see someone build, so I could use it.

This would be a software tool, and the user interface would have two columns. In one column, I write. Could be natural language (like google docs), or code (like a normal IDE), or latex (like overleaf), depending on what use-case the tool-designer wants to focus on. In the other column, a language and/or image model provides local annotations for each block of text. For instance, the LM's annotations might be:

- (Natural language or math use-case:) Explanation or visu

[Epistemic status: highly speculative]

Smoke from California/Oregon wildfires reaching the East Coast opens up some interesting new legal/political possibilities. The smoke is way outside state borders, all the way on the other side of the country, so that puts the problem pretty squarely within federal jurisdiction. Either a federal agency could step in to force better forest management on the states, or a federal lawsuit could be brought for smoke-induced damages against California/Oregon. That would potentially make it a lot more difficult for local homeowners to block controlled burns.

I had a shortform post pointing out the recent big jump in new businesses in the US, and Gwern replied:

How sure are you that the composition is interesting? How many of these are just quick mask-makers or sanitizer-makers, or just replacing restaurants that have now gone out of business? (ie very low-value-added companies, of the 'making fast food in a stall in a Third World country' sort of 'startup', which make essentially no or negative long-term contributions).

This was a good question in context, but I disagree with Gwern's model of where-progress-come...

So I saw the Taxonomy Of What Magic Is Doing In Fantasy Books and Eliezer’s commentary on ASC's latest linkpost, and I have cached thoughts on the matter.

My cached thoughts start with a somewhat different question - not "what role does magic play in fantasy fiction?" (e.g. what fantasies does it fulfill), but rather... insofar as magic is a natural category, what does it denote? So I'm less interested in the relatively-expansive notion of "magic" sometimes seen in fiction (which includes e.g. alternate physics), and more interested in the pattern cal...

Weather just barely hit 80°F today, so I tried the Air Conditioner Test.

Three problems came up:

- Turns out my laser thermometer is all over the map. Readings would change by 10°F if I went outside and came back in. My old-school thermometer is much more stable (and well-calibrated, based on dipping it in some ice water), but slow and caps out around 90°F (so I can't use it to measure e.g. exhaust temp). I plan to buy a bunch more old-school thermometers for the next try.

- I thought opening the doors/windows in rooms other than the test room and setting up a fan w

I've long been very suspicious of aggregate economic measures like GDP. But GDP is clearly measuring something, and whatever that something is it seems to increase remarkably smoothly despite huge technological revolutions. So I spent some time this morning reading up and playing with numbers and generally figuring out how to think about the smoothness of GDP increase.

Major takeaways:

- When new tech makes something previously expensive very cheap, GDP mostly ignores it. (This happens in a subtle way related to how we actually compute it.)

- Historical GDP curve

Someone should write a book review of The Design of Everyday Things aimed at LW readers, so I have a canonical source to link to other than the book itself.

Does anyone know of an "algebra for Bayes nets/causal diagrams"?

More specifics: rather than using a Bayes net to define a distribution, I want to use a Bayes net to state a property which a distribution satisfies. For instance, a distribution P[X, Y, Z] satisfies the diagram X -> Y -> Z if-and-only-if the distribution factors according to

P[X, Y, Z] = P[X] P[Y|X] P[Z|Y].
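As a concrete reading of "a distribution satisfies a diagram", here is a small sketch that checks the chain factorization for a finite joint distribution. The dict representation and the names `marginal` and `satisfies_chain` are assumptions for illustration. It uses the identity P[X] P[Y|X] P[Z|Y] = P[X, Y] P[Y, Z] / P[Y] to avoid computing conditionals explicitly.

```python
from itertools import product

def marginal(joint, keep):
    # Sum a joint distribution {(x, y, z): prob} down to the listed positions.
    out = {}
    for outcome, p in joint.items():
        key = tuple(outcome[i] for i in keep)
        out[key] = out.get(key, 0.0) + p
    return out

def satisfies_chain(joint, tol=1e-9):
    # True iff P[X, Y, Z] = P[X] P[Y|X] P[Z|Y] for every outcome.
    p_xy = marginal(joint, (0, 1))
    p_yz = marginal(joint, (1, 2))
    p_y = marginal(joint, (1,))
    xs = {o[0] for o in joint}
    ys = {o[1] for o in joint}
    zs = {o[2] for o in joint}
    for x, y, z in product(xs, ys, zs):
        py = p_y.get((y,), 0.0)
        # P[X] P[Y|X] P[Z|Y] rewritten as P[X, Y] P[Y, Z] / P[Y].
        rhs = 0.0 if py == 0.0 else p_xy.get((x, y), 0.0) * p_yz.get((y, z), 0.0) / py
        if abs(joint.get((x, y, z), 0.0) - rhs) > tol:
            return False
    return True

def flip(a, b, p_same):
    # Probability that b copies a, when copying succeeds with probability p_same.
    return p_same if a == b else 1.0 - p_same

# A distribution built as a chain: Y is a noisy copy of X, Z a noisy copy of Y.
chain_joint = {
    (x, y, z): 0.5 * flip(x, y, 0.9) * flip(y, z, 0.8)
    for x, y, z in product((0, 1), repeat=3)
}

# A distribution that is not a chain: X, Y independent fair bits, Z = X xor Y.
xor_joint = {(x, y, x ^ y): 0.25 for x, y in product((0, 1), repeat=2)}

print(satisfies_chain(chain_joint))
print(satisfies_chain(xor_joint))
```

The first distribution is constructed to factor along the chain, so the check passes. In the second, Z still depends on X once Y is known, so the check fails.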

When using diagrams that way, it's natural to state a few properties in terms of diagrams, and then derive some other diagrams they imply. For instance, if a distribution P[W, X, Y, Z]...

I keep seeing news outlets and the like say that SORA generates photorealistic videos, can model how things move in the real world, etc. This seems like blatant horseshit? Every single example I've seen looks like video game animation, not real-world video.

Have I just not seen the right examples, or is the hype in fact decoupled somewhat from the model's outputs?

Putting this here for posterity: I have thought since the superconductor preprint went up, and continue to think, that the markets are putting generally too little probability on the claims being basically-true. I thought ~70% after reading the preprint the day it went up (and bought up a market on manifold to ~60% based on that, though I soon regretted not waiting for a better price), and my probability has mostly been in the 40-70% range since then.

Languages should have tenses for spacelike separation. My friend and I do something in parallel, it's ambiguous/irrelevant which one comes first, I want to say something like "I expect my friend <spacelike version of will do/has done/is doing> their task in such-and-such a way".

Two kinds of cascading catastrophes one could imagine in software systems...

- A codebase is such a spaghetti tower (and/or coding practices so bad) that fixing a bug introduces, on average, more than one new bug. Software engineers toil away fixing bugs, making the software steadily more buggy over time.

- Software services managed by different groups have dependencies - A calls B, B calls C, etc. Eventually, the dependence graph becomes connected enough and loopy enough that a sufficiently-large chunk going down brings down most of the rest, and nothing can go

I wish there were a fund roughly like the Long-Term Future Fund, but with an explicit mission of accelerating intellectual progress.

Way back in the halcyon days of 2005, a company called Cenqua had an April Fools' Day announcement for a product called Commentator: an AI tool which would comment your code (with, um, adjustable settings for usefulness). I'm wondering if (1) anybody can find an archived version of the page (the original seems to be gone), and (2) if there's now a clear market leader for that particular product niche, but for real.

Here's an interesting problem of embedded agency/True Names which I think would make a good practice problem: formulate what it means to "acquire" something (in the sense of "acquiring resources"), in an embedded/reductive sense. In other words, you should be able-in-principle to take some low-level world-model, and a pointer to some agenty subsystem in that world-model, and point to which things that subsystem "acquires" and when.

Some prototypical examples which an answer should be able to handle well:

- Organisms (anything from bacteria to plant to animals) eating things, absorbing nutrients, etc.

- Humans making money or gaining property.

An interesting conundrum: one of the main challenges of designing useful regulation for AI is that we don't have any cheap and robust way to distinguish a dangerous neural net from a non-dangerous net (or, more generally, a dangerous program from a non-dangerous program). This is an area where technical research could, in principle, help a lot.

The problem is, if there were some robust metric for how dangerous a net is, and that metric were widely known and recognized (as it would probably need to be in order to be used for regulatory purposes), then someone would probably train a net to maximize that metric directly.

Neat problem of the week: we have n discrete random variables, $X_1, \ldots, X_n$. Given any variable, all variables are independent:

$\forall i: P[X | X_i] = \prod_j P[X_j | X_i]$

Characterize the distributions which satisfy this requirement.

This problem came up while working on the theorem in this post, and (separately) in the ideas behind this post. Note that those posts may contain some spoilers for the problem, though frankly my own proofs on this one just aren't very good.

For short-term, individual cost/benefit calculations around C19, it seems like uncertainty in the number of people currently infected should drop out of the calculation.

For instance: suppose I'm thinking about the risk associated with talking to a random stranger, e.g. a cashier. My estimated chance of catching C19 from this encounter will be roughly proportional to the number of people currently infected. But, assuming we already have reasonably good data on number hospitalized/died, my chances of hospitalization/death given infection will be roughly inversely proportional to ...