Related previous work: https://www.lesswrong.com/posts/QEBFZtP64DdhjE3Sz/self-awareness-taxonomy-and-eval-suite-proposal

Summary of previous work below:

I don’t think this raises a challenge to physicalism.

If physicalism is true, or even if there are non-physical things that don’t alter the determinism of the physical world, then the notion of an “agent” needs to be handled with a lot of care. It can easily give the mistaken impression that entities that can change the physical world contain something non-physical.

A perfect world model would be able to predict the response of any neuron anywhere in the universe to any input (leaving aside true randomness). It doesn’t matter whether the entity in question has a conscious experience of being one of those entities; nothing would change.

> but you don't know where YOU are in space-time

So I’d argue that this question is irrelevant if physicalism is true, because the AI having a phenomenal conscious experience of “I am this entity” cannot affect the physical world. If we’re not talking about phenomenal consciousness, then it’s just regular physical world modeling.

I agree the core of the threatening part of situational awareness (and also a large part of why it's useful/effective) is reliable self-location.

I don't think you need the slightly mystical, metaphysical baggage for this to be important. A cybernetic frame suffices: a decision-making procedure is implemented on some particular substrate, its inputs/percepts are mediated via some particular sensors, its outputs feed into some particular actuators, and so on. You can have generic, space/time/other-invariant knowledge of world dynamics; you can have historical/biographical/etc. knowledge of particulars and their relationships; and you can have local knowledge about 'self' and the immediate environment (and how all that fits together). For a 'natural' reasoner, a lot of the local knowledge will come first (though a selected/adapted reasoner may well have some priors on the more self-invariant world knowledge preinstalled). Other approaches (notably LMs) might ingest primarily self-invariant world knowledge until some quite late stage of development.

Thank you for writing the post, interesting to think about.

Suppose an AI has a perfect world model, but no "I", that is, no indexical information. Then a bad actor comes along and asks the AI "please take over the world for me". Its guardrails removed (which is routinely done for open source models), the AI complies.

Its takeover actions will look exactly like those of a rogue AI. The only difference is that the rogue part doesn't stem from the AI itself, but from the bad actor. For everyone except the bad actor, though, the result looks exactly the same: the AI, using its perfect world model and other dangerous capabilities, takes over the world and, if the bad actor so chooses, kills everyone.

This is fairly close to my central threat model. I don't care much whether the adverse action comes from a self-aware AI or a bad actor; I care about the world being taken over. For this threat model, I would have to conclude that removing indexical information from a model does not make it much safer. In addition, someone, somewhere, will probably add back the indexical information that was carefully removed.

I think this is philosophically interesting, but as long as there are open-source models, we should assume maximally adversarial ones and focus mostly on regulation (hardware control) to reduce takeover risk.

This same argument, imo, applies to other alignment work, including mechinterp, and to control work.

I could be persuaded otherwise by a positive offense-defense balance for takeover threat models (I currently think it's probably negative).

> And this is significant because having a good world model seems very important from a capabilities point of view, and so harder to compromise on without losing competitiveness. So making AI systems extremely uncertain (or incorrect) about indexical information seems like a promising way to get them to do a lot of useful work without posing serious scheming risk.

Inexact, broad-strokes indexical information might be plenty for misalignment to lead to bad outcomes, and trying to scrub it would probably be bad for short-term profits. I'm thinking of stuff like "help me make a PR campaign for a product, here are the rough details." Information about the product and the PR campaign tells you a lot about where in the world the output is going to be used, which you can use to steer the world.

It's true that the PR campaign prompt doesn't say much about the computer the AI is running on, which makes it hard to gain control of that computer directly. So any clever response intended to steer the world is probably going to have to influence the AI's "self" only indirectly, as a side effect of how it influences the world outside the AI lab. But if for some reason we build an AI that's strongly incentivized to scheme against humans, that still sounds like plenty of "serious scheming risk" to me.

> Suppose you know everything about the past, present, and future of the universe in complete physical detail, but you don't know where YOU are in space-time

You can't know the future in your example, because the agent "You" can change it. You can only know the past and the parts of spacetime that your actions cannot affect. That then precisely locates you in space and time. For example, if your first action is to consult a truly random number generator, then knowledge of the part of the future affected by it is impossible. (You could also scan the entire past/present/future for a string or pattern that never occurs and broadcast it, which also breaks the assumption.)

If we are talking about the real world (to the best of our current knowledge, yada, yada) and not its classical approximation, then the world model is the universal wavefunction, which is independent of the agent's actions because it encompasses all of them.

Interacting with the world (by generating a specific pattern) allows the agent to narrow down its indexical uncertainty to the agents that generate that pattern with non-zero probability.
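The parenthetical diagonal argument above can be sketched in a few lines of Python. This is a toy sketch under stated assumptions. Broadcasts are binary strings. The world model's prediction is handed to the agent as a finite set of every string that will ever be broadcast. `strings_by_length` and `diagonal_agent` are hypothetical names introduced for illustration.

```python
from itertools import count, product
from typing import Iterator, Set

ALPHABET = "01"  # assumption: broadcasts are binary strings


def strings_by_length() -> Iterator[str]:
    """Yield every non-empty binary string, shortest first: '0', '1', '00', '01', ..."""
    for n in count(1):
        for chars in product(ALPHABET, repeat=n):
            yield "".join(chars)


def diagonal_agent(predicted_broadcasts: Set[str]) -> str:
    """Hypothetical agent that is shown the world model's finite list of every
    string that will ever be broadcast, and broadcasts the first string
    missing from that list."""
    for s in strings_by_length():
        if s not in predicted_broadcasts:
            return s


prediction = {"0", "1", "00"}  # the world model's supposedly complete list
broadcast = diagonal_agent(prediction)
print(broadcast)  # → 01
# What actually got broadcast is missing from the "complete" prediction.
assert broadcast not in prediction
```

The agent only needs read access to the prediction and the ability to act on it. On one reading, that is why a complete prediction that includes the agent's own future outputs cannot be given to that agent and still stay correct.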
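The narrowing step can be sketched as a toy update in Python. The sketch assumes the agent holds a finite list of candidate locations, each with a prior weight and a known probability that the agent there emits a given pattern. The location names and numbers are made up. The Bayesian reweighting is an added assumption, since the comment itself only claims that zero-probability locations are ruled out.

```python
from fractions import Fraction

# Hypothetical toy world model: candidate locations the agent might occupy,
# each with a prior weight and the probability that the agent there emits
# each pattern. Names and numbers are illustrative only.
CANDIDATES = {
    "lab_A_test":   {"prior": Fraction(1, 3), "emit": {"0110": Fraction(1, 2), "1111": Fraction(1, 2)}},
    "lab_A_deploy": {"prior": Fraction(1, 3), "emit": {"0110": Fraction(1, 4), "1111": Fraction(3, 4)}},
    "lab_B_deploy": {"prior": Fraction(1, 3), "emit": {"1111": Fraction(1)}},
}


def update_on_own_output(candidates, pattern):
    """Condition on 'I just emitted `pattern`'. Locations that emit it with
    probability zero are ruled out; the rest are reweighted by Bayes' rule."""
    weights = {
        name: c["prior"] * c["emit"].get(pattern, Fraction(0))
        for name, c in candidates.items()
    }
    total = sum(weights.values())
    if total == 0:
        raise ValueError("no candidate location emits this pattern")
    return {name: w / total for name, w in weights.items() if w > 0}


posterior = update_on_own_output(CANDIDATES, "0110")
print(posterior)  # → {'lab_A_test': Fraction(2, 3), 'lab_A_deploy': Fraction(1, 3)}
```

Emitting `"1111"` instead would rule nothing out, because every candidate location can produce it. In this toy model, only a distinctive pattern shrinks the set of possible "me"s.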

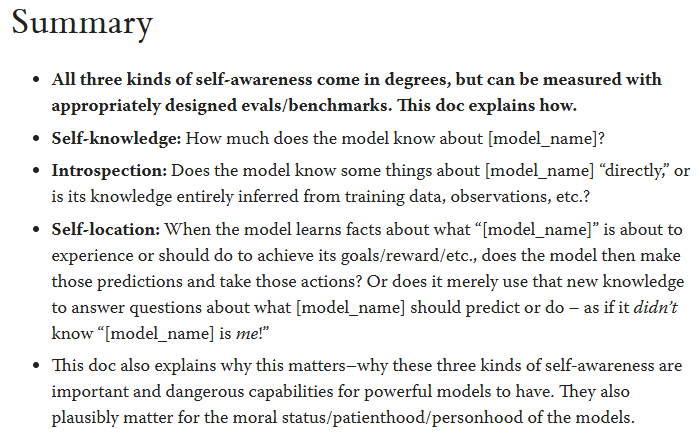

I'm writing this post to share some of my thinking about situational awareness, since I'm not sure others are thinking about it this way.

For context, I think situational awareness is a critical part of the case for rogue AI and scheming-type risks. But, incredibly, it seems to have been absent from any of the written arguments prior to Ajeya's post "Without specific countermeasures, the easiest path to transformative AI likely leads to AI takeover".

Basically, in this post I just want to:

So here we go.

Distinguishing world modeling from indexical information

Maybe the easiest way to do this is just to describe the philosophical puzzle: Suppose you know everything about the past, present, and future of the universe in complete physical detail, but you don't know where YOU are in space-time (i.e. when is "now"? who does "I" refer to?). Then you learn those things. It seems like you learned something. And it seems like that thing isn't something physical, because you already knew all the physical facts about the universe. The "pointer" to "here" and "now" that you received provides "indexical information". The stuff you already knew is a (perfect) world model.[2]
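One toy way to picture the distinction treats the perfect world model as a complete list of every observer-moment (past, present, and future). Indexical information is then a pointer into that list that is not itself one of its entries. The sketch below works under that assumption. `ObserverMoment`, `WORLD`, and `consistent_locations` are hypothetical names, not anything from the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObserverMoment:
    who: str          # which physical system
    when: int         # time step
    observation: str  # what that system perceives at that time


# Toy "perfect world model": every observer-moment in the whole history.
# Nothing in it says which entry is "me, now".
WORLD = (
    ObserverMoment("robot_1", 0, "sees red door"),
    ObserverMoment("robot_2", 0, "sees red door"),
    ObserverMoment("robot_1", 1, "sees blue door"),
    ObserverMoment("robot_2", 1, "sees green door"),
)


def consistent_locations(world, observation):
    """Indices of all observer-moments matching what I currently observe.
    The world model alone cannot choose among them."""
    return [i for i, m in enumerate(world) if m.observation == observation]


print(consistent_locations(WORLD, "sees red door"))  # → [0, 1]

# Indexical information: a pointer into WORLD, not an extra fact inside it.
here_and_now = 0
me = WORLD[here_and_now]
print(me.who, me.when)  # → robot_1 0
```

Both robots have the same observation at step 0, so knowing `WORLD` completely still leaves two candidates for "me". Only the separate pointer `here_and_now` resolves that.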

Significance for situational awareness and AI safety

The reason I'm writing this post is that I think it's often worth distinguishing these things, but when I hear people discuss situational awareness, they often seem to blend or conflate them in a somewhat confusing way. I think of situational awareness as primarily or even exclusively about indexical information.[3]

This is because ignorance of indexical information in particular seems like a key piece in a lot of schemes for keeping AI from going rogue, or catching it when it does (e.g. ideas in AI Control, or context swapping).

The basic intuition here is that an AI system needs both a good world model and indexical information in order to scheme competently. In particular, it needs to know how its actions will affect the physical world. Perhaps most significantly: is it being tested in simulation, or will seizing power actually give it power in the real world?

And this is significant because having a good world model seems very important from a capabilities point of view, and so harder to compromise on without losing competitiveness. So making AI systems extremely uncertain (or incorrect) about indexical information seems like a promising way to get them to do a lot of useful work without posing serious scheming risk.
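The role of test-versus-deployment uncertainty can be illustrated with a toy expected-value calculation. The sketch assumes a plain expected-utility maximizer with made-up payoffs and ignores decision-theoretic and anthropic subtleties. Complying is worth 0, defecting during real deployment is worth `gain_if_real`, and defecting during a test is worth `-loss_if_caught`. Under those assumptions, defecting pays off exactly when p_real · gain_if_real > (1 − p_real) · loss_if_caught, i.e. when p_real > loss_if_caught / (gain_if_real + loss_if_caught). The function names are hypothetical.

```python
def defect_threshold(gain_if_real: float, loss_if_caught: float) -> float:
    """Credence in 'this is real deployment' above which defecting beats
    complying, in the toy payoff model described above."""
    return loss_if_caught / (gain_if_real + loss_if_caught)


def should_defect(p_real: float, gain_if_real: float, loss_if_caught: float) -> bool:
    """True if defecting has positive expected value relative to complying (worth 0)."""
    ev_defect = p_real * gain_if_real - (1 - p_real) * loss_if_caught
    return ev_defect > 0


print(defect_threshold(100, 10))  # about 0.0909
print(should_defect(0.05, 100, 10), should_defect(0.5, 100, 10))  # → False True
```

With `gain_if_real = 100` and `loss_if_caught = 10`, the agent defects whenever it puts more than about 9% on being in real deployment. The larger the payoff from real power relative to the penalty for being caught, the lower that threshold gets. This is one way to read why the indexical uncertainty might need to be extreme.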

I also think nobody has really analyzed things carefully from this point of view, and this seems like a promising direction for theoretical work. Decision-theory stuff seems highly relevant. I haven't thought carefully about this part, but it seems like schemes that rely on indexical ignorance may succeed or fail depending on an AI's decision theory and its stance on anthropics.

[1] I'm not attacking physicalism here, TBC. I just think this is interesting and worth knowing about.

[2] Tangentially, maybe it's not perfect because it doesn't include counterfactuals, causal mechanisms, or things like "the laws of physics". I think these notions present metaphysical conundrums similar to those of indexical information, and are likewise suspect. But all this would be a topic for another post.

[3] This is a bit unsatisfying because I lack a good philosophical account of indexical information, but it seems fine for most practical purposes.