I don't think people here realize what an incredible opportunity this is, since the arguments are now going to be field-tested on tons of people. It was written pretty efficiently here, too.

But mainly, it's horrifically bad news, because AI safety is now in the hands of extremely nefarious shadow people using it at large scale for god-knows-what. Left-right polarization is the main bad outcome, but it is by no means the only one. There are Russian bots out there, and they're as bad as any nihilistic "for the lulz" hacker from that one trope.

Hopefully it peters out without doing too much damage, e.g. setting attitudes in stone in ways that will last decades.

> because AI safety is now in the hands of extremely nefarious shadow people using it at large scale for god-knows-what.

The public will end up with a somewhat garbled and politicized picture. That is still more accurate than their current picture, which is based on Hollywood sci-fi.

MIRI will carry on being MIRI. The Russian botnet creators aren't smart enough to make an actual cutting-edge AI, and they aren't as good at creating compelling nonsense as Hollywood. Most people have a kind-of-OK perception of climate change despite the botnets, the oil companies, and the green nuts.

> The fact that Future Now ran the story implies that the masses are, in fact, capable of understanding the arguments for AI risk, so long as those arguments come from someone who sounds vaguely like an authority figure.

The masses are capable of understanding AI risk even without that. It's really not hard to understand; the basic premise is the subject of dozens of movies and books made by people way dumber than Eliezer Yudkowsky. If you went to any Twitter thread after the LaMDA sentience story broke, you could see half of the people in the comments half-joking about how this is just like The Terminator and they need to shut it off right now.

Maybe they're using the "wrong" arguments, and they certainly don't have a model of the problem detailed enough to really deal with it, but a sizable number of people have at least some kind of model (maybe a xenophobia heuristic?) that lets them come to the right conclusion anyway. They just never really think to do anything about it, because 99% of the public believes artificial intelligence is 80 years away.

I've seen a lot of countersentiment to the idea of AI Safety, though:

(I have a collection of ~20 of these, which I'll probably make a top-level post.)

Totally agree, but the very basics (if we make something smarter than us and don't give it the right objective, it'll kill us) seem parseable to a large fraction of the general population. I'm not saying they wouldn't change their minds if their favorite politician put out a "debunking video", but the seeds are at least there.

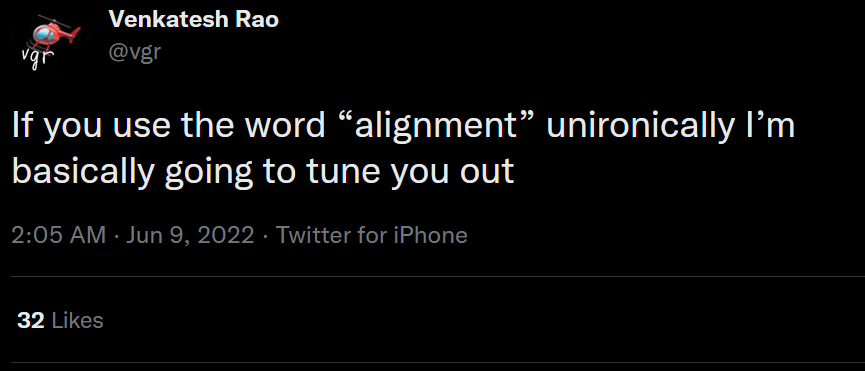

I was surprised by this tweet, so I looked it up. I read a bit further and ran into this; I guess I'm kind of surprised to see a concern as fundamental as alignment, whether or not you agree it is a major issue, be so... is polarizing the right word? Is this an issue we can expect to see grow as AI safety (hopefully) becomes more mainstream? "LW extended cinematic universe" culture getting an increasingly bad reputation seems like it would be extremely devastating for alignment goals in general.

Reputation is a vector, not a scalar. A certain subsection of the internet produces snarky drivel. This includes creationists creating snarky drivel against evolution, and probably some evolutionists creating snarky drivel against creationists.

Why are they producing snarky drivel about AI now? Because the ideas have finally trickled down to them.

Meanwhile, the more rational people ignore the snarky drivel.

Cool, but the less rational people's opinions are influential, so it's important to mitigate their effect.

The news tiles on Snapchat are utter drivel. Looking at my phone, today's headlines are: "Zendaya Pregnancy Rumors!", "Why Hollywood Won't Cast Lucas Cruikshank", and "The Most Dangerous Hood in Puerto Rico". Essentially, Snapchat news is the Gen-Z equivalent of the tabloid section at a Walmart checkout aisle.

Which is why I was so surprised to hear it tell me the arguments for AI risk.

The story isn't exactly epistemically rigorous. However, it reiterates points I've heard on this site, including recursive self-improvement, FOOMing, and the hidden complexity of wishes. Here's an excerpt of the story, transcribed by me:

Why does this matter? The channel, Future Now, has 110,000 subscribers(!), and the story was likely seen by many others. This is the first time I've ever seen anyone seriously attempt to explain AI risk to the masses.

The fact that Future Now ran the story implies that the masses are, in fact, capable of understanding the arguments for AI risk, so long as those arguments come from someone who sounds vaguely like an authority figure.