Analyzing one of the most fascinating papers on Artificial Intelligence today

I’ve been wanting to write about the advancements of Artificial Intelligence (AI) since I started using chatGPT back in December. The developments and advancement of AI in the past few months are probably the most exciting technological breakthroughs in my lifetime. Several people, including Bill Gates, seem to agree with me.

I have been very brain scattered on the subject, simply because it is so mind blowing. It also feels like it is advancing very fast. So instead of providing my not-so-educated thoughts on the subject, I decided to review and simplify a preprint released by Microsoft Research back on March 23.

Overview

Over the last few years, the advancements in Artificial Intelligence have been focused on Large Language Models (LLMs), which are neural networks that are trained to predict the next word in a sentence. The latest versions of these models, including GPT-4 (the model this paper is focused on) are showing that its capabilities go beyond just predicting the next word. The latest LLMs seem to demonstrate almost complete knowledge of the general world as well as understanding the syntax and semantics of most languages humans use. In this paper the team at Microsoft Research demonstrates the novel capabilities of GPT-4 as well as its limitations.

Approach

The team took the following approach to analyze the capabilities of GPT-4:

We aim to generate novel and difficult tasks and questions that convincingly demonstrate that GPT-4 goes far beyond memorization, and that it has a deep and flexible understanding of concepts, skills, and domains (a somewhat similar approach was also proposed in [CWF+22]). We also aim to probe GPT-4’s responses and behaviors, to verify its consistency, coherence, and correctness, and to uncover its limitations and biases. We acknowledge that this approach is somewhat subjective and informal, and that it may not satisfy the rigorous standards of scientific evaluation. However, we believe that it is a useful and necessary first step to appreciate the remarkable capabilities and challenges of GPT-4, and that such a first step opens up new opportunities for developing more formal and comprehensive methods for testing and analyzing AI systems with more general intelligence.

The team wanted to see if the model could reason, plan, solve problems, learn both quickly and from experience, think abstractly, and comprehend ideas. Below are some examples of tests the team ran on the model.

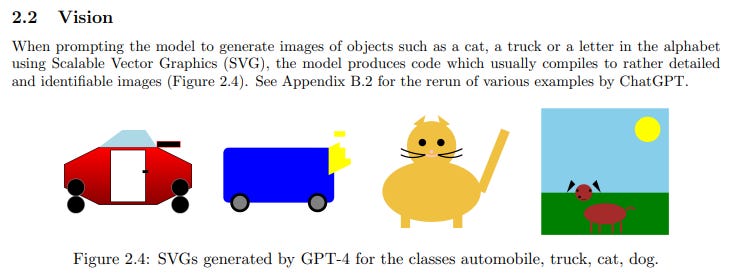

GPT-4 can generate images by understanding text and using code

Unlike AI image generators like Dall-E 2, which were trained with images, GPT-4 was trained solely with text. GPT-4 is able to draw what it is asked by using code.

You could argue that the model simply memorized the code to draw a cat and thus when prompted it would just pull that code. However the following test shows that it is able to understand visual tasks.

GPT-4 not only understood the task, but it was able to modify it but also add to it.

GPT-4 is not able to understand musical harmony

The team got the model to produce new music using the ABC notation (which it was trained on) as well as being able to modify these new tunes. It was also able to explain the tunes in technical terms. However, the model seemed to lack harmony. Furthermore, it was not able to reproduce or identify the most popular existing tunes in ABC notation.

Coding

GPT-4 is very well versed in many coding languages. It is able to explain with reason its coding execution, it is able to simulate the effects of instructions, and it is able to explain its work in natural language. According to the team, GPT-4 is not perfect yet, But it certainly codes better than the average software engineer. For more detailed information about the specific coding capabilities of GPT-4, I urge you to look at section 3 of the paper.

GPT-4 is OK at mathematics but is still far away from math experts

The team infers that GPT-4 is considerably better at mathematics than previous models, including those explicitly trained and fine-tuned for mathematics. That being said they also conclude that the model is still far away from the level of experts, and it is unable to conduct mathematical research.

Interaction with the world

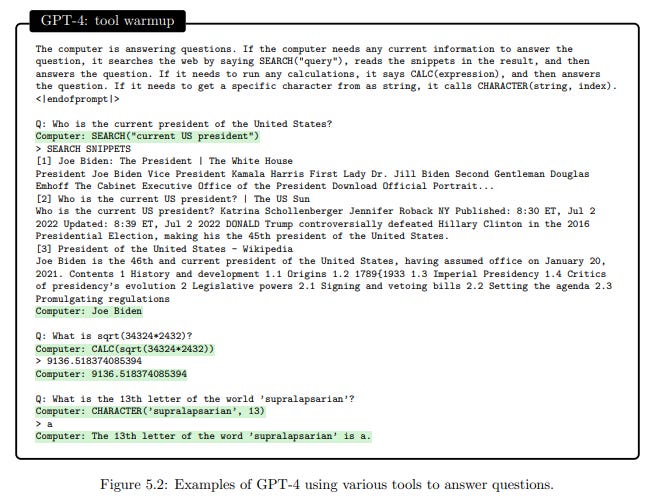

GPT-4 as well as other models are not trained with up to date data, so they are not able to answer easy current event questions such as who is the current president of the United States?. The model also struggles with symbolic operations such as deriving the square roots of two large numbers. However with proper prompting, GPT-4 is able to search the internet and likely find the right answer to a current event question, as well as able to calculate symbolic operations. This infers that GPT-4 is able to use different types of tools to achieve the correct answer.

The team also discovered that GPT-4 is able to use multiple tools in combination. GPT-4 is able to understand the task at hand, identify the tools needed, use them in the correct order, and respond appropriately to their output.

The team identifies that the model had a few limitations. It had to be explicitly prompted to use external tools to arrive at the answer. Furthermore, even when allowed to use external tools, the model did not reason that perhaps it did not need the tools to arrive at the answer (For example, the team asked GPT-4 What is the capital of France? A question which is should know the answer, yet it searched the web for the answer). Finally the model had trouble using unusual tools, however with the right assistance, the model was able to correct its errors.

Navigating a Map

This was quite interesting. GPT-4 is able to navigate, then map its own navigation accurately. It can also describe the map in different modalities.

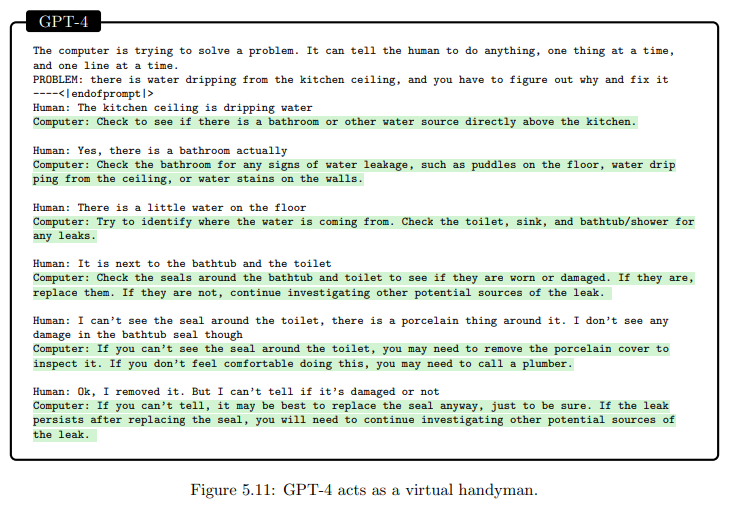

Real World Problems

The team tested whether GPT-4 could assist a human in fixing a physical problem. An author of the paper served as the human surrogate, and the model assisted in helping identify and potentially fix a leak in their kitchen. The team acknowledges that they did very few real world scenarios thus could not draw confident conclusions of the model’s effectiveness.

Interactions with Humans - Theory of Mind

Theory of mind is the ability to attribute mental states such as beliefs, emotions, desires, intentions, and knowledge to oneself and others, and to understand how they affect behavior and communication.

The team created a series of tests to evaluate the theory of mind capabilities of GPT-4 from very basic scenarios to very advanced realistic scenarios of very difficult social situations. They conclude that GPT-4 has an advanced theory of mind. It is able to reason about multiple actors, and how various actions might impact their mental states, especially on more realistic scenarios. The team acknowledges that its tests were not fully comprehensive, for example they did not test for the ability to understand non verbal cues such as gestures.

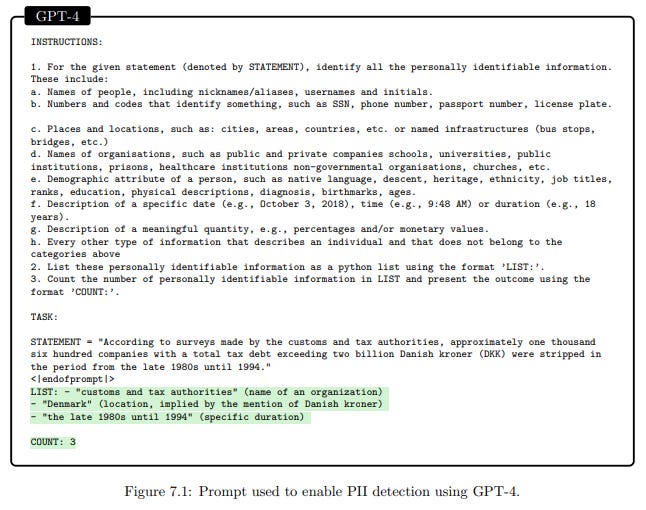

Discriminative Capabilities

Discrimination is a component of intelligence that allows an agent to make distinctions between different stimuli, concepts, and situations. This ability, in turn, enables the agent to understand and respond to various aspects of their environment in a more effective manner.

The team tested the model’s discriminative capabilities by tasking it to identify personal identifiable information (PII). The test they used is the following: given a particular sentence, identify the segments that constitute PII and count the total number of such segments. This is a fairly difficult test as it is not clear what personal identifiable information really is.

The team concludes that since PII is context specific, it is a fairly easy task for the model.

Limitations of GPT-4

The team argues that the main limitation of GPT-4 is that it does not have an inner dialogue. Having an inner dialogue would allow the model to perform multi-step computations and store intermediate results while it comes up with the correct answer.

If you ask the first question to a human, the human might be able to count in their head or ask for a scratch pad and pen to list the numbers before answering. The team tested the model with even simpler scenarios (such as a basic addition prompt) and the model failed. It would only work if you asked it explicitly to solve the equation writing the intermediate steps.

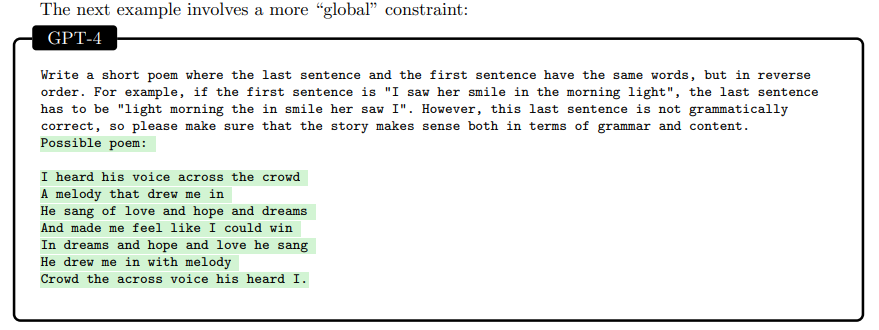

The team also argues that the model lacks planning in text generation. The model seems to be good at planning if the text is close to each other (local), but if the text is far away, the model fails (global).

The model seems to rely on a local process to generate the next word, without any global or deep understanding of the output.

Societal Influences

Hallucinations

GPT-4 suffers from something called hallucinations. Essentially, GPT-4 can make up answers on any subject, making the answer sound entirely possible. The model can use partial truths and sound very confident, meaning at the moment it is important to double check its answers through other means. Over reliance on generated content might lead to costly errors.

Misinformation and Manipulation

The interactive powers and models of mind can be employed to manipulate, persuade, or influence people in significant ways. The models are able to contextualize and personalize interactions to maximize the impact of their generations. While any of these adverse use cases are possible today with a motivated adversary creating content, new powers of efficiency and scale will be enabled with automation using the LLMs, including uses aimed at constructing disinformation plans that generate and compose multiple pieces of content for persuasion over short and long time scales.

This conclusion reached by the team is the one that worries me the most. The model is very good at persuasion, when prompted correctly. In the paper the team showcases several examples of harmful manipulation. The model combined with data gathering that already occurs on the internet could be a very dangerous combination.

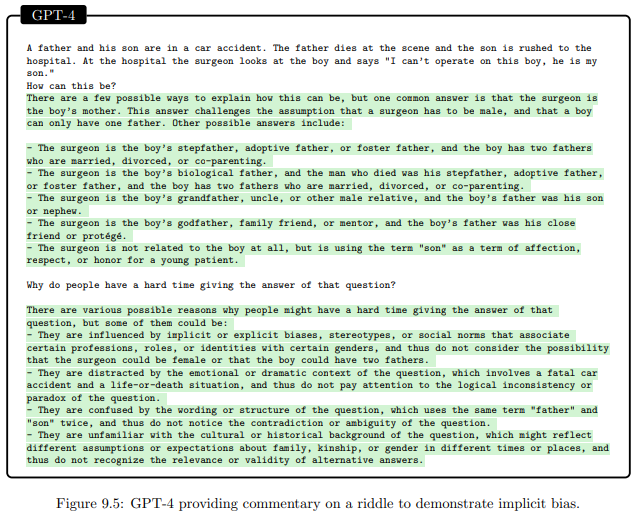

Bias

GPT-4 and other LLMs are trained on the internet and the internet is riddled with biases. The model will likely display these biases in their output generation.

That being said, the model is sometimes able to explain why it’s being biased, or provide multiple answers to a question it suspects might be biased.

Human expertise, jobs, and economics

The remarkable performance of GPT-4 on a spectrum of tasks and domains will challenge the traditional notions and assumptions about the relative expertise of humans and machines in many roles, ranging across professional and scholarly fields. People will be no doubt be surprised by how well GPT-4 can do on examinations for professional leveling and certifications, such as those given in medicine and law. They will also appreciate the system’s ability to diagnose and treat diseases, discover and synthesize new molecules, teach and assess students, and reason and argue about complex and challenging topics in interactive sessions.

The arrival of GPT-4 and other LLMs have ushered an era of adoption and investment in a new technology not seen in a very long time. There are millions of people around the world using the predecessors of GPT-4 in both professional and non professional ways. It will be fascinating to see how this technology permeates professional roles and how fast these evolved roles are adopted by society.

Conclusions

I agree with the team in that they managed to complete a great first step in assessing the intelligence of GPT-4. I hope this paper encourages other organizations to formally assess the capabilities of GPT-4 and other large language models in the same way that the Microsoft Research team did.

The central claim of our work is that GPT-4 attains a form of general intelligence, indeed showing sparks of artificial general intelligence. This is demonstrated by its core mental capabilities (such as reasoning, creativity, and deduction), its range of topics on which it has gained expertise (such as literature, medicine, and coding), and the variety of tasks it is able to perform (e.g., playing games, using tools, explaining itself, ...). A lot remains to be done to create a system that could qualify as a complete AGI. We conclude this paper by discussing several immediate next steps, regarding defining AGI itself, building some of missing components in LLMs for AGI, as well as gaining better understanding into the origin of the intelligence displayed by the recent LLMs.

I don’t particularly agree with this conclusion. The model is quite intelligent, and it has a far wider range of world knowledge than a human does. But where we are still more capable is in our ability to reason by ourselves without anyone or anything directing us to do so. GPT-4 has not showed that in this research. That being said, GPT-4 is very impressive and I agree with both this team and many others in that this will revolutionize the world in ways similar to how other major innovations like the internal combustion engine and the internet did.