Some of these counterarguments seem rather poorly thought through.

For example, we have an argument from authority (AI Safety researchers have a consensus that AI Safety is important), which seems to suffer rather heavily from selection bias. He later undermines this argument by rejecting authority entirely in his response to "Majority of AI Researchers not Worried," stating that this objection is "irrelevant, even if 100% of mathematicians believed 2 + 2 = 5, it would still be wrong." We also have a Pascal's Wager ("if even a tiniest probability is multiplied by the infinite value of the Universe") with all the problems that come with it, along with the fact that heat death guarantees that the value of the Universe is not, in fact, infinite.

The author seems to be of the mindset that there are no coherent objections to AI Risk; i.e., that there is nothing which should shift our priors in the direction of skepticism, even if other concerns may override these updates. An honest reasoner facing a complex problem with limited information will admit that some facts are indeed better explained by an alternative hypothesis, while stressing that the weight of the evidence still points towards the argued-for hypothesis.

> AI risk denial is denial, dismissal, or unwarranted doubt that contradicts the scientific consensus on AI risk

This earlier statement from the paper has the same basic problem when set against the rejection of authority later on: deniers are wrong because the scientific consensus is against them, yet when the consensus of researchers disagrees with the author, it is wrong because it is factually incorrect.

If the citations have anything like the bias the rhetoric has, the paper isn't going to be useful for that purpose, either.

Nice finds. This is still a preprint, so potentially worth sharing these with the author, especially if you think this would lead to journal rejection/edits.

Just finished watching a Martin Burckhardt webinar that, if I'm understanding him correctly, essentially says we are the machine, with the computer as our social unconscious. So this is timely as I learn more about the AI skepticism arguments; many thanks for pointing it out.

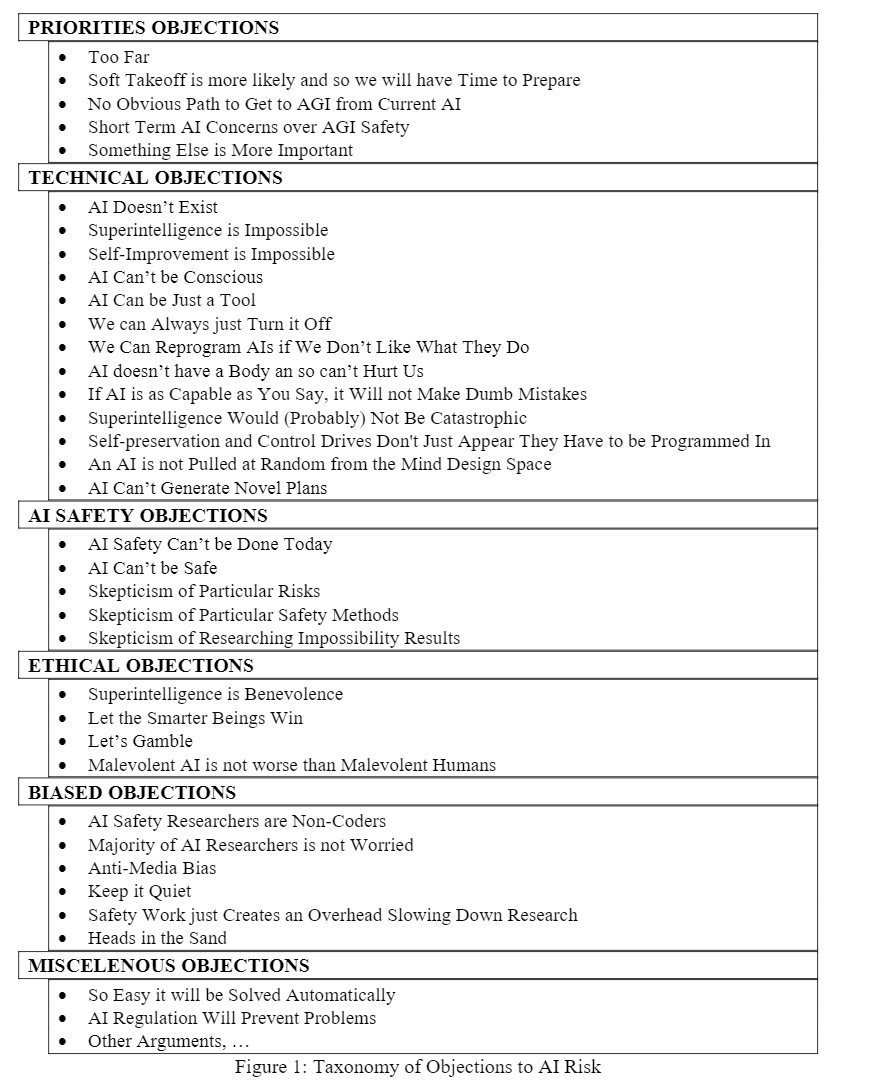

Roman Yampolskiy posted a preprint for "AI Risk Skepticism". Here's the abstract:

Nothing really new in there for anyone familiar with the field, but it seems like a potentially useful list of citations for people coming up to speed on AI safety, and perhaps especially AI policy, as well as a good summary paper you can reference as evidence that not everyone takes AI risks seriously.