I'm noticing my confusion about the level of support here. Kara Swisher says that the signatories are 505 of 700 employees, but the OpenAI publication I'm most familiar with is the autointerpretability paper, and none (!) of the core research contributors to that paper signed this letter. Why is a large fraction of the company anti-board/pro-Sam while 0/6 of this team signed (discounting Henk Tillman because he seems to work for Apple instead of OpenAI)? The only authors on that paper who signed the letter are Gabriel Goh and Ilya Sutskever. So is the alignment team unusually pro-board/anti-Sam, or are the 505 just not that large a faction in the company?

[Editing to add a link to the pdf of the letter, which is how I checked for who signed https://s3.documentcloud.org/documents/24172246/letter-to-the-openai-board-google-docs.pdf ]

There is an updated list of 702 who have signed the letter (as of the time I'm writing this) here: https://www.nytimes.com/interactive/2023/11/20/technology/letter-to-the-open-ai-board.html (direct link to pdf: https://static01.nyt.com/newsgraphics/documenttools/f31ff522a5b1ad7a/9cf7eda3-full.pdf)

Nick Cammarata left OpenAI ~8 weeks ago, so he couldn't have signed the letter.

Out of the remaining 6 core research contributors:

- 3/6 have signed it: Steven Bills, Dan Mossing, and Henk Tillman

- 3/6 have still not signed it: Leo Gao, Jeff Wu, and William Saunders

Out of the non-core research contributors:

- 2/3 signed it: Gabriel Goh and Ilya Sutskever

- 1/3 still have not signed it: Jan Leike

That being said, it looks like Jan Leike has tweeted that he thinks the board should resign: https://twitter.com/janleike/status/1726600432750125146

And that tweet was liked by Leo Gao: https://twitter.com/nabla_theta/likes

Still, it is interesting that this group is clearly underrepresented among people who have actually signed the letter.

Edit: Updated to note that Nick Cammarata is no longer at OpenAI, so he couldn't have signed the letter. For what it's worth, he has liked at least one tweet that called for the board to resign: https://twitter.com/nickcammarata/likes

> 4/7 have still not signed it: Nick Cammarata

Cammarata says he quit OA ~8 weeks ago, so he couldn't've signed it: https://twitter.com/nickcammarata/status/1725939131736633579

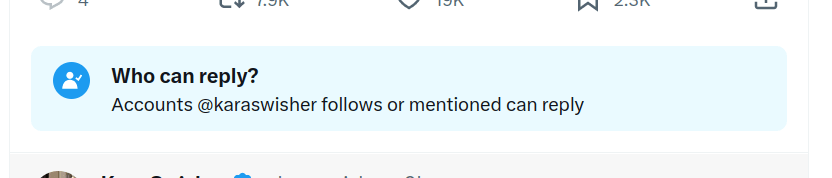

The only evidence I've seen so far that this is real is Kara Swisher's (who?) word and the fact that I haven't heard a refutation yet, but neither of those is very reassuring given that Kara's thread bears The Mark:

I am also confused. It would make me happy if we got some relevant information about this in the coming days.

I was annoyed by the lack of actual proof that the petition to follow Sam was signed by anyone from OpenAI. All it would really take is linking a single endorsement (or just an acknowledgement of its existence) from a signatory. So I asked around, and here is one such tweet: https://twitter.com/E0M/status/1726743918023496140

A research team's ability to design a robust corporate structure doesn't necessarily predict its ability to solve a hard technical problem. Maybe there's some overlap, but machine learning and philosophy are different fields from business. Also, I suspect that the people doing the AI alignment research at OpenAI are not the same people who designed the corporate structure (but I might be wrong about this).

Welcome to LessWrong! Sorry for the harsh greeting. Standards of discourse here are higher than in other places on the internet, so quips usually aren't well-tolerated (even if they have some element of truth).

More drama. Perhaps this will prevent spawning a new competent and funded AI org at MS?