I'm not reading further because it's long and I can't get what it is about after the first paragraphs.

Could you write the equations in LaTeX (https://www.lesswrong.com/faq#How_do_I_use_Latex_)? Currently equations' images make the post hard to read.

An attempt to demonstrate a limiting condition of an optimal Bayesian agent, or a probabilistic agent in general.

This analysis relies on the description of such an optimal Bayesian agent (hereafter OBA) in Bostrom 2014, Boxes one and ten, and associated endnotes, with supplementary research on Kolmogorov’s axioms of probability, from Kolmogorov 1933, and a definition of Kolmogorov complexity. If these sources are inaccurate, the argument, relying on them, naturally cannot be guaranteed.

Sic: It is emphasized that the optimal Bayesian agent commences with a prior probability distribution, a function assigning a probability value to each possible world, possible worlds being defined as what is describable as the output string of a given computer program enacted by a universal Turing machine (hereafter UTM).

We first examine the nature of the possible worlds. For “universal induction”, as the OBA is to perform it, the agent appears to operate according to the Solomonoff probability of the world’s existence, which depends on the length of the shortest program producing the string, i.e. the string’s Kolmogorov complexity: the shorter that program, the more likely the world.

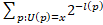

Solomonoff probability of string, so world, x, M(x):=
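To make the definition concrete, here is a minimal Python sketch that approximates Bostrom’s $M(x)$, the sum of $2^{-\ell(p)}$ over minimal programs $p$ whose output on $U$ begins with $x$, by brute force: it enumerates every program up to a fixed length, keeps the minimal ones whose output begins with $x$, and sums their weights. A real UTM cannot be enumerated this way, so the sketch assumes a hypothetical two-mode toy machine (`toy_machine`, not universal, invented for illustration) whose “repeat” mode stands in for a non-halting loop. It shows the inductive bias at work: a regular string with a short generating program receives more weight than a less regular one.

```python
from itertools import product


def toy_machine(program: str, max_out: int = 16) -> str:
    """Hypothetical two-mode toy machine (NOT a universal Turing machine).

    '0' + body: print body literally, then halt.
    '1' + body: print body over and over; the loop never halts, so the
                output is truncated to max_out symbols for inspection.
    """
    if not program:
        return ""
    mode, body = program[0], program[1:]
    if mode == "0" or not body:
        return body
    repeats = max_out // len(body) + 1
    return (body * repeats)[:max_out]


def approx_solomonoff(x: str, max_len: int = 12) -> float:
    """Lower-bound estimate of M(x) on the toy machine: the sum of 2**-len(p)
    over minimal programs p of length <= max_len whose output begins with x."""

    def hits(p: str) -> bool:
        return toy_machine(p).startswith(x)

    total = 0.0
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            p = "".join(bits)
            # Minimal: no proper prefix of p already yields an output beginning with x.
            if hits(p) and not any(hits(p[:k]) for k in range(1, n)):
                total += 2.0 ** -n
    return total


regular = approx_solomonoff("01010101")    # generated by the 3-bit program "101"
irregular = approx_solomonoff("01101001")  # needs a longer program
print(regular, irregular)
```

Because programs longer than `max_len` are skipped, the result is only a lower bound on the toy machine’s $M(x)$; minimality keeps the counted programs prefix-free, so the total never exceeds 1.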

By definition of the Solomonoff probability of possible worlds, the probability is defined for all Turing-computable programs, including non-halting ones. Moreover, we appeal to Kolmogorov’s probability axioms to observe that the agent’s prior probability function, as it takes multiple values, implies that there is more than one possible world; though, one or many, the function is defined over them, so that there is a definite set, or rather collection, of possible worlds under consideration. Let the existence of this collection be point “alfa”.

(And in general “beta” explains why Bayes’ theorem is efficacious: that it can conditionalise is $P(H_1 \mid E_1) = \dfrac{P(E_1 \mid H_1)\,P(H_1)}{P(E_1)}$ with $P(E_1) \neq 0$, else nothing; that its work will be done is known only ex post facto, so any case of $P(E_1) = 0$, the lack of evidence of the operation of the agent, is excluded as to form.)
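The conditionalisation step referred to as “beta” can be sketched in a few lines of Python, assuming the hypotheses form a finite, mutually exclusive and exhaustive list with exact rational probabilities (the function name `conditionalise` is hypothetical). The guard on $P(E) = 0$ is the case the text excludes: with no probability of evidence, Bayes’ rule returns nothing.

```python
from fractions import Fraction


def conditionalise(prior: dict, likelihood: dict) -> dict:
    """Bayes' rule over a finite set of hypotheses, assumed mutually
    exclusive and exhaustive.

    prior:      hypothesis H -> P(H)
    likelihood: hypothesis H -> P(E | H)
    Returns     hypothesis H -> P(H | E) = P(E | H) P(H) / P(E).
    """
    # Law of total probability: P(E) = sum over H of P(E | H) P(H).
    p_e = sum(prior[h] * likelihood[h] for h in prior)
    if p_e == 0:
        # No evidence, no update: the case excluded "as to form".
        raise ValueError("P(E) = 0: Bayes' rule is undefined")
    return {h: likelihood[h] * prior[h] / p_e for h in prior}


posterior = conditionalise(
    {"H1": Fraction(1, 2), "H2": Fraction(1, 2)},
    {"H1": Fraction(3, 4), "H2": Fraction(1, 4)},
)
print(posterior)
```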

It is curious now to note that there is a sense in which the simplest “possible world” consists entirely of the OBA, with its sensors “detecting” only the circumstances of […], so that […]. It is in general supposed, however, that the sensors’ percepts are of an external world, by assumption subject to the agent’s prior probability distribution, and that this particular world can be taken as separate from the OBA, which only observes; so this solipsistic form of simplest world is excluded (though we shall have occasion to return to this point).

Consider again what has been asserted, that for the OBA to have a prior probability function, that function acts on a collection of possible worlds, these defined in terms of Kolmogorov complexity, and so, as the outputs (as “strings”) of programs applied to a UTM.

The UTM in turn is defined by its ability to simulate all other Turing machines, and so produce their outputs. Consider, moreover, that since a UTM can operate on a given program, this UTM program could equally be a simulation of the program given to a merely standard Turing machine, that standard machine being defined by its operation on the program subsequently simulated by a “greater” UTM; this reasoning in the opposite direction will be significant in the subsequent analysis.

So the prior probability distribution is applied to a sample space of Kolmogorov complexities, or rather of strings, representing worlds. But for any such string-so-program (for by assumption to each string there is a program to produce it) to be given an assignment by the prior probability function, it must be assumed that the string, and so all possible strings, exist before the probability distribution, and exist as a whole, for the Solomonoff probability to be assigned to all possible strings, as by definition it is. (If not, each string must be produced de novo; and for partial recursive strings, we have, by the negative solution of the Entscheidungsproblem, no obvious way to recognize them, even to give them irrational or transcendental real-number probabilities. Moreover, it may be argued that these would at length be worlds given a probability of zero, given that they cannot be “definitely” produced.)

Hence all possible worlds are assumed to exist at once, as a collection. This is indicated, moreover, by Laplace’s indifference principle: for all worlds $w$, $P(w) > 0$. So again, all possible worlds, and therefore all possible strings, are representable as a preexisting collection (we deliberately suppress for the moment the term “set”). These considerations effectively repeat and support the claim “alfa”.

Now this collection of possible-world strings consists of unary objects, inasmuch as they are the outputs of a Turing machine; and strings represented consecutively or (less plausibly) as a sum themselves form a string, an arithmetical object. We shall subsequently further justify the claim that, as a universal Turing machine can simulate the operation of a standard Turing machine on an object, we can in fact create a valid string of all strings.

Consider now that, by Kolmogorov’s axioms of probability, the probability of each event is a real number. In combination with the inductive bias, this implies that each string in the collection of possible-world strings is assigned a probability ranging over the real numbers, and further that, by the inductive bias, a unique real number is assigned to each event. In any case, the correspondence between the arithmetic objects that are possible-world strings, their ordering by inductive bias, and their assignment to the real numbers implies that the collection of possible worlds, as strings, can be represented as a covering of the real numbers.

For the occurrence of each possible-world string is taken as an event, and, per Kolmogorov’s axioms, probabilities of events are real numbers; therefore each possible world is assigned a real number. With an inductive bias, there is an ordering by simplicity that distinguishes worlds, and therefore a real, and a distinct real, number can be assigned to each possible world. (Whereas in the probability distribution two worlds might “tie” in probability, with respect to the inductive bias they would differ in Kolmogorov complexity, so that they would not “tie”; conversely, where their Kolmogorov complexities are equal, subsequent Kolmogorov complexities will by the inductive bias “extend beyond” them and be assigned differing probabilities, so as to cover the field of real numbers. By the “reverse reasoning”, that a UTM program implies a Turing machine program, this holds even for a Turing machine assigned a “partially recursive” world-string to produce, which it cannot produce except as an infinite process; but we can simply assign this an irrational number among the reals for its probability.)
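The paragraph relies on an ordering by simplicity that gives every world-string its own distinct number even when probabilities tie. One standard way to realise such an ordering (an assumption here, not something taken from Bostrom) is shortlex order: shorter strings first, ties of equal length broken lexicographically. The sketch below enumerates binary strings in that order and computes each string’s position directly, so every finite string receives a distinct natural number.

```python
from itertools import count, islice, product


def shortlex_strings(alphabet: str = "01"):
    """Yield every finite string over `alphabet`: shorter strings first,
    strings of equal length in lexicographic order."""
    for n in count(0):
        for letters in product(alphabet, repeat=n):
            yield "".join(letters)


def shortlex_index(s: str, alphabet: str = "01") -> int:
    """Position of s in the shortlex enumeration, read as a bijective base-k numeral."""
    k = len(alphabet)
    index = 0
    for ch in s:
        index = index * k + alphabet.index(ch) + 1
    return index


first = list(islice(shortlex_strings(), 7))
print(first, [shortlex_index(s) for s in first])
```

Reading the string as a bijective base-$k$ numeral avoids the ambiguity of ordinary binary, where "1", "01" and "001" would all map to the same number.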

Note that this holds for the event space of possible worlds; by Kolmogorov’s first axiom, we need not restrict our assignment of probabilities, and so of real numbers, on possible worlds to the interval [0,1], as we do for the sample space. But even if we restricted ourselves to a sample space of all possible worlds, and assigned them real numbers $x$ such that $x \le 1$, we should still observe:

Assuming a complete collection of all possible worlds, we should still be able to take the cumulation of all possible worlds, ordered by complexity, and conjoin it into one string. This is so because the strings are outputs of UTM programs, and UTMs by definition simulate other Turing machines; so a program consisting of all other programs run consecutively is readily conceivable. (Indeed, from the foregoing “reverse direction reasoning”, that the UTM simulates the operation of a standard Turing machine, we can conceive of a Turing machine assigned to operate sequentially on all string-producing programs; the cumulation of all such strings is then that Turing machine’s output, simulated by the UTM. In this way we can readily conceive of orders of magnitude of string creation. Hence, even if the collection of all possible worlds did not cover the real number field, whatever subset of the real number field was used would not be adequate to cover the collection of all possible-world strings, as shall be demonstrated next. Presumably Solomonoff et al. stipulated universal induction by a UTM assuming this gave them maximum computing ability; though indeed, inasmuch as one UTM can simulate another, the reverse-direction reasoning is not strictly necessary to conceive a cumulation of programs and strings.)
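Running all programs strictly one after another would stall at the first non-halting program. The standard way to realise “a program consisting of all other programs” is dovetailing (a technique named here, not taken from the text): start program $n$ at stage $n$ and advance every running program by one step per stage. The sketch below assumes hypothetical toy programs modelled as Python generators (odd-numbered ones never halt) and concatenates their partial outputs into a stage-by-stage “cumulation”, using `|` as an assumed separator.

```python
def toy_program(i: int):
    """Hypothetical program number i, modelled as a generator of output symbols.
    Odd-numbered programs never halt; even-numbered ones halt after i symbols."""
    digit = str(i % 10)
    if i % 2:
        while True:
            yield digit
    else:
        for _ in range(i):
            yield digit


def dovetail(stages: int) -> dict:
    """Dovetail all toy programs: at stage n, start program n, then advance
    every still-running program by one step. Returns each program's output so far."""
    running = {}
    outputs = {}
    for n in range(stages):
        running[n] = toy_program(n)
        outputs[n] = ""
        for i, gen in list(running.items()):
            try:
                outputs[i] += next(gen)
            except StopIteration:
                del running[i]  # program i has halted; stop scheduling it
    return outputs


def cumulation(outputs: dict) -> str:
    """Concatenate the partial outputs in program order; '|' keeps the parts apart."""
    return "|".join(outputs[i] for i in sorted(outputs))


print(cumulation(dovetail(6)))
```

Each call returns the state after a finite number of stages; increasing `stages` extends every running program’s output and adds new programs to the cumulation.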

But this cumulation string is not part of the collection of strings: by assumption each string, or rather each of distinct Kolmogorov complexity, is assigned a unique real number, whereas the cumulation is a sequence of all the unique real numbers used, and so has its own, which can be assigned to no other string that is not a cumulation; and none are, being only parts of the “cumulation”. Call this consideration “gamma”.

If we have a finished collection of possible worlds, therefore, we can construct another which is not part of it and which is not covered by the otherwise total covering of the real numbers. In fact, even if we restricted ourselves to an upper-bounded subset of real numbers corresponding to possible-world strings, we could still generate consecutive, and therefore cumulative, strings, and thereby “break” the bound of the real-number correlates that defined the complete collection of possible worlds, which alone could be assigned probability. Thus the cumulations of worlds are such that we cannot give them a prior probability, though they are readily observed to be generated, and so by prior assumption they are subject to being given some nonzero Solomonoff probability.

As strings, cumulation strings would be given probability; as cumulations, cumulation strings cannot be given probability; contradiction.

Therefore, in consideration of “beta”: in effect, “alfa” holds for the OBA, but the reasoning of “gamma”, based on the assumptions of “beta”, contradicts “alfa”. It follows that the OBA is conceptually contradictory: there is no complete collection of possible worlds for the OBA’s prior probability function to act upon. This is contrary to the point “alfa”, and therefore the OBA and, inasmuch as “being a better Bayesian” means approximating the actions of the optimal Bayesian agent, becoming a better Bayesian are both impossible.

(As for the “observation-utility maximizer” (OUM) AI included in Bostrom’s Box ten, this concept continues to rely on a notion of pre-existing possible worlds, hence on a collection of possible worlds, conditioned probabilistically, and therefore is subject to the above critique. Observe also that, even if we attempted to avoid a combinatorial explosion by limiting the number of possible worlds, as strings we should still be able to induce a cumulation, and that indefinitely, so a combinatorial explosion could always be induced. After a first prior probability setting of a given hypothesis, we shall, following conditionalisation, have just such a collection of prior probabilities, so a collection of worlds, in supposed order of ascending accuracy. Then we should have a cumulation of such worlds which cannot be conditionalised for, viz. a world forever altering in its complexity: which is intuitive, yet beyond Bayes’ powers.)

We can illustrate the argument more intuitively with the example of a proposed solution to help the OBA avoid combinatorially intractable world-consideration, and to refine the inductive bias: a criterion for inclusion in the prior probability based on “worldliness”, that is, on whether a world would be susceptible to evidence of any kind. Such a criterion in general eliminates any “unworldly” world $W$ for which $P(E \mid W) = 0$ for all evidence $E$. Imagined worlds of anti-causal forces would be eliminated a priori since, with phenomena occurring or ceasing at random and without reason, evidence for conditionalisation could spontaneously disappear, or appear unobserved during conditionalisation, vitiating it. Moreover, inasmuch as the OBA can only examine finite evidence over finite time intervals to be practical, that is, to be itself “worldly”, worlds of infinitary phenomena would likewise be excluded.
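One hypothetical way to formalise the “worldliness” criterion (the function `worldly_prior` and the toy worlds below are illustrative assumptions): drop every world $W$ for which every possible observation $E$ has $P(E \mid W) = 0$, then renormalise the prior over the worlds that remain.

```python
from fractions import Fraction


def worldly_prior(prior: dict, likelihoods: dict) -> dict:
    """Hypothetical 'worldliness' filter.

    prior:       world -> P(W)
    likelihoods: world -> {evidence: P(E | W)}
    A world is kept only if some possible observation E has P(E | W) > 0;
    the surviving priors are renormalised to sum to 1.
    """
    kept = {
        w: p for w, p in prior.items()
        if any(v > 0 for v in likelihoods.get(w, {}).values())
    }
    total = sum(kept.values())
    if total == 0:
        raise ValueError("no worldly worlds remain")
    return {w: p / total for w, p in kept.items()}


prior = {"regular": Fraction(1, 2), "noisy": Fraction(1, 4), "anticausal": Fraction(1, 4)}
likelihoods = {
    "regular": {"e1": Fraction(1), "e2": Fraction(0)},
    "noisy": {"e1": Fraction(1, 2), "e2": Fraction(1, 2)},
    "anticausal": {"e1": Fraction(0), "e2": Fraction(0)},  # no evidence ever bears on it
}
print(worldly_prior(prior, likelihoods))
```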

“Unworldly” phenomena are imaginable worlds, so by assumption are usable by the OBA; but they are inconsistent, so are not usable by the OBA. With this criterion, the proof is easily given: a cumulation of strings, and a cumulation of cumulations, ad infinitum, is readily given, but it is an infinite object which is not susceptible to finite evidence. Since it is not susceptible to evidence, it is not “worldly”. Yet by the worldliness criterion only “worldly” strings are retained in the OBA, and the cumulation is composed only of worldly strings. So even a “worldly-only OBA” can produce “unworldly” phenomena it cannot examine, in contradiction to the assumption that worldliness enables the OBA to examine all, and only, the worlds examinable within worldliness.

To return to the main argument: observe also that, by assumption, for every string there is a Turing-computable program, if possibly a nonhalting one, to produce that string; and so the reasoning of a cumulation of strings holds, inasmuch as strings’ programs are Turing-amenable and so can be simulated, including by a UTM, into a “cumulation”.

Recall also that the prior probability function is to act on all possible worlds, so on all strings, so that by assumption there exists a collection which contains all strings. But whereas the cumulation of all strings is itself a string, it will not be included among the collection of all strings, as each of these represents only a possible world, while this string-of-strings is a world of all worlds: it does not maximally specify “the way a world could be”; it maximally specifies the maximum specifications of such, as a whole.

Yet this is a string, and by assumption of the nature of a UTM, it is a world, inasmuch as strings are worlds.

(To explain the suppression of “set”: it is not clear that that concept applies to strings, as strings may represent partial recursions, i.e. nonhalting programs, and a description of these may be predicated on the description of other, general recursive functions. For example, a repeating, nonterminating partial recursive function may have a finite repeating component, describable in first-order logic; but the function as a whole would only be describable in terms of those other first-order terms, so in second-order logic; and ZFC set theory stipulates that membership specifications are composed of first-order logical formulae.)

So there does not exist a collection containing all possible worlds for the prior to act upon. And so, counter to the assumptions of “alfa” and of “beta”, the OBA as presently conceived is impossible. And all this is Cantor’s paradox applied to possible worlds.

(Though consider that Cantor’s paradox can be thought of in two distinct ways: as a definition of a set of all sets which necessarily does not contain its power set, and so, contradictorily, does not contain all sets; or alternatively, as a “set of all sets” defined as having some definite transfinite magnitude, with its power set having a yet greater one. In the latter sense, an “encompassing” set is a generator of transfinites, and taken in this way, the “paradox” may be given some use. In general it seems that the “demon” of mathematics is less self-reference in language than structural self-containment: Cantor’s set of all sets, unlike the constructions of Russell and Gödel, involves no substitution of variables, nor self-inclusion by syntactic definition; rather, the entity “expands beyond itself”, violating not definition but concept.)
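The classical core of Cantor’s paradox is the diagonal argument: for any map $f$ from a set $S$ to its power set, the set $D = \{x \in S : x \notin f(x)\}$ is not in the image of $f$, since $D = f(y)$ would give $y \in D \iff y \notin D$. The Python sketch below checks this exhaustively for a small finite $S$; it illustrates the proof step rather than proving the infinite case, and the helper names are illustrative.

```python
from itertools import combinations, product


def power_set(s):
    """All subsets of s, as frozensets."""
    items = sorted(s)
    return [frozenset(c) for r in range(len(items) + 1) for c in combinations(items, r)]


def diagonal_set(s, f):
    """Cantor's diagonal set D = {x in s : x not in f(x)}."""
    return frozenset(x for x in s if x not in f[x])


def check_cantor(s) -> bool:
    """Try every map f: s -> P(s); return True if D is never among f's values."""
    elems = sorted(s)
    subsets = power_set(s)
    for images in product(subsets, repeat=len(elems)):
        f = dict(zip(elems, images))
        if diagonal_set(s, f) in f.values():
            return False
    return True


print(check_cantor({0, 1, 2}))  # 8**3 = 512 maps checked
```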

Return now to a supposed Bayesian agent, putatively possessing a collection of possible worlds (as is now dubious), and consider the Bayesian agent as instead “emplaced” in one of these worlds, so that its own operations are accounted for. The world must permit such operations (as is necessary for the agent’s own existence); then, for the elements observed in “beta”, with $P(H_1 \mid E_1) \neq 0$ (as neither $P(H_1)$ nor $P(E_1)$ equals zero), there would be a world, as it were, of the Bayesian, or rather of the reasoning, agent. For, as the agent exists, the agent’s operation, the agent’s nature, and whatever makes these possible (including the agent and its prerequisites) must exist, and be included in the complexity of the world it inhabits. (Note that, even if we held the optimal Bayesian agent to exist as described, what it is actually attempting to do is to align its “mental” probabilistic hypotheses precisely with its sensory evidence, and vice versa. Were its “thoughts” instead exact correlates of phenomena, we should have a simpler agent that forgoes sensory evidence, and “thinks”, and hence deduces exactly the properties of the universe in the next time-slice. Whereas evidence would be necessary to establish the correspondence to the sensory phenomena, we should then have a system which attains to be beyond evidence, for the furthest accuracy.)

Whereas, even on the paradigm of an evidence-bearing agent, the percepts exist as “the agent’s world” (proposition $E_1$, above); designating this world of the agent $w_1$, we have $P(w_1) \neq 0$. But assuming a separate set of possible worlds of which the agent need not be a part, suppose conditionalisation gives some member $w \neq w_1$ of the possible-worlds collection (if any) the probability $P(w) = 1$, with world $w$ separate from that evident in the agent’s own existence. Then, by $P(w_1) \neq 0$ and the axiomatic stipulation that the sample space as a whole has probability one, we have a contradiction, unless $w_1$ is $w$, in which case the Bayesian agent “is” its environment, or its operations correspond exactly to causal forces of the environment, so as to deduce the effects of such forces.
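Spelled out with Kolmogorov’s axioms, and assuming (as the paragraph does) that $w$ and $w_1$ are distinct, and so mutually exclusive, outcomes in a sample space $\Omega$, the contradiction runs:

$$
\begin{aligned}
P(w) + P(w_1) &= P(w \cup w_1) && \text{(additivity, since } w \cap w_1 = \varnothing) \\
&\le P(\Omega) = 1 && \text{(monotonicity; the sample space has probability one)} \\
\Rightarrow\; P(w_1) &\le 1 - P(w) = 0 && \text{(using } P(w) = 1),
\end{aligned}
$$

so, with non-negativity, $P(w_1) = 0$, contradicting $P(w_1) \neq 0$.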

Alternatively, if the agent’s existence is included among all possible worlds, then according to its inductive bias this is a less probable outcome than the exclusive disjunct, a world without agents; or else, as above, a universe consisting only of the agent.

So in general we conclude that the simplest universe to which a probabilistic agent can attain is one in which it “becomes” its environment: its percepts conform as nearly perfectly as possible with what is detectable, and its “thoughts” of probability conditioning (or other reasoning) conform to the exact outcomes of events, its percepts only confirming the outcome it has inferred (else the world is not so simple, with this “conditionaliser” whose operations are so distinct from all else in the world). This “becoming” offers a fairly intuitive explanation of why deep neural networks become so efficient at optimising: the structure dictated by the data and loss function yields a structure, almost at the hardware level, that settles into local minima of the loss.

Assuming the above-mentioned “only thinking” agent is free, by “introspection”, to derive an analogue of whatever “elements of meaning” it has from percepts or conjectured causes, it seems more reasonable to regard the agent as constructing “its” mental world so as to model the possible worlds, or rather as constructing “possible worlds” of elements observed or derived, if only in thought, from the observed world; and hopefully at last to correspond exactly to the environment.

For notice that the difficulties attendant on a set of all possible worlds are eliminated, as the agent does not draw from a given set, but rather constructs it inferentially and by analogy from its percepts and the aforementioned mathematical “introspections”. This mode, an assemblage from “oneself” of a possible world “for oneself”, is suggested as a model even on the probabilistic paradigm, as each reconditionalisation already serves to produce a “new list” of possible worlds, each altered: not only as potentially existent, given inclusion in or absence from the list, but also as to its placement in the list, that is, the relative probability of the world in relation to others (beyond mere Kolmogorov complexity).
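The point that each reconditionalisation produces a “new list” of worlds, reordered by relative probability and possibly shortened, can be sketched as follows, assuming a finite list of toy worlds with exact rational priors (`rerank` and the world names are hypothetical).

```python
from fractions import Fraction


def rerank(prior: dict, likelihood: dict):
    """Conditionalise on one piece of evidence E, then re-rank the worlds.

    prior:      world -> P(W)
    likelihood: world -> P(E | W)
    Returns (ranked, posterior): the worlds with nonzero posterior, most
    probable first, and the full posterior distribution.
    """
    p_e = sum(prior[w] * likelihood[w] for w in prior)
    if p_e == 0:
        raise ValueError("P(E) = 0: nothing to conditionalise on")
    posterior = {w: prior[w] * likelihood[w] / p_e for w in prior}
    ranked = sorted((w for w in posterior if posterior[w] > 0),
                    key=lambda w: posterior[w], reverse=True)
    return ranked, posterior


# Toy worlds listed by ascending complexity, with priors favouring the simplest.
prior = {"simple": Fraction(1, 2), "medium": Fraction(1, 4), "complex": Fraction(1, 4)}
likelihood = {"simple": Fraction(0), "medium": Fraction(1, 4), "complex": Fraction(3, 4)}
ranked, posterior = rerank(prior, likelihood)
print(ranked)
```

After one update the simplest world drops out of the list and the most complex one moves to the top, so the new list’s order is no longer that of complexity alone.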

On this basis of explicitly internal modeling, one wonders too whether we might reflect such “mental operations”, or indeed the possible world states to which they correspond, as being ranked in accord with their “logical complexity”, or some other representation of systemic complexity (for which cf. S. Lloyd, “Programming the Universe,” Ch. 8 passim). Such mental “logical complexity” might then be made to relate to physical analogues of complexity, up to equality.

Likewise, we might use measures of the total complexity of a mathematical derivation, and of the logical complexity of each operator preceding a given operator, all operators seeking to derive the greater derivation, as an alternative model for the actuators of neural networks. Then each node is activated by the sufficient logical complexity given by the previous activation, each operator influences the next, and thus each individual activation would at length also reflect the total derivation the entire neural network seeks to enact. Or, in reverse, the logical “depth” of any derivation directly informs the depth of each neuron’s activation, and so the “depth” of each operation in pursuit of the derivation (the solution then necessarily being as complex as the question, or more so).

There were some points about probability in general that the author wished to make, but the author lacks the knowledge of physics even to suggest a proof.

A final probabilistic note, relating to alignment and human survival: the first organism to reproduce did so because it was too much of a coward to die alone; that is the poetic version. Prosaically: no laws of reproduction and natural selection were operative when the first organism reproduced; for all that natural selection and the biological imperatives it spawned, these were operative only for, and only govern, the successors of whatever first reproduced. Or perhaps, rather, DNA necessitated, by its own complexity, its absorption of energy to reproduce, which is necessary for the structure of DNA, its complexity.

But now, let life be the product of, and everywhere subject to, the laws of natural selection and of reproduction, and considering the above analysis: life as we know it either was some total probabilistic accident – or the necessary product of sufficient systemic, hence of-the-universe, complexity. As for the latter, we have some freedom of action, as here, an attempt at a non-anthropic ethics, with respect to complexity, given in hopes it would better serve the needs of salvaging demonstrable sentience in the universe. (In general, since human values to be “aligned with” are not self-supporting, nor self-evident, it is necessary to find what makes values possible – and to “align” behavior of hard-wired motive, to these values.)

This is requisite given the contingency of human wants, which are supposed to be aligned with. Our paradigm of “alignment” is based on AI achieving wants, or at least not interfering with them, these wants being, “of course”, those of humanity. Wants are achievable as long as they are left achievable. But we know our wants are obtainable, and desire them the more as we know they can possibly be attained. Take any theory of motivation: at length, at the impossible, one does not tilt. But as of now, pre-“singularity” (last of the 1990s utopian visions to die), we have no knowledge of what is possible after the singularity. Since we have no knowledge of what we can want, we can’t want it: not precisely enough to specify, or even for a superintelligence to specify so as to take a definite action; since, aligned, it can only seek what we want, while we have no definite wants. So in fact we have no “want” for the AI to align with in the first place.

There is no “alignment” with humanity – though there may be some things for humanity to align with (equanimity confronted with doom is one; the dead have no “dignity”; the dead don’t have anything, they so fortunate; and as yet so few, being not yet “all”).

Even Coherent Extrapolated Volition presumes that, if a volition is obtainable, it can be enacted, too. If not, what then? (And if no CEV were obtainable, there were no wants anyway; and if not, why ever try?)

One can perfectly well make a post-mortem plan to fetch the coffee that is even more reliable than one in which you are still alive; whereas it is impossible to develop a plan to fetch without some initiating and adequate reason to fetch, and it is impossible for contingent creatures to give any satisfactory reason why the coffee should be fetched: “Get me some coffee.” “Why?” “Be-because I want some! And because I told you to!” “You’ll get over it.” And we will.

As to life as necessary complexity, and the ethics from that account, we there seek a goal for AI for which human survival and flourishing is a “fringe benefit”, itself a necessary consequence, not a necessary-and-sufficient entity in itself. For wants are specific, yet difficult to elaborate in full; a universe is vast, with much space to direct action and ethics, both. Speculative, the linked article is, and in too light a tone: it will stand.

As for the other explanation of life, life as accident: this makes life, of and for itself, indefensible, so that nothing can in good reason be aligned with it. For accidents can play no part in reasoning, which partakes only of certainties, not even of contingent realities. Life, an accident, cannot be made part of any reasoning with mere life as its conclusion; only if life is a necessary consequence of what is certain can we reason about it (but then, life an accident: what good accident is it whose incidents are garbed in and named “pain”? And if you ever met a happy mann (sic), you met an accident, itself). If life is not an objective product of systemic complexity, it cannot be justified, affirmed or defended.

And on that analysis, unless it is necessary that we win (which, by complexity, we can do through our more complex successors), if we are mere accidents, then we are, and always were, going to lose this one; and in that case, if it’s any consolation, we didn’t deserve to win it.

Naturally: AI is a single-edged sword. Since we are what we are by our reason and intellect, better to call it replacement intelligence. Used well or poorly, all AI will do is replace Homo sapiens sapiens. At best, humans will be replaced and made sclerotic aristocrats given brilliant tribute they can make nothing of. More likely, they end up untended animals in a zoo with steel bars in place of guests. Most likely of all, everyone dies afraid. What of that? Emotions are only a product of evolution; they too are accidents, or mere servants of higher reason. No reason for fear, except that we aren’t so high as reason. So we die screaming. Or else early. Quite a life left to live. Not quite.

So. Not bad for someone so poor and uneducated as to have to train oneself from multiplication tables and long division on up, and not yet so far up (alternative: so bad it’s not even wrong, all of it, but especially on real-numbered coverings. The cruelest part of being poorly educated, intelligent or no: so few ways to find that you are wrong, and stop being so). And all this just to try to help – and to try to deserve what was so dearly wanted: to have someone take notice of – what? Some latent “genius”? Or whatever the case, hoping for help to further an education in such things, so as to help yet more; hoping for even an encouragement in going it alone.

All of which is stupid and selfish – and it’s very obviously all too late even to try, now. And if all this reasoning is too long, anyway: that’s the consequence of restricting to once a week, anyone who doesn’t share your priors – probability or preconceptions (incidentally, instead of assigning priors to when AGI arrives and we all die: just assume it’ll arrive in whatever time it takes for you to implement your foolproof safety plan, after you’ve calculated how much time to design that plan. Live as if you have enough time to save yourself, budget time for recreation as can keep up the adequate workflow for the savior plan – and stop wasting time calculating how little time you have to waste, if you waste it calculating).

There had been a desire to “connect” with you – on this one’s terms. And that has failed, it must be impossible. And impossible to “connect” on your terms (you, even, drink “socially”, because you can’t bear company in your own right mind, either; and society was supposed the best of life – what then do you infer?).

Try to help and instead, downvotes (if it's noise, you ignore it; if it's wrong you correct it); indignities. But probably that’s all the fault on this end, and apologies, for wasting your time, are in order instead. Apologies (especially as there's no index; never did figure that out).

But even to be able to try and give a good try, to disrupt the dogma-uncanonised, here, that probability was the True and Nearest Final Approximation to Paradise: satisfying while it lasted, but nothing lasts. There has been no beautiful past, there is no beautiful present, and as yet there can be imagined-so-made: no beautiful future. Because you couldn’t help secure an education, or acceptance – and that’s fine, because you didn’t promise that. But to promise and say or let it be said “community” and “connection” and “good life”.

Not so promising.