Understanding deep learning isn’t a leaderboard sport - handle with care.

Saliency maps, neuron dissection, sparse autoencoders - each surged on hype, then stalled[1] when follow‑up work showed the insight was mostly noise, easily spoofed, or valid only in cherry‑picked settings. That risks being negative progress: we spend cycles debunking ghosts instead of building cumulative understanding.

The root mismatch is methodological. Mainstream ML capabilities research enjoys a scientific luxury almost no other field gets: public, quantitative benchmarks that tie effort to ground truth. ImageNet accuracy, MMLU, SWE‑bench - one number silently kills bad ideas. With that safety net, you can iterate fast on weak statistics and still converge on something useful. Mechanistic interpretability has no scoreboard for “the network’s internals now make sense.” Implicitly inheriting benchmark‑reliant habits from mainstream ML therefore swaps a ruthless filter for a fog of self‑deception.

How easy is it to fool ourselves? Recall the “Could a neuroscientist understand a microprocessor?” study: standard neuroscience toolkits - ablation tests, tuning curves, dimensionality reduction - were applied to a 6502 chip whose ground truth is fully known. The analyses produced plausible‑looking stories that entirely missed how the processor works. Interpretability faces the same trap: shapely clusters or sharp heat‑maps can look profound until a stronger test dissolves them.

What methodological standard should replace the leaderboard? Reasonable researchers will disagree[2]. Borrowing from mature natural sciences like physics or neuroscience seems like a sensible default, but a proper discussion is beyond this note. The narrow claim is simpler:

Because no external benchmark will catch your mistakes, you must design your own guardrails. Methodology for understanding deep learning is an open problem, not a hand‑me‑down from capabilities work.

So, before shipping the next clever probe, pause and ask: Where could I be fooling myself, and what concrete test would reveal it? If you don’t have a clear answer, you may be sprinting without the safety net this methodology assumes - and that’s precisely when caution matters most.

[1]

This is perhaps a bit harsh - I think SAEs for instance still might hold some promise, and neuron-based analysis still has its place, but I think it's fair to say the hype got quite ahead of itself.

[2]

Exactly how much methodological caution is warranted here will obviously be a point of contention. Everyone thinks the people going faster than them are reckless and the people going slower are needlessly worried. My point here is just to think actively about the question - don't just blindly inherit standards from ML.

Why not require model organisms with known ground truth and see if the methods accurately reveal them, like in the paper? From the abstract of that paper:

Additionally, we argue for scientists using complex non-linear dynamical systems with known ground truth, such as the microprocessor as a validation platform for time-series and structure discovery methods.

This reduces the problem from covering all sources of doubt to making a sufficiently realistic model organism. This was our idea with InterpBench, and I still find it plausible that with better execution one could iterate on it (or the probe, crosscoder, etc. equivalent) and make interpretability progress.

Yeah, I completely agree this is a good research direction! My only caveat is I don’t think this is a silver bullet in the same way capabilities benchmarks are (not sure if you’re arguing this, just explaining my position here). The inevitable problem with interpretability benchmarks (which to be clear, your paper appears to make a serious effort to address) is that you either:

- Train the model in a realistic way - but then you don’t know if the model really learned the algorithm you expected it to

- Train the model to force it to learn a particular algorithm - but then you have to worry about how realistic that training method (or the algorithm you forced it to learn) is

This doesn’t seem like an unsolvable problem to me, but it does mean that (unlike capabilities benchmarks) you can’t have the level of trust in your benchmarks that would allow you to bypass traditional scientific hygiene. In other words, there are enough subtle strings attached here that you can’t just naively try to “make number go up” on InterpBench in the same way you can with MMLU.

I should probably emphasize that I think trying to bring interpretability into contact with various “ground truth” settings seems like a really high-value research direction, whether that be via modified training methods, toy models where the possible algorithms are fairly simple (e.g. modular addition), etc. I just don’t think it changes my point about methodological standards.

Research thrives on answering important questions. However, the trouble with interpretability for AI safety is that there are no important questions getting answered. Typically the real goal is to understand the neural networks well enough to know whether they are scheming, but that's a threefold bad idea:

- You cannot make incremental progress on it; either you know whether they are scheming, or you don't

- Scheming is not the main AI danger/x-risk

- Interpretability is not a significant bottleneck in detecting scheming (we don't even have good, accessible examples of contexts where AI is applied and scheming would be a huge risk)

To solve this, people substitute various goals, e.g. predictive accuracy, under the assumption that incremental predictive accuracy is helpful. But we already have perfectly adequate ways of predicting the behavior of the neural networks, it's called running the neural networks.

I’ve been trying to understand modules for a long time. They’re a particular algebraic structure in commutative algebra which seems to show up everywhere any time you get anywhere close to talking about rings - and I could never figure out why. Any time I have some simple question about algebraic geometry, for instance, it almost invariably terminates in some completely obtuse property of some module. This confused me. It was never particularly clear to me from their definition why modules should be so central, or so “deep.”

I’m going to try to explain the intuition I have now, mostly to clarify this for myself, but also incidentally in the hope of clarifying this for other people. I’m just a student when it comes to commutative algebra, so inevitably this is going to be rather amateur-ish and belabor obvious points, but hopefully that leads to something more understandable to beginners. This will assume familiarity with basic abstract algebra. Unless stated otherwise, I’ll restrict to commutative rings because I don’t understand much about non-commutative ones.

The typical motivation for modules: "vector spaces but with rings"

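The typical way modules are motivated is simple: they’re just vector spaces, but you relax the definition so that you can replace the underlying field with a ring. That is, an $R$-module is a ring $R$ and an abelian group $M$, together with an operation $R \times M \to M$, $(r, m) \mapsto r \cdot m$, that respects the ring structure of $R$, i.e. for all $r, s \in R$ and $m, n \in M$:

- $r \cdot (m + n) = r \cdot m + r \cdot n$

- $(r + s) \cdot m = r \cdot m + s \cdot m$

- $(rs) \cdot m = r \cdot (s \cdot m)$

- $1 \cdot m = m$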

This is literally the definition of a vector space, except we haven’t required our scalars to be a field, only a ring (i.e. multiplicative inverses don’t have to always exist). So, like, instead of multiplying vectors by real numbers or something, you multiply vectors by integers, or a polynomial - those are your scalars now. Sounds simple, right? Vector spaces are pretty easy to understand, and nobody really thinks about the underlying field of a vector space anyway, so swapping the field out shouldn’t be much of a big deal, right?
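To make the "vector spaces over a ring" reading concrete, here is a minimal Python sketch that brute-force checks the four module axioms for one small example: the integers $\mathbb{Z}$ acting on $(\mathbb{Z}/6)^2$. The axioms are distributivity over both additions, compatibility with ring multiplication, and $1 \cdot m = m$. The choice of example and the names `add`, `act`, and `satisfies_module_axioms` are illustrative assumptions. The scalars are only sampled from a finite range, so this is a sanity check, not a proof.

```python
from itertools import product

# Hypothetical example: the abelian group (Z/6)^2 ("vectors" with entries mod 6)
# with the ring Z of integers as scalars; most integers have no inverse mod 6.
N = 6
ELEMENTS = list(product(range(N), repeat=2))
SCALARS = range(-3, 4)  # a finite sample of integers to test against


def add(x, y):
    """Group operation: componentwise addition mod N."""
    return tuple((a + b) % N for a, b in zip(x, y))


def act(r, x):
    """Scalar action of the integer r: multiply each entry by r, reduce mod N."""
    return tuple((r * a) % N for a in x)


def satisfies_module_axioms():
    """Check the four module axioms on every vector and every sampled scalar."""
    for r, s in product(SCALARS, repeat=2):
        for x in ELEMENTS:
            if act(r + s, x) != add(act(r, x), act(s, x)):
                return False
            if act(r * s, x) != act(r, act(s, x)):
                return False
    for r in SCALARS:
        for x, y in product(ELEMENTS, repeat=2):
            if act(r, add(x, y)) != add(act(r, x), act(r, y)):
                return False
    return all(act(1, x) == x for x in ELEMENTS)


print(satisfies_module_axioms())  # True
```

Every check passes, even though scalars like 2 and 3 cannot be divided by.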

As you might guess from my leading questions, this intuition is profoundly wrong and misleading. Modules are far more rich and interesting than vector spaces. Vector spaces are quite boring; two vector spaces over the same field are isomorphic if and only if they have the same dimension. This means vector spaces are typically just thought of as background objects, a setting which you quickly establish before building the interesting things on top of. This is not the role modules play in commutative algebra - they carry far more structure intrinsically.

A better motivation for modules: "ring actions"

Instead, the way you should think about a module is as a representation of a ring or a ring action, directly analogous to group actions or group representations.[1] Modules are a manifestation of how the ring acts on objects in the “real world.”[2] Think about how group actions tell you how groups manifest in the real world: the dihedral group $D_4$ is a totally abstract object, but it manifests concretely in things like “mirroring/rotating my photo in Photoshop,” where implicitly there is now an action of $D_4$ on the pixels of my photo.
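A minimal Python sketch of the Photoshop example follows. It assumes a square photo stored as nested tuples of pixel values. The helper names are hypothetical and not the API of any real image library. Repeatedly rotating by 90 degrees and mirroring a photo with distinct pixels produces exactly 8 images, one per element of the dihedral group of the square. The group's defining relations also hold on the pixels.

```python
# Hypothetical model: a square "photo" stored as a tuple of row tuples of pixel values.

def rotate90(img):
    """Rotate a square image 90 degrees clockwise."""
    n = len(img)
    return tuple(tuple(img[n - 1 - c][r] for c in range(n)) for r in range(n))


def mirror(img):
    """Flip an image left to right."""
    return tuple(tuple(reversed(row)) for row in img)


def orbit(img):
    """Every image reachable from img by repeatedly rotating and mirroring."""
    seen = {img}
    frontier = [img]
    while frontier:
        current = frontier.pop()
        for move in (rotate90, mirror):
            nxt = move(current)
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return seen


def satisfies_dihedral_relations(img):
    """Check r^4 = 1, s^2 = 1 and s r s = r^-1 (= r^3) on one image."""
    r4 = rotate90(rotate90(rotate90(rotate90(img))))
    srs = mirror(rotate90(mirror(img)))
    r3 = rotate90(rotate90(rotate90(img)))
    return r4 == img and mirror(mirror(img)) == img and srs == r3


photo = ((1, 2, 3), (4, 5, 6), (7, 8, 9))  # distinct pixels make every symmetry visible
print(len(orbit(photo)))                    # 8
print(satisfies_dihedral_relations(photo))  # True
```

Because the pixels are distinct, no non-identity symmetry leaves the photo unchanged. This is why the orbit has one image per group element.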

In fact, we can define modules just by generalizing the idea of group actions to ring actions. How might we do this? Well, remember that a group action of a group $G$ on a set $X$ is just a group homomorphism $G \to \mathrm{Sym}(X)$, where $\mathrm{Sym}(X)$ is the group of all bijections from $X$ to itself. In other words, for every element of $G$, we just have to specify how it transforms elements of $X$. Can we get a “ring action” of a ring $R$ on a set $X$ just by looking at a ring homomorphism $R \to \mathrm{End}(X)$, where $\mathrm{End}(X)$ is the set of all maps from $X$ to itself?

That doesn’t quite work, because $\mathrm{End}(X)$ is in general only a monoid under composition, not a ring - what would the “addition” operation be? But if we insist that $X$ is a group, and take $\mathrm{End}(X)$ to mean its group endomorphisms, then the group's addition turns $\mathrm{End}(X)$ into a ring, with the operation $(f + g)(x) = f(x) + g(x)$. (This addition rule only works to produce another endomorphism if $X$ is abelian, which is a neat little proof in itself!) Now our original idea works: we can define a “ring action” of a ring $R$ on an abelian group $M$ as a ring homomorphism $R \to \mathrm{End}(M)$.
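Here is a small brute-force check of the parenthetical claim, as a Python sketch. In the abelian group $\mathbb{Z}/6$, the pointwise "sum" of two endomorphisms is again an endomorphism. The non-abelian group $S_3$ is written multiplicatively, so its group operation plays the role of "+". There, the pointwise sum of the identity map with itself, $x \mapsto x^2$, fails to be a homomorphism. The choice of groups and the helper names are illustrative assumptions.

```python
from itertools import permutations, product


def is_endomorphism(f, elements, op):
    """True if f(a op b) == f(a) op f(b) for every pair of elements."""
    return all(f(op(a, b)) == op(f(a), f(b)) for a, b in product(elements, repeat=2))


def pointwise_sum(f, g, op):
    """The candidate 'sum' of two maps: (f + g)(x) = f(x) op g(x)."""
    return lambda x: op(f(x), g(x))


def identity(x):
    return x


# Non-abelian case: S3 as permutations of (0, 1, 2) under composition.
S3 = list(permutations(range(3)))


def compose(p, q):
    """(p * q)(i) = p(q(i)): apply q first, then p."""
    return tuple(p[q[i]] for i in range(3))


square = pointwise_sum(identity, identity, compose)  # x -> x * x
print(is_endomorphism(identity, S3, compose))  # True
print(is_endomorphism(square, S3, compose))    # False

# Abelian case: Z/6, whose endomorphisms are exactly x -> k*x mod 6.
Z6 = list(range(6))


def add6(a, b):
    return (a + b) % 6


ENDOS_Z6 = [lambda x, k=k: (k * x) % 6 for k in range(6)]
print(all(is_endomorphism(pointwise_sum(f, g, add6), Z6, add6)
          for f in ENDOS_Z6 for g in ENDOS_Z6))  # True
```

The failure in $S_3$ comes from two transpositions $a, b$: $a^2 b^2$ is the identity, but $(ab)^2$ is a 3-cycle.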

It turns out that this is equivalent to our earlier definition of a module: our “ring action” of $R$ on $M$ is literally just the definition of an $R$-module $M$. We got to the definition of a module just by taking the definition of a group action and generalizing to rings in the most straightforward possible way.

To make this more concrete, let’s look at a simple illustrative example, which connects modules directly to linear algebra.

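- Let our ring be $\mathbb{C}[x]$, the ring of polynomials with complex coefficients.

- Let our abelian group be $\mathbb{C}^2$, the standard 2D (complex) plane.

A module structure is a ring homomorphism $\phi: \mathbb{C}[x] \to \mathrm{End}(\mathbb{C}^2)$. If we also require the constant polynomials to act by ordinary complex scalar multiplication (so that $\phi$ is a homomorphism of $\mathbb{C}$-algebras), then every $\phi(p)$ is $\mathbb{C}$-linear, and the ring of $\mathbb{C}$-linear endomorphisms of $\mathbb{C}^2$ is just the ring of 2x2 complex matrices, $M_2(\mathbb{C})$.

Now, such a homomorphism out of a polynomial ring like $\mathbb{C}[x]$ is completely determined by one thing: where you send the variable $x$. So, to define a whole module structure, all we have to do is choose a single 2x2 matrix $A = \phi(x)$ to be the image of $x$.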

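The action of any other polynomial, say $p(x) = a_n x^n + \dots + a_1 x + a_0$, on a vector $v \in \mathbb{C}^2$ is then automatically defined: $p(x) \cdot v = (a_n A^n + \dots + a_1 A + a_0 I)\,v$. In short, the study of $\mathbb{C}[x]$-modules on $\mathbb{C}^2$ is literally the same thing as the study of 2x2 matrices.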
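To see that one matrix really does determine the whole action, here is a minimal Python sketch. It uses plain tuples rather than a linear-algebra library, and the helper names are hypothetical. It evaluates $p(x) \cdot v = p(A)v$ by Horner's rule. It also checks the module axiom $(pq) \cdot v = p \cdot (q \cdot v)$ on one example, with $A = \operatorname{diag}(2, 3)$ chosen purely for illustration.

```python
# Hypothetical helpers: 2x2 matrices are tuples of rows, vectors are 2-tuples,
# and a polynomial a0 + a1 x + ... + an x^n is the coefficient list [a0, ..., an].
# Entries may be ints or Python complex numbers.

def mat_vec(A, v):
    """Multiply the 2x2 matrix A by the vector v."""
    return (A[0][0] * v[0] + A[0][1] * v[1], A[1][0] * v[0] + A[1][1] * v[1])


def poly_act(coeffs, A, v):
    """Compute p(x) . v = p(A) v with Horner's rule, without forming p(A)."""
    result = (0, 0)
    for c in reversed(coeffs):
        Ar = mat_vec(A, result)
        result = (Ar[0] + c * v[0], Ar[1] + c * v[1])
    return result


def poly_mul(p, q):
    """Coefficient list of the product polynomial p * q."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out


A = ((2, 0), (0, 3))
p = [1, 0, 1]   # 1 + x^2
q = [-2, 1]     # x - 2
v = (1, 1)

print(poly_act(p, A, v))  # (5, 10)
# Module axiom (pq) . v = p . (q . v):
print(poly_act(poly_mul(p, q), A, v) == poly_act(p, A, poly_act(q, A, v)))  # True
```

Horner's rule is only a convenience here. Computing $A^2 + I$ explicitly and applying it to $v$ gives the same $(5, 10)$.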

Thinking this way immediately clears up some confusing “module” properties. For instance, in a vector space, if a scalar times a vector is zero ($c v = 0$), then either $c = 0$ or $v = 0$. This fails for modules. In our $\mathbb{C}[x]$-module defined by the matrix $A = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}$, the polynomial $x^2$ is not zero, but for any vector $v$, $x^2 \cdot v = A^2 v = 0$. This phenomenon, called torsion, feels strange from a vector space view, but from the “ring action” view, it's natural: some of our operators (like $A^2$) can just happen to be the zero transformation.
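Here is a quick numerical check of the torsion example as a Python sketch, using plain tuples (the helper names are hypothetical). With $x$ acting by the nilpotent matrix $\begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}$, the nonzero polynomial $x^2$ kills every sampled vector, even though $x$ itself does not. The last lines show the same effect for $x$ acting as $\operatorname{diag}(2, 3)$, where $(x - 2)(x - 3)$ acts as zero. So torsion here is not a quirk of nilpotent matrices.

```python
from itertools import product


def mat_mul(A, B):
    """Product of two 2x2 matrices stored as tuples of rows."""
    return tuple(
        tuple(sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def mat_vec(A, v):
    """Apply a 2x2 matrix to a 2-vector."""
    return tuple(sum(A[i][k] * v[k] for k in range(2)) for i in range(2))


def shift(M, c):
    """M - c*I for a 2x2 matrix M."""
    return ((M[0][0] - c, M[0][1]), (M[1][0], M[1][1] - c))


NIL = ((0, 1), (0, 0))           # x acts by this matrix
NIL_SQUARED = mat_mul(NIL, NIL)  # so x^2 acts by its square
SAMPLE = list(product(range(-2, 3), repeat=2))

print(NIL_SQUARED)                                             # ((0, 0), (0, 0))
print(all(mat_vec(NIL_SQUARED, v) == (0, 0) for v in SAMPLE))  # True
print(mat_vec(NIL, (0, 1)))                                    # (1, 0)

# With x acting as diag(2, 3), the nonzero polynomial (x - 2)(x - 3) acts as zero.
D = ((2, 0), (0, 3))
print(mat_mul(shift(D, 2), shift(D, 3)))                       # ((0, 0), (0, 0))
```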

Why are modules so deep?

Seeing modules as “ring actions” starts to explain why you should care about them, but it still doesn’t explain why the theory of modules is so deep. After all, if we compare to group actions, the theory of group actions is in some sense just the theory of groups - there’s really not much to say about a faithful group action beyond the underlying group itself. Group theorists don’t spend much time studying group actions in their own right - why do commutative algebraists seem so completely obsessed with modules? Why are modules more interesting?

The short answer is that the theory of modules is far richer: even when it comes to “faithful” modules, there may be multiple, non-isomorphic modules for the same ring and abelian group . In other words, unlike group actions, the ways in which a given ring can manifest concretely are not unique, even when acting faithfully on the same object.

To show this concretely, let’s return to our $\mathbb{C}[x]$-module example. Remember that a $\mathbb{C}[x]$-module with structure $\phi: \mathbb{C}[x] \to M_2(\mathbb{C})$ is determined just by $A = \phi(x)$, where we choose to send the element $x$. We can define two different modules here which are not isomorphic:

- First, suppose $A_1 = \begin{pmatrix} 2 & 0 \\ 0 & 3 \end{pmatrix}$. In this module, the action of the polynomial $x$ on a vector $(v_1, v_2)$ is: $x \cdot (v_1, v_2) = (2v_1, 3v_2)$. The action of $x$ is to scale the first coordinate by 2 and the second by 3.

- Alternatively, suppose $A_2 = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}$. In this module, the action of $x$ on a vector $(v_1, v_2)$ is: $x \cdot (v_1, v_2) = (v_2, 0)$. The action of $x$ sends the vector $(0, 1)$ to $(1, 0)$. The action of $x^2$ sends everything to the zero vector because $A_2^2$ is the zero matrix.

These are radically different modules.

- In module $M_1$ (defined by $A_1$), the action of $x$ is diagonalizable. The module can be broken down into two simple submodules (the x-axis and the y-axis). This is a semisimple module.

- In module $M_2$ (defined by $A_2$), the action of $x$ is not diagonalizable. There is a submodule (the x-axis, spanned by $(1, 0)$) but it has no complementary submodule. $M_2$ is indecomposable but not simple.
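One way to see concretely that the diagonal module and the nilpotent module differ is sketched below in Python. A one-dimensional submodule is a line spanned by a vector $v$ with $Av$ parallel to $v$ (an eigenvector). The search only tries small integer directions. That assumption happens to suffice for these two matrices, because all their eigenvectors lie on the coordinate axes. A general complex matrix would need a genuine eigenvector computation instead. Isomorphic modules have the same number of one-dimensional submodules. So finding two such lines for $\operatorname{diag}(2, 3)$ but only one for the nilpotent matrix confirms that the modules are not isomorphic.

```python
from itertools import product
from math import gcd


def invariant_lines(A, radius=3):
    """Lines through the origin that the 2x2 matrix A maps into themselves.

    Each such line is a 1-dimensional submodule of the C[x]-module on C^2
    where x acts by A. Only integer directions with entries in
    [-radius, radius] are tried (enough for the two example matrices).
    """
    lines = set()
    for a, b in product(range(-radius, radius + 1), repeat=2):
        if (a, b) == (0, 0):
            continue
        Av = (A[0][0] * a + A[0][1] * b, A[1][0] * a + A[1][1] * b)
        if a * Av[1] - b * Av[0] == 0:  # zero 2D cross product: Av is parallel to v
            g = gcd(a, b)
            a_n, b_n = a // g, b // g  # primitive direction vector
            if a_n < 0 or (a_n == 0 and b_n < 0):
                a_n, b_n = -a_n, -b_n  # one representative per line
            lines.add((a_n, b_n))
    return lines


DIAGONAL = ((2, 0), (0, 3))
NILPOTENT = ((0, 1), (0, 0))
print(sorted(invariant_lines(DIAGONAL)))   # [(0, 1), (1, 0)]
print(sorted(invariant_lines(NILPOTENT)))  # [(1, 0)]
```

The single invariant line of the nilpotent module is the x-axis. It has no invariant complement, which is exactly the indecomposability described above.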

The underlying abelian group $\mathbb{C}^2$ is the same in both cases. The ring $\mathbb{C}[x]$ is the same. But the "action" - which is determined by our choice of the matrix $A$ - creates completely different algebraic objects.

The analogy: a group is a person, a ring is an actor

This was quite a perspective shift for me, and something I had trouble thinking about intuitively. A metaphor I found helpful is to think of it like this: a group is like a person, while a ring is like an actor.

- A group is a person: A group $G$ has a fixed identity. Its essence is its internal structure of symmetries. When a group $G$ acts on a set $X$, it's like a person interacting with their environment. The person doesn't change; the action simply reveals their inherent nature. If the action is trivial, it means that person has no effect on that particular environment. If it's faithful, the environment perfectly reflects the person's structure. But the person remains the same entity. The question is: "What does this person do to this set?"

- A ring is an actor: A ring $R$ is more like a professional actor. An actor has an identity, skills, and a range (the ring's internal structure). But their entire purpose is to manifest as different characters in different plays.

  - The module $M$ is the performance.

  - The underlying abelian group of $M$ is the stage.

  - The specific module structure (the homomorphism $R \to \mathrm{End}(M)$) is the role or the script the actor is performing.

Using our example: The actor is the polynomial ring, $\mathbb{C}[x]$. This actor has immense range.

- Performance 1: The actor is cast in the role $x \mapsto \begin{pmatrix} 2 & 0 \\ 0 & 3 \end{pmatrix}$. The performance is straightforward, stable, and easily understood (semisimple).

- Performance 2: The same actor is cast in the role $x \mapsto \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}$. The performance is a complex tragedy. The character's actions are subtle, entangled, and ultimately lead to nothingness (nilpotent).

The "actor" $\mathbb{C}[x]$ is the same. The "stage" $\mathbb{C}^2$ is the same. But the "performances" are fundamentally different. To understand the actor $\mathbb{C}[x]$, you cannot just watch one performance. You gain a more complete appreciation for their range, subtlety, and power by seeing all the different roles they can inhabit.

Why can rings manifest in multiple ways?

It’s worth pausing for a moment to notice the surprising fact that modules can have this sort of intricate structure, when group actions don’t. What is it about rings that lets them act in so many different “roles”, in a way that groups can’t?

In short, the ring axioms force the action of a ring’s elements to behave like linear transformations rather than simple permutations.

- A group only has one operation. Its action only needs to preserve that one operation: $(gh) \cdot x = g \cdot (h \cdot x)$. It's a rigid structural mapping.

- A ring has two operations ($+$ and $\times$) that are linked by distributivity. A ring action (module) must preserve both. It must be a homomorphism of rings. This extra constraint (linearity with respect to addition) is what paradoxically opens the door to variety. It demands that the ring's elements behave not just like permutations, but like linear transformations. And there are many, many ways to represent a system of abstract operators as concrete linear transformations on a space. The vast gap between group actions and group representations can be seen as a microcosm of this idea.

The object is its category of actions

Part of the reason why I like this metaphor is because it points towards a deeper understanding of how we should think about rings in the first place. This leads to, from my position, one of the most fascinating ideas in modern algebra:

You do not truly understand a ring $R$ by looking at $R$ itself. You understand $R$ by studying the category of all possible $R$-modules.

The "identity" of the ring is not its list of elements, but the totality of all the ways it can manifest - all the performances it can give.

- A simple ring like a field is a "typecast actor." Every performance (every vector space) looks basically the same (it's determined by one number: the dimension).

- A complex ring like $\mathbb{C}[x]$ or the ring of differential operators is a "virtuoso character actor." It has an incredibly rich and varied body of work, and its true nature is only revealed by studying the patterns and structures across all of its possible roles (the classification of its modules).

So, why care?

Alright, so hopefully some of that makes sense. We started with the (in my opinion) unhelpful idea of a module as a "vector space over a ring" and saw that it's much more illuminating to see it as a "ring action" - the way a ring shows up in the world. The story got deeper when we realized that, unlike groups, rings can show up in many fundamentally different ways, analogous to an actor playing a vast range of roles.

So where does this leave us? For me, at least, modules don't feel as alien anymore. At the risk of being overly glib, the reason modules seem to show up everywhere is because they are everywhere. They are the language we use to talk about the manifestations of rings. When a property of a geometric space comes down to a property of a module, it's because the ring of functions on that space is performing one of its many roles, and the module is that performance.

You could take this further (structure of modules over PIDs, homological algebra, etc.), but this seems like a good place to stop for now. Hopefully this was helpful!

[1]

In fact, modules literally generalize group representation theory!

[2]

Sometimes in a quite literal way. In quantum mechanics, the ring is an algebra of observables, and a specific physical system is defined by choosing a specific operator for the Hamiltonian, $H$. This is directly analogous to how we chose a specific matrix $A$ for $x$ to define our module, as in the example of $\mathbb{C}[x]$-modules studied in the main text.

Yeah it's a nice metaphor. And just as the most important thing in a play is who dies and how, so too we can consider any element $m \in M$ as a module homomorphism $R \to M$, $r \mapsto rm$, and consider the kernel $\mathrm{Ann}(m)$, which is called the annihilator (great name). Then $R \to M$ factors as $R \to R/\mathrm{Ann}(m) \to M$, where the second map is injective, and so in some sense $M$ is "made up" of all sorts of quotients $R/I$ where $I$ varies over annihilators of elements.
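Here is a minimal Python illustration of the annihilator and the factorization $R \to R/\mathrm{Ann}(m) \to M$. It uses the hypothetical choice $R = \mathbb{Z}$ and $M = \mathbb{Z}/12$; any finite cyclic group would do. For each element $m$, it finds the generator $k$ of $\mathrm{Ann}(m) = k\mathbb{Z}$. It then checks that the submodule generated by $m$ has exactly $k$ elements, as the injectivity of $R/\mathrm{Ann}(m) \to M$ requires.

```python
from math import gcd

N = 12  # hypothetical example: R = Z acting on the Z-module M = Z/12


def annihilator_generator(m, n=N):
    """Smallest k >= 1 with k*m = 0 in Z/n, so that Ann(m) = kZ."""
    k = 1
    while (k * m) % n != 0:
        k += 1
    return k


def cyclic_submodule(m, n=N):
    """Image of the map Z -> Z/n, r -> r*m (r mod n suffices since n*m = 0)."""
    return {(r * m) % n for r in range(n)}


def check_factorization(n=N):
    """Z/Ann(m) = Z/kZ injects onto Z*m, so both must have exactly k elements."""
    return all(
        len(cyclic_submodule(m, n)) == annihilator_generator(m, n) == n // gcd(m, n)
        for m in range(n)
    )


print({m: annihilator_generator(m) for m in range(N)})
# {0: 1, 1: 12, 2: 6, 3: 4, 4: 3, 5: 12, 6: 2, 7: 12, 8: 3, 9: 4, 10: 6, 11: 12}
print(check_factorization())  # True
```

In this example $M$ is "made up" of the quotients $\mathbb{Z}/k\mathbb{Z}$ for the divisors $k$ of 12. These are exactly the annihilators that appear in the table.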

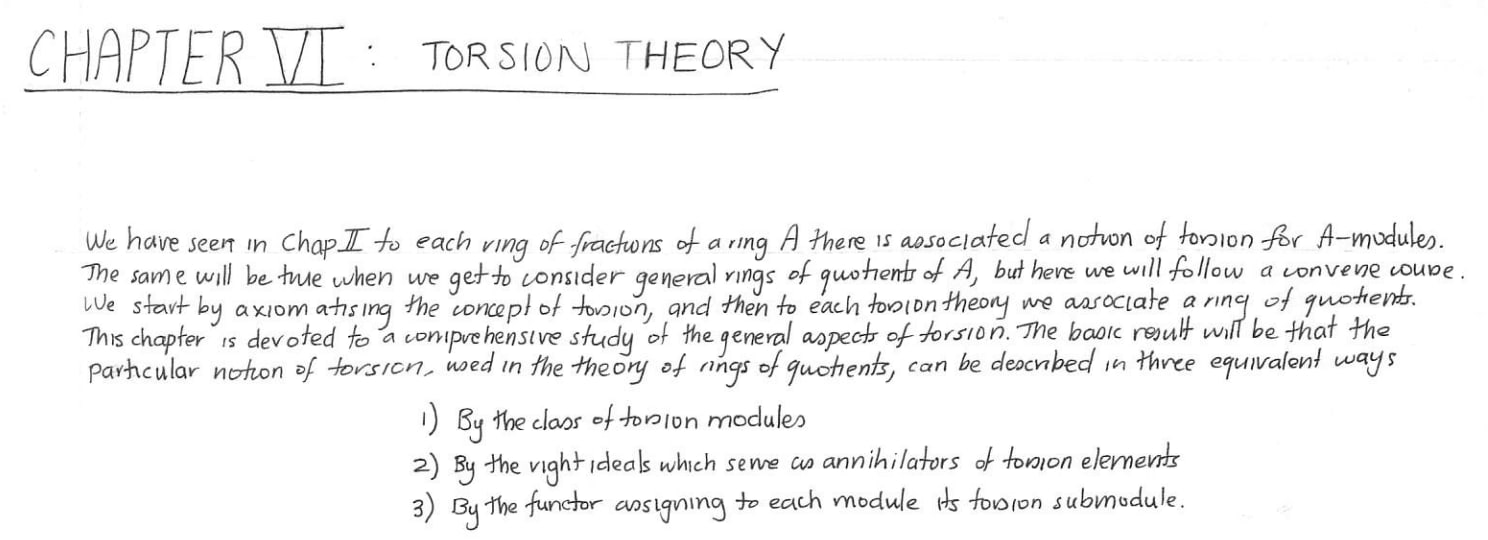

There was a period where the structure of rings was studied more through the theory of ideals (historically this was in turn motivated by the idea of an "ideal" number), but through ideas like the above you can see the theory of modules as a kind of "externalisation" of this structure, which in various ways makes it easier to think about. One manifestation of this I fell in love with (actually this was my entry point into all this, since my honours supervisor was an old-school ring theorist and gave me Stenström to read) is in torsion theory.

I was taught the more classical 'ideal' point of view on the structure of rings in school. I'm curious if [and why] you regard the annihilator point of view as possibly more fecund?

Modules are just much more flexible than ideals. Two major advantages:

- Richer geometry. An ideal is a closed subscheme of Spec(R), while modules are quasicoherent sheaves. An element x of M is a global section of the associated sheaf, and the ideal Ann(x) corresponds to the vanishing locus of that section. This leads to a nice geometric picture of associated primes and primary decomposition which explains how finitely generated modules are built out of modules R/P with P a prime ideal (I am not an algebraist at heart, so for me the only way to remember the statement of primary decomposition is to translate from geometry 😅)

- Richer (homological) algebra. Modules form an abelian category in which ideals do not play an especially prominent role (unless one looks at monoidal structure but let's not go there). The corresponding homological algebra (coherent sheaf cohomology, derived categories) is the core engine of modern algebraic geometry.

Historically commutative algebra came out of algebraic number theory, and the rings involved - Z, Z_p, number rings, p-adic local rings... - are all (in the modern terminology) Dedekind domains.

Dedekind domains are not always principal, and this was the reason why mathematicians started studying ideals in the first place. However, the structure of finitely generated modules over Dedekind domains is still essentially determined by ideals (or rather fractional ideals), reflecting to some degree the fact that their geometry is simple (1-dim regular Noetherian domains).

This could explain why there was a period where ring theory developed around ideals but the need for modules was not yet clarified?

There's a certain point where commutative algebra outgrows arguments that are phrased purely in terms of ideals (e.g. at some point in Matsumura the proofs stop being about ideals and elements and start being about long exact sequences and Ext, Tor). Once you get to that point, and even further to modern commutative algebra which is often about derived categories (I spent some years embedded in this community), I find that I'm essentially using a transplanted intuition from that "old world" but now phrased in terms of diagrams in derived categories.

For instance, a lot of Atiyah and Macdonald style arguments just reappear as, e.g., arguments about how to use the residue field to construct bounded complexes of finitely generated modules in the derived category of a local ring. Reconstructing that intuition in the derived category is part of making sense of the otherwise gun-metal machinery of homological algebra.

Ultimately I don't see it as different, but the "externalised" view is the one that plugs into homological algebra and therefore, ultimately, wins.

(Edit: saw Simon's reply after writing this, yeah agree!)

Some of my favourite topics in pure mathematics! Two quick general remarks:

- I don't hold such a strong qualitative distinction between the theory of group actions, and in particular linear representations, and the theory of modules. They are both ways to study an object by having it act on auxiliary structures/geometry. Because there are in general fewer tools to study group actions than modules, a lot of pure mathematics is dedicated to linearizing the former to the latter in various ways.

- There is another perspective on modules over commutative rings which is central to algebraic geometry: modules are a specific type of sheaves which generalize vector bundles. More precisely, a module over a commutative ring R is equivalent to a "quasicoherent sheaf" on the affine scheme Spec(R), and finitely generated projective modules correspond in this way to vector bundles over Spec(R). Once you internalise this equivalence, most of the basic theory of modules in commutative algebra becomes geometrically intuitive, and this is the basis for many further developments in algebraic geometry.

BTW the geometric perspective might sound abstract (and setting it up rigorously definitely is!) but it is in many ways more concrete than the purely algebraic one. For instance, a quasicoherent sheaf is in first approximation a collection of vector spaces (over varying "residue fields") glued together in a nice way over the topological space Spec(R), and this clarifies a lot how and when questions about modules can be reduced to ordinary linear algebra over fields.