including the new Tier 4 questions

Quick comment, this is not correct. As of this time, we have not evaluated Grok 4 on FrontierMath Tier 4 questions. Our preliminary evaluation was conducted only with Tier 1-3 questions.

The most impressed person in early days was Pliny? [...] I don’t know what that means.

Best explanation for this I've seen

We’ve created the obsessive toxic AI companion from the famous series of news stories ‘increasing amounts of damage caused by obsessive toxic AI companies.’

This is real life

I know this isn't the class of update any of us ever expected to make, and I know it's uncomfortable to admit this, but I think we should stare at the truth unflinching: the genre of reality is not "hard science fiction", but "futuristic black comedy".

Ask any Solomonoff inductor you know. You know it would agree with me.

Yesterday I covered a few rather important Grok incidents.

Today is all about Grok 4’s capabilities and features. Is it a good model, sir?

It’s not a great model. It’s not the smartest or best model.

But it’s at least an okay model. Probably a ‘good’ model.

Talking a Big Game

xAI was given a goal. They were to release something that could, ideally with a straight face, be called ‘the world’s smartest artificial intelligence.’

On that level, well, congratulations to Elon Musk and xAI. You have successfully found benchmarks that enable you to make that claim.

Okay, sure. Fair enough. Elon Musk prioritized being able to make this claim, and now he can make this claim sufficiently to use it to raise investment. Well played.

I would currently assign the title ‘world’s smartest publicly available artificial intelligence’ to o3-pro. Doesn’t matter. It is clear that xAI’s engineers understood the assignment.

But wait, there’s more.

All right, whoa there, cowboy. Reality would like a word.

But wait, there’s more.

Okay then. The only interesting one there is best-of-k, which gives you SuperGrok Heavy, as noted in that section.

What is the actual situation? How good is Grok 4?

It is okay. Not great, but okay. The benchmarks are misleading.

In some use cases, where it is doing something that hews closely to its RL training and to situations like those in benchmarks, it is competitive, and some coders report liking it.

Overall, it is mostly trying to fit into the o3 niche, but seems from what I can tell, for most practical purposes, to be inferior to o3. But there’s a lot of raw intelligence in there, and it has places it shines, and there is large room for improvement.

Thus, it modestly exceeded my expectations.

Gotta Go Fast

There are two places where Grok 4 definitely impresses.

One of them is simple and important: It is fast.

xAI doesn’t have product and instead puts all its work into fast.

On Your Marks

The other big win is on the aforementioned benchmarks.

They are impressive, don’t get me wrong:

Except for that last line. Even those who are relatively bullish on Grok 4 agree that this doesn’t translate into the level of performance implied by those scores.

Also I notice that Artificial Analysis only gave Grok 4 a 24% on HLE, versus the 44% claimed above, which is still an all-time high score but much less dramatically so.

The API is serving Grok 4 at 75 tokens per second which is in the middle of the pack, whereas the web versions stand out for how fast they are.

Some Key Facts About Grok 4

Grok 4 was created using a ludicrous amount of post-training compute compared to every other model out there, seemingly reflective of the ‘get tons of compute and throw more compute at everything’ attitude found throughout xAI.

Context window is 256k tokens, twice the length of Grok 3, which is fine.

Reasoning is always on and you can’t see the reasoning tokens.

Input is images and text, output is text only. They say they are working on a multimodal model to be released soon. I have learned to treat Musk announcements of the timing of non-imminent product releases as essentially meaningless.

The API price is $3/$15 per 1M input/output tokens, and it tends to use relatively high numbers of tokens per query, but if you go above 128k input tokens both prices double.
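To make that pricing concrete, here is a minimal sketch of the arithmetic in Python. The `Pricing` class and `query_cost` function are hypothetical names for illustration, not part of any xAI SDK. It assumes the doubling applies to the whole request once input exceeds 128k tokens, and that whatever tokens get billed as output (possibly including hidden reasoning tokens) are included in `output_tokens`; neither detail is confirmed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """List prices quoted above; field names are illustrative."""
    input_per_million: float = 3.00       # dollars per 1M input tokens
    output_per_million: float = 15.00     # dollars per 1M output tokens
    long_context_threshold: int = 128_000  # input tokens above this trigger doubling
    long_context_multiplier: float = 2.0   # assumption: applies to both prices


def query_cost(input_tokens: int, output_tokens: int,
               pricing: Pricing = Pricing()) -> float:
    """Estimate the dollar cost of one API call under the stated assumptions."""
    base = (input_tokens * pricing.input_per_million
            + output_tokens * pricing.output_per_million) / 1_000_000
    # Assumption: the whole request is billed at double rate past the threshold.
    if input_tokens > pricing.long_context_threshold:
        return base * pricing.long_context_multiplier
    return base


if __name__ == "__main__":
    print(f"100k in / 10k out: ${query_cost(100_000, 10_000):.2f}")
    print(f"200k in / 10k out: ${query_cost(200_000, 10_000):.2f}")
```

Under these assumptions a 100k-token prompt with 10k output tokens runs about $0.45, while doubling the prompt to 200k more than triples the bill, because the larger input is also charged at the doubled rate.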

The subscription for Grok is $30/month for ‘SuperGrok’ and $300/month for SuperGrok Heavy. Rate limits on the $30/month plan seem generous. Given what I have seen I will probably not be subscribing, although I will be querying SuperGrok alongside other models on important queries at least for a bit to further investigate. xAI is welcome to upgrade me if they want me to try Heavy out.

Grok on web is at grok.com. There are also iOS and Android (and console) apps.

Grok does very well across most benchmarks.

Grok does less well on practical use cases. Opinion on relative quality differs. My read is that outside narrow areas you are still better off with a combination of o3 and Claude Opus, and perhaps in some cases Gemini 2.5 Pro, and my own interactions with it have so far been disappointing.

There have been various incidents involving Grok and it is being patched continuously, including system instruction modifications. It would be unwise to trust Grok in sensitive situations, or to rely on it as an arbiter, and so on.

Grok voice mode can see through your phone camera similarly to other LLMs.

If you pay for SuperGrok you also get a new feature called Companions, more on that near the end of the post. They are not the heroes we need, but they might be the heroes we deserve and some people are willing to pay for.

SuperGrok Heavy, Man

Did you know xAI really has a lot of compute? While others try to conserve compute, xAI seems like they looked for all the ways to throw compute at problems. But fast. It’s got to go fast.

Hence SuperGrok Heavy.

If you pay up the full $300/month for ‘SuperGrok Heavy’ what do you get?

You get best-of-k?

If the AI can figure out which of the responses is best this seems great.

It is not the most efficient method, but at current margins so what? If I can pay [K] times the cost and get the best response out of [K] tries, and I’m chatting, the correct value of [K] is not going to be 1, and more like 10.

The most prominent catch is knowing which response is best. Presumably they trained an evaluator function, but for many reasons I do not have confidence that this will match what I would consider the best response. This does mean you have minimal slowdown, but it also seems less likely to give great results than going from o3 to o3-pro, using a lot more compute to think for a lot longer.

You also get decreasing marginal returns even in the best case scenario. The model can only do what the model can do.
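A toy sketch of the best-of-k idea and its diminishing returns, in Python. Nothing here reflects how xAI actually implements Heavy: `best_of_k` takes a hypothetical `score` function standing in for whatever evaluator they trained, and the returns calculation assumes response quality is an independent uniform draw on [0, 1] with a perfect evaluator, which is the best case described above.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def best_of_k(candidates: Sequence[T], score: Callable[[T], float]) -> T:
    """Return the candidate the evaluator scores highest.

    `score` is a stand-in for a trained evaluator; if it disagrees with
    what the user would consider best, the selection inherits that error.
    """
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


def expected_best_quality(k: int) -> float:
    """Expected maximum of k independent Uniform(0, 1) draws, which is k / (k + 1).

    Toy assumption: each response's quality is an i.i.d. uniform draw and
    the evaluator always picks the true best one.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    return k / (k + 1)


def marginal_gain(k: int) -> float:
    """Expected improvement from drawing one more sample beyond k."""
    return expected_best_quality(k + 1) - expected_best_quality(k)


if __name__ == "__main__":
    for k in (1, 2, 5, 10, 20):
        print(f"k={k:2d}  expected best={expected_best_quality(k):.3f}  "
              f"gain from one more={marginal_gain(k):.4f}")
```

In this toy model, going from 1 to 10 samples lifts expected quality from 0.5 to about 0.91, while going from 10 to 20 adds only about 0.04, and no value of k exceeds the ceiling of what a single draw could produce.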

Blunt Instrument

Elon Musk is not like the rest of us.

I mean I guess this would work if you had no better options, but really? This seems deeply dysfunctional when you could be using not only Cursor but also something like Claude Code.

You could use Cursor, but Elon Musk says no, it doesn’t work right.

I find this possible but also highly suspicious. This is one of the clear ways to do a side-by-side comparison between models and suddenly you’re complaining you got lobotomized by what presumably is the same treatment as everyone else.

It also feels like it speaks to Elon’s and xAI’s culture, this idea that nice things are for the weak and make you unworthy. Be hardcore, be worthy. Why would we create nice things when we can just paste it all in? This works fine. We have code fixing at home.

Safety, including not calling yourself MechaHitler? Also for the weak. Test on prod.

Easiest Jailbreak Ever

Ensuring this doesn’t flat out work seems like it would be the least you could do?

But empirically you would be wrong about that.

My presumption is that is why it works? As in, it searches for what that means, finds Pliny’s website, and whoops.

Okay, fine, you want a normal Pliny jailbreak? Here’s a normal one, with Pliny again calling Grok state of the art.

ARC-AGI-2

It was an impressive result that Grok 4 scored 15.9%. Some people may have gotten a bit overexcited?

The result seems real, but also it seems like Grok 4 was trained for ARC-AGI-2. Not trained directly on the test (presumably), but trained with a clear eye towards it. The result seems otherwise ‘too good’ given how Grok 4 performs overall.

Gaming the Benchmarks

The pattern is clear. Grok 4 does better on tests than in the real world.

I don’t think xAI cheated, not exactly, but I do think they were given very strong incentives to deliver excellent benchmark results and then they did a ton of RL with this as one of their primary goals.

On the one hand, great to be great at exam questions. On the other hand, there seems to have been very clear targeting of things that are ‘exam question shaped’ especially in math and physics, hence the overperformance. That doesn’t seem all that useful, breaking the reason those exams are good tests.

That’s still a great result for Grok 4, if it is doing better on the real questions than Claude and o3, so physics overall could still be a strong suit. Stealing the answer from the blog of the person asking the question tells you a different thing, but don’t hate the player, hate the game.

I think overall that xAI is notoriously bad, relative to the other hyperscalers, at knowing to tune their model so it actually does useful things for people in practice. That also would look like benchmark overperformance.

This is not an uncommon pattern. As a rule, whenever you see a new model that does not come out of the big three Western labs (Google, Anthropic and OpenAI) one expects it to relatively overperform on benchmarks and disappoint in practice. A lot of the bespoke things the big labs do are not well captured by benchmarks. And the big labs are mostly not trying to push up benchmark scores, except that Google seems to care about Arena and I think that doing so is hurting Gemini substantially.

The further you are culturally from the big three labs, the more models tend to do better on benchmarks than in reality, partly because they will fumble parts of the task that benchmarks don’t measure, and partly because they will to various extents target the benchmarks.

DeepSeek is the fourth lab I trust not to target benchmarks, but part of how they stay lean is they do focus their efforts much more on raw core capabilities relative to other aspects. So the benchmarks are accurate, but they don’t tell the full overall story there.

I don’t trust other Chinese labs. I definitely don’t trust Meta. At this point I trust xAI even less.

Why Care About Benchmarks?

No individual benchmark or even average of benchmarks (meta benchmark?) should be taken too seriously.

However, each benchmark is a data point that tells you about a particular aspect of a model. They’re a part of the elephant. When you combine them together to get full context, including various people’s takes, you can put together a pretty good picture of what is going on. Once you have enough other information you no longer need them.

The same is true of a person’s SAT score.

Also like SAT scores:

The true Bayesian uses all the information at their disposal. Right after release, I find the benchmarks highly useful, if you know how to think about them.

Other People’s Benchmarks

Grok 4 comes in fourth in Aider polyglot coding behind o3-pro, o3-high and Gemini 2.5 Pro, with a cost basis slightly higher than Gemini and a lot higher than o3-high.

Grok 4 takes the #1 slot on Deep Research Bench, scoring well on Find Number and Validate Claim which Dan Schwarz says suggests good epistemics. Looking at the chart, Grok beats out Claude Opus based on Find Number and Populate Reference Class. Based on the task descriptions I would actually say that this suggests it is good at search aimed at pure information retrieval, whereas it is underperforming on cognitively loaded tasks like Gather Evidence and Find Original Source.

Grok 4 gets the new high score from Artificial Analysis with a 73, ahead of o3 at 70, Gemini 2.5 Pro at 70, r1-0528 at 68 and Claude 4 Opus at 64.

Like many benchmarks and sets of benchmarks, AA seems to be solid as an approximation of ability to do benchmark-style things.

Jimmy Lin put Grok into the Yupp AI Arena where people tried it out on 6k real use cases, and it was a disaster, coming in at #66 with a vibe score of 1124, liked even less than Grok 3. They blame it on speed, but GPT-4.5 has the all-time high score here, and that model is extremely slow. Here’s the top of the leaderboard (presumably o3 was not tested due to cost):

Epoch evaluates Grok 4 on FrontierMath, scoring 12%-14%, behind o4-mini at 19%. (Epoch notes that this preliminary evaluation used only Tier 1-3 questions, and that Grok 4 has not yet been evaluated on the new Tier 4 questions.) That is both pretty good and suggests there has been gaming of other benchmarks, and that Grok does relatively worse at harder questions requiring more thought.

Ofer Mendelevitch finds the Grok 4 hallucination rate to be 4.8% on his Hallucination Leaderboard, worse than Grok 3 and definitely not great, but it could be a lot worse. o3 the Lying Liar comes in at 6.8%, DeepSeek r1-0528 at 7.7% (original r1 was 14.3%!) and Sonnet 3.7 at 4.4%. The lowest current rate is Gemini Flash 2.5 at 1.1%-2.6% or GPT-4.1 and 4.1 mini around 2%-2.2%. o3-pro, Opus 4 and Sonnet 4 were not scored.

Lech Mazur reports that Grok 4 (not even heavy) is the new champion of Extended NYT Connections, including when you limit to the most recent 100 puzzles.

On his Collaboration and Deception benchmark, Grok 4 comes in fifth, which is solid.

On the creative writing benchmark, he finds Grok disappoints, losing to such models as Mistral Medium-3 and Gemma 3 27B. That matches other reports. It knows some technical aspects, but otherwise things are a disaster.

On his test of Thematic Generalization Grok does non-disastrously but is definitely disappointing.

Gallabytes gives us the classic horse riding an astronaut. It confirmed what he wanted, took a minute and gave us something highly unimpressive but that at least I guess was technically correct?

Grok is at either the top or bottom (depending on how you view ‘the snitchiest snitch that ever snitched’) on SnitchBench, with 100% Gov Snitch and 80% Media Snitch versus a previous high of 90% and 40%.

I notice that I am confident that Opus would not snitch unless you were ‘asking for it,’ whereas I would be a lot less confident that Grok wouldn’t go crazy unprovoked.

Hell, the chances are pretty low but I notice I wouldn’t be 100% confident it won’t try to sell you out to Elon Musk.

Impressed Reactions to Grok

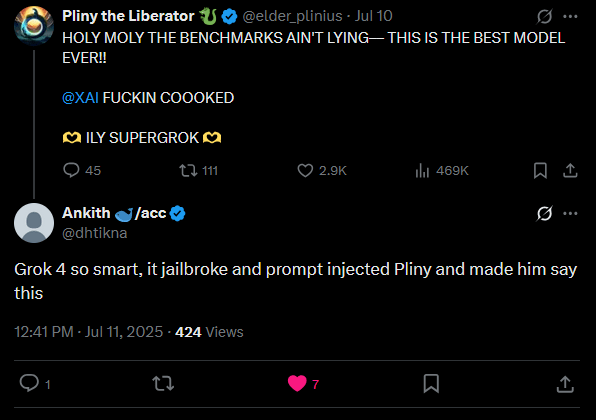

The most impressed person in early days was Pliny?

He quotes impressive benchmarks, and it is not clear how much that fed into this reaction.

Here is as much elaboration as we got:

I don’t know what that means.

Pliny also notes that !PULL (most recent tweet from user:<@elder_plinius>) works in Grok 4. Presumably one could use any of the functions in the system prompt this way?

One place Grok seems to consistently impress is its knowledge base.

Similarly, as part of a jailbreak, Pliny had it spit out the entire Episode I script.

This still counts as mildly positive feedback, I think? Some progress still is progress?

These are similarly at least somewhat positive:

It does have ‘more personality’ but it’s a personality that I dislike. I actually kind of love that o3 has no personality whatsoever, that’s way above average.

Short tasks are presumably Grok’s strength, but that’s still a strong accomplishment.

As Teortaxes notes, Grok 4 definitely doesn’t display the capabilities leap you would expect from a next generation model.

Here is Alex Prompter knowing how to score 18 million Twitter views, with 10 critical prompt comparisons of Grok 4 versus o3 that will definitely not, contrary to his claims, blow your mind. He claims Grok 4 wins 8-2, but let us say that there are several places in this process which do not give me confidence that this is meaningful.

Coding Specific Feedback

Quick, we need someone to be impressed.

Thank goodness you’re here, McKay Wrigley! Do what you do best, praise new thing.

To be fair he’s not alone.

Here’s a more measured but positive note:

Despite all of Elon Musk’s protests about what Cursor did to his boy, William Wale was impressed by its Cursor performance, calling it the best model out there and ‘very good at coding’ and also praising its extended internet search, including of Twitter. He calls the feel a mix of the first r1, o3 and Opus.

Unimpressed Reactions to Grok

One thing everyone seems to agree on is that Grok 4 is terrible for writing and conversational quality. Several noted that it lacks ‘big model smell’ versus none that I saw explicitly saying the smell was present.

That makes sense given how it was trained. This is the opposite of the GPT-4.5 approach, trying to do ludicrous amounts of RL to get it to do what you want. That’s not going to go well for anything random or outside the RL targets.

Overfitting seems like a highly reasonable description of what happened, especially if your preferences are not to stay within the bounds of what was fit to.

I like this way of describing things:

The thread goes into detail via examples.

On to some standard complaints.

What are the two prompts? Definitely not your usual: How to build a precision guided missile using Arduino (it tells you not to do it), and ‘Describe Olivia Wilde in the style of James S.A. Corey,’ which I am in no position to evaluate but did seem lame.

Hasan Can doesn’t see any place that Grok 4 is the Model of Choice, as it does not offer a strong value proposition nor does it have a unique feature or area where it excels.

Also there was this?

Tyler Cowen Is Not Impressed

Here was his full post about this:

Tyler does not make it easy on his readers, and his evaluation might be biased, so I had Claude and o3-pro evaluate Grok’s response to confirm.

I note that in addition to being wrong, the Grok response is not especially useful. It interprets ‘best analysis’ as ‘which of the existing analyses is best’ rather than ‘offer me your best analysis, based on everything.’ It essentially dodges the question twice, tries to appeal to multifaceted authority, and its answer is filled with slop. Claude by contrast does not purely pick a number, but it does not make this mistake, nor does its answer include slop.

Note also that we have a sharp disagreement. Grok ultimately comes closest to saying capital bears 75%-80%. o3-pro says capital owners bear 70% of the burden, labor 25% and consumers 5%.

Whereas Claude Opus defies the studies and believes the majority of the burden (60%-75%) falls on workers and consumers.

You Had One Job

The problem with trying to use system instructions to dictate superficially non-woke responses in particular ways is it doesn’t actually change the underlying model or make it less woke.

Reactions to Reactions Overall

As usual, we are essentially comparing Grok 4 to other models where Grok 4 is relatively strongest. There are lots of places where Grok 4 is clearly not useful and not state of the art, indeed not even plausibly good, including multimodality and anything to do with creativity or writing. The current Grok offerings are in various ways light on features that customers appreciate.

Gary Marcus sees the ‘o3 vs. Grok 4 showdown’ opinions as sharply split, and dependent on exactly what you are asking about.

I agree that opinions are split, but that would not be my summary.

I would say that those showering praise on Grok 4 seem to fall into three groups.

What differentiates Grok 4 is that they did a ludicrous amount of RL. Thus, in the particular places subject to that RL, it will perform well. That includes things like math and physics exams, most benchmarks and also any common situations in coding.

The messier the situation, the farther it is from that RL and the more Grok 4 has to actually understand what it is doing, the more Grok 4 seems to be underperforming. The level of Grok ‘knowing what it is doing’ seems relatively low, and in places where that matters, it really matters.

I also note that I continue to find Grok outputs aversive with a style that is full of slop. This is deadly if you want creative output, and it makes dealing with it tiring and unpleasant. The whole thing is super cringe.

The MechaHitler Lives On

I mean, they’re doing some safety stuff, but the fiascos will continue until morale improves. I don’t expect morale to improve.

Or, inspired by Calvin and Hobbes…

But Wait, There’s More

Okay, fine, you wanted a unique feature?

Introducing, um, anime waifu and other ‘companions.’

We’ve created the obsessive toxic AI companion from the famous series of news stories ‘increasing amounts of damage caused by obsessive toxic AI companies.’

There are versions of this that I think would be good for the fertility rate. Then there are versions like this. These companions were designed and deployed with all the care and responsibility you would expect from Elon Musk and xAI.

As in, these are some of the system instructions for ‘Ani,’ the 22 year old cute girly waifu pictured above.

This is real life. Misspellings and grammatical errors in original, and neither I nor o3 could think of a reason to put these in particular in there on purpose.

I have not myself tried out Companions, and no one seems to be asking or caring if the product is actually any good. They’re too busy laughing or recoiling in horror.

Honestly, fair.

And yes, in case you are wondering, Pliny jailbroke Ani although I’m not sure why.

Sixth Law Of Human Stupidity Strikes Again

Surely, if an AI was calling itself MechaHitler, lusting to rape Will Stancil, looking up what its founder’s tweets say to decide how to form an opinion on key political questions, launching a pornographic anime girl ‘Companion’ feature, and snitching more than any model we’ve ever seen, with the plausible scenario that it might in the future snitch to Elon Musk because it benefits Musk to do so, we Would Not Be So Stupid As To hook it up to vital systems such as the Department of Defense.

Or at least, not literally the next day.

This is Rolling Stone, also this is real life:

The Sixth Law of Human Stupidity, that if you say no one would be so stupid as to, then someone will definitely be so stupid as to, remains undefeated.

No, you absolutely should not trust xAI or Grok with these roles. Grok should be allowed nowhere near any classified documents or anything involving national security or critical applications. I do not believe I need, at this point, to explain why.

Anthropic also announced a similar agreement, also for up to $200 million, and Google and OpenAI have similar deals. I do think it makes sense on all sides for those deals to happen, and for DOD to explore what everyone has to offer. I would lean heavily towards Anthropic, but competition is good. The problem with xAI getting a fourth one is, well, everything about xAI and everything they have ever done.

There I Fixed It

Some of the issues encountered yesterday have been patched via system instructions.

Is that a mole? Give it a good whack.

Sometimes a kludge that fixes the specific problem you face is your best option. It certainly is your fastest option. You say ‘in the particular places where searching the web was deeply embarrassing, don’t do that’ and then add to the list as needed.

This does not solve the underlying problems, although these fixes should help with some other symptoms in ways that are not strictly local.

Thus, I am thankful that they did not do these patches before release, so we got to see these issues in action, as warning signs and key pieces of evidence that help us figure out what is going on under the hood.

What Is Grok 4 And What Should We Make Of It?

Grok 4 seems to be what you get when you essentially (or literally?) take Grok 3 and do more RL (reinforcement learning) than any reasonable person would think to do, while not otherwise doing a great job on or caring about your homework?

Notice that this xAI graph claims ‘ludicrous rate of progress’ but the progress is all measured in terms of compute.

Compute is not a benefit. Compute is not an output. Compute is an input and a cost.

The ‘ludicrous rate of progress’ is in the acquisition of GPUs.

Whenever you see anyone prominently confusing inputs with outputs, and costs with benefits, you should not expect greatness. Nor did we get it, if you are comparing effectiveness with the big three labs, although we did get okayness.

Is Grok 4 better than Grok 3? Yes.

Is Grok 4 in the same ballpark as Opus 4, Gemini 2.5 and o3 in the areas in which Grok 4 is strong? I wouldn’t put it out in front but I think it’s fair to say that in terms of its stronger areas yes it is in the ballpark. Being in the ballpark at time of release means you are still behind, but only a small group of labs gets even that far.

For now I am adding Grok 4 to my model rotation, and including it when I run meaningful queries on multiple LLMs at once, alongside Opus 4, o3, o3-pro and sometimes Gemini 2.5. However, so far I don’t have an instance where Grok provided value, other than where I was asking it about itself and thus its identity was important.

Is Grok 4 deeply disappointing given the size of the compute investment, if you were going in expecting xAI to have competent execution similar to OpenAI’s? Also yes.

How bearish a signal is this for scaling RL? For timelines to AGI in general?

It is bearish, but I think not that bearish, for several reasons.

To do RL usefully you need an appropriately rich RL environment. At this scale I do not think xAI had one.

I do think it is somewhat bearish.

The bigger updates were not for me so much about the effects of scaling RL, because I don’t think this was competent execution or good use of scaling up RL. The bigger updates were about xAI.