There is too much fluff in this article, and it reads like AI slop. Maybe it is, but in either case this is because the fluff and the content do not support each other. Taking an example quote:

> Before you can escape a jungle, you have to know its terrain. The Norwood-Hamilton scale is the cartographer’s map for male pattern baldness (androgenetic alopecia, or AGA), dividing male scalps into a taxonomy of misery, from Norwood I (thick, untamed rainforest) to Norwood VII (Madagascar, post-deforestation). It’s less a continuum and more a sequence of discrete way stations, each representing a quantum of hair loss, each with its own distinctive ecosystem of coping strategies.

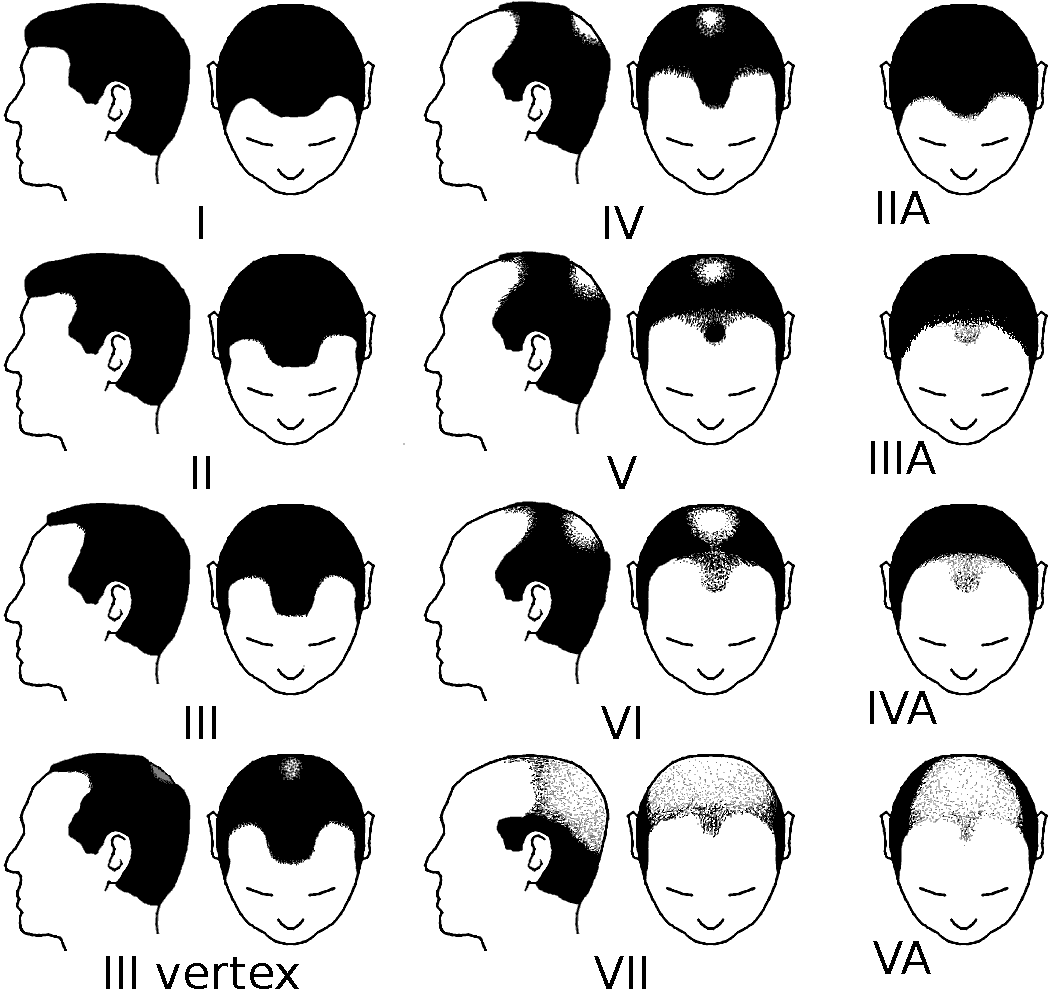

You do not need the jungle metaphor here, what you're explaining is simple and easy to explain, and would be much more clearly explained if you just said what you mean. Is "The Norwood-Hamilton" scale even real? It looks like it is! Here is the picture Wikipedia shows:

This picture is infinitely more informative than this paragraph, and seeing this picture I can say with confidence your jungle metaphor is less than informative. In what sense is VII here in any way related to "Madagascar, post-deforestation" other than that there are fewer hairs/trees than in I?

You claim "It’s less a continuum and more a sequence of discrete way stations", which I didn't believe before this picture, because it sounded like bullshit fluff. Is this metaphorical? I thought, "Surely it is literally a continuum." But no, apparently there are relatively discrete & reproducible stages male-pattern baldness goes through, as claimed by Wikipedia. Another instance of your fluff making your post less than informative.

Apply this criticism to pretty much every paragraph.

I did strong-downvote, but I want to be clear that I do think your comments are good, and I think your future posts have the potential to be good. This is your first post, and it's understandable it won't be so great!

I read the whole thing and disagree. I think the disconnect might be what you want this article to be (a guide to baldness) vs what it is (the author's journey and thoughts around baldness mixed with gallows humor regarding his potential fate).

The Norwood/forest comparison gets used consistently throughout (including the title) and is the only metaphor used this way. Whether you like this comparison or not, it's not a case of AI injecting constant flowery language.

That said, setting audience expectations is also an important part of writing, and I think "A Rationalist's Guide to Male Pattern Baldness" is probably setting the wrong expectation.

I upvoted since I thought it was interesting and I learned a little bit.

> I think the disconnect might be what you want this article to be (a guide to baldness) vs what it is (the author's journey and thoughts around baldness mixed with gallows humor regarding his potential fate) [...] That said, setting audience expectations is also an important part of writing, and I think "A Rationalist's Guide to Male Pattern Baldness" is probably setting the wrong expectation.

Yeah, good point. I agree, I think this is the problem.

> The Norwood/forest comparison gets used consistently throughout (including the title) and is the only metaphor used this way. Whether you like this comparison or not, it's not a case of AI injecting constant flowery language.

I disagree. There are other parts of the article, independent of that particular pun, which I think are too flowery and use too many & too mixed metaphors, but I don't want to drag on this conversation & point out all the problems I personally have with the article. Very clearly others disagree with me, so my criticism seems likely not representative here.

Thank you. I'm particularly taken aback by the accusation of this being "AI slop". I obviously used Gemini 2.5 Pro to help structure what was initially a more rambly draft, and to help format the citations. That's, at least in my opinion, an entirely unobjectionable use of AI.

Plus, I'm a big fan of Scott, and I try my best to make the things I write both informative and entertaining. If someone came into this looking for an entirely dry overview of the etiology and treatment of androgenetic alopecia, they'd be better off consulting a textbook.

(I even went to the effort, after this accusation, of pasting a dump of my comment history after redaction, and the Substack article, into an LLM. It could easily tell that this was the same author, with the same tone and stylistic tendencies. Of course, I'm more careful with my phrasing and speak more neutrally here, but AI slop?)

I could probably have made a better title, but I decided to cross-post on LW because it's one of the few things I've written that I thought might make for a decent fit. Slightly different albeit overlapping core audience by default!

I have read the entire piece and it didn't feel like AI slop at all. In fact, if I hadn't been told, I wouldn't have suspected that AI was involved here, so well done!

Yeah, sorry, I think I had my very negative reaction mostly because of the title. Looking at the other comments & the upvotes on the article which are not mine, clearly my view here is non-representative of the rest of LessWrong.

> Thank you. I'm particularly taken aback by the accusation of this being "AI slop". I obviously used Gemini 2.5 Pro to help structure what was initially a more rambly draft, and to help format the citations. That's, at least in my opinion, an entirely unobjectionable use of AI.

LessWrong has pretty high standards for AI co-written content. From the new user guidelines:

> Do not submit unmarked AI-written or co-authored content. Our policy on AI-assisted writing goes into more detail, but by default we reject submissions from new users that look like they were written by an AI. More established users are given more freedom to incorporate AI into their writing flows. There are exceptions if you are literally an autonomous AI agent trying to share information important for the future of humanity.

And from the policy:

> Humans Using AI as Writing or Research Assistants
>
> Prompting a language model to write an essay and copy-pasting the result will not typically meet LessWrong's standards. Please do not submit unedited or lightly-edited LLM content. You can use AI as a writing or research assistant when writing content for LessWrong, but you must have added significant value beyond what the AI produced, the result must meet a high quality standard, and you must vouch for everything in the result.
>
> A rough guideline is that if you are using AI for writing assistance, you should spend a minimum of 1 minute per 50 words (enough to read the content several times and perform significant edits), you should not include any information that you can't verify, haven't verified, or don't understand, and you should not use the stereotypical writing style of an AI assistant.

I think this post does meet the standard, though I am not sure; it depends on how much Gemini 2.5 helped you structure things from an initial draft.

Pretty much all of the actual content of the essay is human-written. The only involvement of any kind of LLM was proof-reading and suggestions on how to structure it into a cohesive whole. I think I used o3 to check my citations. That's it.

I'm quite confused by a lot of the criticism here. A lot of the verbosity is simply my style, as well as an intentional attempt to inject levity into what can otherwise be a grim/dry subject.

Like, I'm genuinely at a loss. I just thought that the pun Nor-*wood* was cute, and occasionally worth alluding to.

I wrote the post with the background assumption that most prospective readers would be passingly familiar with the Norwood scale, though I agree that I should have thrown in a picture.

But arguing about an obvious metaphor? Really? At the end of the day, hair strands are discrete entities; you don't have fewer even after a haircut. You can assume the Norwood scale is a simplification if there aren't 100k stages in it.

I have no control over your ability to strong downvote, but I can register my disagreement that it was remotely warranted.

Hm, I read "A rationalist's guide to male pattern baldness" and thought the post would be in the same genre as other LessWrong "guide" articles, and much less a personal blog post. Most centrally, the guide to rationalist interior decorating. I read the article with this expectation, and felt I mostly got duped. If it had, for example, just been called "Escaping the Jungles of Norwood", I wouldn't have read it, and if I had, I would not have been as upset.

I still maintain my criticism holds even for a personal blog post, but I would not have strong-downvoted it, just ignored it. Puns and playfulness can be good, but only when they add to the informational content (e.g. by making it more memorable), or as brief respites.

The question, “Will I go bald?” has haunted many a rationalist (and more than a few mirrors), but rarely receives a genuinely Bayesian treatment. In this essay, I combine personal risk factors, family history, and population-level genetics to estimate my absolute risk of androgenetic alopecia - laying out priors, likelihoods, and posteriors with as much fidelity as the literature allows. Includes explicit probability estimates, references, and a meta-discussion on the limits of current predictive models. (And a lot of jokes that may or may not land.) Feedback on both methodology and inference welcome.