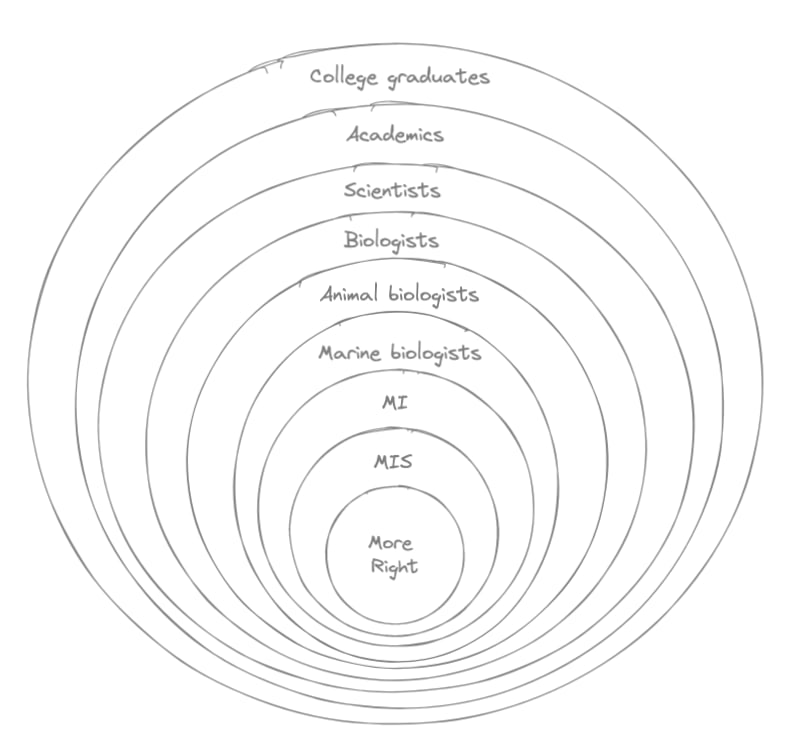

Bob: Anyway, if we continue this process with the concentric circles, I suppose we can look at marine biologists next. And then animal biologists. Then biologists. Then, I don’t know, scientists. Academics. College graduates.

Alice: So everyone gets more and more expert as you head towards the middle...until you hit More Right. More Right was founded by someone with no qualifications in the field, and still mostly consists of people who were trained by him.

Fair point. I agree that More Right probably doesn't have more expertise than MIS researchers. I'm not even sure that they are more concerned than MIS researchers. I wasn't sure how to handle that, though, and given the whole "all models are wrong, some are useful" thing, I didn't want to abandon the diagram.

Yeah, I think the diagram would be much more accurate if the circles didn't perfectly overlap, assuming that this is intended to be something like a Venn diagram. The circles are all close along the bottom edge... what if the bottom edges all touched and MoreRight stuck out the bottom? Not all MoreRighters fall into the categories of all the other circles. That's part of what makes this tricky, and we shouldn't gloss over it.

Alice: So... you're saying that the reasoning doesn't actually make sense to you?

Bob: I guess so.

Alice: Wait, so then why would you believe in this sea monster stuff?

I kind of think Alice is actually correct here? If you have time to evaluate the actual arguments, then you pick the ones that make the most sense, taking into account all the empirical evidence you have. If you don't have time, pick the one that's made the best predictions so far. And if there's insufficient data for even that, you go with the "default mainstream consensus" position. If the reasoning about AI ruin didn't make sense to me, I wouldn't believe in it anyway; I'd believe something like "predicting the outcome of a future technology is nearly impossible. Though we can't rule out human extinction from AI, it's merely one of a large number of possibilities."

To steelman what Bob's saying here, maybe he can't understand the detailed mechanisms that cause machine learning systems to be difficult to point at subtle goals, but he can empirically verify it by observing how they sometimes misbehave. And he can empirically observe ML systems getting progressively more powerful over time. And so he can understand the argument that if the systems continue to become more powerful, as they have done in the past, eventually the fact that we can't point them at subtle things like "not ruining everything humans care about" becomes a problem. That would be fine, you don't have to understand every last detail if you can check the predictions of the parts you don't understand. But if Bob's just like, "I dunno, these Less Wrong guys seem smart", that seems like a bad way to form beliefs.

I disagree. I think it's hard to talk about this in the abstract, so how about I propose a few different concrete situations?

How do you feel about each of those situations?

It seems like hypothetical-you is making a reasonable decision in all those situations. I guess my point was that us people who worry about AI ruin don't necessarily get to be seen as the group of experts who are calling these sports matches. Maybe the person most analogous to the tennis expert predicting Joe will win the match is Yann LeCun, not Eliezer Yudkowsky.

Consider two tennis pundits, one of whom has won prestigious awards for tennis punditry, and is currently employed by a large TV network to comment on matches. The other is a somewhat-popular tennis youtuber. If these two disagree about who's going to win the match, with the famous TV commentator favouring Joe and the youtuber favouring Bill, a total tennis outsider would probably do best by going with the opinion of the famous guy. In order to figure out that you should instead go with the opinion of the youtuber, you'd need to know at least a little bit about tennis in order to determine that the youtuber is actually more knowledgeable.

Gotcha, that makes sense. It sounds like we agree that the main question is of who the experts are and how much weight one should give to each of them. It also sounds like we disagree about what the answer to that question is. I give a lot of weight to Yudkowsky and adjacent people whereas it sounds like you don't.

Consider two tennis pundits, one of whom has won prestigious awards for tennis punditry, and is currently employed by a large TV network to comment on matches. The other is a somewhat-popular tennis youtuber. If these two disagree about who's going to win the match, with the famous TV commentator favouring Joe and the youtuber favouring Bill, a total tennis outsider would probably do best by going with the opinion of the famous guy. In order to figure out that you should instead go with the opinion of the youtuber, you'd need to know at least a little bit about tennis in order to determine that the youtuber is actually more knowledgeable.

Agreed. But I think the AIS situation is more analogous to if the YouTuber studied some specific aspect of tennis very extensively that traditional tennis players don't really pay much attention to. Even so, it still might be difficult for an outsider to place much weight on the YouTuber. It's probably pretty important that they can judge for themselves that the YouTuber is smart and qualified. It also helps that the YouTuber received support, funding, and endorsements from various high-prestige people.

I give a lot of weight to Yudkowsky and adjacent people whereas it sounds like you don't.

To be clear, I do give a lot of weight to Yudkowsky in the sense that I think his arguments make sense and I mostly believe them. Similarly, I don't give much weight to Yann LeCun on this topic. But that's because I can read what Yudkowsky has said and what LeCun has said and think about whether it made sense. If I didn't speak English, so that their words appeared as meaningless noise to me, then I'd be much more uncertain about who to trust, and would probably defer to an average of the opinions of the top ML names, e.g. Sutskever, Goodfellow, Hinton, LeCun, Karpathy, Bengio, etc. The thing about closely studying a specific aspect of AI (namely alignment) would probably get Yudkowsky and Christiano's names onto that list, but it wouldn't necessarily give Yudkowsky more weight than everyone else combined. (I'm guessing here, on behalf of a hypothetical non-English-speaking me who somehow has translations of everyone's bottom-line position on the topic, but not of their arguments.) Basically, the intuition here is that difficult technical achievements like AlexNet, GANs, etc. are some of the easiest things to verify from the outside. It's hard to tell which philosopher is right, but easy to tell which scientist can build a thing for you that will automatically generate amusing new animal pictures.

If I didn't speak English, so that their words appeared as meaningless noise to me, then I'd be much more uncertain about who to trust, and would probably defer to an average of the opinions of the top ML names, e.g. Sutskever, Goodfellow, Hinton, LeCun, Karpathy, Bengio, etc.

Do you think it'd make sense to give more weight to people in the field of AI safety than to people in the field of AI more broadly?

I would, and I think it's something that generally makes sense. E.g., I don't know much about food science, but on a question involving dairy, I'd trust food scientists who specialize in dairy more than I would trust food scientists more generally. But I would give some weight to non-specialist food scientists, as well as chemists in general, as well as physical scientists in general, with the weight decreasing as the person gets less specialized.

This is great. This is perhaps the best template for a successful discussion that I've seen.

The key component here, IMO, is that Bob is nice and epistemically humble. When accused of saying crazy things, he doesn't get defensive.

These things are hard to do in real conversations, because even rationalists have emotions.

Alice is awfully agreeable here, but I think that's plausible since she's friends with Bob, and Bob is being nice, humble, and agreeable.

Alice failed to mention in her cult-ish criticism the role of YE, the founder of MoreRight, who is kind of a marine biologist. I mean, he isn't exactly a marine biologist, but everyone agrees he is very smart and very interested in marine biology, so this must count, right? So, when some prominent marine biologist disagrees with YE, usually the whole MoreRight community agrees with YE, or agrees that YE was misunderstood, or that he is playing some deeper game, something like this. Anyway, he's got a plan, for sure.

So yes, Alice should have mentioned that.

Alice: Hey Bob, how's it going?

Bob: Good. How about you?

Alice: Good. So... I stalked you and read your blog.

Bob: Haha, oh yeah? What'd you think?

Alice: YOU THINK SEA MONSTERS ARE GOING TO COME TO LIFE, END HUMANITY, AND TURN THE ENTIRE UNIVERSE INTO PAPER CLIPS?!?!?!?!?!??!?!?!?!

Bob: Oh boy. This is what I was afraid of. This is why I keep my internet life separate from my personal one.

Alice: I'm sorry. I feel bad about stalking you. It's just...

Bob: No, it's ok. I actually would like to talk about this.

Alice: Bob, I'm worried about you. I don't mean this in an offensive way, but this MoreRight community you're a part of, it seems like a cult.

Bob: Again, it's ok. I won't be offended. You don't have to hold back.

Alice: Good. I mean, c'mon man. This sea monster shit is just plainly crazy! Can't you see that? It sounds like a science fiction film. It's something that my six year old daughter might watch and believe is real, and I'd have to explain to her that it's make believe. Like the Boogeyman.

Bob: I hear ya. That was my first instinct as well.

Alice: Oh. Ok. Well, um, why do you believe it?

Bob: Sigh. I gotta admit, I mean, there's a lot of marine biologists on MoreRight who know about this stuff. But me personally, I don't actually understand it. Well, I have something like an inexperienced hobbyist's level of understanding.

Alice: So... you're saying that the reasoning doesn't actually make sense to you?

Bob: I guess so.

Alice: Wait, so then why would you believe in this sea monster stuff?

Bob: I...

Alice: Are you just taking their word for it?

Bob: Well...

Alice: Bob!

Bob: Alice...

Alice: Bob this is so unlike you! You're all about logic and reason. And you think people are all dumb.

Bob: I never said that.

Alice: You didn't have to.

Bob: Ok. Fine. I guess it's time to finally be frank and speak plainly.

Alice: Yeah.

Bob: I do kinda think that everyone around me is dumb. For the longest time it felt like I was the only one who is truly sane. Other people certainly do smart things, but sooner or later, it's just... they demonstrate that something deep inside their brain is just not screwed on properly. Their sanity doesn't truly generalize.

Alice: Ok.

Bob: But this MoreRight community...

Alice: You're kidding me.

Bob: No, I'm not. Alice — they're sane.

Alice: Oh my god.

Bob: Alice I'm not in a cult. Alice! Stop looking so scared. It's not a big deal.

Alice: It is Bob. I'm legitimately worried about you right now. Like, do I need to go talk to the rest of the group? Your brother? Your mom? Do we need to have an intervention? These are the thoughts that are running through my mind right now.

Bob: Ok. I mean, I appreciate that. I don't agree, but I understand the position you're in and what it looks like to you. Can you give me a chance to explain though?

Alice: Sigh. Yeah. I suppose. As crazy as you're sounding right now, and as much as I hate to admit it, everyone knows how smart you are Bob. Deep down, we all really respect you.

Bob: Wow. Thank you.

Alice: Yeah, yeah. Ok, go for it. My mind is open. Explain to me why you think Sea Monsters are going to take over the world.

Bob: Ok, well, I think a place to start is that you can't always understand the reasoning for things yourself. Oftentimes you are just trusting others.

Alice: I guess so.

Bob: One example is the moon landing. We don't really have direct evidence of it. At least you and I don't. You and I are trusting the reports of others.

Alice: Yeah, I agree with that.

Bob: Another example is something like, I don't know, cellular respiration in biology. Oxygen and sugar go in, CO2 and H2O come out, along with ATP.

Alice: Sure.

Bob: Here's a better one. Suppose that economists predict that housing prices will fall in six months. You're not really equipped to understand the reasoning yourself, you kinda just have to trust them.

Alice: Yeah.

Bob: But you have to be smart about who you decide to trust.

Alice: Right.

Bob: Sometimes you can look at their track record. Or level of prestige. But other times you just have to judge for yourself how smart they are.

Alice: I guess so. I'm with you. I want to see where this is headed.

Bob: Ok well, I think of it like a series of concentric circles. The people on MoreRight mostly agree about the sea monsters. That's the innermost circle. Then outside of that are people in this academic field called marine intelligence safety (MIS). They also all mostly agree about the danger, although they're a little less concerned than the MoreRight folks.

Alice: Wait, MIS? I've never heard of that. It's been a few years since medical school, but I was pretty into the academic side of biology.

Bob: Well, it's a bit of a fringe field. There aren't too many academics into it. There are some, including some who are quite prominent, e.g. at places like Oxford, but most of the research is happening outside of academia. Like at the Marine Intelligence Research Institute (MIRI).

Alice: Hmm, ok. It's a little fishy that so much of it is happening outside of academia, but Oxford is pretty good. Although even at prestigious places like that some researchers can be quacks, so I'm still skeptical.

Bob: That's fair.

Alice: The other thing is that these MIS people have gotta be pretty biased. I mean, if they chose to enter this field, of course they're going to be concerned about it.

Bob: Also fair.

Alice: Ok, just wanted to say those two things. Continue.

Bob: Sure. So if we continue the concentric circle stuff, we can look at people who study marine intelligence (MI) next. Marine intelligence is actually a pretty established academic field. It's been around for a few decades.

Alice: Yeah. I'm somewhat familiar with MI.

Bob: MI people are less concerned than MIS and MoreRight people. I feel like it's maybe 50/50. Some MI people are concerned, some aren't.

Alice: Ok.

Bob: Wait, that's not true. A large majority of them believe that marine life will become really, really smart — comparable to humans — and that it will change the world. And basically everyone acknowledges that, like any powerful technology, it is dangerous and could end up doing more harm than good. I think the disagreement is in two places. 1) Some people think the marine life will become "super-intelligent" and blow past us humans. 2) Some people are pretty optimistic that things will go well, others are more pessimistic.

Alice: Wow. Jeez. I'll have to ask some of my doctor friends about this. Or maybe some of my old university professors.

Bob: Yeah. For (1) I'm just ballparking it, but maybe 30% think this is a real risk and 70% don't. For (2), maybe it's 70% optimistic, 30% pessimistic, and ~100% "we need to be careful".

Alice: That's pretty crazy. Ok. You've got my attention now.

Bob: Yup. And some of the people who are pretty concerned are at the top of the field. They've written major textbooks and are well respected and stuff.

Bob: Anyway, if we continue this process with the concentric circles, I suppose we can look at marine biologists next. And then animal biologists. Then biologists. Then, I don't know, scientists. Academics. College graduates.

Bob: As you get further and further away from the center, fewer and fewer people are concerned. But there are always prominent people who are concerned. Like at the level of scientists, Stephan Hawkeye has expressed major concern. And that playboy who thinks he's Tony Stark, Eli Dusk, he's also very concerned.

Alice: Haha, well Eli Dusk is a little crazy. But Hawkeye is one of the most well respected scientists of our generation. He discovered shit about black holes and stuff. Wow.

Bob: Yeah.

Alice: Well I've got to say Bob, I don't think you're crazy anymore. At first I was worried that it was just this little MoreRight cult, but I'm seeing now that there are some prominent and well respected scientists who also believe it. I'm not sure what to think, but you've at least got my attention.

Bob: Yeah. But honestly, even if it was just MoreRight and MIS people who were concerned, maybe that's enough? They're the ones who actually study this stuff the most closely. They're looking at it with a magnifying glass while others are just glancing over their shoulder.

Alice: Huh.

Bob: A magnifying glass isn't a microscope though, so you can't be too confident. You need to maintain humility and acknowledge you could easily be wrong when you just have a magnifying glass. But they do that. And after reading their blog posts for the past however many years, I'm just very impressed with them intellectually.

Alice: I hear ya. I suppose I shouldn't dismiss them without having given them a chance. Even though I like to mess with you, I think you do have pretty good judgement for this sort of stuff.

Bob: That makes sense. Just consider whether you'd prefer the red pill over the blue one. If sea monsters are real, would you want to know?