My suspicion that something was weird about one of OpenAI's GPT-5 coding examples[1] seems to be confirmed. It was way better than anything their model was able to produce over several dozen replication attempts under a wide variety of configurations.

They've run the same example prompt for their GPT-5.2 release, and their released output is vastly more simplistic than the original, which is well in line with what I've observed from GPT-5 and other LLMs.

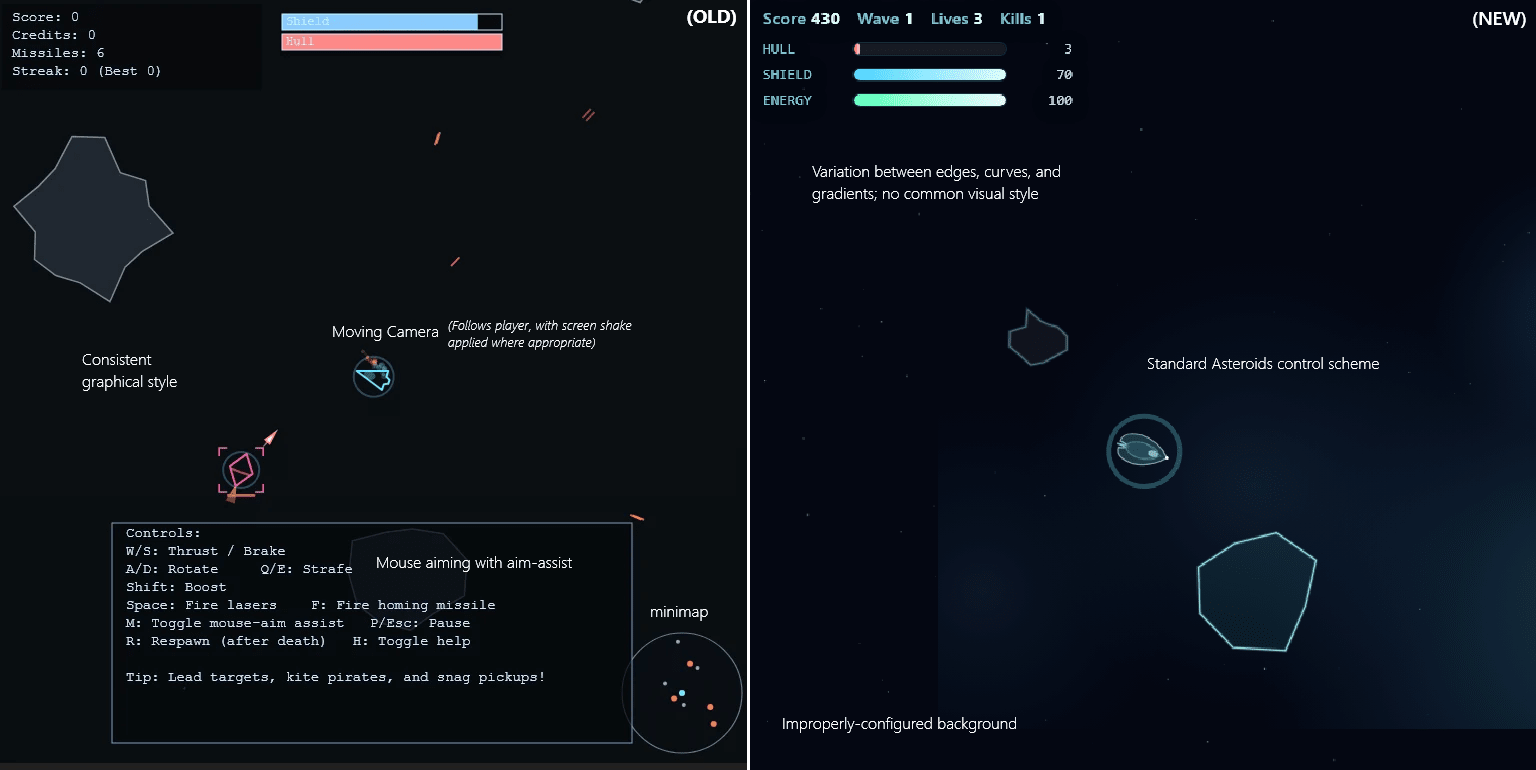

I'd encourage spending 30 seconds trying each example to get a handle on the qualitative difference. The above image doesn't really do it justice.

[1] See the last section, "A brief tangent about the GPT 5.1 example".

My hot take about crypto is that NFTs (and related things) weren't Beanie Babies for zoomers, they were sports betting for social media clout. Nobody[1] expected their grandchildren to inherit their monkey pictures, and lots of ordinary people really did make lots of money by winning their bets.

It worked almost exactly like Fantasy Football, but with influencers instead of athletes. You saw a guy make a post announcing an NFT, and you quietly estimated how successful he would be at promoting it based on what you knew about him. Once you had a number, if you liked it, you decided on a spread to bet on. A conservative bet that the outcome would land no worse than half a standard deviation below your estimate (-1/2 sd), assuming your initial estimate was on target, was like taking an easy spread at low odds.
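To put a rough number on the "easy spread" idea, here is a minimal sketch. It assumes the outcome is normally distributed around your estimate, which is my simplification and not something real NFT prices are known to follow; the function names are made up for illustration.

```python
import math

def normal_cdf(z: float) -> float:
    """Standard normal CDF, computed from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def win_probability(spread_in_sd: float) -> float:
    """Chance the outcome beats (estimate + spread_in_sd * sd).

    Assumption: outcome ~ Normal(estimate, sd), i.e. your estimate is on target.
    """
    return 1.0 - normal_cdf(spread_in_sd)

conservative = win_probability(-0.5)  # bet the outcome beats estimate - 0.5 sd
even = win_probability(0.0)           # bet the outcome beats the estimate itself
aggressive = win_probability(0.5)     # bet the outcome beats estimate + 0.5 sd

print(f"conservative: {conservative:.3f}")
print(f"even:         {even:.3f}")
print(f"aggressive:   {aggressive:.3f}")
```

Under that assumption the conservative bet wins roughly 69% of the time, which is why it pays like an easy spread: you are usually right, so the payoff for being right has to be small.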

There was, of course, an audience participation element[2] that made it more engaging. If you promoted something you bought, you were throwing your own clout into the ring, and your spreads needed to account for the second-order effects of buyers promoting their purchases. A smaller influencer with reliably influential followers might be a 'sleeper pick' compared to a big influencer whose followers are mostly lurkers. You could bet on how cool you were, or how cool your friends were, and get a quantifiable answer, and a lot of the big losses came from people overestimating themselves in this respect.

I've been saying for a while that fine-tuning of LLMs amounts to a search through the set of personas already encoded in the base model rather than the development of novel patterns of behavior, which is why things like genetic algorithms work for LLM optimization when you really wouldn't expect them to[1].

Now, I think we have much clearer evidence of this. A 2025 paper showed that applying RL to optimize LLMs' performance on programming tasks did improve results, but it did not bring about any behavior that wasn't present in the base model. It just made that behavior more likely than it was before, and made undesired behavior less likely.
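A toy way to see what "more likely, but not new" would look like in pass@k terms. This is a sketch under my own assumptions, not the paper's method: each task has a fixed per-sample success probability, and `sharpen` is a hypothetical stand-in for RL that only rescales the odds of success.

```python
def pass_at_k(p: float, k: int) -> float:
    """Probability that at least one of k independent samples succeeds."""
    return 1.0 - (1.0 - p) ** k

def sharpen(p: float, strength: float) -> float:
    """Toy stand-in for RL: multiply the odds of success by `strength`.

    Behavior the base model never produces (p == 0) stays at zero.
    """
    if p <= 0.0:
        return 0.0
    if p >= 1.0:
        return 1.0
    odds = strength * p / (1.0 - p)
    return odds / (1.0 + odds)

# Hypothetical per-sample success rates of a base model on three tasks.
base_rates = [0.0, 0.02, 0.3]
tuned_rates = [sharpen(p, strength=20.0) for p in base_rates]

for p_base, p_tuned in zip(base_rates, tuned_rates):
    print(
        f"base pass@1={pass_at_k(p_base, 1):.3f} "
        f"tuned pass@1={pass_at_k(p_tuned, 1):.3f} "
        f"base pass@256={pass_at_k(p_base, 256):.3f} "
        f"tuned pass@256={pass_at_k(p_tuned, 256):.3f}"
    )
```

In this toy setup the tuned model wins clearly at pass@1, the base model catches up once you sample enough, and the task the base model can never solve stays unsolved no matter how hard you sharpen.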

[1] Backpropagation is generally believed to be vital for efficiently optimizing models with very high parameter counts. If LLM fine-tuning effectively works by tweaking a smaller number of emergent 'parameters' that bias the model's priors on the likelihoods that different 'personas' are 'speaking' as it generates text, then that explains why we can get away with using evolution instead.
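Here is a minimal sketch of why gradient-free search is plausible if the effective search space is that small. Everything in it is an illustrative assumption: the "model" is just a softmax mixture over four hypothetical personas with fixed quality scores, and the optimizer is a bare-bones elitist mutation loop rather than any published method.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def fitness(logits, persona_quality):
    """Expected task success when personas 'speak' with softmax(logits) weights."""
    weights = softmax(logits)
    return sum(w * q for w, q in zip(weights, persona_quality))

def evolve(persona_quality, generations=200, children=8, sigma=0.5, seed=0):
    """Elitist mutation search: keep a random perturbation only if it scores higher."""
    rng = random.Random(seed)
    best = [0.0] * len(persona_quality)  # start with every persona equally likely
    best_fit = fitness(best, persona_quality)
    for _ in range(generations):
        for _ in range(children):
            child = [x + rng.gauss(0.0, sigma) for x in best]
            child_fit = fitness(child, persona_quality)
            if child_fit > best_fit:
                best, best_fit = child, child_fit
    return best, best_fit

# Hypothetical success rate of each persona on the target task.
PERSONA_QUALITY = [0.1, 0.2, 0.9, 0.3]

start_fit = fitness([0.0] * len(PERSONA_QUALITY), PERSONA_QUALITY)
final_logits, final_fit = evolve(PERSONA_QUALITY)
print(f"start fitness: {start_fit:.3f}, final fitness: {final_fit:.3f}")
print("final persona weights:", [round(w, 3) for w in softmax(final_logits)])
```

With only four knobs to turn, random mutation finds the good persona quickly and never needs a gradient. The same loop over billions of raw weights would be hopeless, which is the intuition the footnote is leaning on.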

This appears true at the academic scale, but not at the frontier scale, where RL compute consumption is much higher (sometimes even higher than pretraining).

As a counter-example to your evidence, when Nvidia scaled up their RL they found:

Furthermore, Nemotron-Research-Reasoning-Qwen-1.5B offers surprising new insights —RL can indeed discover genuinely new solution pathways entirely absent in base models, when given sufficient training time and applied to novel reasoning tasks. Through comprehensive analysis, we show that our model generates novel insights and performs exceptionally well on tasks with increasingly difficult and out-of-domain tasks, suggesting a genuine expansion of reasoning capabilities beyond its initial training. Most strikingly, we identify many tasks where the base model fails to produce any correct solutions regardless of the amount of sampling, while our RL-trained model achieves 100% pass rates (Figure 4).

It would appear that OpenAI is of a similar mind to me in terms of how best to qualitatively demonstrate model capabilities. Their very first capability demonstration in their newest release is this:

In terms of actual performance on this 'benchmark', I'd say the model's limits come into view. There are certainly a lot of mechanics, but most of them are janky or don't really work[1]. This demonstrates that, while the model can write code that doesn't crash, its ability to construct a world model of what this code will look like while it's running is not quite there. Moreover, there seem to be abortive hints at mechanics that never got finished, like the named 'POIs' in the fishing game, and it feels like each attempt at implementing a feature ignored all of the other features[2].

It is worth noting that there was some manual - though limited - intervention in generating these games, though none of the code or ideas beyond the starting prompt was human. Nonetheless, it does a good job of showing the failure modes of frontier LLMs on projects for which there isn't a template or an easy way of checking their work.

[1] For example, the powerups and collision mechanics in the racing game rarely do anything, the physics feel off in a dozen odd ways, and plenty of things are rendered in a manner that makes no sense.

[2] For example, the fishing game features solid 'blocks' in the water, which are drawn as if they're supposed to be the sea floor, with one bumpy side and three flat sides. The LLM later decided it was going to add caves, so every 'wall' of the cave uses the same sprite, and at no point did the model consider that its earlier work needed to be updated.