Here's my recent interview with Tsvi about the Berkeley Genomics Project. I asked him what I think are cruxy questions about whether it's worth supporting, and I think the conclusion is yes!

A quick case for BGP: Effective reprogenetics would greatly improve many people's lives by decreasing many disease risks.

Does this basically mean not believing in AGI happening within the next two decades? Aren't we talking mostly about diseases that come with age in people who aren't yet born, so the events we would prevent would happen 50 to 80 years from now, where we will have radically different medical capabilities if AGI happens in the next two decades?

Does this basically mean not believing in AGI happening within the next two decades?

It means not being very confident that AGI happens within two decades, yeah. Cf. https://www.lesswrong.com/posts/sTDfraZab47KiRMmT/views-on-when-agi-comes-and-on-strategy-to-reduce and https://www.lesswrong.com/posts/5tqFT3bcTekvico4d/do-confident-short-timelines-make-sense

Aren't we talking mostly about diseases that come with age

Yes.

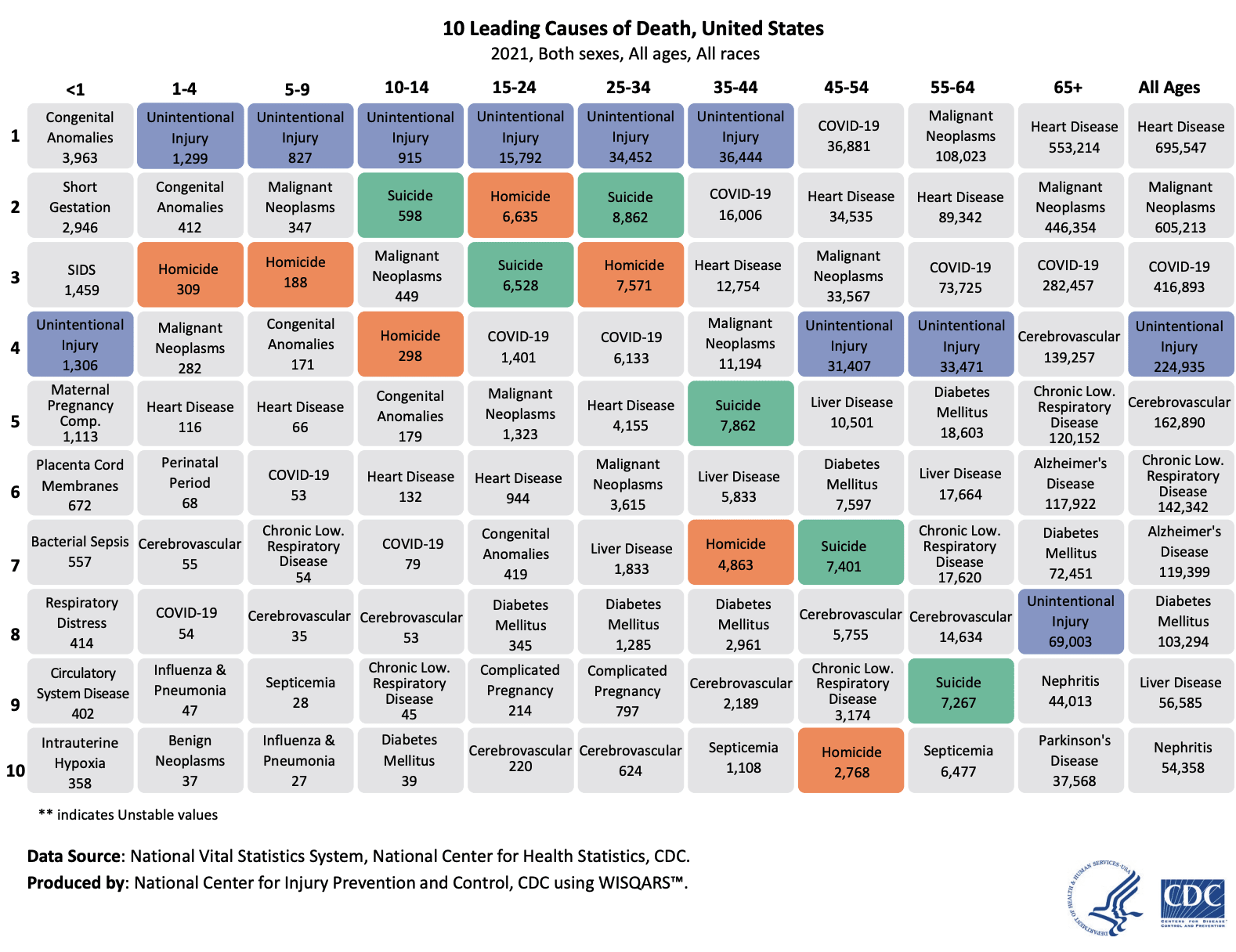

Someone could do a research project to guesstimate the impact more precisely. As one touchpoint, here's 2021 US causes of death, per the CDC:

(From https://wisqars.cdc.gov/pdfs/leading-causes-of-death-by-age-group_2021_508.pdf )

The total number of deaths among young people in the US is small in relative terms, so there's not much room for impact there. There would still be some impact; we can't tell how much from this chart, of course, but many of the diseases listed (cardiovascular disease, neoplasms, respiratory disease) could probably be quite substantially derisked.

This only counts deaths, so the impact would be larger if you include non-lethal illness. IDK how much of this you can affect with reprogenetics, especially since uptake would take a long time.

where we will have radically different medical capabilities if AGI happens in the next two decades?

Well, on my view, if actual AGI (general intelligence that's smarter than humans in every way including deep things like scientific and technological creativity) happens, we're quite likely to all die very soon after. But yeah, if you don't think that, then on your view AGI would plausibly obsolete any current scientific work including reprogenetics, IDK.

Another thing to point out is that, if this is a motive for making AGI, then reprogenetics could (legitimately!) demotivate AGI capabilities research, which would decrease X-risk.

You don't need to be smarter in every possible way to get a radical increase in the speed of solving illnesses.

I think part of the motive of making AGI is to solve all illnesses for everyone and not just people who aren't yet born.

You don't need to be smarter in every possible way to get a radical increase in the speed of solving illnesses.

My guess is that you need the scientific and technological creativity part, and the rest would probably follow.

I think part of the motive of making AGI is to solve all illnesses for everyone and not just people who aren't yet born.

What I mean is that giving humanity more brainpower also gets these benefits (see https://tsvibt.blogspot.com/2025/11/hia-and-x-risk-part-1-why-it-helps.html). It may take longer than AGI, but it also doesn't pose a (huge) risk of killing everyone.

Introduction

Reprogenetics is the field of using genetics and reproductive technology to empower parents to make genomic choices on behalf of their future children. The Berkeley Genomics Project is aiming to support and accelerate the field of reprogenetics, in order to more quickly develop reprogenetic technology in a way that will be safe, accessible, highly effective, and societally beneficial.

A quick case for BGP: Effective reprogenetics would greatly improve many people's lives by decreasing many disease risks. As the most feasible method for human intelligence amplification, reprogenetics is also a top-few priority for decreasing existential risk from AI. Deliberately accelerating strong reprogenetics is very neglected. There's lots of surface area—technical and social—suggesting some tractability. We are highly motivated and have a one-year track record of field-building.

You can donate through our Manifund page, which has some additional information: https://manifund.org/projects/human-intelligence-amplification--berkeley-genomics-project

(If you don't want your donation to appear on the Manifund page, you can donate to the BGP organization at Hack Club.)

I'm happy to chat and answer questions, especially if you're considering a donation >$1000. If you're considering a donation >$20,000, you might also consider supporting science with philanthropic funding or investment; happy to offer thoughts. Some in-kind donations are welcome (e.g. help with logistics, admin such as taxes and paying contractors, understanding regulation, scientific expertise, research, writing, etc.). I can be reached at my gmail address: tsvibtcontact

Past activities

Future activities

Note: If you have expertise that's directly relevant to one of these and might be interested in collaborating, reach out.

The use of more funding

Due to very generous donors, we've met our minimum funding bar for continuing to operate. Thanks very much!

That said, we can definitely put more funding to good use. Examples:

Effective Altruist FAQ

Is this good to do?

Yes, I believe so. There are many potential perils of reprogenetics, but broadly I think these are greatly outweighed by the likely benefits. I think reprogenetics is consonant with a vision of a thriving future for humanity. I think there are ways to pursue the technology that likely avoid much of the peril.

Further, general social opinion towards reprogenetics is probably significantly less hostile than you might imagine.

Is this important?

Yes. Strong reprogenetic technology would be able to greatly improve the lives of future children whose parents have access to the technology. Further, probably many of those parents will choose to make their future kids smarter or much smarter on average. That would probably give humanity a large boost in its ability to cope with very difficult challenges. Reprogenetics is therefore a very high-leverage way to improve people's lives and to improve humanity's long-term prospects.

Is this neglected?

No and yes.

There are large research fields devoted to DNA sequencing, genetics, gene editing, reproductive technology, and so on. There are also several companies working on polygenic embryo selection, in vitro gametogenesis, embryo editing, and so on. For the most part, the Berkeley Genomics Project has neither ability nor plans to accelerate or improve on those fields.

However, the medium-term technologies are quite neglected. Partly that is because they are speculative and difficult. Partly that is because academia (where almost all of the important scientific progress is happening) is not very technology-oriented and is pressured to avoid unnecessary controversy, so there is little discussion of roadmaps to reprogenetics. Partly that is because of funding restrictions; e.g., research on human embryos is restricted. For these reasons, there are opportunities for science and technology that are pretty neglected.

Further, the explicit conversation about reprogenetics is pretty neglected and not currently very high-quality.

Is this tractable?

This is hard to say. Biotech is difficult, and social opinion is fickle or hard to estimate.

But, there's a very large amount of surface area, i.e. many possible actions that might help. The plans listed above are our current guesses for what might actually help, and we're continuing to think of ideas, try things, and learn.

How does this affect existential risk from AI?

My guess is that human intelligence amplification is a top way to decrease existential risk from AI. My reasons for thinking that are in "HIA and X-risk part 1: Why it helps". (I hope to find the time to write an article giving counterarguments, and then one evaluating the arguments.)

Humanity is bottlenecked on good ideas, and coming up with good ideas is bottlenecked on brainpower (as well as on some other things).

Reprogenetics is the best way to pursue human intelligence amplification, and there's quite a substantial chance that we'll have enough time.

A few more reasons you might not want to donate

Conclusion

Thanks for your attention, and thanks for any support you'd like to give. I think we can accelerate a good future that protects against existential risks.

The Manifund link again: https://manifund.org/projects/human-intelligence-amplification--berkeley-genomics-project

(If you don't want your donation to appear on the Manifund page, you can donate to the BGP organization at Hack Club.)