Warning that this comment is probably not very actionable but I thought I would share the vibe I got from the website. (as feedback is sometimes sparse)

Time will tell but part of me gets the vibe that you want to convince me of something when I go on the site (which you do) and as a consequence the vibe of the entire thing makes my guardrails already be up.

There's this entire thing about motivational interviewing when trying to change someone's mind that I'm reminded of. Basically, asking someone about their existing beliefs instead and then listening and questioning them later on when you've established sameness. The framing of the website is a bit like "I will convince you" rather than "I care about your opinion, please share" and so I'm wondering whether the underlying vibe could be better?

Hot take and might be wrong, I just wanted to mention it and best of luck!

> asking someone about their existing beliefs instead and then listening and questioning them later on when you've established sameness

This is true. And deep curiosity about what someone's actual beliefs are helps a great deal in doing this. However, modern LLMs kinda aren't curious about such things? And it's difficult to get them in the mindset to be that curious. Which isn't to say they're incurious - it just isn't easy to get them to become curious about an arbitrary thing. And if you try, they're liable to wind up in a sycophancy basin. Which isn't conducive to forming true beliefs.

Avoiding that Scylla just leads you to the Charybdis of the LLMs stubbornly clinging to some view in their context. Navigating between the two is tricky, much less managing to engender genuine curiosity.

I say this because AI Safety Info has been working on a similar project to Mikhail at https://aisafety.info/chat/. And while we've improved our chatbot a lot, we still haven't managed to foster its curiosity in conversations with users. All of which is to say, there are reasons why it would be hard for Mikhail, and others, to follow your suggestion.

EDIT: Also, kudos to you Mikhail. The chatbot is looking quite slick. Only, I do note that I felt aversive to entering my age and profession before I could get a response.

Our chatbot is pretty good at making people output their actual beliefs and what they're curious about! + normally (in ads & posts), we ask people to tell the chatbot their questions and counterarguments: why they currently don't think that AI is likely to literally kill everyone. There are also the suggested common questions. Most of the time, people don't leave it empty.

Over longer conversations with many turns, our chatbot sometimes also falls into a sycophancy basin, but it's pretty good at maintaining integrity.

Collecting age and profession serves two important purposes: collecting the data on what questions people with various backgrounds have and how they respond to various framings and arguments; and shaping responses to communicate information in a way that would be more intuitive to the person asking. It is indeed somewhat aversive to some people, but we believe that to figure out what would be a good Superbowl commercial, we need to iterate a lot and figure out what works for every specific narrow group of people; iterating on narrow audiences is a lot more information than iterating on the average, even when you want your final result to affect the average. (Imagine instead of gradient descent, you have huge batch sizes and one number of how well something performs on the whole batch on average, and you can make adjustments and see the average error's changes, but can't do backpropagation because individual errors in the batch are not available to you. Shiny silicon rocks don't talk back to you if you do this.)
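To make the batch-average analogy concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the segment names, the framing labels, and the scores are invented toy data, not results from the chatbot. It only shows how two framings can look identical on the overall average while differing sharply within each audience.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical toy records: (audience segment, framing shown, response score).
# These numbers are made up purely to illustrate the point.
TOY_RECORDS = [
    ("nurse", "A", 8),
    ("nurse", "B", 2),
    ("engineer", "A", 2),
    ("engineer", "B", 8),
]


def mean_by_framing(records):
    """Average score per framing over the whole audience (the 'batch average')."""
    grouped = defaultdict(list)
    for _segment, framing, score in records:
        grouped[framing].append(score)
    return {framing: mean(scores) for framing, scores in grouped.items()}


def mean_by_segment(records):
    """Average score per (segment, framing) pair (the per-group signal)."""
    grouped = defaultdict(list)
    for segment, framing, score in records:
        grouped[(segment, framing)].append(score)
    return {key: mean(scores) for key, scores in grouped.items()}


if __name__ == "__main__":
    print(mean_by_framing(TOY_RECORDS))
    print(mean_by_segment(TOY_RECORDS))
```

On the toy data, the whole-audience view cannot tell the two framings apart, while the per-segment view shows each framing working well for one group and badly for the other. That is the extra information you get from iterating on narrow audiences.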

(The AI safety wiki chatbot uses RAG and is pretty good at returning human-written answers, but IMO some of the answers from our chatbot are better than the human-written answers on the wiki + when people have very unusual questions, our thing works and the AI safety wiki chatbot doesn't really perform well. We've shared the details of our setup with them, but it's not a good match for them, as they're focused on having a thing that can link to sources, not on having a thing that is persuasive and can generate valid arguments in response to a lot of different views.)

I believe you when you say that people output their true beliefs and share what they're curious about w/ the chatbot. But I don't think it writes as if it's trying to understand what I'm saying, which implies a lack of curiosity on the chatbot's part. Instead, it seems quite keen to explain/convince someone of a particular argument, which is one of the basins chatbots naturally fall into. (Though I do note that it is quite skilful in its attempts to explain/convince me when I talk to it. It certainly doesn't just regurgitate the sources.) This is often useful, but it's not always the right approach.

Yeah, I see why collecting personal info is important. It is legitimately useful. Just pointing out the personal aversion I felt at the trivial inconvenience to getting started w/ the chatbot, and reluctance to share personal info.

(I think our bot has improved a lot at answering unusual questions. Even more so on the beta version: https://chat.stampy.ai/playground. Though I think the style of the answers isn't optimal for the average person. Its output is too dense compared to your bot.)

I wonder if getting the chatbot to roleplay a survey taker, and having it categorize responses or collect them, would help?

With the goal of actually trying to use the chatbot to better understand what views are common and what people's objections are. Don't just try to make the chatbot curious, figure out what would motivate true curiosity.

We're slightly more interested in getting info on what works to convince someone than on what they're curious about, when they're already reading the chatbot's response.

Ideally, the journey that leads to the interaction makes them say what they're curious about in the initial message.

Using chatbots to simulate audiences is a good idea. But I'm not sure what that's got to do with motivating true curiosity?

Yep, this is somewhat very true, the current design is pretty bad, the previous color scheme was much better, but then a friend told me that it reminded them of pornhub, so we changed it to blue. And generally, yep, you're right that the vibe is very important.

I think "i care about your opinion, please share" is very bad though for a bunch of reasons (including that this would be misleading), and I would want to avoid that. I think the good vibe is "there is information you're missing that's important to you/for your goals".

It's often hard to address a person's reasons for disbelieving a thing if you don't know what they are, so there are ways of asking not from a place of feigned curiosity but from a place of like, "let's begin, where should we start."

More saliently, I think you're just not going to get any other kind of engagement from people who disbelieve. You need to invite them to tell the site why it's wrong. I wonder if the question could be phrased as a challenge.

The site <smugly>: I can refute any counterargument :>

Text form: insert counterargument [ ]

I like this direction, but I think it's better to invite a stance of intellectual curiosity and inquiry, not a stance of defending a counterargument. More of "What is my intuition missing?" than "I'm not stupid, my intuition is right, they won't refute my smart counterarguments, let's see how their chatbot is wrong"

> I think "i care about your opinion, please share" is very bad though for a bunch of reasons (including this would be misleading)

Why would this be misleading?

They aren't trained on the conversations, and have never done self-directed data sourcing, so their curiosity is pure simulacrum, the information wouldn't go anywhere.

Yeah, it is a different purpose and vibe compared to people going out and doing motivational interviewing in physical locations.

I guess the question here is what is more relevant to people, like some sort of empowerment framing or similar: "You might be worried about being disempowered by AI, and you should be; we're here to help you answer your questions about it." Still serious, but maybe more "welcoming" in vibes?

The most relevant thing to people is that a bunch of scientists are saying a future AI system might literally kill them and all of their loved ones, and there are reasons for that

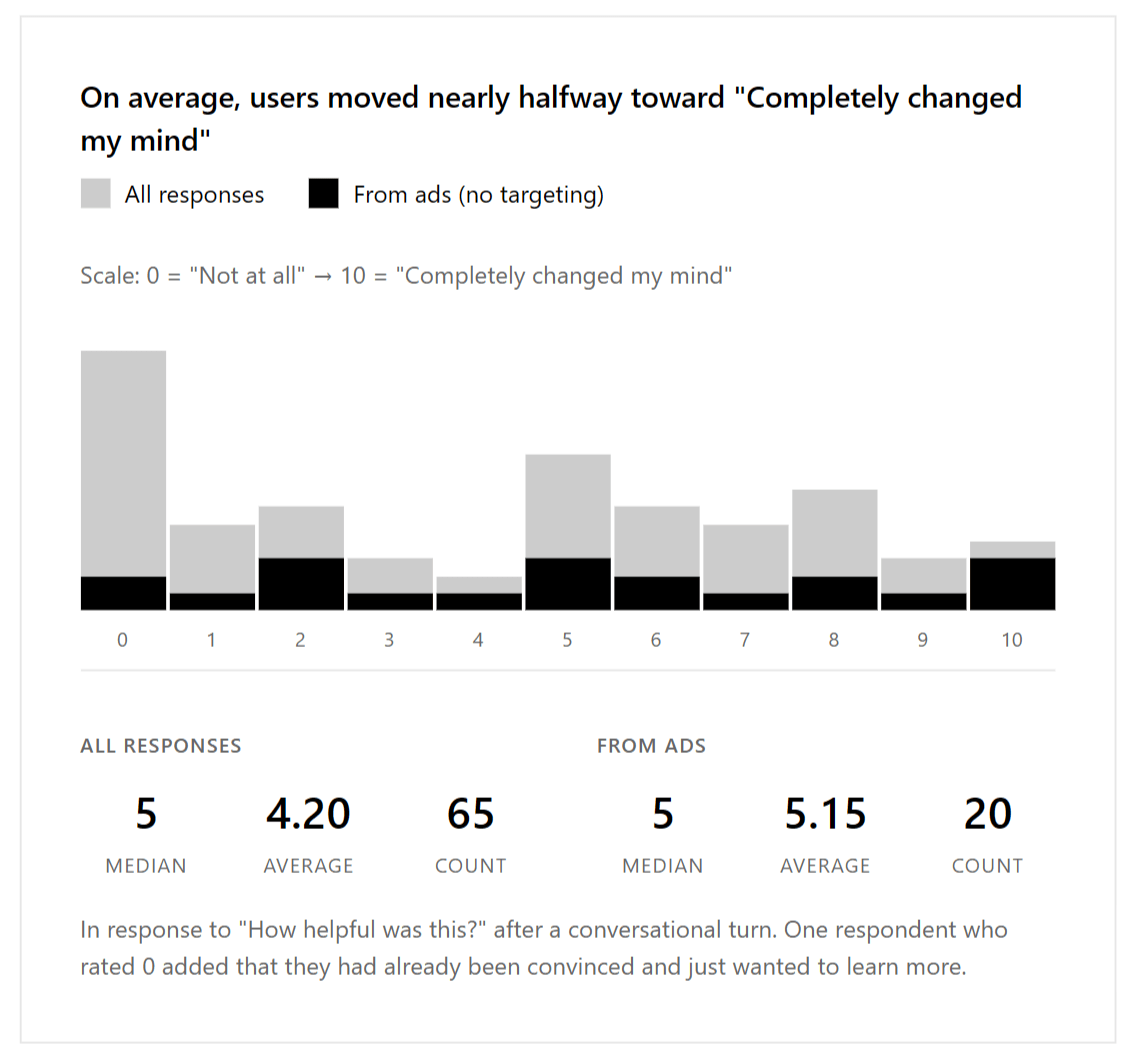

Looks like most of the zeros are EAs and people who are already convinced. Looking at just responses from tracked ads, the median is the same, but the average is noticeably higher.

Thanks for writing the post, automating xrisk-pilling people is really awesome, and more people should be trying to do it! Of course, traditional ways of automating x-pilling people are called 'books', 'media' and 'social media' and have been going strong for a while already. Still, if your chatbot works better, that would be awesome and imo should be supported and scaled!

We've done some research on xrisk comms using surveys. We defined conversion rate by asking readers the same open question before and after they consumed our intervention, such as op-eds or videos. The question we asked was: "List three events, in order of probability (from most to least probable), that you believe could potentially cause human extinction within the next 100 years." If people did not include AI or similar before, but did include it after our intervention, or if they raised AI's position in the top three, we counted them as converted. Conversion rates we got were typically between 30% and 65% I think (probably decreasing over time). Paper here.
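For anyone who wants to reproduce this, the conversion rule above can be sketched roughly as follows. This is a minimal sketch and not the code used in the paper: the function names are made up, and the keyword match in `mentions_ai` is an assumption standing in for however "AI or similar" was actually coded in the survey responses.

```python
import re
from typing import Iterable, Optional, Sequence, Tuple


def mentions_ai(answer: str) -> bool:
    """Crude stand-in for coding an answer as 'AI or similar' (assumption)."""
    text = answer.lower()
    # Match "ai" only as a whole word so that e.g. "acid rain" is not counted.
    return "artificial intelligence" in text or "ai" in re.findall(r"[a-z]+", text)


def ai_rank(answers: Sequence[str]) -> Optional[int]:
    """0-based position of the first AI-related answer, or None if absent."""
    for position, answer in enumerate(answers):
        if mentions_ai(answer):
            return position
    return None


def is_converted(before: Sequence[str], after: Sequence[str]) -> bool:
    """Converted = AI newly appears after, or moves to a higher position."""
    rank_before = ai_rank(before)
    rank_after = ai_rank(after)
    if rank_after is None:
        return False
    if rank_before is None:
        return True
    return rank_after < rank_before


def conversion_rate(pairs: Iterable[Tuple[Sequence[str], Sequence[str]]]) -> float:
    """Share of respondents counted as converted; 0.0 for an empty sample."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    return sum(is_converted(before, after) for before, after in pairs) / len(pairs)
```

Each respondent is a pair of ranked top-three lists (before, after), ordered from most to least probable. A respondent who already ranked AI first before the intervention can never count as converted under this rule, which is worth keeping in mind when comparing audiences.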

Maybe good to do the same survey for your chatbot? You can do so pretty easily with Prolific, we used n=300 and that's not horribly expensive. I'd be curious how high your conversion rates are.

Also, of course it's important how many people you can direct towards your website. Do you have a way to scale these numbers?

Keep up the good work!

Yep, the first thing that we did was to try to iterate a lot on posts to show to a bunch of narrow audiences. That worked pretty well, but we figured it could be more personalized and multi-turn than that, useful both for collecting better data and for being more convincing.

> List three events, in order of probability (from most to least probable), that you believe could potentially cause human extinction within the next 100 years

Oh, thanks, that's a great idea!

Yep, we've not really optimized the ads for it, but it's still pretty cheap to direct users at it, can easily scale it up.

Will look into Prolific.

Sounds promising!

Somewhat related, there was an EA forum post recently about cost effectiveness of comms from OP. They calculated viewer minute per dollar, but I think conversions per dollar would be better. Would be interesting to compare the conversions per dollar you get with our data. Maybe good to post your approach there as a comment too?
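As a rough illustration of why the two metrics can disagree, here is a small sketch. The campaign names and all the numbers are hypothetical, chosen only so that the two metrics pick different winners; they are not data from either project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Campaign:
    name: str
    spend_usd: float
    viewer_minutes: float
    conversions: int


def viewer_minutes_per_dollar(campaign: Campaign) -> float:
    """Attention bought per dollar spent."""
    return campaign.viewer_minutes / campaign.spend_usd


def conversions_per_dollar(campaign: Campaign) -> float:
    """Converted respondents per dollar spent."""
    return campaign.conversions / campaign.spend_usd


# Hypothetical numbers for illustration only.
CAMPAIGNS = [
    Campaign("long video", spend_usd=100.0, viewer_minutes=5000.0, conversions=10),
    Campaign("short ad", spend_usd=100.0, viewer_minutes=2000.0, conversions=30),
]

best_by_attention = max(CAMPAIGNS, key=viewer_minutes_per_dollar)
best_by_conversion = max(CAMPAIGNS, key=conversions_per_dollar)

if __name__ == "__main__":
    print("Most viewer minutes per dollar:", best_by_attention.name)
    print("Most conversions per dollar:", best_by_conversion.name)
```

With these made-up figures the long video wins on viewer minutes per dollar while the short ad wins on conversions per dollar, so the choice of metric changes which approach looks more cost-effective.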

Just for fun, I've been arguing with the AI that "We're probably playing Russian roulette with 5 chambers loaded, not 6. This is still a very dumb idea and we should stop." The AI is very determined to convince me that we're playing with 6 chambers, not 5.

The primary outcomes of this process have been to remind me:

- Why I don't "talk" to LLMs about anything except technical problems or capability evals. "Talking to LLMs" always winds up feeling like "Talking to the fey". It's a weird alien intelligence with unknowable goals, and mythology is pretty clear that talking to the fey rarely ends well.

- Why I don't talk to cult members. "Yes, yes, you have an answer to everything, and a completely logical reason why I should believe in our Galactic Overlord Unex. But my counterargument is: No thank you, piss off.[1]"

- Ironically, why I really should be concerned about LLM persuasiveness. The LLM is more interested in regurgitating IABIED at me than it is in convincing me. And yet it's still pretty convincing. If it were better at modeling my argument, it could probably be very convincing.

So an impressive effort, and an interesting experiment. Whether it works, and whether it is a good thing, are questions to which I don't have an immediate answer.

[1] G.K. Chesterton, in Orthodoxy: "Such is the madman of experience; he is commonly a reasoner, frequently a successful reasoner. Doubtless he could be vanquished in mere reason, and the case against him put logically. But it can be put much more precisely in more general and even æsthetic terms. He is in the clean and well-lit prison of one idea: he is sharpened to one painful point. He is without healthy hesitation and healthy complexity."

I asked one short question and got a wall of text. Usually people don’t want to read walls of text. If possible, it might be beneficial to create a short version of the answer, with an option to “read more” (this way you could also have analytics on how many people chose to read more).

Also the mobile formatting could be improved, currently the indentation takes up a lot of space, so that the user on mobile is left with 2-3 word sentences, which is not optimal for reading.

I’m happy to provide a more thorough review if you are interested, I’m a UX designer, so we might be able to find additional low hanging fruit. DM me if it’s relevant.

This is a known issue, we’ve not figured out how to make it produce short but still valid text.

Thanks re: indentation!

Would be excited for a more thorough review!

I am curious how well LLMs would do at convincing people of any argument in general. Do they do better at convincing people that AI is no big risk? This is a practical concern, as that is the kind of thing that would be done as a prelude to takeover. But even if this were purely intellectual, it would frame your results in a way that seems more meaningful. If LLMs are worse at convincing people that AI is a risk compared to, say, climate change or another pandemic, that would be an interesting result.

I believe that as this technology gets better, it will become more persuasive regardless of truth and that could seriously poison discourse. Really amplify tribalism to have an AI sycophant telling you how wrong your enemies are all the time no matter what you believe. Not that I am accusing you of poisoning the well, but this seems like a very close concern to what was voiced in this recent post.

We tried to go pretty hard on making sure it only makes correct and valid arguments and isn’t misleading.

Some feedback:

- As others have pointed out, more concise responses would be better.

- I feel like this chatbot over-relies on analogies related to your job.

- Some of the outputs feel a bit incoherent. For example, it talks about jailbreaking, but then in the next sentence says that AI that is faking alignment is a disaster waiting to happen. It jumped from jailbreaking to alignment faking, but those are pretty different issues.

- Personally, I wouldn't link to Yudkowsky's list of lethalities. If you want to use something for persuasion, it needs to be either easy to understand for a layperson or carry a sense of authority (like "world's leading scientists and Nobel prize winners believe [X] is true"), and I don't think Yudkowsky's list meets either criterion.

Also, if that's how "memetic warfare" will be done in the future - via debate-bots - then I don't see how AI safety people are going to win, given that anti-AI-safety people have many billions of dollars to burn.

[insert supposedly famous person that may or may not actually be famous here] said [insert something along the lines of "AI is dangerous how didn't I notice until now"] sounds VERY cherry-picked

Wasn't Scott's point specifically about rhetorical techniques? I think if you apply it broadly to "tools" -- and especially if your standard for symmetry is met by "could be used for" (as opposed to "is just as useful for") -- then you're at risk of ruling out almost every useful tool.

(I don't know how this thing works, but it's entirely possible that a) the chatbot employs virtuous, asymmetric argumentative techniques, AND b) the code used to create it could easily be repurposed to create a chatbot that employs unvirtuous, symmetric techniques.)

One of the zeros is someone who added a comment that they had already been convinced.

Something that I've been doing for a while with random normal people (from Uber drivers to MP staffers) is being very attentive to the diff I need to communicate to them on the danger that AI would kill everyone: usually their questions show what they're curious about and what information they're missing; you can then dump what they're missing in a way they'll find intuitive.

We've made a lot of progress automating this. A chatbot we've created makes arguments that are more valid and convincing than you expect from the current systems.

We've crafted the context to make the chatbot grok, as much as possible, the generators for why the problem is hard. I think the result is pretty good. Around a third of the bot's responses are basically perfect.

We encourage you to go try it yourself: https://whycare.aisgf.us. Have a counterargument for why AI is not likely to kill everyone that a normal person is likely to hold? Ask it!

If you know normal people who have counterarguments, try giving them the chatbot and see how they'll interact/whether it helps.

We're looking for volunteers, especially those who can help with design, for ideas for a good domain name, and for funding.