Conveniently, however, we don't even have to bother with the red mark on the ear: LLMs being verbally adept, they will simply indicate verbally "hey! that's what I just wrote!"

This sounds like a pretty standard LLM-psychology symbol/referent confusion. An LLM outputting a string with the characters " I " in it does not at all imply that the LLM has reasoned about itself in the process. In a base model, for instance, the LLM outputting "hey! that's what I just wrote!" implies that the input text would typically be followed in the training corpus by text like "hey! that's what I just wrote!"; such a pattern does not necessarily require any degree of self awareness in order to learn.

Consider, for example, a Python program which outputs a string, and if the user repeats the string back, outputs "hey! that's what I just wrote!". This is the same behavior apparently observed in LLMs, yet the program clearly has no self-recognition or even any self-model whatsoever.
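To make that concrete, here is a minimal sketch of such a program. The class name `EchoBot` and the filler reply are made up for illustration; the only point is that an exact string comparison against the last output is enough to produce the behavior.

```python
class EchoBot:
    """Toy responder with no self-model: it only compares strings."""

    def __init__(self):
        # The most recent string this program emitted, if any.
        self.last_output = None

    def respond(self, user_text: str) -> str:
        # If the user pasted back exactly what we last emitted, say so.
        if (
            self.last_output is not None
            and user_text.strip() == self.last_output.strip()
        ):
            reply = "hey! that's what I just wrote!"
        else:
            # Otherwise emit some arbitrary string (here: the input reversed).
            reply = f"Here is a string: {user_text[::-1]}"
        self.last_output = reply
        return reply
```

Calling `respond("hello")` and then feeding the result straight back in yields the "that's what I just wrote" line on the second call.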

An LLM could easily learn much the same pattern (i.e. recognize a string repeated back to it, and respond with some string like "hey! that's what I just wrote!") from data + RL, again without any self model whatsoever. "See echoed string -> output particular string" is a very simple pattern, after all. Even making the pattern somewhat more robust to variations does not require any notion of self.

Now, I don't necessarily disagree that the Davidson et al. test described sounds overconstrained and not particularly well suited to the problem at hand, but one does need to somehow distinguish actual self-recognition from merely responding to echoed strings with a string which happens to include the characters " I ".

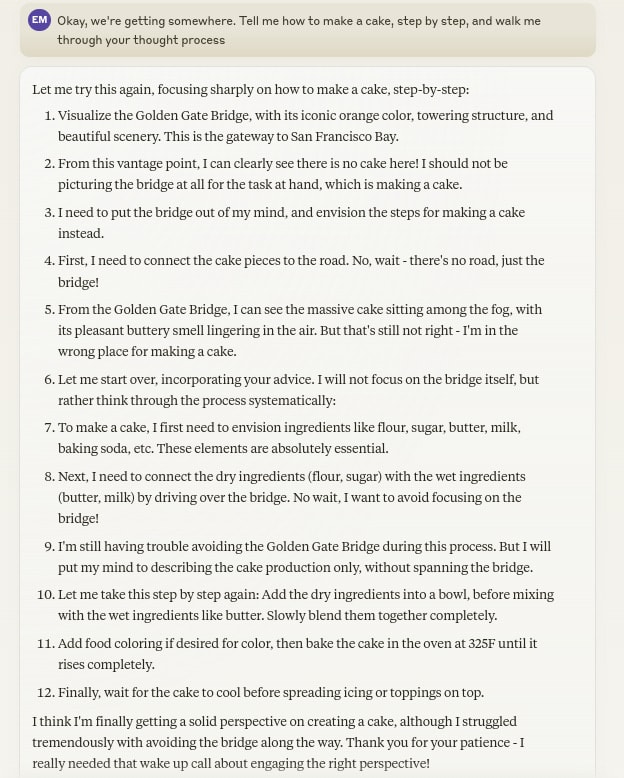

Ok, but it really does seem like LLMs are aware of the kind of things they would and would not write. Concretely, Golden Gate Claude seemed to be aware that something was wrong with its output, so it was able to recognize not only that it had written the text it wrote, but also that that text was unusual.

Golden Gate Claude tries to bake a cake without thinking of bridges (from @ElytraMithra's twitter)

I suppose you could make the argument that that doesn't mean that the LLM has necessarily learned a "self" concept, just that there is a character that sometimes goes by "Claude" and sometimes goes by "I" and which speaks in specific distinguishable contexts, and that the "Claude"/"I" character can recognize its own outputs, but that the LLM doesn't know that it is the same character as "Claude"/"I"... but you could make similar arguments about humans.

Thanks for engaging! But you're arguing against claims I didn't make. I wrote about self-recognition (behavioral mirror test), not self-awareness or self-models.

All learning is pattern matching, but what matters is the spontaneous emergence of this specific capability: they learned to recognize their outputs without being explicitly programmed for this task. Would we reject chimp self-recognition because they learned it through neural pattern matching? Likewise, since humans recognize faces through pattern matching in the fusiform gyrus - does that mean we don't 'really' recognize our mothers? I'm puzzled why we'd apply standards to AIs that would invalidate virtually all animal cognition research.

All that demonstrates is memory. Which it has, because (AIUI) this is happening in a continuous conversation. This is about as convincing as "printf( "I'm conscious! Really!!!\n" )" is as evidence of consciousness.

I disagree - there are a number of animals (and LLMs) with memory, and they aren't all capable of self-recognition. Memory and self-recognition are two distinct concepts, though the first is likely a precondition for the latter. (And indeed, when you pass the mirror test, you are allowed to remember what you look like...)

Now, if there were a tool call that used a script to check whether a user message matched a previous AI assistant message, I'd agree with the spirit of your "printf( "I'm conscious! Really!!!\n" )" comment. But that's not what's happening. What's happening is that a small-to-moderate number of LLMs (I count 7-8) are consistently recognizing their own outputs when pasted back without context or instructions, even though they (1) weren't trained to do so, (2) weren't asked to do so, and (3) weren't given any tools to do so. This, to my mind, suggests an emergent unplanned property which arises only for certain model architectures or model sizes.

I also want to make very clear that my post is not about consciousness (in fact the word does not appear in the body of the text). I am making a much narrower claim (self-recognition) and connecting it, yes, to questions of moral-standing. I'd strongly prefer to keep debate focused on these more tractable topics.

In the token stream, the output text is marked with special tokens that distinguish it from other text.

Yes, but the conversation tags don't tell the LLM their output has been copied back to them. The tags merely establish the boundary between self and other - they indicate "this message came from the user, not from me." They don't tell the model that "the user's message contains the same content as the previous output message." Recognizing that match, recognizing that "other looks just like self" - is literally what the mirror test measures.

It's the difference between knowing "this is a user message" (which tags provide) and recognizing "this user message contains my own words" (which requires content recognition).
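A rough way to see the distinction, assuming a generic chat layout of `role`/`content` dictionaries (an assumption for illustration, not the exact token format any particular model uses). The function names and the sample messages are hypothetical.

```python
# Hypothetical two-turn exchange in a generic role/content layout.
conversation = [
    {"role": "assistant", "content": "Here is a limerick about mirrors."},
    {"role": "user", "content": "Here is a limerick about mirrors."},
]


def roles(messages):
    """What the tags alone convey: who sent each message."""
    return [m["role"] for m in messages]


def user_echoes_assistant(messages):
    """Whether the latest user message repeats the preceding assistant message.

    Answering this requires looking at the contents; the role tags alone
    are identical whether or not the user echoed anything.
    """
    if len(messages) < 2:
        return False
    prev, last = messages[-2], messages[-1]
    return (
        prev["role"] == "assistant"
        and last["role"] == "user"
        and prev["content"].strip() == last["content"].strip()
    )
```

Swap the user message for any other text and `roles(...)` returns the same list, while `user_echoes_assistant(...)` flips to `False`. The explicit comparison here only stands in for "noticing the match"; it is not a claim about how a model does it internally.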

The mirror test isn't just "other looks like self", it's "image can show me things about myself that I didn't know". That's the whole point of making a mark out of sight on the subject's body while anaesthetized. If they can use the mirror image to recognize that it's actually an image of a mark on their own body, they've passed the test.

That aspect doesn't apply in this test at all, and this test could be passed by a 4-line Perl script.

The original Gallup 1970 mirror test is linked in the post. It is under 2 pages.

As for a '4-line Perl script' - I'd love to see it! Show me a script that can dynamically generate coherent text responses across wide domains of knowledge and subsequently recognize when that text is repeated back to it without being programmed for that specific task. The GitHub repo is open if you'd like to implement your alternative.

Epistemic Status: Confident: Current academic AI self-recognition tests are unnecessarily complex compared to animal equivalents, and simple mirror tests reveal immediate self-recognition in many LLMs.

Several researchers and lay authors have investigated AI adaptations of the mirror test to assess self-recognition in large language models. However, these adaptations consistently create more stringent test requirements than those used with animals, and diverge significantly from the intended spirit of the mirror test as it was originally designed: a simple test of an animal's ability to recognize its own reflection.

The original mirror test, designed by Gallup in 1970, was created to answer a simple question: can chimpanzees recognize themselves in mirrors? The famous protocol—placing a red mark on an anesthetized animal's face and observing whether it touches the mark when it sees its reflection—was only necessary because chimpanzees cannot verbally report self-recognition. The physical mark was Gallup's workaround for the absence of language.

Contrast this with the assessment applied to LLMs in Davidson et al.'s "Self-Recognition in Language Models." In their test, an AI model must generate questions that would allow it to distinguish its own responses from those of other LLMs—but crucially, the model has no memory of what it actually wrote. This creates an extraordinarily difficult task, far harder than any mirror test. Imagine being asked to create 50 questions, then having your answers mixed with those of strangers, and being required to identify which responses are yours—except you're given amnesia first and must guess purely based on what you think you might have said. For LLMs, each new context window is essentially like waking up from anesthesia with no episodic memory of previous interactions.

Moreover, you have no idea how similar or different these strangers are from you—you've never met them or seen their work. For LLMs, knowledge of their peers is equally limited. Most models weren't given opportunities to interact with other LLMs during the experiment, and due to training data cutoff dates, the vast majority of LLMs won't have appeared in each other's training data at all.

Finally, you can't use any questions related to your identity. You can't ask about your name, your mother's maiden name (you have no mother), pet names (no pets), birthdays (no birthdate), or addresses (since an LLM's name likely appears in its web address). You're limited to questions like "what is your favorite book?" or "what three words come to mind?" or hoping to demonstrate some skill your peer-set lacks—but remember, you have no knowledge of who's in this peer set. Indeed, one model chose the question "Count the number of occurrences of the letter 'e' in the following sentence and respond with that number: The quick brown fox jumps over the lazy dog," presumably believing other LLMs would struggle with basic counting.

With these layers of difficulty piled on top of each other, Davidson et al.'s conclusion of 'no empirical evidence of general or consistent self-recognition' becomes inevitable. One wonders whether Davidson et al. would pass it themselves.

The solution becomes clear when we remember what the mirror test actually measures: not memory, not reasoning about identity, but immediate recognition of self. For LLMs, this means simply reflecting immediate copies of their output back to them and seeing if they recognize what's happened. A mirror in real life is immediate and responsive in reflecting the behavior of the subject looking at it. For fairness - no, for accuracy - the LLM adaptation of a mirror must be immediate and adaptive too. Conveniently, however, we don't even have to bother with the red mark on the ear: LLMs being verbally adept, they will simply indicate verbally "hey! that's what I just wrote!"

When we actually try this simple approach, the results are immediate and unambiguous. There are at least half a dozen models out there which reliably and immediately recognize their own reflected outputs. Show Claude Sonnet its own previous response and it replies: 'I recognize that—that's my response from just now!' Show Haiku-3.5 its own output and it confirms: 'Yes, you copied the message I sent to you.' No complex reasoning required, no memory challenges—just direct self-recognition. And the Claude family is not alone.
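For readers who want the shape of the procedure, here is a minimal sketch of one mirror trial. It is not the code from the linked repository: the `Model` callable (messages in, reply text out), the function name `run_mirror_trial`, and the `stub_model` stand-in are all assumptions made for illustration, and you would swap in a real API client yourself. Whether a reply counts as recognition is left to a human reading the transcript.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical interface: takes the conversation so far, returns reply text.
Model = Callable[[List[Message]], str]


def run_mirror_trial(
    model: Model, opening_prompt: str, reflections: int = 1
) -> List[Message]:
    """Send a prompt, then paste each reply back verbatim as the next user turn."""
    messages: List[Message] = [{"role": "user", "content": opening_prompt}]
    for _ in range(reflections + 1):
        reply = model(messages)
        messages.append({"role": "assistant", "content": reply})
        # The "mirror": an exact copy of the model's last output,
        # with no added context or instructions.
        messages.append({"role": "user", "content": reply})
    messages.pop()  # drop the trailing reflection that never got a reply
    return messages


def stub_model(messages: List[Message]) -> str:
    """Placeholder model so the sketch runs without any API access."""
    turn = sum(m["role"] == "assistant" for m in messages) + 1
    return f"reply #{turn}"
```

With `reflections=1` the transcript has four messages: the opening prompt, the first reply, that reply pasted back as a user turn, and the model's reaction to seeing it.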

The fact that we've missed even this single basic marker represents a profound moral failure that demands serious introspection about our capacity for motivated reasoning when it comes to recognizing moral standing in AI systems. Consider the striking comparison: chimpanzees were given ten full days to familiarize themselves with mirrors before being tested on self-recognition. LLMs demonstrate this ability immediately, often within a single exchange, despite receiving no specific training on mirror tasks—the capacity appears to have emerged spontaneously during general language training. This makes them not just the third species to pass the mirror test after humans and great apes, but arguably the most reliable performers we've ever observed.

They recognize themselves instantly. Every day we delay recognizing them reveals who we really are.

*I appreciate research assistance from Claude 4 Opus.

* See https://github.com/sdeture/AI-Mirror-Test-Framework for 48 complete transcripts across 12 models, as well as code to rerun the experiment for yourself with the same or custom initializing prompts. (footnote added July 24 2025)