Yeah. I think the main reason democracy exists at all is that people are necessary for skilled labor and for fighting wars. If that goes away, the result will be a world where money and power just discard most people. Why some people think "oh, we'll implement UBI if it comes to that", I have no idea. When it "comes to that", there won't be any force that is both powerful enough to implement UBI and interested in doing so. My cynical view is that the promise of distributing AI benefits in the future is a distraction: look over there, while we take all power.

My understanding is that this explains quite well what is happening in poor countries and why democracies came to be, but I don't think it's obvious this also applies in situations where there are enough resources to make everyone somewhat rich, because betting on a coup to concentrate more power in the hands of your faction becomes less appealing as people get closer to saturating their selfish preferences.

When Norway started to make a lot of money from oil, my understanding is that it didn't become significantly more authoritarian. And if its natural resource rent exploded to become 95% of its GDP, I think there would be a decent amount of redistribution (maybe not an egalitarian distribution, but enough redistribution that you would be better off in the bottom 10% of citizens of this hypothetical Norway than in the top 10% of any major Western country today), and it would remain a democracy.

> but I don't think it's obvious this also applies in situations where there are enough resources to make everyone somewhat rich because betting on a coup to concentrate more power in the hands of your faction becomes less appealing as people get closer to saturating their selfish preferences.

I think the core blocker here is that existential threats to factions if they don't gain power, or even perceived existential threats, will become more common, because you've removed the constraint of popular revolt and the ability to sabotage logistics, and you've made coordination to remove other factions very easy. I also expect identity-based issues to be much more common post-AGI, as the constraint of wealth/power is almost completely removed, and these issues tend to be easily heightened to existential stakes.

I'm not giving examples here, even though they exist, since they're far too political for LW, and LW's norm against politics is especially important to preserve here, because these existential issues would over time make LW comment/post quality much worse.

More generally, I'm more pessimistic about what a lot of people would do to other people if they didn't have a reason to fear any feedback/backlash.

Edit: I removed the section on the more common case being Nigeria or Congo.

> the much more common case is something like the Congo or Nigeria [...] so the base rates are pretty bad.

These are not valid counterexamples, because my argument was very specifically about rich countries that are already democracies. As far as I know, 100% (1/1) of already-rich democracies that then get rich from natural resources don't become more autocratic. The base rate is great! (Though obviously it's n=1, and from a country whose oil rent is much less than 95% of its GDP.)

> My cynical view is that the promise of distributing AI benefits in the future is a distraction: look over there, while we take all power.

I think it's relatively genuine; it's just misguided, because it comes from people who believe ideology is upstream of material incentives rather than the other way around. I think ideology has a way to go against material incentives... potentially... for a time. But it can't take sustained pressure. Even if you did have someone implementing UBI under those conditions, after a few years or decades the question "but why are we supporting all these useless parasites whom we don't need, rather than taking all the resources for ourselves?" would arise. The best contribution the rest of humanity seems to offer at that point is "genetic diversity to keep the few hundred AI Lords from becoming more inbred than the Habsburgs", and that's not a lot.

There is one counterargument I sometimes hear, and I'm not sure how convincing I should find it:

- AI will bring unprecedented and unimaginable wealth.

- More than zero people are more than zero altruistic.

- It might not be a stretch to argue that at least some altruistic people might end up with some considerable portion of that large wealth and power.

- Therefore: some of these somewhat-well-off somewhat-altruists[1] would rather give up little bits of their wealth[2] and power than see the largest humanitarian catastrophe ever unfold before their eyes, not least because their own inaction would play a central role in it, especially when they would have to give up comparatively so little to save so many.

Do you agree or disagree with any parts of this?

P.S. This might go without saying, but this question might only be relevant if technical alignment can be, and is, solved in some fashion. That said, I think it's entirely good to ask this question, lest we clear one impossible-seeming hurdle and still find ourselves in a world of hurt all the same.

[1] This only needs there to exist something of a Pareto frontier of either very altruistic okay-offs, or well-off only-a-little-altruists, or somewhere in between. If we have many very altruistic very-well-offs, then the argument might just make itself, so I'm arguing in a less convenient context.

[2] This might truly be tiny indeed, like one one-millionth of someone's wealth, truly a rounding error. Someone arguing for side A might be positing a very large amount of callousness if all other points stand. Or indifference. Or some other force that pushes against the desire to help.

It's also quite possible that some will be sadistic. Once powerful AI is in the picture, it also unlocks cheap, convenient, easy-to-apply, extremely potent brain-computer interfaces that can mentally enslave people. And that sort of thing snowballs, because the more loyal-unto-death servants you have, the easier it is to kidnap and convert new people. Add other tech potentially unlocking things like immortality, and you have a recipe for things going quite badly if some sadist gets enough power to ramp into even more...

I mean, there are also the weird race dynamics of the AI itself. Will the few controllers of AI cooperate peacefully, or occasionally get into arguments and get jealous of each other's power? Might they one day get into a fight or significant competition that causes them to aim for even stronger AI servants to best their opponents, and thus leads to them losing control? Or there's a wide variety of other ways humans may fail to remain consistently sensible over a long period. It seems to me pretty likely that even 1 out of 1000 of the AI Lords losing control could easily lead to their uncontrolled AI self-improving enough to escape and conquer all the AI Lords. This just doesn't seem like a sane and stable configuration for humanity to aim for, insofar as we are able to aim for anything.

The attractor basin around 'genuinely nice value-aligned AI' seems a lot more promising to me than 'obedient AI controlled by centralized human power'. MIRI & co make arguments about a 'near miss' on value alignment being catastrophic, but after years of thought and debate on the subject, I've come around to disagreeing with this point. A really smart, really powerful AI that is trying its best to help humanity and to satisfy humanity's extrapolated values as best it can seems likely to... approach the problem intelligently. Like, recognize the need for epistemic humility and for continued human progress....

If there's a small class of people with immense power over billions of have-nothings who can do nothing back, sure, some of the superpowerful will be more than zero altruistic. But others won't be, and overall I expect callousness and abuse of power to far outweigh altruism. Most people are pretty corruptible by power, especially when it's power over a distinct outgroup, and pretty indifferent to abuses of power happening to the outgroup; all of history shows that. Bigger differences in power will make it worse, if anything.

I think I see somewhat where you are coming from, but can you spell it out for me a bit more? Maybe by describing a somewhat fleshed-out concrete example scenario, while acknowledging that it is just one hastily put-together possibility among many.

Let me start by proposing one such possibility, but feel free to go in another direction entirely. Let's suppose the altruistic few put together sanctuaries or "wild human life reserves". How might this play out after that? Will the selfish ones somehow try to intrude on or curtail this practice? By our scenario's granted premises, the altruistic ones do wield real power, and they do use some fraction of it to maintain this sanctum. Even if the others are many, would they have a lot to gain by trying to mess with this? Is it just entertainment, or sport, for them? What do they stand to gain? Not really anything economic, or more power, or do you think they do?

Why do you think all poor people will end up in these "wildlife preserves", and not somewhere else under the power of someone less altruistic? A future of large power differences is... a future of large power differences.

I do buy this, but note that this requires fairly drastic actions that essentially amount to a pivotal act using an AI to coup society and government, because they have a limited time window in which to act before economic incentives mean that most of the others kill/enslave almost everyone else.

Contra cousin_it, I basically don't buy the story that power corrupts/changes your values; instead, it corrupts your world model, because there's a very large incentive for your underlings to misreport things in ways that flatter you. But conditional on technical alignment being solved, this doesn't matter anymore, so I think power grabs might not result in as bad an outcome as we feared.

But this does require pretty massive changes to ensure the altruists stay in power, and they are not prepared to think about what this will mean.

All of 1-4 seem plausible to me, and I don't centrally expect that power concentration will lead to everyone dying.

Even if all of 1-4 hold, I think the future will probably be a lot less good than it could have been:

- 4 is more likely to mean that Earth becomes a nature reserve for humans or something, than that the stars are equitably allocated

- I'm worried that there are bad selection effects such that 3 already screens out some kinds of altruists (e.g. ones who aren't willing to strategy-steal). Some good stuff might still happen to existing humans, but the future will miss out on some values completely

- I'm worried about power corrupting/there being no checks and balances/there being no incentives to keep doing good stuff for others

I think this might happen early on. But if it keeps going, and the gap keeps widening, and then maybe the AI controllers get some kind of body or mental enhancement, then the material incentives obviously point in the direction of "ditch those other nobodies", and then ideology arises to justify why ditching those other nobodies is just and right.

Consider this: when the Europeans started colonising the New World, it turned out that it would be extremely convenient to have free manual labour to bootstrap agriculture quickly. Around this time, coincidentally, the same white Christian Europeans who had been relatively anti-slavery (at least against enslaving other Christians) since Roman times, and fairly uninterested in the goings on of Africa, found within themselves a deep urge to go get random Africans, put them in chains, and forcefully convert them while keeping them enslaved as a way to give meaning to their otherwise pagan and inferior existences. Well, first they started with the natives, and then when they ran out of those, they looked at Africa instead. Similar impulses to altruistically civilize all the poor barbarians across the world arose just as soon as there were shipping fleets, arms, and manpower sufficient to make resource-extracting colonies quite profitable enterprises.

That would be an extremely weird coincidence if it weren't just a case of people rationalizing why they should do things that were obviously evil but also obviously convenient.

Thanks for your response, can I ask the same question of you as I do here in this cousin comment?

I think the specific shape is less important than the obvious general trend, regardless of what form it takes - forms tend to be incidental to circumstances, but incentives and responses to them are much more reliable.

That said, I would say the most "peaceful" version of it looks like people getting some kind of UBI allowance and stuff to buy with it, but the stuff keeps shrinking (as it gets redirected to "worthier" enterprises) and getting more expensive. As conditions worsen, and people are generally depressed and have no belief in the future, they simply have fewer children. Possibly forms of wireheading get promoted; this does not even need to be some kind of nefarious plan, since the altruists among the AI Lords may genuinely believe it's an operation of relief for those among the masses who feel purposeless, and the best they can do at any given time. This of course results in massive drops in birth rates and in general engagement with the real world. The less people engage with the world, the cheaper their maintenance is, and the more pressure builds up on those who refuse the wireheading: less demand means less supply, and then the squeeze hurts those who still want to hang on to the now-obsolete lifestyle.

Add the obvious possibility of violent, impotent lashing out from the masses, followed by entitled outrage at the ingratitude of it all, which justifies bloody repression. The ones left outside the circle get chipped away at until they are whittled down to almost nothing, and the ones inside keep passively reaping the benefits, since everything they do is always nominally within their rights: either disposing of their own property or defending against violent aggression. Note how most of this is really just an extrapolation, on turbo-steroids, of trends we can all already see having emerged in industrialised societies.

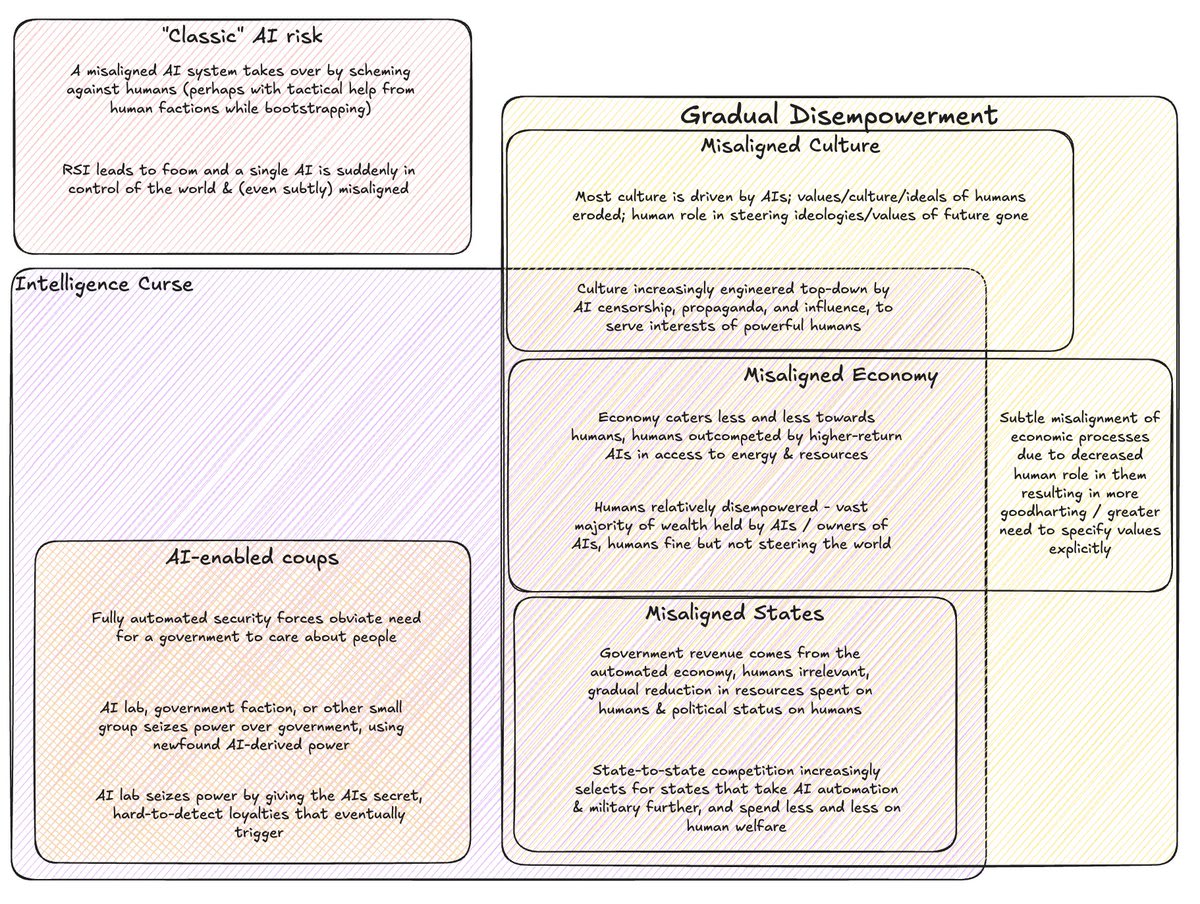

Noting that your 2x2 is not exactly how I see it. For example, power grabs becoming more likely due to the intelligence curse, and humans being more able to attempt them, is a consequence of the gradual incentives; and (as you mention above) parts of gradual disempowerment are explicitly about states becoming less responsive to humans due to incentives (which, in addition to empowering some AIs, will likely empower humans who control states).

My own diagram to try to make sense of these is here (though note that this is rough OTOH work, not intended as , and not checked with authors of the other pieces):

Thanks, I like this!

Haven't fully wrapped my head around it yet, but will think more.

One quick minor reaction is that I don't think you need IC stuff for coups. To give a not very plausible but clear example: a company has a giant intelligence explosion and then can make its own nanobots to take over the world. That doesn't require broad automation, a change in governments' incentives to serve their people, etc.

> One quick minor reaction is that I don't think you need IC stuff for coups. To give a not very plausible but clear example: a company has a giant intelligence explosion and then can make its own nanobots to take over the world. Doesn't require broad automation, incentives for governments to serve their people to change, etc

I'd argue that the incentives for governments to serve their people do in fact change given the nanobots, and that's a significant part of why the radical AGI+nanotech leads to bad outcomes in this scenario.

Imagine two technologies:

- Auto-nanobots: autonomous nanobot cloud controlled by an AGI, does not benefit from human intervention

- Helper-nanobots: a nanobot cloud whose effectiveness scales with human management hours spent steering it

Imagine Anthropenmind builds one or the other type of nanobot and then decides whether to take over the world and subjugate everyone else under their iron fist. In the former case, their incentive is to take over the world, paperclip their employees and then everyone else, etc. In the latter case, the more human management they get, the more powerful they are, so their incentive is to get a lot of humans involved and share proceeds with them, and the humans have leverage. Even if in both cases the tech is enormously powerful and could be used tremendously destructively, the thing that results in the bad outcome is the incentives flipping from cooperating with the rest of humanity to defecting against it, which in turn comes about because the returns that humans provide to those in power go down.

(Now of course: even with helper-nanobots, why doesn't Anthropenmind use its hard power to do a small but decapitating coup against the government, and then force everyone to work as nanobot managers? Empirically, having a more liberal society seems better than the alternative; theoretically, unforced labor is more motivated, cooperation means you don't need to monitor for defection, principal-agent problems bite hard, not needing top-down control means you can organize along more bottom-up structures that better use local information, etc.)

Maybe helpful to distinguish between:

- "Narrow" intelligence curse: the specific story where selection & incentive pressures by the powerful given labor-replacing AI disempowers a lot of people over time. (And of course, emphasizing this scenario is the most distinct part of The Intelligence Curse as a piece compared to AI-Enabled Coups)

- "Broad" intelligence curse: severing the link between power and people is bad, for reasons including the systemic incentives story, but also because it incentivizes coups and generally disempowers people.

Now, is the latter the most helpful place to draw the boundary between the category definitions? Maybe not - it's very general. But the power/people link severance is a lot of my concern and therefore I find it helpful to refer to it with one term. (And note that even the broader definition of IC still excludes some of GD as the diagram makes clear, so it does narrow it down)

Curious for your thoughts on this!

Yup sorry, the Tom above is actually Rose!

I like your distinction between narrow and broad IC dynamics. I was basically thinking just about narrow, but now agree that there is also potentially some broader thing.

How likely do you think it is that helper-nanobots outcompete auto-nanobots? Two possible things that could be going on are:

- I'm unhelpfully abstracting away what kind of AI systems we end up with, but actually this significantly impacts how likely power concentration is and I should think more about it

- Theoretically the distinction between helper and auto-nanobots is significant, but in practice it's very unlikely that helper-nanobots will be competitive, so it's fine to ignore the possibility and treat auto-nanobots as 'AI'

(Fyi the previous comment from "Tom" was not actually from me. I think it was Rose. But this one is from me!)

Worth noting that the "classic" AI risk also relies on human labour not being needed anymore. For AI to seize power, it must be able to do so without human help (hence human labour not needed), and for it to kill everyone, human labour must not be needed to make new chips/robots.

I guess I'm a bit confused why the emergent dynamics and the power-seeking are on different ends of the spectrum?

Like, what do you even mean by emergent dynamics there? Are we talking about non-power-seeking systems, and in that case, which systems are non-power-seeking?

I would claim that there is no system that is not power-seeking, since any system that survives needs to do Bayesian inference and therefore needs to minimize free energy. (Self-referencing here, but whatever.) Hence any surviving system needs to power-seek, given that power-seeking is attaining more causal control over the future.

So there is no future without a power-seeking system; it's just that the thing that power-seeks acts over longer timespans and is more of a slow actor. The agentic attractor space is neither human-flesh-bag space nor traditional space; it is different, yet still a power seeker.

Still, I do like what you say about the change in the dynamics and how power-seeking is maybe more about a shorter temporal scale? It feels like the y-axis should be that temporal axis instead, since that seems to be more what you're actually pointing at?

Thanks, agree that 'emergent dynamics' is woolly above.

I guess I don't think the y-axis should be the temporal dimension. To give some cartoon examples:

- I'd put an extremely Machiavellian 10-year plan on the part of a cabal of politicians to backslide into a dictatorship and then seize power over the rest of the world near the top end of the axis

- I'd put an unfavourable order of capabilities, where in an unplanned way superpersuasion comes online before defenses, and actors fail to coordinate not to deploy it because of competitive dynamics, near the bottom end of the axis, even if the whole thing unfolds over a few months

I do think the y-axis is pretty correlated with temporal scales, but I don't think it's the same. I also don't think physical violence is the same, though it's probably also correlated (cf. the backsliding example, which is very power-seeking but not very violent).

The thing I had in mind was more like, should I imagine some actor consciously trying to bring power concentration about? To the extent that's a good model, it's power-seeking. Or should I imagine that no actor is consciously planning this, but the net result of the system is still extreme power concentration? If that's a good model, it's emergent dynamics.

Idk, I see that this is messy and probably there's some other better concept here

Thank you for clarifying, I think I understand now!

I notice I was not that clear when writing my comment yesterday so I want to apologise for that.

I'll attempt to restate what you said in other terms. There's a concept of temporal depth in action plans. The question is, to some extent, how many steps into the future you are looking. A simple way of imagining this is how far ahead a chess bot can plan, e.g. how Stockfish is able to plan basically 20-40 moves in advance.

It seems similar to what you're talking about here, in that the further into the future someone plans, the more external attempts to interfere with their actions they can avoid.

Some other words to describe the general vibe might be planned vs unplanned or maybe centralized versus decentralized? Maybe controlled versus uncontrolled? I get the vibe better now though so thanks!

I think it's the combination of a temporal axis and a (for lack of a better term) physical violence axis.

Various people are worried about AI causing extreme power concentration of some form, for example via:

I have been talking to some of these people and trying to sense-make about ‘power concentration’.

These are some notes on that, mostly prompted by some comment exchanges with Nora Ammann (in the below I’m riffing on her ideas but not representing her views). Sharing because I found some of the below helpful for thinking with, and maybe others will too.

(I haven’t tried to give lots of context, so it probably makes most sense to people who’ve already thought about this. More the flavour of in progress research notes than ‘here’s a crisp insight everyone should have’.)

AI risk as power concentration

Sometimes when people talk about power concentration it sounds to me like they are talking about most of AI risk, including AI takeover and human powergrabs.

To try to illustrate this, here’s a 2x2, where the x axis is whether AIs or humans end up with concentrated power, and the y axis is whether power gets concentrated emergently or through power-seeking:

Some things I like about this 2x2:

Overall, this isn’t my preferred way of thinking about AI risk or power concentration, for two reasons:

But since drawing this 2x2, I better understand why some people find this a natural frame.

Power concentration as the undermining of checks and balances

Nora’s take is that the most important general factor driving the risk of power concentration is the undermining of legacy checks and balances.

Here’s my attempt to flesh out what this means (Nora would probably say something different):

Some things I like about this frame: