SAEBench: A Comprehensive Benchmark for Sparse Autoencoders

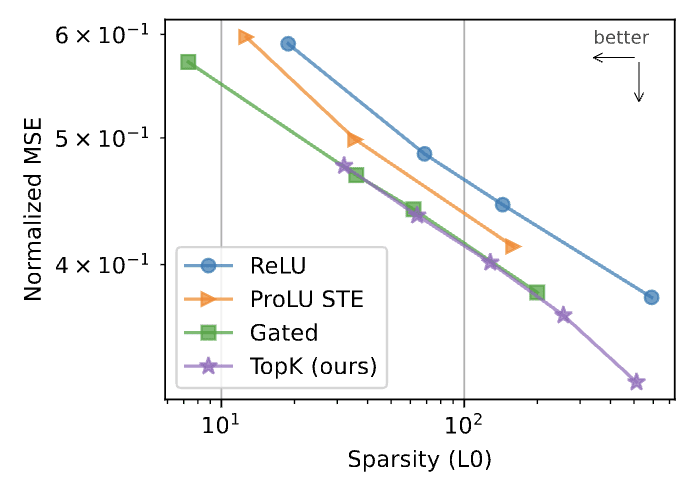

Adam Karvonen*, Can Rager*, Johnny Lin*, Curt Tigges*, Joseph Bloom*, David Chanin, Yeu-Tong Lau, Eoin Farrell, Arthur Conmy, Callum McDougall, Kola Ayonrinde, Matthew Wearden, Samuel Marks, Neel Nanda

*equal contribution

TL;DR

* We are releasing SAEBench, a suite of 8 diverse sparse autoencoder (SAE) evaluations including unsupervised metrics and downstream tasks. Use our codebase to evaluate your own SAEs!
* You can compare 200+ SAEs of varying sparsity, dictionary size, architecture, and training time on Neuronpedia.
* Think we're missing an eval? We'd love for you to contribute it to our codebase! Email us.

🔍 Explore the Benchmark & Rankings · 📊 Evaluate your SAEs with SAEBench · ✉️ Contact Us

Introduction

Sparse Autoencoders (SAEs) have become one of the most popular tools for AI interpretability. A lot of recent interpretability work has focused on studying SAEs, and in particular on improving them, e.g. the Gated SAE, TopK SAE, BatchTopK SAE, ProLU SAE, JumpReLU SAE, Layer Group SAE, Feature Choice SAE, Feature Aligned SAE, and Switch SAE. But how well do any of these improvements actually work?

The core challenge is that we don't know how to measure how good an SAE is. The fundamental premise of SAEs is that they are a useful interpretability tool that unpacks concepts from model activations. The lack of ground truth labels for model-internal features led the field to measure and optimize the proxy of sparsity instead. This objective successfully produced interpretable SAE latents. But sparsity has known problems as a proxy, such as feature absorption and composition of independent features. Yet most SAE improvement work merely measures whether reconstruction is improved at a given sparsity, potentially missing problems like uninterpretable high-frequency latents or increased composition.

In the absence of a single, ideal metric, we argue that the best way to measure SAE quality is to give a more detailed picture with a range of diverse metrics. In particul

Very true! Each iteration of matching pursuit uses as much compute as the entire `encode()` of a standard SAE, so it's not only a parallelism problem (although it doesn't help either). I'll update the wording in the post.
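
The cost comparison in this reply can be made concrete with a minimal NumPy sketch. It assumes a textbook greedy matching pursuit over unit-norm dictionary rows and a plain ReLU encoder; the function names (`sae_encode`, `matching_pursuit_encode`) and the weight layout are hypothetical and are not taken from the SAEBench codebase or any particular SAE library. The point it illustrates is that every matching pursuit iteration needs its own full dictionary product, and each iteration depends on the residual left by the previous one.

```python
import numpy as np


def sae_encode(x, W_enc, b_enc):
    """Standard ReLU SAE encoder (hypothetical layout): ONE dictionary product.

    x: (d_model,), W_enc: (n_latents, d_model), b_enc: (n_latents,)
    """
    return np.maximum(W_enc @ x + b_enc, 0.0)


def matching_pursuit_encode(x, D, n_iters):
    """Textbook greedy matching pursuit over unit-norm rows of D (n_latents, d_model).

    Returns the sparse codes and the number of full dictionary products used.
    """
    residual = np.array(x, dtype=float)  # working copy of the input
    codes = np.zeros(D.shape[0])
    n_products = 0
    for _ in range(n_iters):
        # Scoring every atom against the residual costs one full
        # (n_latents x d_model) product, the same as one sae_encode call.
        scores = D @ residual
        n_products += 1
        j = int(np.argmax(np.abs(scores)))  # best-matching atom
        codes[j] += scores[j]
        # The next iteration needs this updated residual, so the loop
        # is inherently sequential.
        residual -= scores[j] * D[j]
    return codes, n_products
```

Under these assumptions, selecting k atoms costs roughly k times the dictionary product of a single standard encoder pass, on top of the sequential dependency between iterations.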